How to build, test, and integrate an async HTTP/2 app (written in Java with Vert.x) using Shell scripts, Boto3 Python, Ansible, Packer, IAM, KMS, CloudFormation, EC2 Container Service (ECS) with lifecycle hooks for Auto Scaling, Lambda, CloudWatch

Overview

- Microtrader microservices

- Integration Configuration

- Code in GitHub

- Exercise files = PDFs

- 04 - Creating Docker Release Images

- 05 - Setting up AWS Access

- 06 - Running Docker Apps in EC2 from ECR in ECS

- 07 - Customizing ECS Container Instances

- 08 - Deploying AWS Infrastructure Using Ansible and CloudFormation

- 09 - Architecting and Preparing Applications for ECS

- 10 - Defining ECS Applications Using Ansible and CloudFormation

- 11 - Deploying ECS Applications Using Ansible and CloudFormation

- 12 - Creating CloudFormation Custom Resources Using AWS Lambda

- 13 - Managing Secrets in AWS

- 14 - Managing ECS Infrastructure Lifecycle

- 15 - Auto Scaling ECS Applications

- 16 - Continuous Delivery Using CodePipeline

- Boto

- Signing API Gateway requests

- Resources

This article describes the automation used to install and run a “non-trivial” sample system. The system is used to analyze cloud-based build and auto-scaling tools, plus Azul Java compiler diagnostics and tracing tools such as Amazon X-Ray, that measure the extent of elasticity and resiliency promised by the design pattern defined in the Reactive Manifesto for more tolerance to failure.

NOTE: Content here reflects my personal opinions and is not intended to represent any employer (past or present). “PROTIP:” here highlights information I haven’t seen elsewhere on the internet because it consists of hard-won, little-known but significant facts based on my personal research and experience.

The sample app was created by Justin Menga (mixja on GitHub) for use in his video course “Docker in Production Using Amazon Web Services” released by Pluralsight on 1 Dec 2017. This article assumes that you have a paid subscription to Pluralsight.com (less than $300 USD per year). His course is rated as 10 hours, but it took me more like 40+ hours of repeated viewing, study, and scripting because Menga’s tour de force covers most of the intricacies one needs to know to be effective in a real job as an AWS Cloud Engineer.

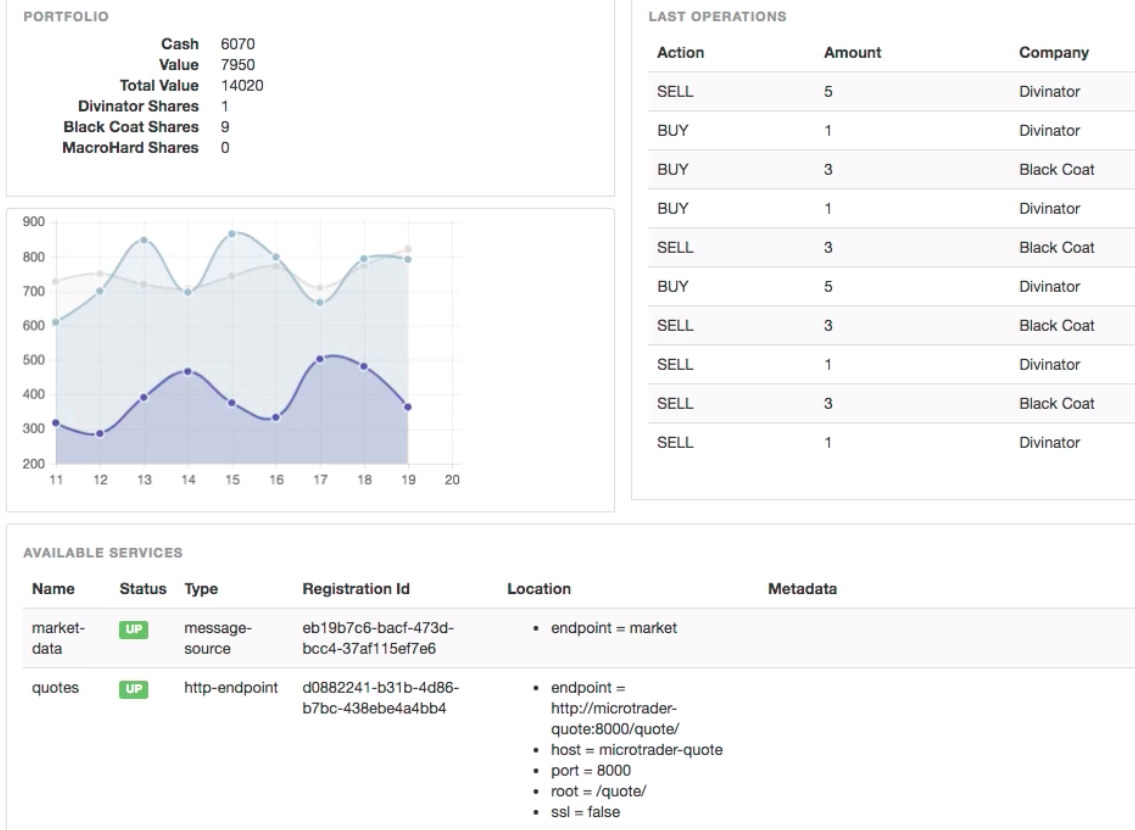

The Microtrader app simulates a stock-trading application (like those at TD Ameritrade, commission-free Robinhood, Schwab, E*TRADE, etc.).

This UI is generated by the Trader Dashboard microservice component.

Microtrader microservices

The Microtrader app consists of four app processes shown in purple boxes (after install):

- Quote Generator at http://localhost:32770/quote/

- periodically generates stock market quotes for fictitious companies (named “Black Coat”, “Divinator”, “MacroHard”)

- single instance that continues running until the process is stopped

- Portfolio Service

- trades stocks starting from an initial portfolio of $10,000 cash on hand. The trading logic is completely random and nonsensical.

- single instance

- Trader Dashboard at http://localhost:32771

- provides a web dashboard UI displaying stock market quote activity, recent stock trades and the current state of the portfolio. The dashboard’s “available services” provides an operational view of the status and service discovery information for each service.

- multiple instances for High Availability

- uses HTTP/2 web sockets for efficient async communication with client browsers

- Audit Service at http://localhost:32768/audit/

- audits all stock trading activity, persisting each stock trade to an internal MySQL database

- single instance

Each “microtrader” app process is built as a “Fat JAR”: a single deployable and runnable artifact that runs within a Docker container.

Vert.x event bus

Dotted lines between microservices in the diagram above represent modern asynchronous message-driven communications through an event bus provided by Vert.x. Callbacks within each app component use Eclipse Vert.x (vertx.io), which is open-sourced at github.com/vert-x3 [wikipedia]. Vert.x was programmed in Java by Tim Fox in 2011 while he was employed by VMware. In January 2013, VMware moved the project and associated IP to the Eclipse Foundation, a neutral legal entity.

Repeated functionality in Vert.x is encapsulated in a “Verticle”, hence the framework’s name. Vert.x assumes a single-threaded, scalable, non-blocking app design, which Justin has modified for Microtrader. Real-time messages are received using the SockJS library described in “Vert.x Microservices Workshop” by Clement Escoffier, who also authored the free 83-page PDF book “Building Reactive Microservices in Java” (May 2017).
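To make the event bus idea concrete, here is a minimal sketch in Python of in-process publish/subscribe messaging. It is only an analogy: it is not Vert.x and not code from the Microtrader repositories, and the class name, the “market” address, and the message fields are all made up for illustration.

```python
import asyncio
from collections import defaultdict


class EventBus:
    """Tiny in-process publish/subscribe bus (illustrative, not Vert.x)."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def consumer(self, address, handler):
        # Register an async handler for messages sent to an address.
        self._handlers[address].append(handler)

    async def publish(self, address, message):
        # Deliver the message to every handler registered on the address.
        await asyncio.gather(*(h(message) for h in self._handlers[address]))


async def demo():
    bus = EventBus()
    seen = []

    async def dashboard(quote):
        seen.append(("dashboard", quote["name"], quote["bid"]))

    async def audit(quote):
        seen.append(("audit", quote["name"], quote["bid"]))

    # Two independent consumers listen on the same (hypothetical) address.
    bus.consumer("market", dashboard)
    bus.consumer("market", audit)
    await bus.publish("market", {"name": "MacroHard", "bid": 101.5})
    return seen
```

The publisher knows only the address, not the consumers, which is what lets services such as the dashboard and the audit service be added or removed independently.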

Eclipse Vert.x is a polyglot event-driven application framework that runs on the Java Virtual Machine. “Polyglot” refers to Vert.x exposing its idiomatic API in Java, JavaScript, Groovy, Ruby, Python, Scala, Kotlin, Clojure, and Ceylon.

See the book “Vert.x in Action” by Julien Ponge, from Manning.

WARNING: In InfoQ’s JVM Trends for 2019, the editorial team predicts “vert.x will not progress past the EA (Early Adopter) phase, due to it’s relatively niche appeal”.

An alternative is Micronaut, a JVM framework for building fast, lightweight, scalable microservices applications in Java, Groovy or Kotlin. It is inspired by Spring and Grails.

Integration Configuration

There are dozens of little settings one has to get right to get it all working in production. So here are notes on how to do it by automating commands suggested by several resources.

Dockerizing apps and running them as containers is standard DevOps practice. But there are many options for running Docker workloads. This article currently covers use of the AWS cloud with its IAM and ECR image repo services, using ECS rather than Amazon’s multi-zone Elastic Kubernetes Service (EKS) or Fargate (serverless compute for containers).

Custom Shell Scripts

PROTIP: To simplify, I’ve created several shell scripts (in GitHub) that get it all done, so you don’t have to type manually after stopping and rewinding videos to specific spots.

A. microtrader-setup.sh installs on macOS the 4 microtrader processes after building them from source and testing them using Mocha.

B. ecr-setup.sh creates Docker images in a private AWS Elastic Container Registry (ECR) repository and configures Dockerfiles for use in ECS (Elastic Container Service).

PROTIP: Since there is a flood of responses, there is a provision in the script to output to a logfile.

Parts list (ingredients for integration)

“Production” in the blog (and course) title means that we need to cover integration of a large set of components from vendors who don’t necessarily talk with each other. The products needed are listed alphabetically here, with links to my blog post about each or to the vendor’s marketing page, plus the version I am using (and the one used in the video course):

- AWS or Azure cloud services account, created from an email address with a credit card

- Ansible from Red Hat (IBM) 2.4.0

- Authenticator app (from Google) on iPhone/Android

- Bash (shell) scripts

- Boto3 (Python library for AWS) 1.4.7 and netaddr 0.7.19 (pip install boto3 netaddr)

- Bower (npm install -g bower)

- Brew package manager for Mac 2.14.2

- brew install jq 1.5.2 to enable shells to handle JSON

- Chai Mocha test (within microtrader-specs repo)

- CodeBuild

- CodePipeline

- CloudFormation (CFN) templates (vs Terraform)

- CloudWatch and Logs Agents

- Config from Lightbend configuration library for JVM languages using HOCON files

- Docker for Mac 17.09.0-ce

- easy_install with sudo -H easy_install pip

- EC2 (Elastic Compute Cloud)

- Elastic Container Service (ECS) and Elastic Container Registry (ECR) for Docker

- Flyway for lightweight version control of database schema migrations

- Git client

- GitHub account

- Gradle (multi-project Java build tool replacing ant and maven)

- IAM on AWS

- Java (SDK)

- KMS (Key Management Service) in AWS

- Lambda from AWS

- MacOS keyboarding - Sierra version

- MFA (Multi-factor Authentication) in AWS

- NodeJS 4.x or higher (to install the npm package manager)

- Python programming language 2.7.14

- Packer from HashiCorp

- Text editor Sublime Text 3 with (darker) Material Theme.

- iTerm2 Terminal app for Mac

- brew install tree - 1.7.0

- STS (on AWS)

- Vertx for microservices (https://escoffier.me/vertx-hol) event bus

There are several integration points that various courses cover, solved via Stack Overflow, etc.

hosts file fix

Menga’s course from 2017 identified this workaround, which is no longer needed:

To improve the performance of docker-compose commands on macOS, work around an issue (fixed Feb 26, 2018) by adding “ localunixsocket” after “127.0.0.1 localhost”:

sudo -H nano /etc/hosts

So it looks like this:

127.0.0.1 localhost localunixsocket

microtrader-setup.sh (app build from code & test locally)

- PROTIP: I’ve created a shell script (in GitHub) to install the 4 microtrader processes. View it within an internet browser at:

https://github.com/wilsonmar/DevSecOps/blob/master/microtrader/microtrader-setup.sh

- The default is “yes” to install the prerequisites needed. If you’re running it multiple times, save time by setting it to “no”.

- The default is “yes” to delete files before and after the run.

- To use the script in Terminal on your own macOS laptop, triple-click this command to highlight the whole line:

sh -c "$(curl -fsSL https://raw.githubusercontent.com/wilsonmar/DevSecOps/master/microtrader/microtrader-setup.sh)"

… then copy it for pasting in your Terminal CLI to run it automatically.

- Once on your machine, edit the files.

Documentation is in the script. But here are highlights:

- Create a folder “microtrader” under your “projects” folder. For idempotency, and to ensure that changes in GitHub are reflected, the folder is deleted if it is already there. That’s also why we don’t fork the repositories into our own account.

- Check out the “final” branch because that is what the files should look like after course exercises are completed successfully. In the above repositories, the “master” branch is the starting point for exercises.

git checkout final

- Build “fat” jars for each microservice, using the Gradle shadowJar plugin:

./gradlew clean test shadowJar

- To stop the run, click the red “X” for the Terminal session.

- Verify that processes were terminated:

ps -al

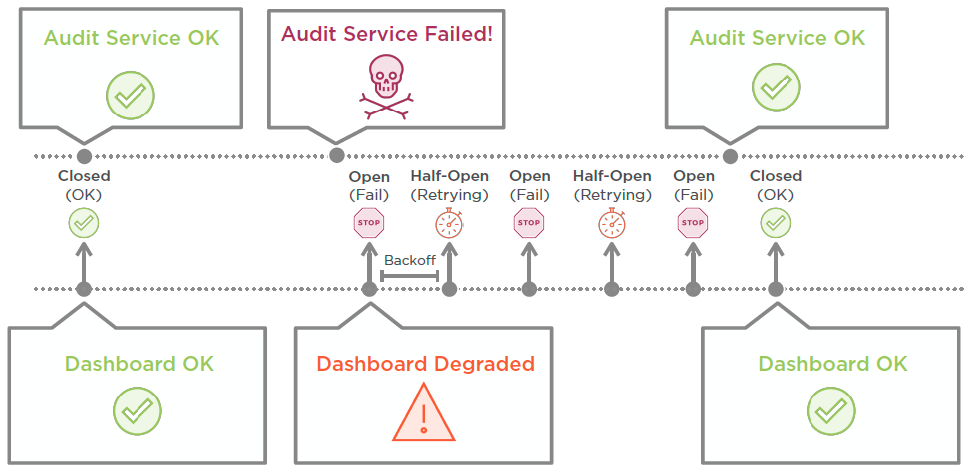

That is because the circuit breaker implemented by the Audit Service opens on failure.
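For readers new to the pattern, the following is a minimal Python sketch of a circuit breaker. It is not the Audit Service’s actual implementation (which is Java on Vert.x); the class name, thresholds, and fallback mechanism are assumptions chosen only to show the closed, open, and half-open states.

```python
import time


class CircuitBreaker:
    """Minimal circuit breaker: opens after repeated failures, retries after a timeout."""

    def __init__(self, max_failures=3, reset_timeout=10.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    @property
    def state(self):
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # one trial call is allowed through
        return "open"

    def call(self, operation, fallback):
        if self.state == "open":
            return fallback()  # fail fast without touching the dependency
        try:
            result = operation()
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self.state == "half-open" or self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, callers get the fallback immediately instead of waiting on a dependency (such as a database) that is known to be failing.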

Code in GitHub

Since its release back on 1 Dec 2017, some changes have occurred in AWS technologies and workflows. However, Justin has continued work on the 17 repositories under the docker-production-aws GitHub account, which I’ve rearranged below alphabetically:

DOTHIS: In GitHub, watch each of these repositories:

- aws-starter - Starter Template for AWS CloudFormation Playbooks

- aws-sts - Ansible role for assuming roles using the AWS STS service

- aws-cloudformation - Ansible Role for deploying AWS CloudFormation Infrastructure

- cloudformation-resources - Ansible playbook and CloudFormation template for deploying supporting CloudFormation resources

- docker-squid - Docker Image for running Squid Proxy

- ecr-resources - Ansible playbook and CloudFormation template for deploying EC2 Container Registry (ECR) repositories

- lambda-ecs-capacity - AWS Lambda Function for calculating ECS cluster capacity

- lambda-ecs-tasks - AWS Lambda Function for Running ECS Tasks as CloudFormation Custom Resources

- lambda-secrets-provisioner - AWS Lambda Function for provisioning secrets into the EC2 Systems Manager Parameter Store

- lambda-lifecycle-hooks - AWS Lambda Function for draining ECS container instances in response to EC2 auto scaling lifecycle hooks

- microtrader - A fictitious stock trading microtrader application

- microtrader-base - Base Docker images for the microtrader applications

- microtrader-deploy - Ansible playbook and CloudFormation template for deploying the Microtrader application into AWS

- microtrader-pipeline - Ansible playbook and CloudFormation template for deploying a continuous delivery pipeline using CodePipeline, CodeBuild and CloudFormation

- network-resources - Ansible playbook and CloudFormation template for deploying AWS VPC and other network resources

- packer-ecs - Packer build script for creating custom AWS ECS Container Instance images

- proxy-resources - Ansible playbook and CloudFormation template for deploying an HTTP proxy stack based upon Squid

Exercise files = PDFs

Clicking “Exercise files” on Pluralsight.com downloads a folder named “docker-production-using-amazon-web-services”. Its sub-folders, named with just numbers, contain PDF files. So I’ve renamed them with chapter names and put them all in one folder:

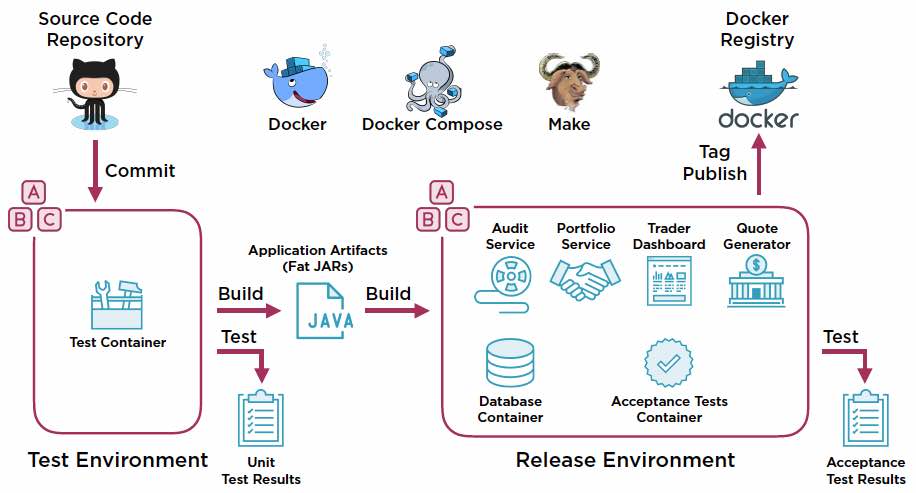

04 - Creating Docker Release Images

The release pipeline automates the workflow from a commit to a Test Environment, where it performs unit testing and builds, through to a Release Environment.

The stages:

- make test

- make release

- Tag and Publish

- make clean
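The stages above can be sketched as a small Python driver that runs them in order, stops at the first failure, and always cleans up. This is only an illustration and not part of the course Makefile; splitting “Tag and Publish” into make tag and make publish is an assumption based on the target names used later in this article.

```python
import subprocess

# Stage order follows the list above. Splitting "Tag and Publish" into two
# make targets is an assumption for this sketch.
STAGES = [
    ["make", "test"],
    ["make", "release"],
    ["make", "tag"],
    ["make", "publish"],
]
CLEANUP = ["make", "clean"]


def run_pipeline(run=subprocess.call, stages=STAGES, cleanup=CLEANUP):
    """Run stages in order, stop at the first failure, and always clean up."""
    completed = []
    try:
        for command in stages:
            if run(command) != 0:
                return False, completed
            completed.append(" ".join(command))
        return True, completed
    finally:
        # The cleanup stage runs whether or not an earlier stage failed.
        run(cleanup)
```

Passing the runner in as a parameter keeps the ordering logic testable without invoking make.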

05 - Setting up AWS Access

Getting EC2 Key Pairs and keeping them on your laptop is both risky and a hassle.

It’s more secure and flexible to define (in IAM) groups associated with policies by role. When a user is assigned to a group, he/she gets all the permissions for roles in the group.

- Create admin account alias instead of root billing account.

- Create IAM Roles

- Create IAM Groups

- Create IAM Policies that enforce MFA

- Create users

- Enroll users for MFA
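One common way to enforce MFA is in the trust policy of the role that users assume. The sketch below builds such a policy document in Python so it can be printed as JSON. It is an assumption about the general approach, not a copy of the course’s policies; the function name is made up, while the aws:MultiFactorAuthPresent condition key is a standard IAM key.

```python
import json


def mfa_trust_policy(account_id):
    """Trust policy letting principals in the account assume a role only with MFA."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": "sts:AssumeRole",
                # Requests made without an MFA-authenticated session are not allowed.
                "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
            }
        ],
    }


def to_json(policy):
    """Serialize the policy for pasting into the console or a template."""
    return json.dumps(policy, indent=2)
```

Group policies still decide who may call sts:AssumeRole on the role; the trust policy adds the MFA requirement on top.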

06 - Running Docker Apps in EC2 from ECR in ECS

ECS is like docker-compose. It supports rolling deployments to maintain the Desired Count based on status from the Load Balancer.

PROTIP: TODO: I’m in the process of creating a script that automates the manual steps shown in Justin’s videos:

https://github.com/wilsonmar/DevSecOps/blob/master/microtrader/ecs-setup.sh

Triple-click the following command to highlight the whole line:

sh -c "$(curl -fsSL https://raw.githubusercontent.com/wilsonmar/DevSecOps/master/microtrader/ecr-setup.sh)"

… then copy it for pasting in your Terminal CLI to run it automatically.

The steps to publish and run Docker apps using ECS:

Create a repository in Amazon’s ECR (Elastic Container Registry):

- Build from Docker images in my ECR by substituting FROM values in Dockerfiles, such as changing “FROM dockerproductionaws/microtrader-base” to “FROM 543279062384.dkr.ecr.us-west-2.amazonaws.com/dockerproductionaws/microtrader-base”.

- Edit Makefile to override DOCKER_REGISTRY from “docker.io” to the ECR host name (such as “543279062384.dkr.ecr.us-west-2.amazonaws.com”).

- Add in Makefile AWS_ACCOUNT_ID ?= with your account, such as “543279062384”.

- Add in Makefile:

DOCKER_LOGIN_EXPRESSION := eval $$(aws ecr get-login --registry-ids $(AWS_ACCOUNT_ID))

- Set the profile, then log in and enter the MFA code:

export AWS_PROFILE=docker-production-aws-admin

make login

- Test, release, and tag:

make test

make release

make tag: default

- Run docker images to verify creation.

- Publish using the release pipeline:

make publish

- Commit and push the change:

git commit -a -m "Add support for AWS ECR"

git push origin master

- Create ECS Clusters using ECS-optimized AMIs for your region.

- Make use of the VPC, Subnet, and Security Group created earlier.

- Verify that CloudFormation is used under the hood.

- Get public and private IP addresses in the Cluster dashboard.

- Use the public IP to SSH into the instance:

ssh -i ~/.ssh/microtrader.pem ec2-user@34.214.122.6

- Verify with docker info | more and docker ps.

- Install jq and inspect the ECS agent:

sudo yum install jq -y

docker inspect -f '' ecs-agent | jq

ls -l /var/log/ecs

- Query the local introspection end-point for instance metadata:

curl -s localhost:51678/v1/metadata | jq

- Query the local introspection end-point for tasks:

curl -s localhost:51678/v1/tasks | jq

Create ECS task definitions:

- HTTP_PORT 8000
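The FROM substitution described in this section can be automated. Below is a minimal Python sketch, not the ecr-setup.sh script itself: the function names are hypothetical, and it assumes that only images under the “dockerproductionaws/” prefix should be pointed at your registry.

```python
import re

FROM_LINE = re.compile(r"^(FROM\s+)(\S+)(.*)$", re.IGNORECASE)


def ecr_registry(account_id, region):
    """Host name of a private ECR registry for an account and region."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def retarget_from_lines(dockerfile_text, registry, prefix="dockerproductionaws/"):
    """Prefix FROM images that start with `prefix` with the ECR registry host."""
    out = []
    for line in dockerfile_text.splitlines():
        match = FROM_LINE.match(line)
        # Already-rewritten lines start with the registry host, so they are skipped.
        if match and match.group(2).startswith(prefix):
            line = f"{match.group(1)}{registry}/{match.group(2)}{match.group(3)}"
        out.append(line)
    return "\n".join(out)
```

Because a rewritten image name no longer starts with the prefix, running the function twice leaves the Dockerfile text unchanged, which suits a setup script that may be re-run.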

Create ECS Service:

- Select the Cluster in which to create the Service, and provide a new Service name for 1 Task.

- Verify running

- Run ECS services and task definition to trigger Rolling deployments.
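To verify from the container instance that a service’s tasks are running, the local introspection end-point queried with curl earlier in this section can also be read from Python. This is a hedged sketch: the helper names are made up, and it assumes the /v1/tasks response carries a “Tasks” list whose entries include “Family” and “KnownStatus” fields.

```python
import json
from urllib.request import urlopen

INTROSPECTION_URL = "http://localhost:51678/v1"  # same end-point as the curl commands


def fetch(path, opener=urlopen):
    """GET a path under the introspection end-point and decode the JSON body."""
    with opener(f"{INTROSPECTION_URL}/{path}") as response:
        return json.load(response)


def summarize_tasks(tasks_doc):
    """Return (family, known status) pairs from a /v1/tasks response."""
    return [
        (task.get("Family"), task.get("KnownStatus"))
        for task in tasks_doc.get("Tasks", [])
    ]
```

On the instance, summarize_tasks(fetch("tasks")) would list each task family with its last known status, which is a quick check during a rolling deployment.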

07 - Customizing ECS Container Instances

customizing-ecs-container-instances-slides

To create a custom AMI based on ECS-optimized AMIs:

- Triple-click the following command to highlight the whole line:

sh -c "$(curl -fsSL https://raw.githubusercontent.com/wilsonmar/DevSecOps/master/microtrader/microtrader-setup.sh)"

… then copy it for pasting in your Terminal CLI to run it automatically.

Documentation is in the script. But here are highlights:

- https://github.com/docker-production-aws/packer-ecs/blob/final/Makefile

In ECS-optimized AMIs, the standard Advanced User Data for EC2 start-up is processed by the “cloud-init” framework at instance creation time:

echo ECS_CLUSTER=microtrader > /etc/ecs/ecs.config

A sample CloudFormation cfn-init file:

config:
  commands:
    01_install_awslogs:
      command: "yum install awslogs -y"
      env:
        MY_ENV: "true"
      cwd: "/home/ec2-user"
  files:
    /etc/awslogs.conf:
      content: "ENABLED=true"
  services:
    sysvinit:
      awslogs:
        enabled: "true"
        ensureRunning: "true"

The aws-cfn-bootstrap yum package is installed by the install-os-packages.sh bash script.

Docker Networking modes like Docker NAT and Bridge networking.

From the repo we use the file files/firstrun.sh to do the same, but also to define $PROXY_URL as an HTTP Proxy that denies traffic to malicious sites and allows traffic to trusted sites. In file /etc/sysconfig/docker we specify the Proxy URL the Docker Engine uses when pulling images from the EC2 Container Registry. In file /etc/ecs/ecs.config, we specify NO_PROXY so that traffic to the EC2 Metadata Service (169.254.169.254) and the ECS Agent (169.254.170.2) bypasses the proxy. In file /etc/awslogs/proxy.conf we define the Proxy URL for use by the CloudWatch Logs agent.

Docker is stopped so removing the /var/lib/docker/network file forces Docker to recreate it when it starts.

The link to docker0 is deleted so that Vert.x binds to eth0 network interface rather than docker0.

|| true makes it so the command always returns a zero exit code no matter what.
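As a rough illustration of the proxy settings spread across those three files, the Python sketch below maps each path to the lines a first-run script might append. The exact line formats are assumptions modeled on AWS’s HTTP proxy guidance rather than a copy of firstrun.sh, and it uses 169.254.170.2, the address AWS documents for the ECS agent end-point.

```python
def proxy_config_files(proxy_url):
    """Map each config path named above to the proxy lines to append to it."""
    metadata_ip = "169.254.169.254"  # EC2 Metadata Service
    ecs_agent_ip = "169.254.170.2"   # ECS Agent end-point
    return {
        # Docker Engine: used when pulling images through the proxy.
        "/etc/sysconfig/docker": [
            f"export HTTP_PROXY={proxy_url}",
            f"export HTTPS_PROXY={proxy_url}",
            f"export NO_PROXY={metadata_ip}",
        ],
        # ECS agent: local end-points must bypass the proxy.
        "/etc/ecs/ecs.config": [
            f"HTTP_PROXY={proxy_url}",
            f"NO_PROXY={metadata_ip},{ecs_agent_ip},/var/run/docker.sock",
        ],
        # CloudWatch Logs agent.
        "/etc/awslogs/proxy.conf": [
            f"HTTP_PROXY={proxy_url}",
            f"HTTPS_PROXY={proxy_url}",
            f"NO_PROXY={metadata_ip}",
        ],
    }
```

Keeping the three files in one table makes it harder to update the proxy URL in one place and forget the others.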

- We create a new shell provisioner that runs scripts/cloud-init-options.sh, which modifies the cloud-init configuration file /etc/cloud/cloud.cfg to set the yum package manager settings repo_update to false and repo_upgrade to none. This is needed because the HTTP Proxy blocks the yum update process, which eventually times out after several retries, significantly increasing the startup time of your ECS container instances.

- Enable DOCKER_NETWORK_MODE as “host” (for Docker host networking) and append these options within /etc/sysconfig/docker inside the instance:

--bridge=none --ip-forward=false --ip-masq=false --iptables=false

The above is passed to the Docker engine to disable features.

- Install Packer. On macOS use brew install packer. The output ends with:

Downloading https://homebrew.bintray.com/bottles/packer-1.4.1.mojave.bottle.tar.gz
zsh completions have been installed to: /usr/local/share/zsh/site-functions
==> Summary
🍺 /usr/local/Cellar/packer/1.4.1: 7 files, 139.8MB

A template json file (read by /usr/local/bin/packer) references three types of scripts:

- builders that generate machine images (specifying AWS credentials, instance type, AMI name, etc.)

- provisioners that configure software within images

- post-processors that build artifacts from images

- Run Packer (from HashiCorp):

packer build packer.json
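To show how the three parts of a template fit together, here is a Python sketch that assembles a minimal Packer JSON template. It is not the packer.json from the packer-ecs repository: the instance type, SSH user, manifest output name, and the AMI values passed in are placeholder assumptions, while the script paths are the ones named in this article.

```python
import json


def packer_template(region, source_ami, ami_name):
    """Assemble a minimal Packer JSON template as a Python dict."""
    return {
        # Builders generate the machine image.
        "builders": [
            {
                "type": "amazon-ebs",
                "region": region,
                "source_ami": source_ami,
                "instance_type": "t2.micro",  # assumed size for the build instance
                "ssh_username": "ec2-user",
                "ami_name": ami_name,
            }
        ],
        # Provisioners configure software inside the image.
        "provisioners": [
            {
                "type": "shell",
                "scripts": [
                    "scripts/install-os-packages.sh",
                    "scripts/configure-timezone.sh",
                    "scripts/cloud-init-options.sh",
                    "scripts/cleanup.sh",
                ],
            }
        ],
        # Post-processors record or transform the build artifacts.
        "post-processors": [{"type": "manifest", "output": "manifest.json"}],
    }


def write_template(path, template):
    """Write the template as JSON so `packer build <path>` can read it."""
    with open(path, "w") as handle:
        json.dump(template, handle, indent=2)
```

For example, write_template("packer.json", packer_template("us-west-2", "ami-00000000", "microtrader-ecs")) would produce a file to build from, where the AMI ID and name shown are hypothetical.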

Among provisioners defined in packer.json is a call to the shell file scripts/install-os-packages.sh to install the “awslogs” yum package, which is the CloudWatch Logs agent:

sudo yum -y install $packages

To install the CloudWatch Logs agent, we create a new provisioner that references the install-os-packages script, which simply installs the agent using the yum install command. The agent must be configured at instance creation time, so we add the configuration tasks to the firstrun script created earlier in this module.

In the script, we write to the awscli.conf configuration file to set the CloudWatch Logs region. This expects an environment variable called AWS_DEFAULT_REGION to be configured. We then write the general configuration to the awslogs.conf file, which defines a standard suite of log groups to which we post logs from the local operating system. In total, we define five log groups, one per local log file type, including standard operating system logs as well as Docker logs and ECS agent logs. The log group names follow a standard convention that relies on environment variables to inject the CloudFormation STACK_NAME and AUTOSCALING_GROUP name. For the log_stream_name setting, we reference a special placeholder called instance_id, and the CloudWatch Logs agent automatically sets the stream name to the local EC2 instance ID.

After writing the configuration file, we need to start the CloudWatch Logs agent because it is not started by default after installation. Remember that the firstrun script runs only at EC2 instance creation time, not during the AMI build process, so we must explicitly start the agent inside the firstrun script.
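A sketch of how such an awslogs.conf could be generated is shown below in Python. It is not the firstrun script: the five log file paths, the state_file location, and the exact “stack/group/name” naming format are assumptions for illustration, while the {instance_id} placeholder is the one described above.

```python
import configparser
import io

# Assumed log files: the text says five groups covering OS, Docker, and ECS agent logs.
LOG_FILES = {
    "dmesg": "/var/log/dmesg",
    "messages": "/var/log/messages",
    "docker": "/var/log/docker",
    "ecs-init": "/var/log/ecs/ecs-init.log",
    "ecs-agent": "/var/log/ecs/ecs-agent.log*",
}


def render_awslogs_conf(stack_name, autoscaling_group):
    """Render awslogs.conf text with one section per log file."""
    config = configparser.ConfigParser(interpolation=None)
    config.optionxform = str  # keep option names exactly as written
    config["general"] = {"state_file": "/var/lib/awslogs/agent-state"}
    for name, path in LOG_FILES.items():
        config[path] = {
            "file": path,
            # Assumed naming convention built from the two injected values.
            "log_group_name": f"{stack_name}/{autoscaling_group}/{name}",
            # The agent replaces this placeholder with the EC2 instance ID.
            "log_stream_name": "{instance_id}",
        }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()
```

In a first-run script the two arguments would come from the STACK_NAME and AUTOSCALING_GROUP environment variables, and the agent would be started after the file is written.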

The build needs AWS credentials because Packer runs outside Amazon and does not obtain IAM credentials on its own, so set a profile and assume the admin role:

export AWS_PROFILE=docker-production-aws-admin

aws sts assume-role --role-arn arn:aws:iam::543279062384:role/admin --role-session-name justin.menga
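The assume-role command prints temporary credentials as JSON. One way to hand them to Packer is through environment variables, sketched below in Python. The helper names are hypothetical; the “Credentials” fields and the AWS_* variable names are the standard ones.

```python
import json
import shlex


def credentials_to_env(assume_role_json):
    """Map `aws sts assume-role` JSON output to AWS environment variables."""
    creds = json.loads(assume_role_json)["Credentials"]
    return {
        "AWS_ACCESS_KEY_ID": creds["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": creds["SecretAccessKey"],
        "AWS_SESSION_TOKEN": creds["SessionToken"],
    }


def export_lines(env):
    """Render shell export statements, quoting values where the shell needs it."""
    return [f"export {key}={shlex.quote(value)}" for key, value in sorted(env.items())]
```

The credentials expire (see the “Expiration” field in the same JSON), so the exports need refreshing for long builds.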

- scripts/configure-timezone.sh sets the time zone to Los Angeles and enables NTP (Network Time Protocol).

- CloudWatch Logs Agent for logging and monitoring.

- First run script to set HTTP proxy to secure communications, ECS Agent Config, CloudWatch Logs config, Health Check:

#!/usr/bin/env bash
# Install packages:
yum install awslogs -y
# Configure packages:
echo "ENABLED=true" > /etc/awslogs.conf
# Start services:
service awslogs start

- Custom Config for Timezone, Enable NTP, Custom Docker config.

- Enable Docker Host Networking.

- Script scripts/cleanup.sh stops Docker and removes lingering logs, the docker0 interface, etc.

- Building and Publishing the Image

08 - Deploying AWS Infrastructure Using Ansible and CloudFormation

deploying-aws-infrastructure-using-ansible-and-cloudformation-slides

09 - Architecting and Preparing Applications for ECS

architecting-and-preparing-applications-for-ecs-slides

10 - Defining ECS Applications Using Ansible and CloudFormation

defining-ecs-applications-using-ansible-and-cloudformation-slides

11 - Deploying ECS Applications Using Ansible and CloudFormation

deploying-ecs-applications-using-ansible-and-cloudformation-slides

12 - Creating CloudFormation Custom Resources Using AWS Lambda

creating-cloudformation-custom-resources-using-aws-lambda-slides

13 - Managing Secrets in AWS

managing-secrets-in-aws-slides

14 - Managing ECS Infrastructure Lifecycle

managing-ecs-infrastructure-lifecycle-slides

15 - Auto Scaling ECS Applications

auto-scaling-ecs-applications-slides

16 - Continuous Delivery Using CodePipeline

continuous-delivery-using-codepipeline-slides

Boto

- To avoid the boto installer updating the six package:

sudo -H /usr/bin/python -m pip install boto3 --ignore-installed six

brew unlink python

brew link --overwrite python

Health checks

The Healthcheck will curl the port defined by the HTTP_PORT environment variable (or default port 35000), looking for a zero exit code (healthy) every 3 seconds, with up to 20 retries. In the Dockerfile.quote file:

HEALTHCHECK --interval=3s --retries=20 CMD curl -fs http://localhost:${HTTP_PORT:-35000}/${HTTP_ROOT}
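The polling behavior described here can be approximated outside Docker, for example in a test script that waits for a service to come up. The Python sketch below is only an approximation of Docker’s health check semantics, and the function names and defaults are assumptions that mirror the 3 second interval and 20 retries.

```python
import time
from urllib.request import urlopen


def http_probe(url, timeout=2.0):
    """Return True when the URL answers with a 2xx status (similar to `curl -f`)."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except Exception:
        # Connection errors and HTTP error statuses both count as unhealthy.
        return False


def wait_until_healthy(probe, interval=3.0, retries=20, sleep=time.sleep):
    """Poll `probe` until it succeeds; return the attempt number or None on give-up."""
    for attempt in range(1, retries + 1):
        if probe():
            return attempt
        sleep(interval)
    return None
```

A caller might use wait_until_healthy(lambda: http_probe("http://localhost:35000/")) before starting acceptance tests.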

Signing API Gateway requests

https://github.com/jmenga/requests-aws-sign is a Boto3 Python package that enables AWS V4 request signing using the Python requests library.
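For background on what V4 signing involves, the Python sketch below derives a Signature Version 4 signing key with the standard HMAC-SHA256 chain and signs a prepared string. It is not the code of the package above and omits building the canonical request; the function names are made up, and “execute-api” in the usage note is the service name used for API Gateway.

```python
import hashlib
import hmac


def _hmac_sha256(key, message):
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def signing_key(secret_key, date_stamp, region, service):
    """Derive the Signature Version 4 signing key (date_stamp is YYYYMMDD)."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign(secret_key, date_stamp, region, service, string_to_sign):
    """Return the hex signature for an already-built string to sign."""
    key = signing_key(secret_key, date_stamp, region, service)
    return hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
```

A call such as sign(secret, "20190630", "us-west-2", "execute-api", string_to_sign) yields the value placed in the Authorization header’s Signature field. Because the key is scoped to one date, region, and service, a leaked signature cannot be reused elsewhere.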

Resources

It’s irritating, but many courses, even at the same vendor (such as Pluralsight) cover the same beginner information:

- Stephen Grider’s Udemy course “Docker and Kubernetes: The Complete Guide”

- Managing Docker Containers in AWS, a 1h 8m Pluralsight video course (4 Jun 2019) by Jean Francois Landry, covers the fundamentals of Docker containers in AWS. Hands-on labs teach you how to easily get started by running your first Docker container.

Intermediate:

- Justin Menga (mixja on GitHub) manually typed commands in his video course “Docker in Production Using Amazon Web Services”, released by Pluralsight. I think it would be difficult to follow along here unless you get a subscription at Pluralsight.com (less than $300 USD per year). His videos are rated as 10 hours, but it took me more like 70+ hours of repeated viewing, study, and scripting because Menga’s tour de force covers most of the intricacies one needs to know and be able to actually do in a real job as an AWS Cloud Engineer.

The course doesn’t cover use of Fargate and EKS. That’s covered by:

- Using Docker on AWS, a 1h 24m Pluralsight video course (12 Jun 2019) by David Clinton (@davidbclinton, bootstrap-it.com/docker4aws), covers CLI tools that use the ECR image repo service and manage containers using ECS (including Fargate, Amazon’s managed container launch type) and Kubernetes (EKS).