What can possibly go wrong with autonomous vehicle robots smarter than humans?

Overview

Here are my notes about technical aspects of how cars can drive themselves to provide personal mobility.

TAGS: #autonomousdriving #AI

Why AV (Autonomous Vehicles)

“Cut traffic deaths by half”.

Part of the fascination (and fear) about Artificial Intelligence (AI) is computers becoming better and faster than humans in many arenas.

Driving takes concentration and is tiring. Human drivers can be inexperienced, drunk, too tired, or too distracted. At the current rate of progress on AVs (Autonomous Vehicles), the cost of “human error” (1 accident every 100,000 miles) will eventually be higher than the cost of misjudgements by computers controlling vehicles.

Then, governments, auto makers, insurance companies, and others will make it more difficult to own human-driven cars.

Over time, as AI takes over the road, self-driving cars can travel faster than what people can safely handle (around 70 mph). This would create a market for mini-hotels such as Volvo’s 360c concept car unveiled in 2018. Without constant attention to driving, personal vehicles can be RVs with a desk, bed, toilet, fridge, etc. Commute without stress. Travel between cities without going through security.

Less drag.

The race to AV

Every auto manufacturer has a self-driving car program. Among the 40 companies:

| Company | Automaker | Notes |
|---|---|---|
| Apple | Mercedes | Drive.ai |
| Bosch | Daimler | - |
| Ford/VW/Amazon | Lyft | |
| Audi | Audi | |
| Baidu | Lincoln MKZ | China |
| Comma | (Honda) | |
| Cruise | GM/Honda | |
| Intel/Mobileye | BMW | |
| Lyft | Aptiv | |
| Tesla | Tesla | |
| Uber | - | |
| Waymo/Google | Volvo XC90 | |
| Zoox/Amazon | custom | |

Honda’s 2017 and later models are built with self-driving features (I have a 2018 model). The lineup has progressed from the Honda Sensing suite to Honda Sensing Elite to Honda Sensing 360, which, with Renesas Electronics Corporation, utilizes five millimeter-wave radars.

Ford BlueCruise system

Driver tasks

Lateral control - steering

Longitudinal control - braking, accelerating

It’s more complex than flying an airplane.

OEDR: Object and Event Detection and Response - The ability to detect objects and events that immediately affect the driving task, and to react to them appropriately. Examples: slowing down when seeing a construction zone ahead, swerving and slowing down to avoid a pedestrian, pulling over upon hearing sirens, stopping at a red light.

Planning: Short term, Long term

- https://arxiv.org/abs/2011.13099 An Autonomous Driving Framework for Long-term Decision-making and Short-term Trajectory Planning on Frenet Space. Intelligent driving models (IDM and MOBIL)

- S. M. LaValle. Planning Algorithms. Cambridge University Press, Cambridge, U.K., 2006. Available at http://planning.cs.uiuc.edu/.

- S. Thrun, W. Burgard, and D. Fox, Probabilistic robotics. Cambridge, MA: MIT Press, 2010.

- N. J. Nilsson, “Artificial intelligence: A modern approach,” Artificial Intelligence, vol. 82, no. 1-2, pp. 369–380, 1996.
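
The Intelligent Driver Model (IDM) named in the first reference above is a car-following rule for longitudinal control. Here is a minimal sketch of its acceleration formula. The function name and all default parameter values (desired speed, time headway, comfortable braking, minimum gap) are assumptions chosen for illustration, not values taken from the paper.

```python
import math

def idm_acceleration(v, gap, dv, v0=30.0, T=1.5, a_max=1.0, b=2.0, s0=2.0, delta=4.0):
    """Intelligent Driver Model acceleration in m/s^2.

    v:   ego speed (m/s)
    gap: bumper-to-bumper distance to the lead vehicle (m)
    dv:  approach rate, ego speed minus lead speed (m/s)
    The defaults are illustrative assumptions.
    """
    # Desired dynamic gap: minimum gap + time-headway term + braking term
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    # Free-road term pulls speed toward v0; interaction term keeps the gap
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)

# Closing on a slower car 10 m ahead at 20 m/s gives a negative (braking) command
print(idm_acceleration(20.0, 10.0, 5.0))
```

On an empty road the result approaches `a_max` from standstill and falls to zero at the desired speed `v0`.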

Miscellaneous: Signaling other drivers

Levels of autonomy

ODD (Operational Design Domain) - The set of conditions under which a given system is designed to function. For example, a self-driving car can have one control system designed for driving in urban environments and another for driving on the highway. Other conditions include driving in the rain and changing lanes.

Taxonomy from the Society of Automotive Engineers in 2014 SAE J3016:

0. No Automation

1. Driver Assistance - driver is fully engaged. Voice prompts.

2. Partial Automation - lane keeping with adaptive cruise control to maintain constant speed. ADAS (advanced driver assistance systems). No OEDR. Examples: GM Super Cruise and Nissan ProPilot Assist.

3. Conditional Automation - “Traffic Jam Pilots” - Partial Driving Automation with OEDR. Can change lanes. Driver must be ready to take over. The Audi A8 sedan can perform OEDR in slow traffic. Ford is hoping to do it too.

4. High Automation - Fully autonomous in controlled conditions. Does not require full user alertness. May have no controls for human use, operating within a geofence. Example: Waymo.

5. Full Automation - unrestricted ODD (any road surface and road type), without a geofence. Deployments are starting in closed-venue, low-speed environments: minibuses, valet parking, delivery robots.

PROTIP: As cars automate more, and human drivers have less to do, inattention becomes even more of an issue.

Volvo’s Road Train “platooning” had automated vehicles following a human lead vehicle (at high speeds).

In 2012, Nevada issued Google the first autonomous vehicle testing license on public roads. California followed.

HAZOP: Hazard and Operability Study - A variation of FMEA (Failure Mode and Effects Analysis) which uses guide words to brainstorm over sets of possible failures that can arise.

NHTSA: National Highway Traffic Safety Administration - An agency of the Executive Branch of the U.S. government which has developed a 12-part framework (PDF) to structure safety assessment for autonomous driving.

Shared rides

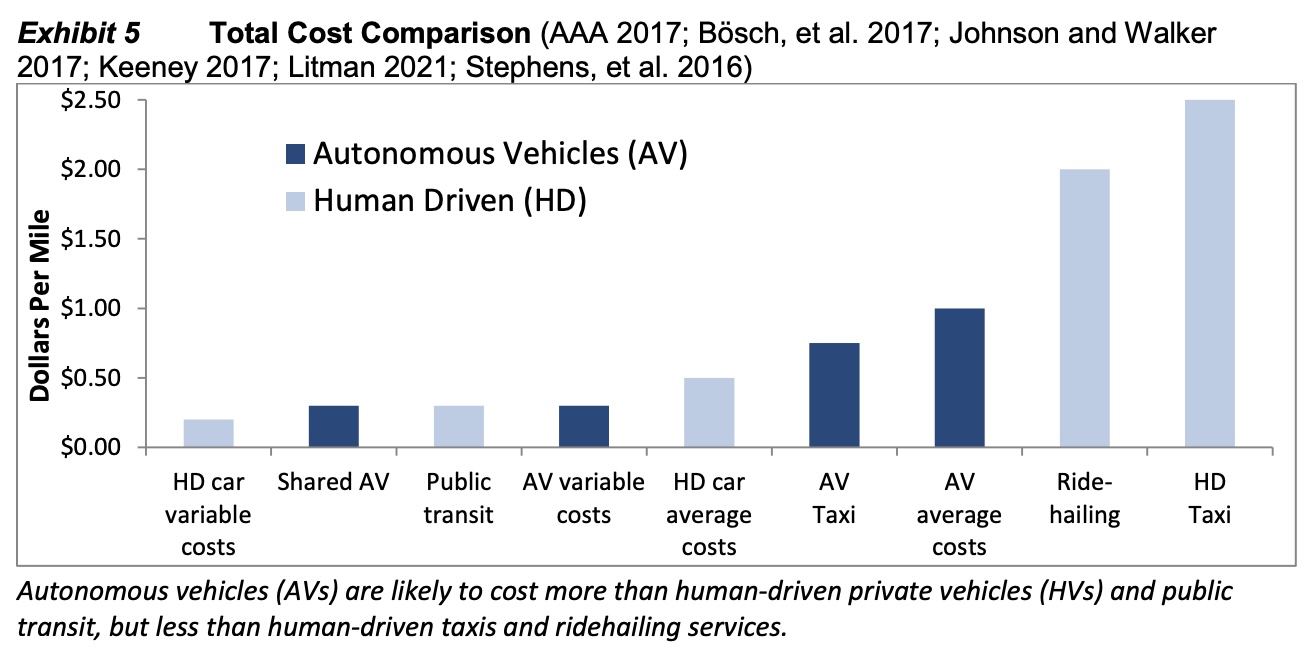

From https://www.vtpi.org/avip.pdf:

The high cost of computing power on purpose-built AVs forces amortization across many rides in taxis.

Uber and Lyft have decimated the jobs of human drivers.

COVID-19 is making it even more dangerous to be a driver.

City governments may resist because this undermines revenue from car parks and traffic tickets.

Uber has, since 2012, been offering free rides in driverless cars around Pittsburgh and Chandler, AZ. Zoox got the first California permit to transport passengers in self-driving cars, in 2018. Waymo in 2019. Uber in 2020. Cruise has been offering rides in San Francisco, California.

Las Vegas.

NOTE: On Teslas even the glovebox lock is controlled by the computer.

DARPA

Carnegie Mellon University won the 2007 DARPA Urban Challenge. Stanford was second.

Amazon

https://www.fastcompany.com/90754276/inside-the-design-of-zoox-amazons-quirky-self-driving-car

https://www.businessinsider.com/amazon-zoox-seattle-self-driving-cars-test-rain-weather-climate-2021-10

Companies

- https://www.technologyreview.com/s/604006/autox-has-built-a-self-driving-car-that-navigates-with-a-bunch-of-50-webcams/

Apple

Apple has not openly discussed their self-driving car program.

In 2016, Apple’s “Titan” program scaled back its 1,000 employee self-driving car platform.

A disclosure in 2018 states that 5,000 employees at Apple know about a self-driving car program in the company.

In April 2018, Apple hired Google’s former AI boss to run Siri and machine learning.

Argo

https://www.wired.com/story/ford-abandons-the-self-driving-road-to-nowhere/ Argo, with its home base in Pittsburgh, had pedigree thanks to president Peter Rander, an alumnus of Uber’s abandoned self-driving project and among those the ride-hailing company had poached from the National Robotics Engineering Center, and CEO Bryan Salesky, a veteran of the DARPA challenges that kicked off the 21st century’s rush to autonomy.

https://www.cnn.com/2022/10/26/tech/ford-self-driving-argo-shutdown Ford takes $2.7 billion hit as it drops efforts to develop full self-driving cars

https://www.bloomberg.com/news/articles/2022-11-21/amazon-amzn-self-driving-car-deal-with-ford-f-vw-fell-through Amazon Backed Out of Taking a Stake in Argo. Then the Self-Driving Startup Folded.

https://corporate.ford.com/operations/ford-autonomous-vehicles-and-mobility.html Ford is building a commercial self-driving service for ride-hailing and goods delivery starting in Austin, TX, Miami, FL and Washington, D.C. with plans to scale.

Clemson

modelers at Revolution Design Studios

Ford is bringing together all the complex pieces needed to launch a scalable, successful self-driving service: this includes the self-driving system we are developing with our technology partner Argo AI, integrated into our Ford Escape Hybrid vehicle platform, a thoughtful customer experience across all touchpoints, physical infrastructure in the cities where we operate, as well as working with ride-hailing and delivery demand partners to bring our service to customers. See https://medium.com/self-driven

Alphabet (Google)

Alphabet (Google) holds a seven percent stake in Uber. Google also owns Waymo.

VIDEO: Chris Urmson, head of Google’s driverless car program, shares footage showing how cars see.

https://www.wikiwand.com/en/Waymo

Google had a “firefly” vehicle.

Baidu’s Apollo

Baidu is the Google of China, providing a search engine.

Apollo (@apolloplatform) is Baidu’s self-titled “world’s first production-ready” AV.

Apollo Valet Parking is due to launch in 2020.

David Silver at Udacity created a free intro class using Baidu’s Apollo library at https://github.com/ApolloAuto/apollo

Baidu’s AV has 5 cameras and 12 ultrasonic radars. Processors onboard run Xilinx processors on Infineon chips.

DuerOS is Baidu’s conversational AI program with embedded AI speech and image recognition.

Cruise

GM cars have “SuperCruise”

Comma.ai

Rather than building vehicles, Comma.ai in San Diego, founded by George Hotz, offers its $100 “Comma two” Android mobile app, which provides self-driving capabilities through a CAN bus wire harness tapping into the OBD-II port on several recent models of cars: Acura RDX, Chrysler Pacifica, Honda Accord/CRV 2015+/Fit, Jeep Grand Cherokee 2015+, Kia, Lexus CT/ES/IS/NX/RX, Subaru, Toyota Avalon/Camry/C-HR/Corolla/Highlander 2017+/Prius 2016+/RAV4, Volkswagen Golf 2015+. “The 2020 Corolla is the best car with OpenPilot. It has less lag.”

Comma’s “openpilot” software is open sourced, so it’s difficult for governments to regulate.

The Heads-up Display (HUD) streams video for viewing online at https://my.comma.ai/cabana. It uses OpenStreetMap.

Comma’s access to OBD-II enables response to ABS (Anti-lock Braking System) triggers.

VIDEO: The camera facing the driver detects whether the driver is paying attention to the road. There are also infrared LEDs on Comma’s windshield case to provide night-time driver monitoring.

- VIDEO: https://www.youtube.com/watch?v=2Veptye978c

Lyft

Tesla Motors

Elon Musk became the wealthiest person in the world with 25% ownership in Tesla.

VIDEO: “Lidar is a fool’s errand”.

X-Motors

Hardware

Baidu uses the Surround Computer Vision Kit hardware and Responsibility-Sensitive Safety (RSS) model from Intel’s Mobileye.

ASUS GTX1080 GPU-A8G- Gaming GPU Card

GNSS: Global Navigation Satellite System - A generic term for all satellite systems which provide position estimation. The Global Positioning System (GPS) made by the United States is a type of GNSS. Another example is the Russian-made GLONASS (Globalnaya Navigatsionnaya Sputnikovaya Sistema).

IMU: Inertial Measurement Unit - A sensor device consisting of an accelerometer and a gyroscope. The IMU is used to measure vehicle acceleration and angular velocity, and its data can be fused with other sensors for state estimation.

RADAR: Radio Detection And Ranging - A type of sensor which detects range and movement by transmitting radio waves and measuring return time and shifts of the reflected signal.

SONAR: Sound Navigation And Ranging - A type of sensor which detects range and movement by transmitting sound waves and measuring return time and shifts of the reflected signal.
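
Both RADAR and SONAR rely on the same arithmetic: range comes from the round-trip time of the echo, and radial speed comes from the Doppler shift of the reflected signal. The sketch below illustrates that arithmetic only. The function names are hypothetical, and the speed of sound assumes dry air at about 20 °C.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, propagation speed of radio waves
SPEED_OF_SOUND = 343.0          # m/s, assumed: dry air at about 20 degrees C

def range_from_echo(round_trip_s, wave_speed):
    """Distance to the target; the wave travels out and back, hence the / 2."""
    return wave_speed * round_trip_s / 2.0

def radial_speed_from_doppler(freq_shift_hz, carrier_hz, wave_speed=SPEED_OF_LIGHT):
    """Closing speed from the two-way Doppler shift (target speed << wave speed)."""
    return freq_shift_hz * wave_speed / (2.0 * carrier_hz)

print(range_from_echo(1e-6, SPEED_OF_LIGHT))   # radar echo after 1 microsecond
print(range_from_echo(0.01, SPEED_OF_SOUND))   # sonar echo after 10 milliseconds
```

The same 10 ms delay that means under two metres for sonar would mean about 1,500 km for radar, which is one reason ultrasonic sensors are used for close-range parking and radar for long-range detection.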

Camera sensor:

- Forsyth, D. A. and J. Ponce. (2003). Computer vision: a modern approach (2nd edition). New Jersey: Pearson. Read sections 1.1, 1.2, 2.3, 5.1, 5.2.

- Szeliski, R. (2010). Computer vision: algorithms and applications. Springer Science & Business Media. Read sections 2.1, 2.2, 2.3 (PDF available online: http://szeliski.org/Book/drafts/SzeliskiBook_20100903_draft.pdf)

- Hartley, R., & Zisserman, A. (2003). Multiple view geometry in computer vision. Cambridge university press. Read sections 1.1, 1.2, 2.1, 6.1, 6.2

Controls

The computer needs to be able to control the vehicle’s steering, throttle, and braking systems to execute its plan.

So vehicles need to be equipped with by-wire systems: brake by-wire, steering by-wire, throttle by-wire and shift by-wire, etc.

Additional organizations work with the Autonomous Technology Certification Facility (ATCF)

LTI: Linear Time Invariant - A linear system whose dynamics do not change with time. For example, a car using the unicycle model is an LTI system. If the model includes the tires degrading over time (and changing the vehicle dynamics), then the system would no longer be LTI.

MPC: Model Predictive Control - A method of control whose control input optimizes a user-defined cost function over a finite time horizon. A common form of MPC is finite horizon LQR (linear quadratic regulation).

LQR: Linear Quadratic Regulation - A method of control utilizing full state feedback. The method seeks to optimize a quadratic cost function dependent on the state and control input.
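
To make finite horizon LQR concrete, here is a minimal sketch that computes the time-varying feedback gains with a backward Riccati recursion and applies them to a toy double integrator (position and velocity). The plant, the time step, and the weights Q and R are assumptions picked for illustration, not a vehicle model from these notes.

```python
import numpy as np

def finite_horizon_lqr(A, B, Q, R, N):
    """Gains K_0..K_{N-1} minimising the sum of x'Qx + u'Ru, terminal cost Q."""
    P = Q.copy()
    gains = []
    for _ in range(N):  # sweep backward from the end of the horizon
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        P = Q + A.T @ P @ (A - B @ K)
        gains.append(K)
    return gains[::-1]  # reorder so gains[0] applies at the first step

dt = 0.1                                  # assumed time step (s)
A = np.array([[1.0, dt], [0.0, 1.0]])     # double integrator dynamics
B = np.array([[0.5 * dt**2], [dt]])
Q = np.diag([1.0, 0.1])                   # state cost (assumed)
R = np.array([[0.01]])                    # control effort cost (assumed)

gains = finite_horizon_lqr(A, B, Q, R, N=50)
x = np.array([[1.0], [0.0]])              # start 1 m from the target, at rest
for K in gains:
    x = A @ x - B @ (K @ x)               # full state feedback u = -K x
print(x.ravel())                          # state after the 5-second horizon
```

An MPC controller would repeat this optimisation at every time step, apply only the first input, and then re-plan from the newly measured state.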

Software

PID: Proportional Integral Derivative Control - A common method of control defined by 3 gains:

1) A proportional gain which scales the control output based on the amount of the error

2) An integral gain which scales the control output based on the amount of accumulated error

3) A derivative gain which scales the control output based on the error rate of change
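
The three gains above can be sketched as a small controller class. The toy plant (a first-order speed model with drag) and the gain values are assumptions chosen only so the loop settles; they are not tuned for a real vehicle.

```python
class PID:
    """Textbook PID controller with proportional, integral and derivative terms."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0       # accumulated error
        self.prev_error = None    # no derivative is available on the first step

    def step(self, error, dt):
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

# Longitudinal speed control on an assumed toy plant: dv/dt = u - 0.1 * v
pid = PID(kp=1.0, ki=0.5, kd=0.05)
v, target, dt = 0.0, 20.0, 0.05
for _ in range(2000):                     # simulate 100 seconds
    u = pid.step(target - v, dt)
    v += (u - 0.1 * v) * dt
print(round(v, 3))
```

The integral term is what removes the steady-state error here: with proportional gain alone, the drag term would leave the speed permanently below the target.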

3D point coordinates from stereopsis: derive depth from the disparity between the left and right images, given the camera extrinsics [R t], the baseline b, and the focal length f.

Disparity estimation: Use stereo rectification to force Epipolar lines to be horizontal.

- http://vision.middlebury.edu/stereo/eval3/ benchmark
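
For a rectified stereo pair, depth follows from similar triangles: Z = f · b / d, where d is the disparity in pixels. The sketch below recovers a 3D point in the left camera frame. The function name, the principal point (cx, cy), and the numbers are assumptions for illustration.

```python
def stereo_point(u_left, v_left, u_right, f, b, cx, cy):
    """3D point (x, y, z) in the left camera frame from a rectified stereo match.

    u_left, v_left: pixel in the left image; u_right: matching column on the right
    f: focal length (pixels); b: baseline (metres); cx, cy: principal point (pixels)
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = f * b / disparity          # depth from similar triangles
    x = (u_left - cx) * z / f      # back-project the pixel at that depth
    y = (v_left - cy) * z / f
    return x, y, z

# A 35-pixel disparity with f = 700 px and b = 0.5 m puts the point 10 m away
print(stereo_point(740.0, 360.0, 705.0, f=700.0, b=0.5, cx=640.0, cy=360.0))
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for near ones.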

Convolution is a cross-correlation where the filter is flipped both horizontally and vertically before being applied to the image. Unlike cross-correlation, convolution is associative. Uses include template matching and gradient computation.

Image filtering (OpenCV), https://docs.opencv.org/3.4.3/d4/d86/group__imgproc__filter.html
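
The relationship between the two operations can be checked with a few lines of NumPy. This is a deliberately slow, loop-based sketch with no padding, written to show the kernel flip. It is not how OpenCV implements filtering, and the function names are hypothetical.

```python
import numpy as np

def cross_correlate2d(image, kernel):
    """Slide the kernel over the image without flipping ('valid' region only)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def convolve2d(image, kernel):
    """Convolution = cross-correlation with the kernel flipped on both axes."""
    return cross_correlate2d(image, kernel[::-1, ::-1])

# A vertical step edge and the Sobel x kernel for gradient computation
image = np.tile(np.array([0.0, 0.0, 0.0, 1.0, 1.0, 1.0]), (5, 1))
sobel_x = np.array([[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]])

print(cross_correlate2d(image, sobel_x)[0])  # [0. 4. 4. 0.]
print(convolve2d(image, sobel_x)[0])         # same magnitudes, opposite sign
```

For a symmetric kernel such as a box or Gaussian blur the flip changes nothing, so the two operations give identical results.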

LAB: Applying Stereo Depth to a Driving Scenario

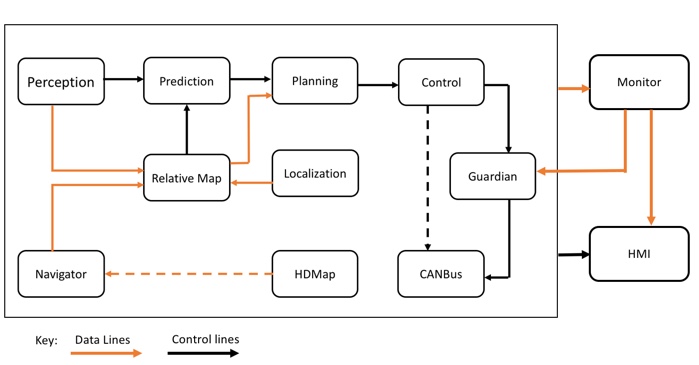

Architecture of Processes

This 2017 TED Talk [9:10] by David Silver describes the various technologies necessary:

An updated diagram:

The eventual design for version 3.0 of Baidu’s design adds a “Guardian” component:

The “Canbus” is a Controller Area Network (CAN) which transfers data between devices without the assistance of a host computer. Attach a temperature sensor to the surface of the main IC chip on ESD CAN (an Altera FPGA chip) to monitor the surface temperature of the chip to make sure it is not overheating.

HMI (Human-Machine Interface)

An off-line demo can be installed and run on laptops without the expensive hardware. It’s kinda like the Grand Theft Auto game. It uses Baidu’s Python-based Apollo Dreamview visualization software running under Linux (Ubuntu 14.04). Apollo is based on Linux Kernel 4.4.32.

Cruise’s rosbag.js is a Node.js and browser compatible module (Node@10.x) for reading rosbag binary data files.

RTOS

GPS

It needs a three-dimensional model (point cloud) of the road network, including the road, buildings, tunnels, etc. with road names and the speed limit for each stretch of road, traffic lights, and other traffic control information.

Apollo uses the OpenDRIVE map standard used by its competitors. Baidu has 300 survey vehicles to map all the highways in China.

[4:50] A particle filter is a sophisticated type of triangulation that calculates how far the vehicle is from various landmarks (street lights, traffic signs, manhole covers).

Localization

For a vehicle to “localize” itself to single-digit centimeter accuracy, it currently needs to use several technologies.

Self-driving cars need to figure out where they are in the world more precisely than GPS (Global Positioning System) can provide. A GNSS (Global Navigation Satellite System) receiver needs at least 3 of 30 satellites to calculate its location (based on time of flight). Also, GPS updates at only about 10 Hz, which is too slow.

RTK (Real Time Kinematic) positioning uses ground stations to provide “ground truth” used to improve GPS accuracy to within about 10 centimeters.

The Inertial Measurement Unit (IMU) consists of a 3-axis gyroscope and accelerometer. It updates at 1000 Hz (near real time). The system has to reconcile two XY coordinate frames: the vehicle and the map. In the 3D Gyroscope, the spin axis is set to the global coordinate system while the 3 gimbals rotate.
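
Reconciling the vehicle frame with the map frame comes down to a rotation by the vehicle heading followed by a translation by the vehicle position. A minimal 2D sketch follows. The function name and the convention (x forward, y left, heading measured counter-clockwise from the map x axis) are assumptions.

```python
import math

def vehicle_to_map(px, py, vehicle_x, vehicle_y, heading):
    """Convert a point from the vehicle frame to the map frame.

    px, py: point in the vehicle frame (x forward, y left; assumed convention)
    vehicle_x, vehicle_y: vehicle position in the map frame
    heading: vehicle yaw in radians, counter-clockwise from the map x axis
    """
    c, s = math.cos(heading), math.sin(heading)
    return (vehicle_x + c * px - s * py,
            vehicle_y + s * px + c * py)

# A landmark 2 m ahead of a vehicle at (10, 5) that faces the map's +y direction
print(vehicle_to_map(2.0, 0.0, 10.0, 5.0, math.pi / 2))
```

The landmark lands at approximately (10, 7) in map coordinates, because "ahead" for this vehicle points along the map's y axis.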

LiDAR

LiDARs today use 32 lasers and emit 1 or 2 million beams per second. A 64-laser system emitting 6.4 million beams a second would give superior vertical resolution and quicker refreshes. This would be better able to capture small, fast objects such as animals darting into the road.

Alex Lidow, CEO and cofounder of Efficient Power Conversion, a provider of the gallium nitride chips found in many modern lidars. https://backchannel.com/how-my-public-records-request-triggered-waymos-self-driving-car-lawsuit-1699ff35ac28#.vi4talr7i by @meharris

Random variables are IID (independent and identically distributed) when each random variable follows the same probability distribution and all the variables are mutually independent (which implies the cross-covariance of any pair is zero). When measurements do not share the same noise variance, use Weighted Least Squares (VIDEO). The higher the expected noise, the lower the weight on the measurement.

- Jupyter Notebook - define H (the Jacobian matrix) & R.

- Here’s an interactive least squares fitting simulator provided by the PhET Interactive Simulations project at the University of Colorado: https://phet.colorado.edu/sims/html/least-squares-regression/latest/least-squares-regression_en.html

- You can find an overview of the Method of Least Squares in the Georgia Tech online textbook: Dan Margalit and Joseph Rabinoff, Interactive Linear Algebra.

- Wikipedia

- Read Chapter 3, Sections 1 and 2 of Dan Simon, Optimal State Estimation (2006).
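
Here is a minimal sketch of Weighted Least Squares using the H (measurement Jacobian) and R (measurement noise covariance) named above. The scenario, two precise and two noisy readings of one constant quantity, and all numbers are assumptions made up for illustration.

```python
import numpy as np

def weighted_least_squares(H, y, R):
    """Solve x = (H' R^-1 H)^-1 H' R^-1 y; noisier measurements get less weight."""
    R_inv = np.linalg.inv(R)
    return np.linalg.solve(H.T @ R_inv @ H, H.T @ R_inv @ y)

H = np.array([[1.0], [1.0], [1.0], [1.0]])   # every reading observes x directly
y = np.array([10.2, 9.8, 11.0, 9.0])         # two precise, then two noisy readings
R = np.diag([0.01, 0.01, 1.0, 1.0])          # noise variances (assumed)

x_hat = weighted_least_squares(H, y, R)
print(x_hat)
```

With these variances the precise readings are weighted 100 times more heavily than the noisy ones. When every variance in R is equal, the estimate reduces to the ordinary least squares result, which here is the plain average.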

VIDEO: Wikipedia: A linear recursive estimator using Recursive Least Squares updates the running estimate from the previous time step with each new measurement.
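
A single Recursive Least Squares update can be sketched as below: compute a gain, correct the previous estimate with the new measurement, then shrink the estimate covariance. The large initial covariance (a nearly uninformative prior) and the measurement values are assumptions for illustration.

```python
import numpy as np

def rls_update(x_hat, P, y, H, R):
    """One recursive least squares step.

    x_hat, P: previous estimate and its covariance
    y, H, R:  new measurement, its Jacobian, and its noise covariance
    """
    K = P @ H.T @ np.linalg.inv(H @ P @ H.T + R)   # estimator gain
    x_new = x_hat + K @ (y - H @ x_hat)            # correct with the innovation
    P_new = (np.eye(len(x_hat)) - K @ H) @ P       # uncertainty shrinks
    return x_new, P_new

x_hat = np.array([0.0])       # initial guess
P = np.array([[1e6]])         # assumed: very uncertain prior
H = np.array([[1.0]])
R = np.array([[1.0]])
for measurement in [9.0, 11.0, 10.0, 10.0]:
    x_hat, P = rls_update(x_hat, P, np.array([measurement]), H, R)
print(x_hat, P)
```

After four equally weighted measurements the estimate sits very close to their mean, without ever having stored the earlier measurements. The same update structure reappears in the correction step of the Kalman filter.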

Least Squares and Maximum Likelihood: interactive explanation of the Central Limit Theorem by Michael Freeman. STAT 414/415 website from Penn State. History of the method of maximum likelihood and its relationship to least squares.

Iterative Closest Point (ICP) algorithm

Perception

Ego localization: Position, Velocity, acceleration, Orientation, angular motion.

High definition (HD) maps use computer vision to recognize objects within images captured:

- Road and lane markings on-road

- Potholes on-road

- Construction signs, obstructions on-road

- Curbs off-road

- Traffic lights off-road

- Road signs off-road

Sensor uncertainty.

Occlusion, reflection, illumination, lens flare. So redundant sensors are useful.

Classification, detection, segmentation.

Perception uses CNNs (Convolutional Neural Networks) on data from cameras, radar, and LiDAR (Light Detection and Ranging).

Deep (learning) Neural Networks are used to draw bounding boxes to identify which lane the car is using.

The KITTI Vision Benchmark Suite

[Forsyth] – Forsyth, David A., and Jean Ponce. “A modern approach.” Computer vision: a modern approach (2003): 88-101.

[Goodfellow] – Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning (Vol. 1). Cambridge: MIT press. PDF available online: https://www.deeplearningbook.org/

[Szeliski] – Szeliski, R. (2010). Computer vision: algorithms and applications. Springer Science & Business Media. PDF available online: http://szeliski.org/Book/drafts/SzeliskiBook_20100903_draft.pdf

[Hartley] – Hartley, R., & Zisserman, A. (2003). Multiple view geometry in computer vision. Cambridge university press.

Prediction

An RNN (Recurrent Neural Network) is used to project trajectories in Frenet coordinates over short and long time horizons.

Software creates waypoints that plot the plan.

Planning

Predictive planning - Planning the expected route…

Reactive planning

Analyzing the actual route traveled.

Frazzoli’s Survey for Autonomous Planning [for purchase] covers motion planning and other high-level behaviour.

Autonomous driving in urban environments: “Boss” and the Urban Challenge discusses one of the very early mixed planning systems from the winner of the DARPA Urban Challenge.

Here are some rules for driving at a stop sign. Which of the following is an appropriate priority ranking?

1) For non all-way stop signs, stop at a point where you can see oncoming traffic without blocking the intersection

2) If there are pedestrians crossing, stop until they have crossed

3) If you reach a stop sign before another vehicle, you should move first if safe

Trainings

VIDEO: MIT 6.S094: Introduction to Deep Learning and Self-Driving Cars

BTW David Silver worked at Ford’s self-driving car program and now teaches Udacity’s hands-on online Nanodegree programs on self-driving cars: the 4-month Intro and the advanced Engineer program (2 three-month terms). Students work on Udacity’s car named Nanna.

- https://discussions.udacity.com/

- Slack for students

Udacity was founded by Sebastian Thrun (from Germany), the “father” of the self-driving car. When he was a professor at Stanford, his team won the 2005 DARPA Grand Challenge car race. He then joined Google.

Coursera

Coursera.com offers a 100% on-line “Self-Driving Cars” specialization from the University of Toronto, Canada professors of Applied Engineering (Aerospace Studies) Steven Waslander and Jonathan Kelly at the Waterloo Autonomous Vehicles Laboratory (wavelab.uwaterloo.ca). Experts from Oxbotica and Zoox give talks.

Build your own self-driving software stack and get ready to apply for jobs in the autonomous vehicle industry.

Your own final project is to implement a Python controller and create a trajectory.txt file containing waypoints to navigate a provided track using Throttle, Steering, and Brake commands.

Install a customized version of the CARLA simulator created using Unreal Engine: macOS is not natively supported by CARLA and therefore the CARLA binaries that we provide also do not support macOS. It is recommended to create a dual-boot to either Linux or Windows in order to setup CARLA for the course.

- Dual-Boot between Ubuntu and MacOS - https://help.ubuntu.com/community/MactelSupportTeam/AppleIntelInstallation

- Install Windows on your Mac with Boot Camp - https://support.apple.com/en-ca/HT201468

- Windows 7 64-bit (or later) or Ubuntu 16.04 (or later), Quad-core Intel or AMD processor (2.5 GHz or faster), NVIDIA GeForce 470 GTX or AMD Radeon 6870 HD series card or higher, 8 GB RAM, and OpenGL 3 or greater (for Linux computers).

https://github.com/carla-simulator/carla/ Use the shell script to start CARLA.

https://www.coursera.support/s/article/360044758731-Solving-common-issues-with-Coursera-Labs?

Server mode

Background is preferred in

- linear algebra, probability, statistics, calculus, physics, control theory, and Python programming.

- intermediate programming experience in Python 3

- familiarity with linear algebra (matrices, vectors, matrix multiplication, rank, eigenvalues and vectors, and inverses)

- statistics (Gaussian probability distributions)

- multivariate calculus (derivatives, partial derivatives)

- physics (forces, moments, inertia, Newton’s laws)

4-courses:

- Introduction to Self-Driving Cars

- State Estimation and Localization for Self-Driving Cars (Kalman filters)

- Motion Planning for Self-Driving Cars - mission planning, behavior planning, and local planning. Find the shortest path over a graph or road network using Dijkstra’s and the A* algorithm, use finite state machines to select safe behaviors to execute, and design optimal, smooth paths and velocity profiles to navigate safely around obstacles while obeying traffic laws. Build occupancy grid maps of static elements in the environment and use them for efficient collision checking.
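
As an illustration of the mission planning step, here is a minimal sketch of Dijkstra’s algorithm over a small road network. The graph, its node names, and the edge costs are made up for the example and are not course material.

```python
import heapq

def dijkstra(graph, start, goal):
    """Return (cost, path) of the cheapest route, or (inf, []) if unreachable.

    graph maps each node to a dict of {neighbor: non-negative edge cost}.
    """
    queue = [(0.0, start, [start])]   # priority queue ordered by cost so far
    best = {start: 0.0}               # cheapest known cost to each node
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue                  # stale entry; a cheaper route was found
        for neighbor, weight in graph.get(node, {}).items():
            new_cost = cost + weight
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                heapq.heappush(queue, (new_cost, neighbor, path + [neighbor]))
    return float("inf"), []

# Hypothetical intersections with travel costs in minutes
roads = {
    "A": {"B": 4, "C": 2},
    "B": {"D": 5},
    "C": {"B": 1, "D": 8},
    "D": {},
}
print(dijkstra(roads, "A", "D"))  # (8.0, ['A', 'C', 'B', 'D'])
```

A* uses the same loop but orders the queue by cost so far plus a heuristic estimate of the remaining distance (for example straight-line distance to the goal), which usually explores fewer nodes on road networks.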

1,840 views of the specialization congratulations video.

References

https://interestingengineering.com/transportation/autonomous-self-driving-cars-tesla

https://www.mckinsey.com/features/mckinsey-center-for-future-mobility/our-insights/whats-next-for-autonomous-vehicles