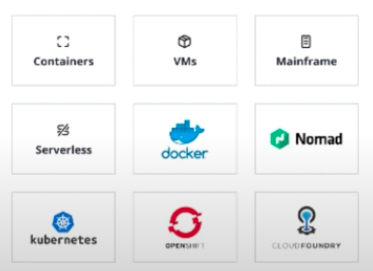

Enterprise-grade secure Zero-Trust routing to replace East-West load-balancing using service names rather than static IP addresses. Enhance Service Mesh with mTLS and health-based APIs in AWS, Azure, GCP, and other clouds running Kubernetes as well as ECS, EKS, VMs, databases, even mainframes outside Kubernetes

Overview

- Most Popular Websites about Consul

- Why Consul?

- Due to Microservices

- 4 pillars and 6 principles of modern app design

- Legacy networking infrastructure mismatches

- Legacy mismatches solved by Consul Editions

- Security Frameworks

- Mitigation Actions

- BOOK: Consul: Up and Running

- Ways to setup Consul with demo infra

- Demo apps

- Certification exam

- B. On HashiCorp’s Consul Cloud SaaS HCP (HashiCorp Cloud Platform)

- The Automated Way

- Create resources within AWS

- CTS for NIA

- Hashicorp Cloud Account

- Store secrets

- (optional) Configure kubectl

- Create a HashiCorp Virtual Network (HVN)

- Peer HVN to a AWS VPC

- Create a HCP Consul cluster

- Enable a public or private IP

- Configure L3 routing and security ???

- Configure Consul ACL Controller

- Run Consul clients within the provisioned AWS VPC

- Run a demo application on the chosen AWS runtime

- Destroy Consul

- Service Discovery Workflow

- C. On a macOS laptop using Docker

- Consul CLI commands

- Consul Keyboard shortcuts

- Raft configuration

- serf_lan and serf_wan

- Chaos Engineering

- Service Graph Intentions

- Services

- (Consul) Nodes (Health Checks)

- ACL (Access Control List) Operations

- D. In a single datacenter (with Kubernetes)

- Kubernetes with Consul

- Sidecar proxy injection

- Service Discovery Registry DNS Queries

- Assist or Replaces Kubernetes

- D. In a single datacenter using Kubernetes

- E. In a single 6-node datacenter (survive loss of an Availability Zone)

- F. For HA on multiple datacenters federated over WAN

- Enterprise configuration

- Manage from another Terminal

- Consul Tutorials from HashiCorp

- G. Integrations to legacy VMs, mainframes, etc.

- Customize HTTP Response Headers

- Collaborations

- Competitors

- References

- References

- HashiCorp Corporate Social

Consul is “a multi-cloud service networking platform to connect and secure any service across any runtime platform and public or private cloud”.

NOTE: Content here is my personal opinion, and is not intended to represent any employer (past or present). “PROTIP:” here highlights information I haven’t seen elsewhere on the internet because it is hard-won, little-known but significant facts based on my personal research and experience.

This is not a replacement for you going through professionally developed trainings.

This takes a deep-dive, bottom-up, hands-on approach to usage in production. So automation (shell scripts) is used to minimize your typing and clicking (which is error-prone and not repeatable). But rather than throwing a bunch of buzzwords at you to memorize, commentary is logically sequenced to introduce concepts in the context of what you just typed. All without sales generalizations. All in this one single big page for easy search.

Most Popular Websites about Consul

The most popular websites about Consul:

- The marketing home page for HashiCorp’s Consul:

  https://www.consul.io/

- Wikipedia entry:

  https://www.wikiwand.com/en/Consul_(software)

  “Consul was initially released in 2014 as a service discovery platform. In addition to service discovery, it now provides a full-featured service mesh for secure service segmentation across any cloud or runtime environment, and distributed key-value storage for application configuration. Registered services and nodes can be queried using a DNS interface or an HTTP interface.[1] Envoy proxy provides security, observability, and resilience for all application traffic.”

- Live classes on HashiCorp’s Enterprise Academy:

  https://events.hashicorp.com/hashicorp-enterprise-academy

- Source code:

  https://github.com/hashicorp/consul

  Initiated in 2014, this repo has garnered nearly 25,000 stars, with over a million downloads monthly. It’s 1.5K bytes after git clone … -depth 1 (just the latest main branch contents)

- Detailed technical documentation:

  https://www.consul.io/docs

- Tutorials from HashiCorp:

  https://learn.hashicorp.com/consul

  https://learn.hashicorp.com/tutorials/consul/service-mesh

- Hands-on tutorials:

  https://play.instruqt.com/hashicorp?query=Consul

- Specifications:

  https://cloud.hashicorp.com/docs/consul/specifications

- Technical Discussions:

  https://discuss.hashicorp.com/c/consul/29

- Stackoverflow has highly technical questions & answers:

  https://stackoverflow.com/search?q=%23hashicorp-consul

- Reddit:

  https://www.reddit.com/search/?q=hashicorp%20consul

- Licensed Support from HashiCorp is provided to those authorized to access HashiCorp’s ZenDesk system:

  https://hashicorp.zendesk.com/agent/dashboard

Why Consul?

There are several compelling uses for HashiCorp Consul.

Fundamentally, Consul secures networking between microservices based on “Zero Trust” principles.

Consul overcomes several deficiencies in Kubernetes.

The most compelling use of Consul is for those who have installed a Service Mesh. Adding Consul as an additional sidecar to Envoy provides the enterprise a multi-DC, hybrid-cloud, multi-cloud global mesh.

Consul’s “Secure Service Networking” provides the enterprise-scale features and support needed by complex Global 2000 enterprises running a multi-cloud, hybrid-cloud, multi-platform global mesh.

Generic benefits from adoption of Consul and just about anything else:

- Faster time to market and velocity of getting things done, from fewer manual mistakes

- Reduce cost via tools (operational efficiency through more visibility and automation)

- Reduce cost via people from improved availability (uptime)

- Reduce risk of downtime from better reliability

- Reduce risk of breach from better guardrails (using Sentinel & OPA)

- Compliance with regulatory demands (central source of truth, immutable, automated processes)

Technical words that describe what Consul can do (listed at https://www.consul.io/):

- Consul on Kubernetes

- Control access with Consul API Gateway

- Discover Services with Consul

- Enforce Zero Trust Networking with Consul

- Load Balancing with Consul

- Manage Traffic with Consul

- Multi-Platform Service Mesh with Consul

- Network Infrastructure Automation with Consul

- Observability with Consul

Due to Microservices

Consul provides a “backbone” for a microservice architecture.

In hopes of building more reliable systems in the cloud faster and cheaper, enterprises create distributed microservices instead of monolithic architectures (which are more difficult to evolve).

“Microservices is the most popular architectural approach today. It’s extremely effective. It’s the approach used by many of the most successful companies in the world, particularly the big web companies.” –Dave Farley

Microservices is based on development of separate programs providing services. Each program can move and scale independently of other programs, existing in an ephemeral (temporary) way. Because dev teams won’t have the same “hard-coded” dependencies found in monolithic approaches, there is less need to wait for teams to finish work at the same time. This increases agility and operational efficiency.

4 pillars and 6 principles of modern app design

from Libby Meren, Senior Manager for OSS Evangelism, NGINX at F5.

Here are the attributes of ‘modern apps’ that are crucial for responding to intense pressure to spin up additional compute infrastructure quickly to meet unexpected demand. There are four pillars: scalability, portability, resiliency, and agility.

- Pillar 1: Scalability

  Fast scaling - increase an application’s capacity by 100% within five minutes. Can the application quickly expand capacity to meet unforeseen increases in demand?

  Long scaling - increase an application’s capacity 10x over a year or more, without requiring a major refactoring of code or large shifts in infrastructure requirements. Does the app have a clean design with loose dependencies and loose couplings to infrastructure components?

- Pillar 2: Portability

  Functional portability - app code runs inside a container without external dependencies tightly coupled to a single environment. Can core functional elements, code, and logic of an application remain the same regardless of the environment in which it is running?

  Management portability - Can the app be monitored, secured, and observed in the same way, with the same tooling and same sets of reporting capabilities, regardless of the environment?

- Pillar 3: Resiliency

  User‑facing resiliency - Do application users, either machine or human, notice a performance issue or functionality problem caused by a fault or failure of either a modern app itself or any service or infrastructure it depends on? Or do failures cascade, impacting even automated services and highly dependent microservices?

  Failover resiliency - A modern app is able to restore within five minutes any critical service to 100 percent of what is necessary to handle average workloads. Designers should think about failing over to unaffected compute resources as a key part of application design, one that is implicit in self‑healing, environmentally aware apps.

- Pillar 4: Agility

  Code agility - Is the app’s code designed to constantly absorb new code? To enforce loose coupling and reduce intra‑application code dependencies and rigidity, applications are composed of microservices and linked via APIs.

  Infrastructure agility - Can the app’s infrastructure be spun up or down to satisfy the needs of all customers including application development teams, security teams, and DevOps teams?

The six principles of modern apps

Most modern apps employ architectures following these six principles:

- Be platform agnostic - Build applications to run without any consideration of the platforms or environments where they are likely to run. Containers have become the de-facto standard for platform‑agnostic runtimes.

- Prioritize open source software - So that developers can “look under the hood of the code” in order to design for portability and scalability.

- Define everything (possible) by code - To make changes and approvals at faster-than-human speed.

- Design with automated CI/CD as a native/default state - for complete and quick code pushes, infrastructure deployment, and security requirements.

- Practice secure development - “shift left” to test all code as early as possible in the development process using software composition analysis (SCA), static application security testing (SAST), dynamic code analysis (DCA), and formatting checkers.

- Widely distribute storage and infrastructure - Replicating storage and compute across multiple zones or clouds or hybrid deployments can ensure greater resiliency and scalability.

Legacy networking infrastructure mismatches

However, each new paradigm comes with new problems.

Implementation of microservices within legacy infrastructure and “fortress with a moat” mindset (rather than “Zero Trust” and other security principles) creates these concerns:

A. When traffic is routed based on static IP addresses, traffic is sent blindly without identity authentication (a violation of “Zero Trust” mandates).

B. Traffic routing mechanisms (such as IPTables) were designed to manage external traffic, not traffic internally between services.

C. Mechanisms intended to secure external traffic (such as IPTables) are usually owned and managed for the whole enterprise by the Networking department. So when their mechanism is drafted for use to secure internal traffic, app services developers need to spend time requesting permissions for accessing IP addresses. And Network departments now spend too much time connecting internal static IP addresses for internal communications among services when many don’t consider it part of their job.

D. Due to lack of authentication (when using IP addresses), current routing has no mechanism for fine-grained permission policies that limit which operations (such as Read, Write, Update, Delete, etc.) are allowed. Such policies are needed to implement “Least Privilege” principles.

E. Also due to lack of authentication, current routing does not have the metadata to segment traffic in order to split a percentage of traffic to different targets for various types of testing.

DEFINITION: "Micro segmentation" is the logical division of the internal network into distinct security segments at the service/API level. Its use enables granular access control to, and visibility of, discrete service interface points. [Reference]

The segmentation that "East-West" (internal) Load Balancers with advanced "OSI Layer 7" capability (such as F5) can perform is more limited than what Consul can do with its more granular metadata about each service.

Not only that, Load Balancers are a single point of failure. So an alternative is needed which has been architected for (lower cost) resilience and high availability to failures in individual nodes, Availability Zones, and whole Regions.

F. To mitigate the network features lacking, many developers now feel they spend too much time coding network-related communication logic into each application program (for retries, tracing, secure TLS, etc.). When different developers use different techniques for that, errors occur which are difficult to track down.

Kubernetes a partial solution

Kubernetes (largely from Google) has been popular as an “orchestrator” to replace instances of pods (holding Containers) when any of them go offline.

However, core Kubernetes defaults currently have these deficiencies:

G. Kubernetes does not check if a service is healthy before trying to communicate with it. This leads to the need to code applications to handle time-outs, which is a distraction and usually not a skill most business application coders have.

H. Kubernetes does not encrypt communications between services.

I. Kubernetes does not provide a way to communicate with components and cloud services outside Kubernetes such as databases, ECS, other EKS clusters, Serverless, Observability platforms, etc. Thus, Kubernetes by default does not by itself enable deep transaction tracing.

J. Kubernetes is currently not mature when it comes to automatically adding more pods (to scale up) or removing pods (to scale down).

Legacy mismatches solved by Consul Editions

Consul provides a mechanism for connecting dynamic microservices with legacy networking infrastructure.

The list below sends you to how each edition of Consul solves the mismatches described above.

- Paid Enterprise for self-installed/managed on-prem or in private clouds

- SaaS in the HCP (HashiCorp Cloud Platform) in the HashiCorp-managed cloud

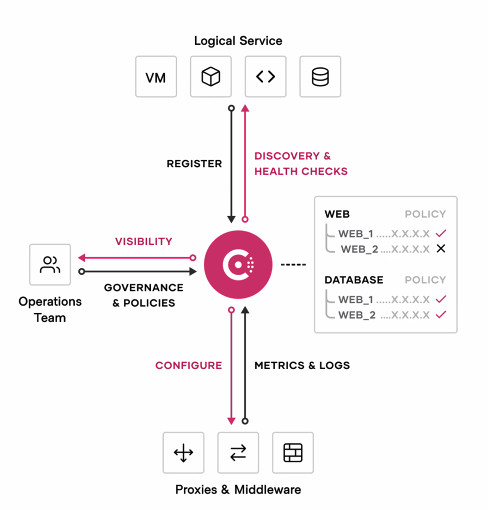

A common explanation of what Consul does references three technical categories:

“Consul is a datacenter runtime that provides 1) service discovery, 2) network segmentation, and 3) orchestration.”

This is explained here.

Consul Concepts in UI Menu

PROTIP: Here are Agile-style stories requesting use of HashiCorp Consul (written by me):

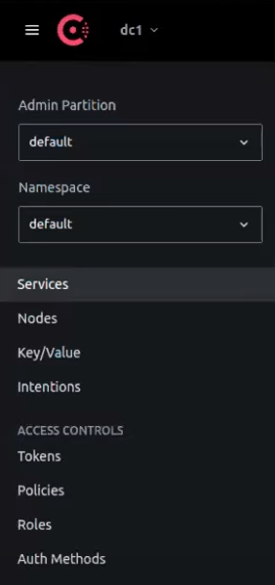

The Consul Enterprise edition menu can serve as a list of concepts about Consul:

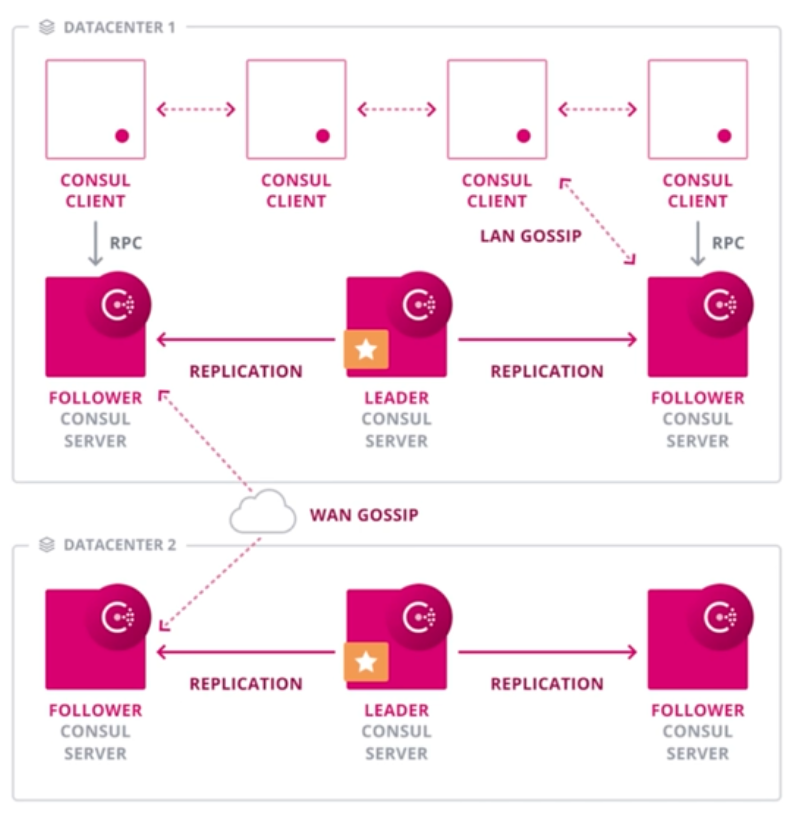

“dc1” is the name of a Consul “datacenter” – a cluster of Consul servers within a single region.

Multiple “Admin Partitions” and “Namespaces” are Consul Enterprise features.

Consul manages applications made available as Services on the network.

Nodes are Consul servers which manage network traffic. They can be installed separately from application infrastructure.

- Rather than A. blindly routing traffic based on IP addresses, which have no basis for authentication (a violation of “Zero Trust” mandates), Consul routes traffic based on named entities (such as “C can talk to A” or “C cannot talk to A”).

  Consul Enterprise can authenticate using several Authentication Methods.

- Rather than B. routing based on IPTables designed to manage external traffic, Consul routes from its list of “Intentions”, which define which other entities each entity (name) can access.

  Consul does an authentication handshake with each service before sending it data. A rogue service cannot pretend to be another legitimate service unless it holds a legitimate encryption certificate assigned by Consul. And each certificate expires, so Consul works to rotate them.
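The name-based rules described above (“C can talk to A”) can be pictured with a small model. This is only a sketch under stated assumptions: the `Intention` class, the `is_allowed` function, and the simplified precedence rule (exact names beat the `*` wildcard) are illustrative and are not Consul's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intention:
    source: str       # service name, or "*" for any source
    destination: str  # service name, or "*" for any destination
    allow: bool


def is_allowed(intentions, source, destination, default_allow=False):
    """Return True if `source` may call `destination`.

    Simplified precedence (an assumption of this sketch): an exact name
    beats the "*" wildcard, and the destination is compared before the
    source. With no matching intention, `default_allow` applies.
    """
    def specificity(intention):
        return (intention.destination != "*", intention.source != "*")

    matches = [
        i for i in intentions
        if i.source in (source, "*") and i.destination in (destination, "*")
    ]
    if not matches:
        return default_allow
    return max(matches, key=specificity).allow


rules = [
    Intention("*", "*", allow=False),  # deny everything by default
    Intention("C", "A", allow=True),   # "C can talk to A"
]
```

With these rules, `is_allowed(rules, "C", "A")` is true while any other pair falls through to the wildcard deny, which is the behavior a “Zero Trust” posture calls for.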

- Rather than C. manually creating a ticket for manual action by Networking people connecting internal static IP addresses, Consul discovers the network metadata (such as IP addresses) of each application service when it comes online, based on the configuration defined for each service. This also means that Network people spend less time on internal communications, freeing them up for analysis, debugging, and other tasks.
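To make “the configuration defined for each service” more concrete, here is a minimal sketch that builds a service definition with one HTTP health check. The field names follow the shape accepted by the agent's service registration endpoint (`PUT /v1/agent/service/register`); the service name `web`, port `8080`, and the `/health` path are hypothetical values chosen for illustration.

```python
import json


def service_definition(name, port, health_path="/health", interval="10s"):
    """Build a registration body for a service with one HTTP health check."""
    return {
        "Name": name,
        "Port": port,
        "Check": {
            # Hypothetical health URL on the same host as the service.
            "HTTP": f"http://localhost:{port}{health_path}",
            "Interval": interval,
        },
    }


# JSON text that could be sent as the request body to a local agent.
body = json.dumps(service_definition("web", 8080), indent=2)
```

Nothing is sent over the network here; the point is that a service announces its own name, port, and health check, so nobody has to hand-maintain an IP address list.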

Roles and Policies

- Consul’s Key/Value store holds a “service registry” containing ACL (Access Control List) policy entries which define what operations (such as Read, Write, Update, Delete, etc.) are allowed or denied for each role assigned to each named entity. This adds the fine-grained security functionality needed for “Zero Trust”.

  As Consul redirects traffic, it secures the traffic by generating certificates used to encrypt traffic on both ends of communication, taking care of automatic key rotation hassles, too. This mechanism is called “mTLS” (mutual Transport Layer Security).
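The idea of short-lived certificates that are rotated automatically can be sketched as a simple timing rule. This is an illustration only: the `should_rotate` function and the 50% renewal threshold are assumptions of this sketch, not Consul's documented rotation schedule.

```python
from datetime import datetime, timedelta, timezone


def should_rotate(not_before, not_after, now, renew_fraction=0.5):
    """Return True once `renew_fraction` of the certificate lifetime has passed.

    The 0.5 default is a hypothetical threshold for illustration.
    """
    lifetime = not_after - not_before
    return now >= not_before + lifetime * renew_fraction


issued = datetime(2024, 1, 1, tzinfo=timezone.utc)
expires = issued + timedelta(hours=72)
```

Renewing well before expiry means a stolen certificate is useful only for a short window, and services never have to stop to swap credentials by hand.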

- Instead of E. requiring a Load Balancer or application coding to split a percentage of traffic to different targets for various types of testing, Consul can segment traffic based on attributes associated with each entity. This enables more sophisticated logic than what traditional Load Balancers offer.

  Like F5, Consul can route based on various algorithms (“Round-robin”, “Least-connections”, etc.).

  That means Consul can, in many cases, replace “East-West” load balancers, removing load balancers (in front of each type of service) as a single-point-of-failure risk.
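The routing choices mentioned above (percentage splits, round-robin, least-connections) can be sketched in a few lines. The function names, the `v1`/`v2` targets, and the 90/10 weights are hypothetical; in a real mesh the sidecar proxies apply such policies, not application code.

```python
def weighted_target(splits, request_number):
    """Pick a target for the Nth request so traffic follows the weights.

    `splits` is a list of (target, weight) pairs whose weights sum to 100.
    """
    slot = request_number % 100
    upper = 0
    for target, weight in splits:
        upper += weight
        if slot < upper:
            return target
    raise ValueError("weights must sum to 100")


def round_robin(targets, request_number):
    """Cycle through targets in order."""
    return targets[request_number % len(targets)]


def least_connections(open_connections):
    """Pick the target that currently has the fewest open connections."""
    return min(open_connections, key=open_connections.get)


splits = [("v1", 90), ("v2", 10)]  # e.g. a canary test sending 10% to v2
counts = {"v1": 0, "v2": 0}
for n in range(1000):
    counts[weighted_target(splits, n)] += 1
```

The split here is deterministic so that the proportions are easy to verify; a production proxy would typically choose randomly according to the same weights.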

- With Consul, instead of F. developers spending too much time coding network communication logic in each program (for retries, tracing, secure TLS, etc.), networking can be managed by Consul and made visible in the Consul GUI.

  Consul is “platform agnostic”: because Consul is added as additional servers running in parallel within the same infrastructure, changes usually involve configuration rather than app code changes. Thus, Consul can connect/integrate services running both on on-prem servers and in clouds, inside and outside Kubernetes.

- Within the system, Consul obtains the health status of each app server so that traffic is routed only to healthy app services, a more aware approach than load balancers blindly routing (by Round-Robin, etc.).

Partial Kubernetes Remediation using Service Mesh

To overcome G. Kubernetes not checking if a service is healthy before trying to communicate, many are adding a “Service Mesh” to Kubernetes. Although several vendors offer the addition, “Service Mesh” generally means the installation of a network proxy agent (a “sidecar”) installed within each pod alongside app containers.

“Envoy” is currently the most popular Sidecar proxy. There are alternatives.

When app developers allow all communication in and out of their app through a Sidecar proxy, they can focus more on business logic rather than the intricacies of retries after network failure, traffic encryption, transaction tracing, etc.

Due to H. Kubernetes and Sidecars not encrypting communications between services, Consul is becoming a popular add-on to Kubernetes Service Mesh because it can add mTLS (mutual TLS, in which certificates authenticate both server and client and encrypt transmissions between them) without changes to application code.

Although G. Kubernetes does not check if a service is healthy before trying to communicate, Consul performs health checks and maintains the status of each service. Thus, Consul never routes traffic to known unhealthy pods. And so apps don’t need to be coded with complex timeouts and retry logic.
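A client can ask Consul for only the healthy instances of a service. The sketch below builds the URL for the health endpoint (`/v1/health/service/<name>` with the `passing` filter) and extracts addresses from a response. The sample payload is hand-written for illustration and includes only the fields this sketch reads; the node names and IP addresses are made up.

```python
import json


def health_url(service, base="http://127.0.0.1:8500"):
    # "?passing" asks for instances whose health checks are all passing.
    return f"{base}/v1/health/service/{service}?passing"


def endpoints(payload):
    """Extract (address, port) pairs from a health-endpoint JSON payload."""
    result = []
    for entry in json.loads(payload):
        svc = entry["Service"]
        # Fall back to the node address when the service has none of its own.
        address = svc.get("Address") or entry["Node"]["Address"]
        result.append((address, svc["Port"]))
    return result


# Hypothetical, abbreviated response for a service named "web".
sample = json.dumps([
    {"Node": {"Node": "node-1", "Address": "10.0.0.5"},
     "Service": {"Service": "web", "Address": "", "Port": 8080}},
    {"Node": {"Node": "node-2", "Address": "10.0.0.6"},
     "Service": {"Service": "web", "Address": "10.0.1.6", "Port": 8080}},
])
```

Because unhealthy instances never appear in the filtered result, the caller needs no timeout-and-retry logic just to discover which instances are down.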

Although I. Kubernetes does not provide a way to communicate with components and cloud services outside Kubernetes, Consul can dynamically configure sidecars such as Envoy to route or duplicate traffic to “Observability” platforms such as Datadog, Prometheus, Splunk, New Relic, etc., which perform analytics displayed on dashboards created using Grafana and other tools.

Paid Enterprise (Production) Features

Larger enterprises running in production need features for higher security, greater teamwork, and larger scalability than open-source can provide. Those additional features are provided by the Enterprise edition of Consul, which is self-installed and managed by customers.

- Namespaces

- Enhanced read scalability

- Network segments

- Redundancy zone

- Advanced federation for complex network topologies

- Automated backups

- Automated upgrades

- Audit Logging

- SSO Support

- Multi-Tenancy with Admin Partitions

Below are more details.

A. Authenticate using a variety of methods. In addition to ACL Tokens, use enterprise-level identity providers (such as Okta and GitHub, Kerberos with Windows, etc.) for SSO (Single Sign On) based on identity information maintained in email and other systems, so that additions, modifications, and deletions of emails get quickly reflected in Consul. Such immediacy is important to minimize the time when credentials are stale and thus available for compromise.

B. Automatic Upgrades (“Autopilot” feature) of a whole set of nodes at once – this avoids manual effort and eliminates periods when different versions run at the same time.

C. Enhanced Audit logging – to better understand access and API usage patterns. A full set of audit logs makes Consul a fully enterprise-worthy utility.

D. Policy enforcement using Sentinel extends the ACL system in Consul beyond the static “read”, “write”, and “deny” policies to support full conditional logic during writes to the KV store. It also integrates with external systems. For example:

- When ACL processing is disabled, the SOC team is alerted.

- When the consul agent is set to bind with all IP addresses on the open internet, a warning is issued.
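The two example rules above can be expressed as a small configuration audit. This is a Python analogy rather than Sentinel policy syntax; the `audit_agent_config` function is hypothetical, and it assumes the agent configuration has been loaded into a dictionary using the `acl.enabled`, `bind_addr`, and `client_addr` option names.

```python
def audit_agent_config(config):
    """Return a list of findings for risky agent settings."""
    findings = []
    if not config.get("acl", {}).get("enabled", False):
        findings.append("ALERT: ACL processing is disabled")
    for key in ("bind_addr", "client_addr"):
        if config.get(key) == "0.0.0.0":
            findings.append(f"WARNING: {key} listens on all interfaces")
    return findings


risky = {"acl": {"enabled": False}, "client_addr": "0.0.0.0"}
safe = {"acl": {"enabled": True}, "client_addr": "127.0.0.1"}
```

In this sketch `audit_agent_config(risky)` yields one alert and one warning, while `audit_agent_config(safe)` yields nothing; routing the alert to the SOC team is left to whatever notification system is in place.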

E. Enable Multi-Tenancy using “Admin Partitions” and “Namespaces” to segment data for different teams within a single Consul datacenter, a key “Zero Trust” principle to limit the “blast radius” from a potential compromise of credentials to a specific partition.

- https://learn.hashicorp.com/tutorials/consul/amazon-ecs-admin-partitions

- Consul on ECS & Admin Partitions Learn Guide

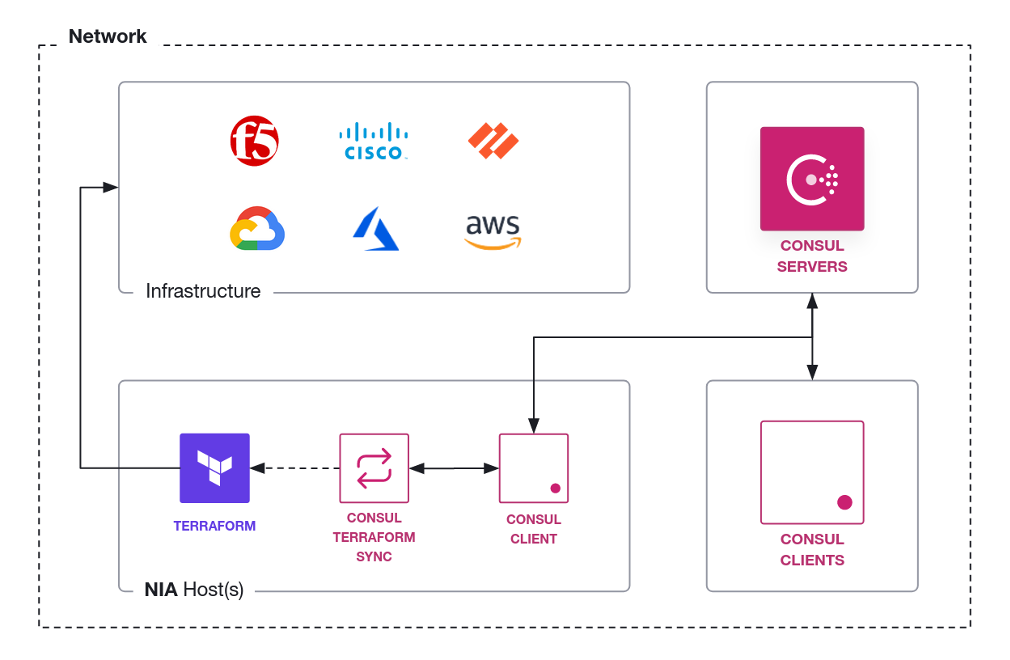

F. Consul can take automatic action when its metadata changes, such as notifying apps and firewalls, to keep security rules current (using CTS for NIA).

- The "consul-terraform-sync" (CTS) module broadcasts the changes it recognizes, which can be used to update Terraform code dynamically for automatic resource reconfiguration. This decreases the possibility of human error in manually editing configuration files and decreases the time to propagate configuration changes to networks.
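The core idea of reacting to catalog changes can be sketched as a diff between two snapshots of the service catalog. This is not how consul-terraform-sync is implemented; the `catalog_changes` function and the snapshot format (service name mapped to a set of `ip:port` strings) are assumptions made for illustration.

```python
def catalog_changes(before, after):
    """Compare two snapshots mapping service name -> set of "ip:port" strings."""
    changes = {}
    for name in sorted(before.keys() | after.keys()):
        old = before.get(name, set())
        new = after.get(name, set())
        if old != new:
            changes[name] = {
                "added": sorted(new - old),
                "removed": sorted(old - new),
            }
    return changes


before = {"web": {"10.0.0.1:80"}, "db": {"10.0.0.9:5432"}}
after = {"web": {"10.0.0.1:80", "10.0.0.2:80"}}
```

An automation tool could feed the resulting change set into a firewall or load balancer update, so rules follow the services instead of waiting for a manual ticket.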

G. Better Resiliency from scheduled Backups of Consul state to snapshot files – this makes backups happen without anyone needing to remember to run them manually.

H. Consul is designed for additional Consul servers to be added to a Consul Cluster to achieve enterprise-scale scalability. The performance scaling mechanism involves adding “Redundancy Zones” which only read metadata (as “non-voting” nodes).

- Large enterprises have up to 4,000 microservices running at the same time.

- “Performance begins to degrade after 7 voting nodes due to server-to-server Raft protocol traffic, which is expensive on the network.”

- Global Consul Scale Benchmark tests (below) proved Consul’s enterprise scalability.

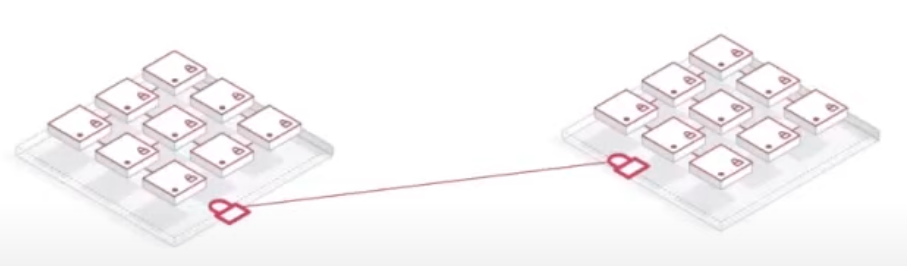
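The trade-off behind the voting-node guidance above follows from standard Raft majority arithmetic: a cluster of N voters needs a majority (quorum) to commit writes, so it tolerates the loss of N minus quorum voters. A minimal sketch (the function names are illustrative):

```python
def quorum(voters):
    """Smallest majority of `voters`."""
    return voters // 2 + 1


def failure_tolerance(voters):
    """How many voters can be lost while a majority remains."""
    return voters - quorum(voters)


# voters -> (quorum, failures tolerated)
table = {n: (quorum(n), failure_tolerance(n)) for n in (3, 5, 7)}
```

For 3, 5, and 7 voters this gives quorums of 2, 3, and 4 with tolerance for 1, 2, and 3 failures. Each extra pair of voters buys one more tolerated failure at the cost of more replication traffic, which is why read scaling is done with non-voting nodes instead.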

I. Consul Service Mesh (also called Enterprise “Consul Connect”) enables a Kubernetes cluster to securely communicate with services outside itself. Connect enables a Sidecar proxy in Kubernetes to reach an API Gateway (which acts like a K8s Sidecar proxy) surrounding stand-alone databases, ECS, VMs, Serverless, even across different clouds.

- As with HashiCorp's Terraform, because the format of infrastructure configuration across multiple clouds (AWS, Azure, GCP, etc.) is similar in Consul, less learning is needed for people to work on different clouds, which yields faster implementations in case of mergers and acquisitions which require multiple cloud platforms to be integrated quickly. VIDEO

J. Consul can be set up for Disaster Recovery (DR) from failure of an entire cloud Region. Consul has a mechanism called “WAN Federation” which distributes service metadata across regions to enable multi-region capability. Alternately, use

- Fail-over to a whole Region is typically set up to involve manual intervention by the SOC (Security Operations Center).

  Use of Consul Service Mesh with Health Checks enables automated failover.

  Multi-region redundancy using complex Network Topologies between Consul datacenters (with "pairwise federation") provides the basis for disaster recovery in case an entire region disappears.
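Automated failover driven by health checks can be pictured as walking an ordered list of datacenters and taking the first one that still has healthy instances. The `pick_datacenter` function and the datacenter names `dc1`, `dc2`, and `dc3` are hypothetical; this sketches the decision only, not Consul's failover mechanism.

```python
def pick_datacenter(healthy_counts, preference):
    """Return the first datacenter in `preference` with a healthy instance.

    `healthy_counts` maps datacenter name -> number of healthy instances.
    Returns None when no datacenter can serve the request.
    """
    for dc in preference:
        if healthy_counts.get(dc, 0) > 0:
            return dc
    return None


preference = ["dc1", "dc2", "dc3"]            # local region first
normal = {"dc1": 4, "dc2": 4, "dc3": 2}
region_outage = {"dc1": 0, "dc2": 4, "dc3": 2}
```

Under `normal` the local `dc1` is chosen; under `region_outage` traffic moves to `dc2` with no human in the loop, which is the difference from a SOC-driven manual failover.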

References:

- VIDEO: “Consul and Complex Networks”

- https://hashicorp-services.github.io/enablement-consul-slides/markdown/architecture/#1

- Consul’s network coordinate subsystem

Security Frameworks

This section provides more context and detail about security features of Consul.

There are several frameworks which security professionals use to organize controls they install to prevent ransomware, data leaks, and other potential security catastrophes. Here are the most well-known:

- Well-Architected Framework

- “Zero Trust” in CIA

- “Kill Chain”

- ATT&CK Enterprise Framework

- SOC2/ISO 27000 attestations

PROTIP: Within the description of each framework, links are provided here to specific features which Consul provides (as Security Mitigations).

Well-Architected Framework (WAF)

A “Well-Architected Framework” is referenced by all major cloud providers.

- https://wa.aws.amazon.com/wat.pillar.security.en.html

Security professionals refer to the “CIA Triad” for security:

- Confidentiality by limiting access

- Integrity of data that is trustworthy

- Availability for reliable access

Zero-trust applies to those three:

- Identity-driven authentication (by requester name instead of by IP address)

- Mutually authenticated – both server and client use a cryptographic certificate to

- Encrypt for transit and at rest (baked into app lifecycle via CI/CD automation)

- Each request is time-bounded (instead of long-lived static secrets to be hacked)

- Audited & Logged (for SOC to do forensics)

References:

- VIDEO: “The six pillars of Zero Trust”

- US NIST SP 800-207 defines “Zero Trust Architecture” (ZTA) at PDF: https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-207.pdf (50 pages)

- INSTRUQT: “Consul: Zero Trust Networking with Service Mesh”

The “Kill Chain” (created by Lockheed-Martin) organizes security work into the 7 stages of how malicious actors work.

Specific tools and techniques that adversaries use (on specific platforms) are organized within PDF: 14 goals in the “ATT&CK” Enterprise Matrix lifecycle from Mitre Corporation (a US defense think-tank) in 2013.

A comparison between the above:

| Kill Chain | Mitre ATT&CK | Mitigations |
|---|---|---|
| 1. Reconnaissance (harvesting) | Reconnaissance, Resource Development | Authentication |
| 2. Weaponization (exploit of backdoor into a deliverable payload) | Initial Access, Execution | mTLS |
| 3. Delivery (into victim) | Persistence, Privilege Escalation | Audit logs & Alerts |
| 4. Exploitation (of vulnerability) | Defense Evasion (Access Token Manipulation) | ACL |
| 5. Installation (of malware) | Credential Access, Discovery (System Network Connections Discovery), Lateral Movement (Exploitation of Remote Services, Remote Service Session Hijacking), Collection (Man-in-the-Middle) | Authorization |
| 6. Command and Control (remote manipulation) | Command and Control (Application Layer Protocol, Web Service, Dynamic Resolution) | Segmentation |
| 7. Actions on Objectives | Exfiltration, Impact | DLP (Data Loss Prevention) |

Mitigation Actions

Part of a Cloud Operating Model suite

Consul is part of the HashiCorp “Cloud Operating Model” product line which provides modern mechanisms for better security and efficiency in access and communication processes:

These products are collectively referred to as “HashiStack”.

Consul, Vault, and Boundary together provide the technologies and workflows to achieve SOC2/ISO27000 and “Zero Trust” mandates in commercial enterprises and within the U.S. federal government and its suppliers.

References:

- VIDEO Microservices with Terraform, Consul, and Vault

Zero Trust Maturity Model

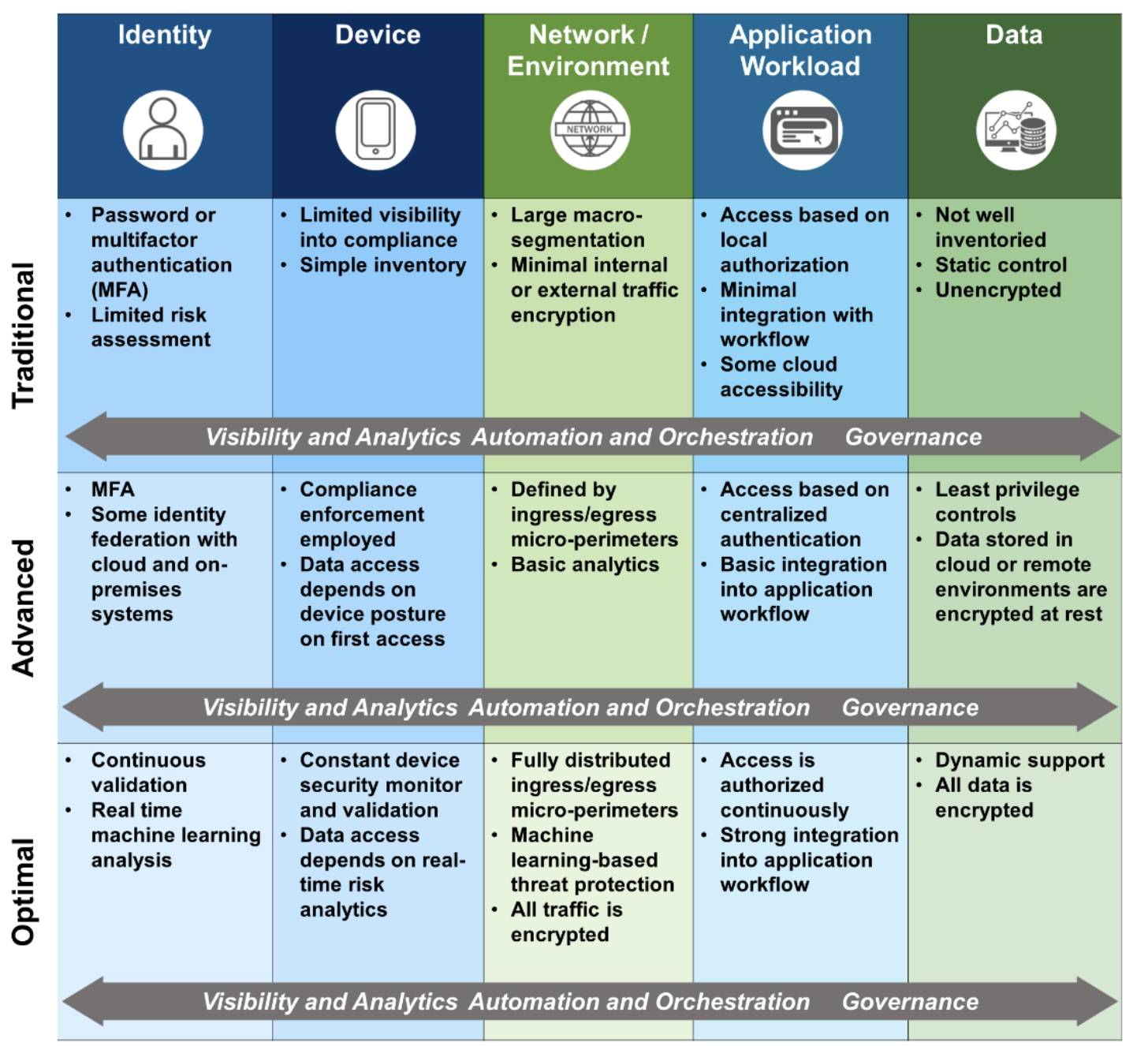

HashiCorp’s HashiStack is used by many enterprises to transition from “Traditional” to “Optimal”, as detailed by the US CISA “Zero Trust Maturity Model”:

Categories of “Defense in Depth” techniques listed in PDF: Mitre’s map of defense to data sources:

- Password Policies

- Active Directory Configuration

- User Account Control

- Update Software

- Limit Access to Resources Over Network

- Audit (Logging)

- Operating System Configuration

- User Account Management

- Execution Prevention

- Privileged Account Management

- Disable or Remove Feature or Program

- Code Signing

- Exploit Protection

- Application Isolation and Sandboxing

- Antivirus/Antimalware

- Filter Network Traffic

- Network Segmentation

- User Training

- SSL/TLS Inspection

- Restrict Web-based Content

Additionally:

- To prevent Lateral Movement (Taint Shared Content): Immutable deployments (no live patching to “cattle”)

- IaC CI/CD Automation (processes have Security and Repeatability baked-in, less toil)

- Change Management using source version control systems such as Git clients interacting with the GitHub cloud

BOOK: Consul: Up and Running

Canadian Luke Kysow, Principal Engineer on Consul at HashiCorp, top contributor to hashicorp/consul-k8s, wrote in his BOOK: “Consul: Up and Running”:

“A small operations team can leverage Consul to impact security, reliability, observability, and application delivery across their entire stack —- all without requiring developers to modify their underlying microservices.”

Code for the book (which you need to copy and paste into your own GitHub repo) is organized according to the book’s chapters:

- Service Mesh 101

- Introduction to Consul

- Deploying Consul within K8s (in cloud or minikube for automatic port-forwarding) and on VMs

- Adding Services to the Mesh

- Ingress Gateways

- Security

- Observability

- Reliability

- Traffic Control

- Advanced Use Cases

There is also a Discord server for the book.

The above are used for showing Proof of Value (POV) from product/workflow adoption.

- https://www.consul.io/docs/intro

- https://learn.hashicorp.com/well-architected-framework

YouTube: “Getting into HashiCorp Consul”

VIDEO: Consul Roadmap – HashiConf Global 2021

Ways to setup Consul with demo infra

PROTIP: Become comfortable with the naming conventions used by the architecture, workflows, and automation by building several environments, in order of complexity:

By “use case” (Sales Plays):

A. There is a public demo instance of Consul online at:

https://demo.consul.io/ui/dc1/overview/server-status

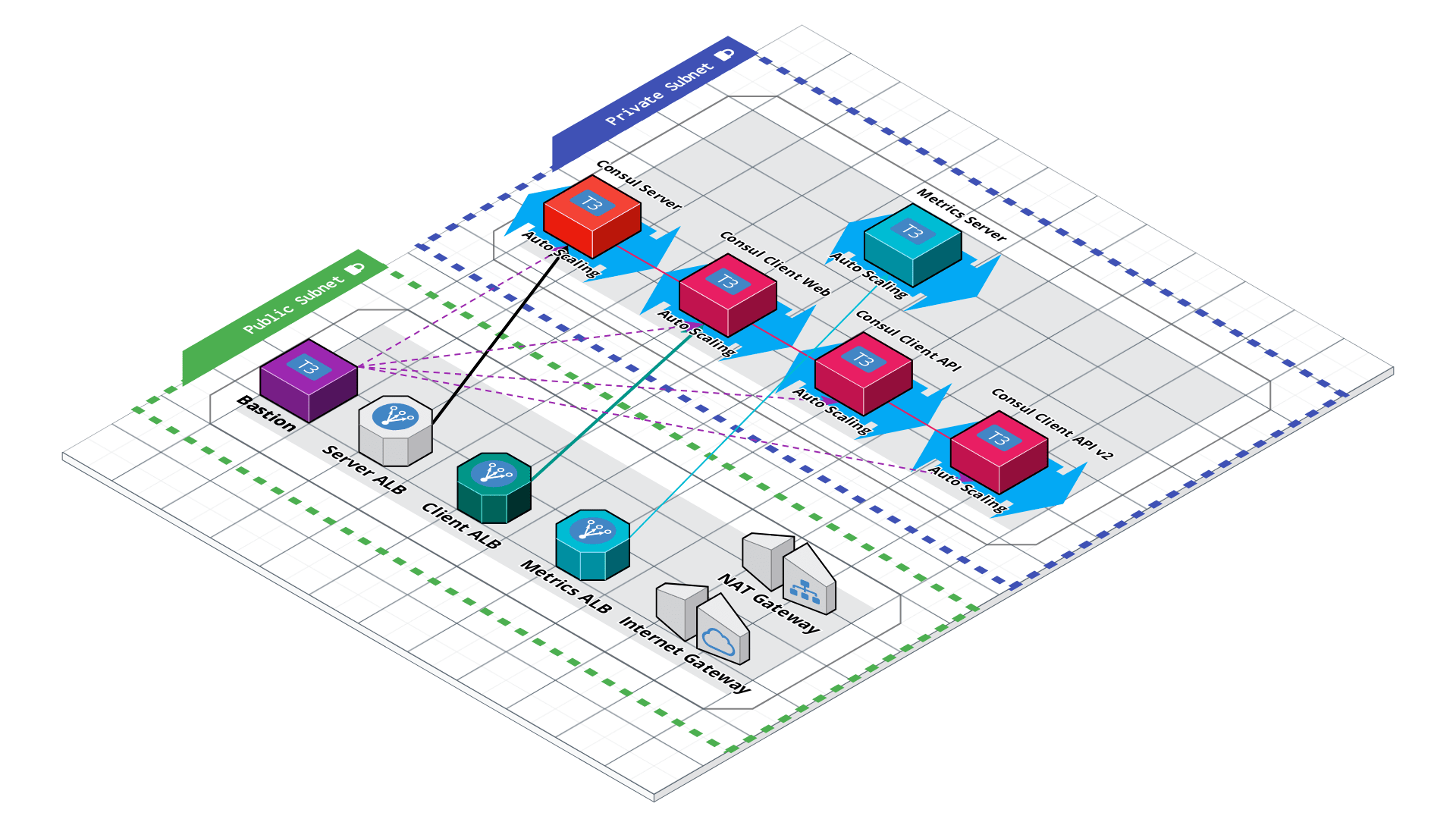

B. On HashiCorp’s Consul SaaS on the HCP (HashiCorp Cloud Platform):

- QUESTION: You can use Consul this way with just a Chromebook laptop???

- Use this to learn about creating sample AWS services in a private VPC using Terraform, creating an HCP account, cloud peering connections across private networks to HVN, day-to-day workflows on https://cloud.hashicorp.com/products/consul

- On AWS or Azure

C. On a macOS laptop, install Consul to learn the Consul Agent with two nodes (to see recovery from the loss of a single node):

- Use automation to install the Consul agent along with other utilities needed

- Use this to learn about basic CLI commands, starting/stopping the Agent, API calls, GUI menus using a single server within a Docker image

- Follow a multi-part video series on YouTube to install and configure 5 Consul nodes in 3 Availability Zones (AZs) within a single region, with app Gateways, Sidecar monitoring

E. In a single 6-node datacenter (with Nomad) to survive loss of an Availability Zone

- Use this to learn about manual backup and recovery using Snapshots and Enterprise Snapshot Agents

- Conduct Chaos Engineering by recovering from the failure of one Availability Zone

- Telemetry and Capacity proving to identify when to add additional Consul nodes

F. For multiple datacenters federated over WAN

- Use this to learn about configuring the Enterprise Autopilot feature for High Availability across multiple regions (which is a major differentiator of HashiCorp Consul), Chaos Engineering.

G. Integrations between K8s Service Mesh to outside database, ECS, VMs, mainframes, etc.

- Discovery to central service registry across several Kubernetes clusters

- Use this to learn about configuring HashiCorp Consul to work with a payment processor, to integrate with load balancers that aren’t Consul-aware, and to operate across the entire Enterprise landscape of technologies (another major differentiator of HashiCorp Consul)

Other demos:

- https://www.hashicorp.com/resources/getting-started-with-managed-service-mesh-on-aws First Beta Demo of HCP Consul Service Mesh on AWS.

Demo apps

PROTIP: Adapt the samples and naming conventions here to use your own app after achieving confidence you have the base templates working.

- VIDEO: 12-Factor Apps and the HashiStack by Kelsey Hightower (Google)

https://medium.com/hashicorp-engineering/hashicorp-vault-performance-benchmark-13d0ea7b703f

https://cloud.hashicorp.com/docs/hcp/supported-env/aws

https://github.com/pglass/202205-consul-webinar-demo

-

HashiCorp-provided demo apps included in the practice environments are defined at:

https://github.com/hashicorp-demoapp/

“Hashicups” from https://github.com/hashicorp-demoapp/hashicups-setups comes with a Go library.

-

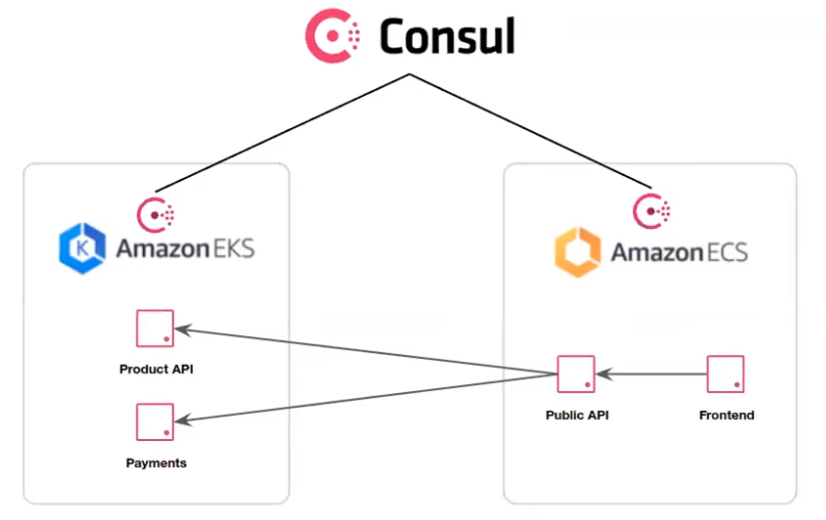

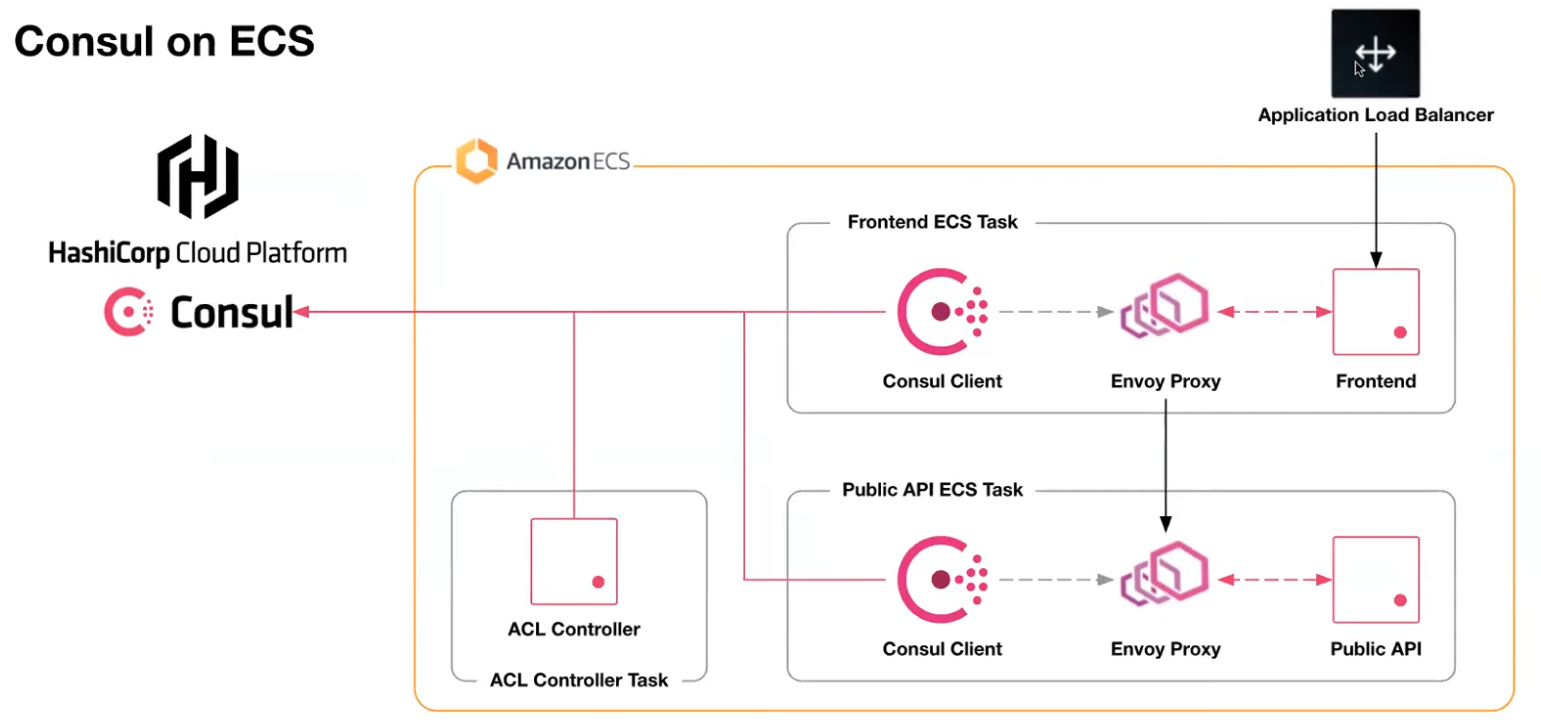

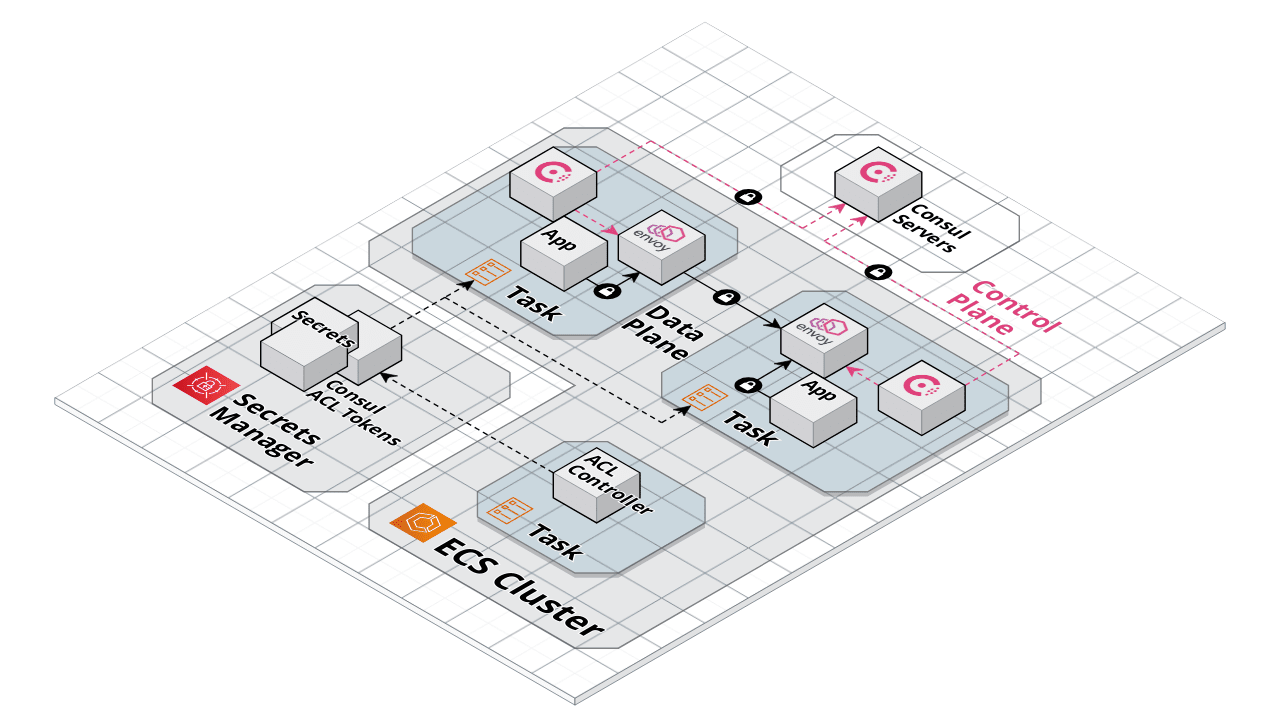

Consider the HashiCups datacenter which uses both ECS and EKS within AWS:

- Run the front-end services task within an ECS (Elastic Container Service) cluster

- Run the back-end services task within an EKS (Elastic Kubernetes Service) cluster

See VIDEO “Securely Modernize Application Development with Consul on AWS ECS” by Jairo Camacho (Marketing), Chris Thain, Paul Glass (Engineering)

-

Create the above environment by running Terraform ???

https://github.com/pglass/202205-consul-webinar-demo

https://github.com/hashicorp/terraform-aws-consul-ecs

-

Use HCP Consul for Service Mesh (without Kubernetes)

The Envoy proxy in Data Plane ???

Control Plane to Consul servers within HCP ???

Consul’s Layer 7 traffic management capabilities. ???

ACL Controller

The ACL (Access Control List) Controller is provided by HashiCorp for install within AWS.

To provide least-privilege access to Consul using Terraform and Vault: https://www.hashicorp.com/blog/managing-hashicorp-consul-access-control-lists-with-terraform-and-vault

Observability

REMEMBER: The Enterprise edition of Consul is a different binary from the OSS edition.

Terraform adds Datadog for Observability.

https://www.pagerduty.com/docs/guides/consul-integration-guide/ shows how to configure Consul-Alerts to trigger and resolve incidents in a PagerDuty service. PagerDuty is an alarm aggregation and dispatching service for system administrators and support teams. It collects alerts from monitoring tools, gives an overall view of all monitoring alarms, and alerts an on-duty engineer if there’s a problem. The Terraform PagerDuty provider is a plugin for Terraform that allows for the management of PagerDuty resources using HCL (HashiCorp Configuration Language).

Certification exam

Because this document aims to present concepts in a logical flow for learning, its order differs from the topic order of the Consul Associate exam (one hour, proctored, on-line, $70) at: https://www.hashicorp.com/certification/consul-associate

-

Explain Consul architecture

1a. Identify the components of Consul datacenter, including agents and communication protocols

1b. Prepare Consul for high availability and performance

1c. Identify Consul’s core functionality

1d. Differentiate agent roles

-

Deploy a single datacenter

2a. Start and manage the Consul process

2b. Interpret a Consul agent configuration

2c. Configure Consul network addresses and ports

2d. Describe and configure agent join and leave behaviors

-

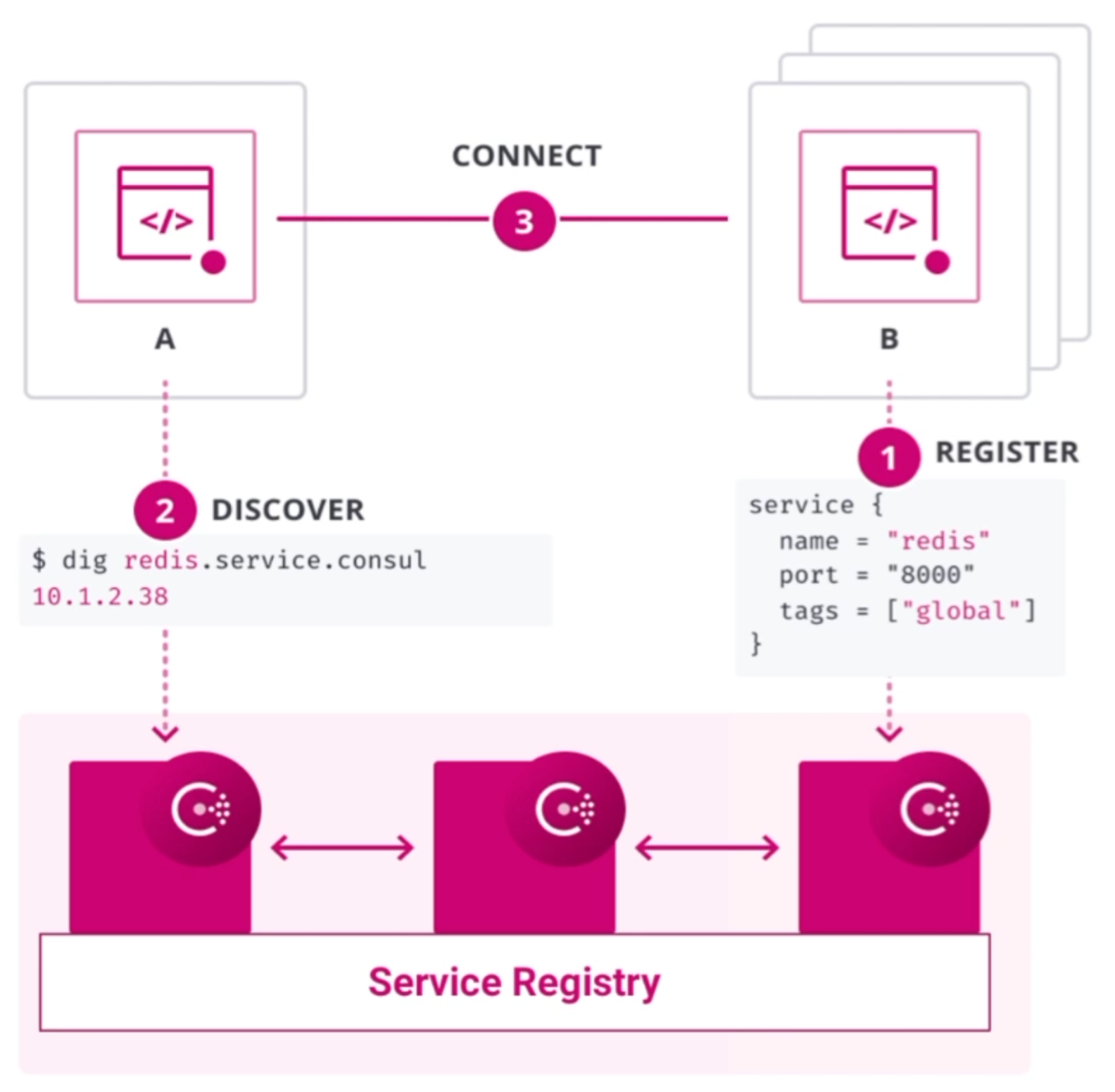

Register services and use Service Discovery [BK]

3a. Interpret a service registration

3b. Differentiate ways to register a single service

3c. Interpret a service configuration with health check

3d. Check the service catalog status from the output of the DNS/API interface or via the Consul UI

3e. Interpret a prepared query

3f. Use a prepared query

-

Access the Consul key/value (KV)

4a. Understand the capabilities and limitations of the KV store

4b. Interact with the KV store using both the Consul CLI and UI

4c. Monitor KV changes using watch

4d. Monitor KV changes using envconsul and consul-template

-

Back up and Restore [BK]

5a. Describe the content of a snapshot

5b. Back up and restore the datacenter

5c. [Enterprise] Describe the benefits of snapshot agent features

-

Use Consul Service Mesh

6a. Understand Consul Connect service mesh high-level architecture

6b. Describe configuration for registering a service proxy

6c. Describe intentions for Consul Connect service mesh

6d. Check intentions in both the Consul CLI and UI

-

Secure agent communication

7a. Understanding Consul security/threat model

7b. Differentiate certificate types needed for TLS encryption

7c. Understand the different TLS encryption settings for a fully secure datacenter

-

Secure services with basic access control lists (ACL)

8a. Set up and configure a basic ACL system

8b. Create policies

8c. Manage token lifecycle: multiple policies, token revoking, ACL roles, service identities

8d. Perform a CLI request using a token

8e. Perform an API request using a token

-

Use Gossip encryption

9a. Understanding the Consul security/threat model

9b. Configure gossip encryption for the existing data center

9c. Manage the lifecycle of encryption keys

Bryan Krausen provides links to discount codes for his Udemy course, “Getting Started with HashiCorp Consul 2022”, which has 8.5 hours of video recorded at Consul 1.7. It provides quizzes and a mind-map of each topic, and references https://github.com/btkrausen/hashicorp/tree/master/consul

Also from Bryan is “HashiCorp Certified: Consul Associate Practice Exam” three full exams of 57 questions each.

KodeKloud Q&A for HashiCorp Certification Courses

B. On HashiCorp’s Consul Cloud SaaS HCP (HashiCorp Cloud Platform)

- LEARN: “Create a HCP Consul cluster for an existing EKS run time”

- VIDEO: “Community Office Hours: HCP Consul with Terraform” with Daniele Carcasole (HC Ed.)

Perhaps the fastest and easiest way to begin using Consul is to use the HashiCorp-managed HashiCorp Cloud Platform (HCP) Consul Cloud. It provides a convenient clickable Web GUI rather than the CLI/API of FOSS (free open-source software).

HCP provides fully managed “Service Mesh as a Service (SMaaS)” Consul features not provided with the “self-managed” Enterprise edition. That means:

- Monitoring to ensure disk space, CPU, memory, etc. is already staffed

- Capacity testing to ensure configurations are made optimal by specialists

- No risk of security vulnerabilities introduced by inexperienced personnel

- Backups taken care of automatically

- Restores performed when needed

- Rest from on-going hassles of security patches and version upgrades

- Enable limited in-house IT personnel to focus on business needs.

- Faster time to value and time to market

On the other hand, as of this writing, HCP does not have all the features of Consul Enterprise.

References about HCP Consul:

- https://github.com/hashicorp/learn-hcp-consul

- https://github.com/hashicorp/learn-terraform-multicloud-kubernetes

- Part 12: HCP Consul [2:18:49] Mar 17, 2022

- HashiCorp’s 7 tutorials on HCP Consul:

- https://www.hashicorp.com/products/consul/service-on-azure

- announced Sep 2020

-

VIDEO: “Introduction to HashiCorp Cloud Platform (HCP): Goals and Components”

- VIDEO: “Service Mesh - Beyond the Hype”

-

hashicorp/consul-snippets Private = Collection of Consul snippets. Configuration bits, scripts, configuration, small demos, etc.

- https://github.com/hashicorp/field-workshops-consul = Slide decks and Instruqt code for Consul Workshops

- https://github.com/hashicorp/demo-consul-101 = Tutorial code and binaries for the HashiCorp Consul beginner course.

-

https://github.com/hashicorp/learn-consul-docker = Docker Compose quick starts for Consul features.

-

https://github.com/hashicorp/terraform-aws-vault A Terraform Module for how to run Consul on AWS using Terraform and Packer

-

https://github.com/hashicorp/hashicat-aws = A terraform built application for use in Hashicorp workshops

-

https://github.com/hashicorp/consul-template = Template rendering, notifier, and supervisor for @hashicorp Consul and Vault data.

-

https://github.com/hashicorp/consul-k8s = First-class support for Consul Service Mesh on Kubernetes, with binaries for download at https://releases.hashicorp.com/consul-k8s/

-

https://github.com/hashicorp/consul-replicate = Consul cross-DC KV replication daemon.

- hashicorp/learn-consul-kubernetes

-

https://github.com/hashicorp/learn-consul-service-mesh

-

https://github.com/hashicorp/consul-demo-traffic-splitting = Example application using Docker Compose to demonstrate Consul Service Mesh Traffic Splitting

-

hashicorp/consul-esm = External service monitoring for Consul

- https://github.com/hashicorp/terraform-aws-consul-starter = A Terraform module for creating an OSS Consul cluster as described by the HashiCorp reference architecture.

The Automated Way

- Obtain AWS account credentials with adequate permissions

- Create an AWS VPC and associated resources to be managed by additional Consul infra

- Identify your lb_ingress_ips used in the load balancer security groups, needed to limit access to the demo app.

- Configure kubectl

- Create a HashiCorp Platform (HCP) cloud account and organization

- Store secrets in a safe way

- Create a HashiCorp Virtual Network (HVN)

- Peer the AWS VPC with the HVN

- Create a HCP Consul cluster

- Configure Consul ACL Controller

- Run Consul clients within the provisioned AWS VPC

- Destroy Consul cluster and app infra under test

Obtain AWS account credentials

-

Obtain AWS credentials and populate environment variables (prefixed with AWS_):

    export AWS_ACCESS_KEY_ID="<your AWS access key ID>"
    export AWS_SECRET_ACCESS_KEY="<your AWS secret access key>"
    export AWS_SESSION_TOKEN="<your AWS session token>"

Alternately, copy and paste credentials in the ~/.aws/credentials file that every AWS CLI command references.

BTW If you are a HashiCorp employee, credentials are obtained from the “Doormat” website, which grants access to your laptop’s IP address for a limited time.
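Before running Terraform, it can help to confirm that the credentials described above are actually present in the shell. The following is a minimal sketch, not part of the tutorial repo: `require_env` is a hypothetical helper name, and it assumes temporary credentials (which is why AWS_SESSION_TOKEN is checked in the usage line).

```shell
# Report which of the named environment variables are unset or empty.
# Returns 0 when all are set, 1 otherwise.
require_env() {
  missing=0
  for name in "$@"; do
    # eval gives a portable indirect lookup of the variable called $name
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Possible usage: `require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN && aws sts get-caller-identity`, which asks AWS who the credentials belong to only after all three variables are found.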

Create resources within AWS

There are several ways to set up infrastructure in a cloud datacenter managed by Consul.

Instead of performing manual steps at https://learn.hashicorp.com/tutorials/cloud/consul-deploy, this describes use of Terraform to create a non-prod HCP Consul environment to manage an ECS cluster, and various AWS services:

-

Navigate to the folder where you keep cloned GitHub repos.

-

Do not specify --depth 1 when cloning (because we will check out a tagged release):

    git clone git@github.com:hashicorp/learn-consul-terraform.git
    cd learn-consul-terraform

-

Before switching to a release, get a list of the tags:

git tag

git checkout v0.5

-

Navigate to the folder within the repo:

cd datacenter-deploy-ecs-hcp

TODO: Study the Terraform specifications:

- variables.tf - Parameter definitions used to customize unique user environment attributes.

- data.tf - Data sources that allow Terraform to use information defined outside of Terraform.

- providers.tf - AWS and HCP provider definitions for Terraform.

- outputs.tf - Unique values output after Terraform successfully completes a deployment.

- ecs-clusters.tf - AWS ECS cluster deployment resources.

- ecs-services.tf - AWS ECS service deployment resources.

- load-balancer.tf - AWS Application Load Balancer (ALB) deployment resources.

- logging.tf - AWS Cloudwatch logging configuration.

- modules.tf - AWS ECS task application definitions.

- secrets-manager.tf - AWS Secrets Manager configuration.

- security-groups - AWS Security Group port management definitions.

- vpc.tf - AWS Virtual Private Cloud (VPC) deployment resources.

- network-peering.tf - HCP and AWS network communication configuration.

- hvn.tf - HashiCorp Virtual Network (HVN) deployment resources.

- hcp-consul.tf - HCP Consul cluster deployment resources.

See https://learn.hashicorp.com/tutorials/consul/reference-architecture for Scaling considerations.

https://learn.hashicorp.com/tutorials/consul/production-checklist?in=consul/production-deploy

-

Identify your IPv4 address (based on the Wi-Fi you’re using):

curl ipinfo.io

{ "ip": "129.222.5.194",

-

terraform.tfvars.example

-

Configure Terraform variables in a .auto.tfvars (or terraform.tfvars) file with, for example:

    lb_ingress_ips = "47.223.35.123"
    region = "us-east-1"
    suffix = "demo"

region - the AWS region where resources will be deployed. PROTIP: Must be one of the regions HCP supports for HCP Consul servers.

lb_ingress_ips - Your IP. This is used in the load balancer security groups to ensure only you can access the demo application.

suffix - a text value appended to the names of the resources created. This needs to be changed in each run because, by default, secrets deleted from AWS Secrets Manager are retained for a 30-day recovery window before they are actually removed. If this tutorial is destroyed and recreated with the same suffix, a name conflict error will occur for these secrets.
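The three variables above can be written to terraform.tfvars by a small script rather than by hand. This is a sketch under assumptions: `render_tfvars` and `unique_suffix` are hypothetical helper names, and the timestamp-based suffix format is only one way to avoid reusing a name; check that the resulting resource names stay within AWS length limits.

```shell
# Print terraform.tfvars content for the three variables described above.
# Arguments: <ingress IP> <AWS region> <suffix>
render_tfvars() {
  printf 'lb_ingress_ips = "%s"\n' "$1"
  printf 'region         = "%s"\n' "$2"
  printf 'suffix         = "%s"\n' "$3"
}

# Build a suffix that changes from run to run (month, day, hour, minute),
# so secrets still awaiting deletion do not collide with new ones.
unique_suffix() {
  printf 'demo-%s\n' "$(date +%m%d%H%M)"
}
```

Possible usage: `render_tfvars "$(curl -s ipinfo.io/ip)" us-east-1 "$(unique_suffix)" > terraform.tfvars`, where ipinfo.io/ip returns only the IP address as plain text.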

-

Run using terraform init

VIDEO: Try it:

-

In the folder containing main.tf, run terraform to initialize:

terraform init

Example response:

    Initializing modules...
    Downloading registry.terraform.io/hashicorp/consul-ecs/aws 0.2.0 for acl_controller...
    - acl_controller in .terraform/modules/acl_controller/modules/acl-controller
    Downloading registry.terraform.io/hashicorp/consul-ecs/aws 0.2.0 for example_client_app...
    - example_client_app in .terraform/modules/example_client_app/modules/mesh-task
    Downloading registry.terraform.io/hashicorp/consul-ecs/aws 0.2.0 for example_server_app...
    - example_server_app in .terraform/modules/example_server_app/modules/mesh-task
    Downloading registry.terraform.io/terraform-aws-modules/vpc/aws 2.78.0 for vpc...
    - vpc in .terraform/modules/vpc

    Initializing the backend...

    Initializing provider plugins...
    - Finding hashicorp/hcp versions matching "~> 0.14.0"...
    - Finding hashicorp/aws versions matching ">= 2.70.0, > 3.0.0"...
    - Installing hashicorp/hcp v0.14.0...
    - Installed hashicorp/hcp v0.14.0 (signed by HashiCorp)
    - Installing hashicorp/aws v4.16.0...
    - Installed hashicorp/aws v4.16.0 (signed by HashiCorp)

    Terraform has created a lock file .terraform.lock.hcl to record the provider
    selections it made above. Include this file in your version control repository
    so that Terraform can guarantee to make the same selections by default when
    you run "terraform init" in the future.

    Terraform has been successfully initialized!

    You may now begin working with Terraform. Try running "terraform plan" to see
    any changes that are required for your infrastructure. All Terraform commands
    should now work.

    If you ever set or change modules or backend configuration for Terraform,
    rerun this command to reinitialize your working directory. If you forget, other
    commands will detect it and remind you to do so if necessary.

-

In the folder containing main.tf, run terraform to preview the changes:

time terraform plan

-

If Sentinel or TFSec was installed:

tfsec

-

In the folder containing main.tf, run terraform to instantiate in AWS:

time terraform apply

After many minutes, the sample response ends with:

    Apply complete! Resources: 64 added, 0 changed, 0 destroyed.

    Outputs:

    client_lb_address = "http://learn-hcp-example-client-app-1643813623.us-east-1.elb.amazonaws.com:9090/ui"
    consul_ui_address = "https://dc1.consul.b17838e5-60d2-4e49-a43b-cef519b694a5.aws.hashicorp.cloud"

-

(optional) Configure kubectl

    aws eks --region $(terraform output -raw region) update-kubeconfig --name $(terraform output -raw local.eks_cluster_name)
    kubectl get pods -A

-

To access the Consul UI in HCP, print its URL and the bootstrap token, which can be used to log in to Consul:

    terraform output consul_public_endpoint_url
    terraform output consul_bootstrap_token

-

Access the demo application in ECS: print the URL for the demo application:

terraform output ecs_ingress_address
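Instead of copying the printed URL and token by hand, the Terraform outputs can be captured into the environment variables the Consul CLI reads (CONSUL_HTTP_ADDR and CONSUL_HTTP_TOKEN). This is a sketch only: `load_consul_env` is a hypothetical helper name, and the output names are those used in the commands above, which may differ between versions of the repo (run `terraform output` to list them).

```shell
# Export the Consul CLI's connection variables from Terraform outputs.
# Must be run in the folder holding the Terraform state.
load_consul_env() {
  CONSUL_HTTP_ADDR="$(terraform output -raw consul_public_endpoint_url)" || return 1
  CONSUL_HTTP_TOKEN="$(terraform output -raw consul_bootstrap_token)" || return 1
  export CONSUL_HTTP_ADDR CONSUL_HTTP_TOKEN
}
```

Possible usage: `load_consul_env && consul members`. The `-raw` flag prints the value without quotes, which makes it safe to assign directly.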

CTS for NIA

HashiCorp’s “Network Infrastructure Automation (NIA)” marketing page (consul.io/docs/nia) promises to scale better, decrease the possibility of human error when manually editing configuration files, and decrease overall time taken to push out configuration changes.

PROTIP: There are currently no competitors in the market for this feature.

LEARN: Network Infrastructure Automation with Consul-Terraform-Sync hands-on, which uses the sample counting service on port 9003 and dashboard service on port 9002, from https://github.com/hashicorp/demo-consul-101/releases

-

Intro (using terraform, Consul “consul-terraform-sync” CLI) 17 MIN

- Consul-Terraform-Sync Run Modes and Status Inspection task execution status using REST API. 9 MIN

- CTS and Terraform Enterprise/Cloud integration. 14 MIN

- Build a Custom CTS Module. 20 MIN

- Secure Consul-Terraform-Sync for Production. 13 MIN

- Partner Guide - Consul NIA, Terraform, and A10 ADC. 12 MIN

- Partner Guide - Consul NIA, Terraform, and F5 BIG-IP. 12 MIN

- Partner Guide - Consul NIA, CTS, and Palo Alto Networks. 12 MIN

References:

- https://www.consul.io/docs/nia/configuration

- https://www.consul.io/docs/nia/terraform-modules

- VIDEO by Kim Ngo & Melissa Kam.

- Part 13: Consul-Terraform-Sync

CTS (Consul-Terraform Sync) Agent is an executable binary (“consul-terraform-sync” daemon separate from Consul) installed on a server.

NOTE: HashiCorp also provides binaries for various back releases at

https://releases.hashicorp.com/consul-terraform-sync/

Notice the “+ent” for enterprise editions.

    brew tap hashicorp/tap
    brew install hashicorp/tap/consul-terraform-sync
    consul-terraform-sync -h

When the daemon starts, it also starts up a Terraform CLI/API binary locally.

See https://www.consul.io/docs/nia/configuration

CTS interacts with the Consul Service Catalog in a publisher-subscriber paradigm.

Consul acts as the central broker: CTS subscribes to changes in the Consul catalog, and those changes trigger Terraform runs. CTS can respond to changes in the Service Registry. CTS can also watch for changes in Consul’s KV (Key-Value) store.

When CTS recognizes relevant changes requiring action, it dynamically generates files that invoke Terraform modules. Thus, CTS can interact with Terraform Cloud Driver’s Remote Workspaces. Advantages of this:

- Remote Terraform execution

- Concurrent runs within Terraform using secured variables

- State versions, audit logs, run history with triggers and notifications

- Option for Sentinel to enforce governance policies as code

CTS is how changes can trigger automatic dynamic update of network infrastructure devices such as applying firewall policies, updating load balancer member pools, etc.

- VIDEO: CTS can update network devices that are not Consul-aware (not F5 or NGINX, which are).

- VIDEO: Network Automation on Terraform Cloud With CTS

- CTS is used to keep configurations up-to-date on Fortinet physical and virtual NGFW (Next-Generation FireWall)

- VIDEO: “Future of Service Networking”

CTS v0.3 was announced Sep 2021

References:

- VIDEO “Integrating Terraform with Consul”

- https://learn.hashicorp.com/tutorials/cloud/consul-end-to-end-ecs

Each task consists of a runbook automation written as a CTS compatible Terraform module using resources and data sources for the underlying network infrastructure. The consul-terraform-sync daemon runs on the same node as a Consul agent.

Alternative repo:

Consul Global Scale Benchmark

The largest-scale option is https://github.com/hashicorp/consul-global-scale-benchmark, which was used to prove that a Service Mesh Control Plane of 5 HashiCorp Consul Servers across 3 availability zones in us-east-1 is able to update 10,000 Consul/Nomad client nodes and 172,000+ services in under 1 second. Each Consul Server runs on a c5d.9xlarge EC2 instance having 36 vCPUs and 72 Gigabytes of memory. It’s described by the white paper “Service Mesh at Global Scale” and a podcast with its creator, Anubhav Mishra (Office of the CTO).

See also: https://github.com/hashicorp/consul-global-scale-benchmark = Terraform configurations and helper scripts for Consul Global Scale Benchmark

Identify Terraform repo in GitHub

To create the app infra which Consul works on, consider the

https://github.com/hashicorp-guides

Consistent workflows to provision, secure, connect, and run any infrastructure for any application.

* https://github.com/hashicorp-guides/hashistack

They reference 22 https://github.com/hashicorp-modules such as:

* https://github.com/hashicorp-modules/network-aws

Each module has an examples folder.

https://www.terraform.io/language/settings/backends/remote Terraform Remote State back-ends

https://github.com/hashicorp/field-workshops-consul/tree/master/instruqt-tracks/secure-service-networking-for-aws

a. https://learn.hashicorp.com/tutorials/cloud/terraform-hcp-consul-provider - it provisions resources that qualify under the AWS free-tier.

Files:

- consul.tf: describes the HCP Consul cluster you are going to create.

- vpc_peering.tf: describes the AWS VPC and the peering with the HVN.

- variables.tf: sets the variables for your deployment.

b. The following steps are based on https://learn.hashicorp.com/tutorials/cloud/consul-deploy referencing https://github.com/hashicorp/terraform-aws-hcp-consul which uses Terraform to do the below:

https://github.com/hashicorp/docker-consul = Official Docker images for Consul.

https://github.com/hashicorp/terraform-aws-hcp-consul is the Terraform module for connecting a HashiCorp Cloud Platform (HCP) Consul cluster to AWS. There are four examples containing default CIDRs for private and public subnets:

- existing-vpc

- hcp-ec2-demo

- hcp-ecs-demo

- hcp-eks-demo

- hcp-ec2-client - [For Testing Only]: installs Consul and runs Consul clients with EC2 virtual machines.

- hcp-eks-client - [For Testing Only]: installs the Consul Helm chart on the provided Kubernetes cluster.

- k8s-demo-app - [For Testing Only]: installs a demo application onto the Kubernetes cluster, using the Consul service mesh.

https://github.com/hashicorp/terraform-azurerm-hcp-consul

Hashicorp Cloud Account

-

Sign into: https://cloud.hashicorp.com/products/consul

- Verify your email if it’s your first time, or type your email.

- The first time, select the Registration Name (such as “wilsonmar-org”), country to create a new org.

-

You get $50! You can skip giving out your credit card until you want a PRODUCTION instance or use larger size node servers. For development use, an extra-small (XS) cluster size is deployed by default to handle up to 50 service instances.

-

Select Consul on the left product menu. Bookmark the URL, which contains your account ID so you’ll go straight to it:

https://portal.cloud.hashicorp.com/services/consul?project_id=…

- Click “Access control (IAM)” menu.

-

Click “Service principals” from the menu and specify the 3 examples below (with your name) for each of 3 roles with ID such as wilsonmar-123456@12ae4567-f584-4f06-9a9e-240690e2088a

- Role “Admin” (has full access to all resources including the right to edit IAM, invite users, edit roles)

- Role “Contributor” (Can create and manage all types of resources but can’t grant access to others.)

- Role “Viewer” (Can only view existing resources.)

PROTIP: Once logged in, a cookie is saved in the browser so that you will be logged in again automatically.

-

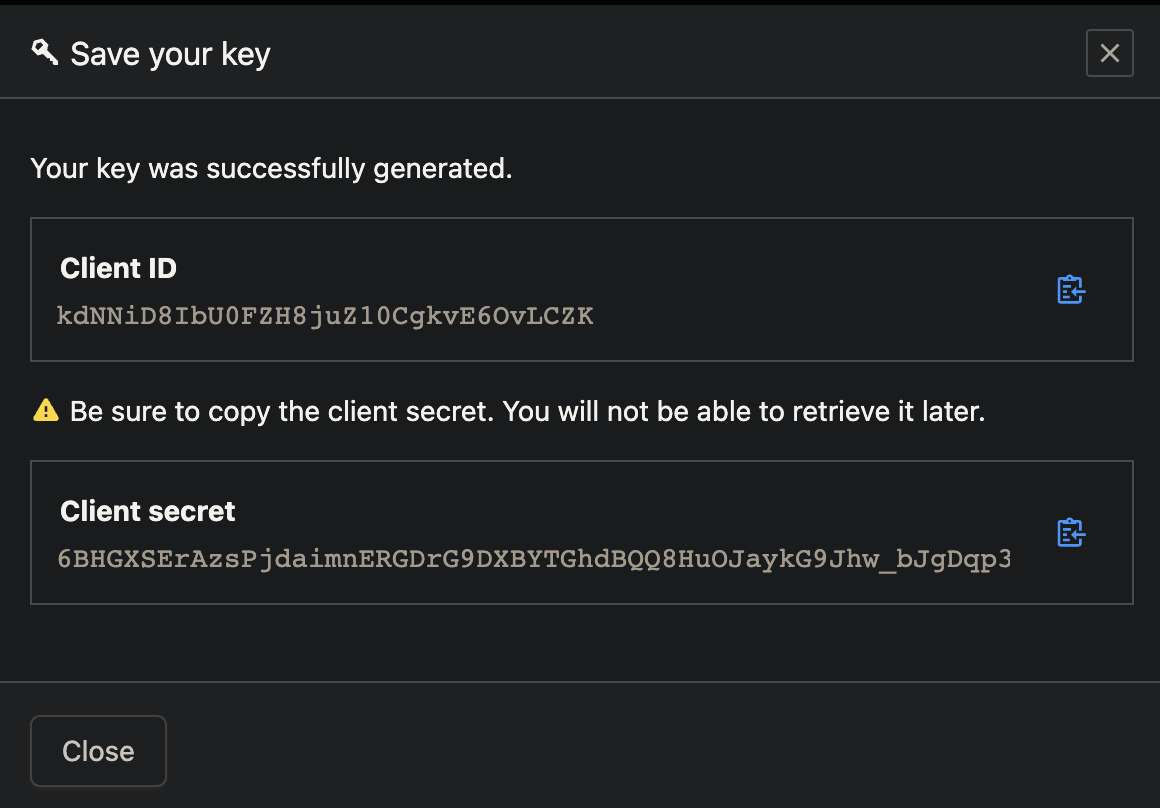

For each service principal, click the blue “Create service principal key”.

-

Click the copy icon to save each generated value to your Clipboard (for example):

    export HCP_CLIENT_ID=kdNNiD8IbU0FZH8juZ10CgkvE6OvLCZK
    export HCP_CLIENT_SECRET=hvs.6BHGXSErAzsPjdaimnERGDrG9DXBYTGhdBQQ8HuOJaykG9Jhw_bJgDqp35OkYSoA

Alternately, copy-paste the values directly into the provider config file:

    provider "hcp" {
      client_id     = "service-principal-key-client-id"
      client_secret = "service-principal-key-client-secret"
    }

CAUTION: The secret is not shown after you leave the screen.

Store secrets

-

In a file encrypted and away from GitHub, store secrets:

TODO: Use Vault to keep the above secrets secure (in a cloud).

For now, create file config

https://github.com/hashicorp/consul-guides = Example usage of HashiCorp Consul

(optional) Configure kubectl

    aws eks --region $(terraform output -raw region) update-kubeconfig --name $(terraform output -raw local.eks_cluster_name)
    kubectl get pods -A

Create a HashiCorp Virtual Network (HVN)

REMEMBER: Each resource in HCP can only be located in one HVN. You cannot span two different HVNs with a single product deployment, and product deployments cannot be moved from one HVN to another. Additionally, HVNs cannot be changed after they are deployed.

References:

- https://registry.terraform.io/providers/hashicorp/hcp/latest/docs/resources/hvn

Peer HVN to a AWS VPC

- https://registry.terraform.io/providers/hashicorp/hcp/latest/docs/resources/hvn

-

In the HVN overview page, select the Peering connections tab, and click the Create peering connection link.

-

Input the following information:

- AWS account ID

- VPC ID

- VPC region

- VPC CIDR (Classless Inter-Domain Routing) block

-

Click the Create connection button to begin the peering process.

Peering status begins at “Creating”.

-

Accept the connection at the AWS console.

-

Navigate to the Peering Connections area of your AWS Console.

You should have an entry in the list with a status of Pending Acceptance.

-

Click Actions -> Accept Request to confirm acceptance.

Status should change to “active”.

-

Once the HVN is deployed, the status updates to “Stable” on the HVN overview tab.

-

You can return to this screen to delete the peering relationship. However, deleting this peering relationship means you will no longer be able to communicate with your HVN.
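The console steps above for accepting the peering request could also be scripted with the AWS CLI. The following is a sketch, assuming the AWS credentials in use belong to the account that owns the VPC; `accept_pending_peerings` is a hypothetical helper name, and the VPC ID passed to it is your own.

```shell
# Accept every peering request in "pending-acceptance" that targets the
# given VPC. Prints each connection ID as it is accepted.
# Argument: <accepter VPC ID>
accept_pending_peerings() {
  ids="$(aws ec2 describe-vpc-peering-connections \
          --filters Name=status-code,Values=pending-acceptance \
                    "Name=accepter-vpc-info.vpc-id,Values=$1" \
          --query 'VpcPeeringConnections[].VpcPeeringConnectionId' \
          --output text)" || return 1
  for id in $ids; do
    aws ec2 accept-vpc-peering-connection \
      --vpc-peering-connection-id "$id" >/dev/null || return 1
    echo "accepted $id"
  done
}
```

Filtering on the accepter VPC keeps the function from accepting unrelated requests that happen to be pending in the same account.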

Create a HCP Consul cluster

- Enterprise Academy: Deploy a Consul Cluster (Configure, start, and validate high availability of a Consul Enterprise cluster).


-

Create Cluster: specify a cluster name (such as “consul-cluster-1”), Network ID (“hvn”), Region, and CIDR Block. 172.25.16.0/20 is the default CIDR block value.

IPv4 CIDR ranges used to automatically create resources in your cloud network are delegated to the HVN. The CIDR range you use cannot overlap with the AWS VPC that you will be peering with later.
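Whether two CIDR blocks overlap can be checked before creating the HVN. The following is a minimal sketch in POSIX shell arithmetic (IPv4 only, no input validation); the function names are hypothetical. Each block is converted to its first and last address as integers, and two blocks overlap when each one starts at or before the other's end.

```shell
# Convert a dotted-quad IPv4 address (e.g. 172.25.16.0) to an integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
}

# Print "first last" (as integers) for a CIDR block such as 172.25.16.0/20.
cidr_range() {
  addr=${1%/*}
  prefix=${1#*/}
  size=$(( 1 << (32 - prefix) ))
  base=$(ip_to_int "$addr")
  first=$(( base - base % size ))
  echo "$first $(( first + size - 1 ))"
}

# Succeed (exit 0) when the two CIDR blocks share at least one address.
cidr_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

For example, `cidr_overlap 172.25.16.0/20 172.25.0.0/16` succeeds (the default HVN block sits inside that /16), while `cidr_overlap 172.25.16.0/20 10.0.0.0/16` fails, so a VPC on 10.0.0.0/16 could be peered with the default HVN block.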

Enable a public or private IP

WARNING: A public IP makes the Consul UI and API conveniently available from anywhere in the public internet for development use. But it is not recommended for production because it is a less secure configuration.

Configure L3 routing and security ???

- Configure L3 routing and security

- Create a security group

- Create a route

- Define ingress and egress rules

https://learn.hashicorp.com/tutorials/cloud/terraform-hcp-consul-provider

Configure Consul ACL Controller

The Consul ACL Controller is added by Terraform code used to create other app VPC resources.

TODO: Auto-discovery?

Run Consul clients within the provisioned AWS VPC

-

Connect your AWS VPCs to the HVN so that the clients in your VPC can communicate with the HCP server after the next step.

-

Install Consul into those AWS VPCs.

This is not in Terraform code???

Run a demo application on the chosen AWS runtime

Destroy Consul

-

Destroy resources

TODO:

References about HVN (HashiCorp Virtual Network):

- https://cloud.hashicorp.com/docs/hcp/network

- https://learn.hashicorp.com/tutorials/cloud/consul-deploy

- https://learn.hashicorp.com/tutorials/cloud/terraform-hcp-consul-provider#hcp_consul_base

Service Discovery Workflow

- Instruqt: Consul F5 Service Discovery

- Enterprise Academy: Service Discovery (See how Consul’s Service Discovery feature works by connecting multiple services)

- Enterprise Academy: Service Discovery and Health Monitoring

HCP Consul Cloud Pricing

https://registry.terraform.io/providers/hashicorp/hcp/latest/docs

https://cloud.hashicorp.com/products/consul/pricing

https://cloud.hashicorp.com/docs/consul#features

| Plan | Base | + per svc instance hr | Limits |
|---|---|---|---|
| Individual Development | $0.027/hr, $20/mo | - | Up to 50 service instances. No uptime SLA. |
| "Standard" prod. | $0.069/hr, $49/mo | Small: $0.02/hr | SLA |
| "Plus" prod. | $0.104/hr | - | SLA, multi-region |

PROTIP: Assume a 5:1 Consul node to app services ratio.

C. On a macOS laptop using Docker

- https://learn.hashicorp.com/tutorials/consul/get-started-agent?in=consul/getting-started

- https://cloudaffaire.com/how-to-install-hashicorp-consul/

One Agent as Client or Server

PROTIP: The Consul executable binary is designed to run either as a local long-running client daemon or in server mode.

CAUTION: Do not use the manual approach of downloading release binaries from GitHub. To avoid the toil of configuring PATH, etc., see the install instructions below to use a package manager for each operating system (x86 and ARM):

* Homebrew (brew command) on macOS

* apt-get on Linux

* Chocolatey (choco command) on Windows

Work with the Consul Agent using:

- CLI (Command Line Interface) on Terminal sessions

- API calls using curl or within a custom program (written in Go, etc.)

- GUI (Graphic User Interface) on an internet browser such as Google Chrome

REMEMBER: Normally, there is no reason to SSH directly into Consul servers.

The UI and API are intended to be consumed from remote systems, such as a user’s desktop or an application looking to discover a remote service in which it needs to establish connectivity.

The API at /connect/intentions/exact provides the most features to create Service Intentions.
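As an illustration of that endpoint, the sketch below creates an “allow” intention between two services with curl. It assumes CONSUL_HTTP_ADDR holds a full base URL including the scheme (such as http://127.0.0.1:8500) and that CONSUL_HTTP_TOKEN holds an ACL token with intention write permission; the function names and the service names in the usage line are hypothetical.

```shell
# Build the URL for the exact-match intentions endpoint.
# Arguments: <Consul base URL> <source service> <destination service>
intention_url() {
  printf '%s/v1/connect/intentions/exact?source=%s&destination=%s\n' "$1" "$2" "$3"
}

# Create or update an intention allowing <source> to call <destination>.
# Arguments: <source service> <destination service>
allow_intention() {
  curl --silent --fail --request PUT \
    --header "X-Consul-Token: ${CONSUL_HTTP_TOKEN:-}" \
    --data '{"Action": "allow"}' \
    "$(intention_url "$CONSUL_HTTP_ADDR" "$1" "$2")"
}
```

Possible usage: `allow_intention web db`. Because the request is a PUT keyed on the source and destination pair, repeating it updates the existing intention rather than creating a duplicate.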

### Environment Variables

The shell script I wrote makes use of several custom environment variables, which minimizes mistakes when several commands use the same values. When applicable, my script also captures values output from one step to use in subsequent commands, to avoid the toil and mistakes from manual copy and pasting.

Use of environment variables also enables the same command call to be made for both DEV and PROD use, further avoiding mistakes.

- export DATACENTER1_ID="dc1" - or by default is obtained from my laptop’s $(hostname)

- CONSUL_AGENT_TOKEN

- export LICENSE_FILE="/etc/consul.d/consul.hclic"
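The “use the caller’s value, otherwise a default” behavior described above can be expressed with shell parameter expansion. This is a sketch of the idea, not an excerpt from the actual script; `set_consul_defaults` is a hypothetical function name.

```shell
# Keep any value already set by the caller; otherwise fall back to a default.
set_consul_defaults() {
  # Datacenter name defaults to this machine's hostname.
  DATACENTER1_ID="${DATACENTER1_ID:-$(hostname)}"
  # License path defaults to the location shown in the list above.
  LICENSE_FILE="${LICENSE_FILE:-/etc/consul.d/consul.hclic}"
  export DATACENTER1_ID LICENSE_FILE
}
```

Running `DATACENTER1_ID=dc1 set_consul_defaults` keeps “dc1”, while calling it with the variable unset falls back to the hostname, so the same script serves both DEV and PROD.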

### Install Consul Agent on Linux

Accordingly: TODO: Add signature verification.

    apt-get update

    # Install utilities curl, wget, jq:
    apt-get -y install curl wget software-properties-common jq

    curl -fsSL https://apt.releases.hashicorp.com/gpg | apt-key add -

    # Get version:
    lsb_release -cs

    # Add the official HashiCorp Linux repository:
    apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"

    # Install Consul Enterprise on the node:
    apt-get -y install consul-enterprise

### Install Enterprise Consul Agent on macOS

A different installer for Consul (with “+ent” in its name) contains Enterprise features. Both the FOSS and Enterprise editions of the Consul agent have the same binary name (“consul”), which makes for minimal impact when upgrading from FOSS to Enterprise.

But the Enterprise edition looks for a license file within configuration settings. The Enterprise edition is provided in the AMI of Consul on Amazon Marketplace, which charges $8,000 per year for up to 50 nodes and bronze support.

Homebrew does not have an installer for the enterprise edition of Consul.

-rwxr-xr-x 1 wilsonmar staff 127929168 Jun 3 13:46 /usr/local/bin/consul

PROTIP: I wrote a shell script to make it both safer and easier for you to install the Enterprise edition of Consul. It uses the GPG utility to ensure that what is downloaded is exactly what the author intended, as defined in signature files the author created at time of creation. The shell script follows the manual install steps, but adds automated checks before and after each step.

The shell script makes use of Homebrew’s brew command for other utilities.

https://github.com/wilsonmar/hashicups/blob/main/consul-download.sh

The command below runs the RAW format of the script.

- Use your mouse to triple-click zsh in the command below to highlight the line, then press command+C to copy it to your Clipboard:

TODO: https://raw.githubusercontent.com/wilsonmar/hashicups/main/consul-download.sh

zsh -c "$(curl -fsSL https://raw.githubusercontent.com/wilsonmar/mac-setup/main/mac-setup.zsh)" -v -I -U -consul

CAUTION: Do not click on the URL (starting with https) since the terminal program opens a browser to that URL.

-v specifies optional verbose log output.

-Golang specifies install of Go programming language development components

-I specifies -Install of utilities XCode CLI, Homebrew, git, jq, tree, Docker, and components in the HashiCorp ecosystem, including Terraform, Vault, Nomad, envconsul.

-U specifies -Update of utilities. Do not specify -I and -U after initial install (to save a few seconds).

Utilities for working with AWS, Azure, GCP, and other clouds require their own parameter to be specified in order to be installed.

When no version is specified, the script identifies the latest version and installs that. Alternately, a specific version can be specified.

- Press command+Tab to switch to the Terminal.app.

- Click anywhere in the Terminal window and press command+V to paste the command from your Clipboard.

- Press Return/Enter on your keyboard to begin execution.

- Skip to use CLI commands

Here is a description of the steps that script takes:

- Use a browser to view a list of releases:

https://releases.hashicorp.com/consul/

- Click one that is NOT “alpha” or “beta”, such as:

consul_1.12.2+ent

for https://releases.hashicorp.com/consul/1.12.2+ent/

- Click the “darwin” file, choosing “arm64” if your macOS laptop has an Apple Silicon M1/M2 chip:

consul_1.12.2+ent_darwin_arm64.zip
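The selection steps above can be sketched as shell functions. This is an illustration, not the script's actual code: the function names are hypothetical, and the version list is assumed to arrive one version per line (all of one edition) on standard input.

```shell
#!/bin/sh
# Sketch: pick the newest release that is not alpha, beta, or rc.
# Only plain X.Y.Z versions (optionally ending in +ent) are kept.
latest_stable() {
  grep -E '^[0-9]+\.[0-9]+\.[0-9]+(\+ent)?$' | sort -V | tail -n 1
}

# Map `uname -m` output to the architecture names used in release file names.
release_arch() {
  case "$1" in
    arm64|aarch64) echo arm64 ;;
    x86_64|amd64)  echo amd64 ;;
    *)             echo "$1" ;;
  esac
}

# consul_zip_name <version> <os> <arch>: build the zip file name.
consul_zip_name() {
  printf 'consul_%s_%s_%s.zip\n' "$1" "$2" "$3"
}
```

On an Apple Silicon laptop, `consul_zip_name 1.12.2+ent darwin "$(release_arch "$(uname -m)")"` would yield the file name shown above.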

Check SHA256SUM

File names containing “SHA256SUMS” are for verifying whether download was complete.

The steps below generate a hash of the downloaded file, then compare it with the hash generated by the author, also downloaded. Since even a one-bit difference in the zip file would generate a different hash, comparing the two hashes determines whether the file was corrupted.

HashiCorp provides 3 different hash files. See https://www.hashicorp.com/security

- Show the hash of files listed within the SHA256SUMS file:

cat consul_1.12.2+ent_SHA256SUMS

    dc7d0b536b2646812a3b6bea88648f9b0b6f9ec13a850ebc893383faf2267f1d  consul_1.12.2+ent_darwin_amd64.zip
    1213b93c6465de0c66de043bc3f7afa9934d5122e8f662cb76885a156af58a88  consul_1.12.2+ent_darwin_arm64.zip

- Select whether to use gpg instead of shasum.

See https://www.youtube.com/watch?v=4bbyMEuTW7Y

Use GPG

- Click to download the “.sig” file such as

consul_1.12.2+ent_SHA256SUMS.72D7468F.sig

BTW: “72D7468F” is described at “PGP Public Keys” within https://www.hashicorp.com/security

BTW: A detached signature file such as consul_1.12.2+ent_SHA256SUMS.sig is created using a command such as

gpg --detach-sign consul_1.12.2+ent_SHA256SUMS

(which outputs gpg: using “C7AF3CB20D417CAE08C03507A931D0E933B64F94” as default secret key for signing).

- Switch to Terminal.

- If you have not installed gpg, do so. Verify whether you have it installed:

where gpg

The desired response is:

/usr/local/bin/gpg

- Command (the signature covers the SHA256SUMS file, not the zip file):

gpg --verify consul_1.12.2+ent_SHA256SUMS.sig consul_1.12.2+ent_SHA256SUMS

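The response desired contains “Good signature”. If HashiCorp’s public key has not yet been imported into your gpg keyring, the response is instead:

    gpg: assuming signed data in 'consul_1.12.2+ent_SHA256SUMS'
    gpg: Signature made Fri Jun 3 13:58:17 2022 MDT
    gpg: using RSA key 374EC75B485913604A831CC7C820C6D5CD27AB87
    gpg: Can't check signature: No public key

“No public key” means gpg has no copy of the signer’s public key to check the signature against. Import the key described at “PGP Public Keys” within https://www.hashicorp.com/security, then run the verify command again.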

- Skip to use CLI commands
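The GPG check above can be wrapped in a small function. A sketch only, assuming HashiCorp's public key has already been imported into the gpg keyring. The function name and the exit code 2 for missing files are choices made for this illustration.

```shell
#!/bin/sh
# Sketch: verify the detached signature of a SHA256SUMS file.
# verify_sums_signature <sums-file> [<sig-file>]   (sig defaults to <sums-file>.sig)
verify_sums_signature() {
  sums_file="$1"
  sig_file="${2:-$1.sig}"
  for f in "$sums_file" "$sig_file"; do
    if [ ! -f "$f" ]; then
      echo "ERROR: missing file: $f" >&2
      return 2
    fi
  done
  # The signature covers the SHA256SUMS file, not the zip file.
  gpg --verify "$sig_file" "$sums_file"
}

# Example: verify_sums_signature consul_1.12.2+ent_SHA256SUMS
```

Once the signature on the SHA256SUMS file is good, the zip file itself still has to be checked against the hash listed in that file.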

Use shasum to check SHA256SUM

- Verify whether you have the program installed:

where shasum

- If the response is “/usr/bin/shasum”, download consul_1.12.2+ent_SHA256SUMS for use with the shasum utility.

- Generate a SHA-256 hash based on the zip file downloaded (without -a 256, shasum generates a SHA-1 hash):

shasum -a 256 consul_1.12.2+ent_darwin_arm64.zip

- Compare hashes:

shasum -a 256 -c consul_1.12.2+ent_SHA256SUMS

The zip file is not corrupted if you see:

consul_1.12.2+ent_darwin_arm64.zip: OK

Ignore lines containing “FAILED open or read” for the other files listed in the SHA256SUMS file.

- Unzip the zip file: within Finder, click the zip file to unzip it to yield file: consul (with no file extension).

- mv consul /usr/local/bin

- rm consul_1.12.2+ent_darwin_arm64.zip

- rm consul_1.12.2+ent_SHA256SUMS

- Skip to use CLI commands
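The checksum comparison above can be sketched as a function that checks only the line for the file actually downloaded, so the “FAILED open or read” lines for other platforms never appear. The function names are hypothetical. It uses sha256sum when present and otherwise falls back to shasum.

```shell
#!/bin/sh
# Sketch: check one downloaded file against its line in a SHA256SUMS file.

# Read "hash  filename" lines on stdin and verify them.
sha256_check() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum -c -
  else
    shasum -a 256 -c -
  fi
}

# verify_download <file> <sums-file>: succeeds only if the hash matches.
verify_download() {
  file="$1"
  sums="$2"
  # Keep only the line whose file name matches exactly.
  line="$(awk -v f="$(basename "$file")" '$2 == f' "$sums")"
  if [ -z "$line" ]; then
    echo "ERROR: $file is not listed in $sums" >&2
    return 1
  fi
  # The sums file lists bare file names, so check from the file's own folder.
  (cd "$(dirname "$file")" && printf '%s\n' "$line" | sha256_check)
}
```

For example, `verify_download ./consul_1.12.2+ent_darwin_arm64.zip ./consul_1.12.2+ent_SHA256SUMS && unzip consul_1.12.2+ent_darwin_arm64.zip` would unzip only after a matching hash.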

B. Install from Homebrew using brew

- brew search consul

    ==> Formulae
    consul               hashicorp/tap/consul ✔     hashicorp/tap/consul-template
    consul-backinator    hashicorp/tap/consul-aws   hashicorp/tap/consul-terraform-sync
    consul-template      hashicorp/tap/consul-esm   hashicorp/tap/envconsul
    envconsul            hashicorp/tap/consul-k8s   iconsur

    ==> Casks
    console

Install using Brew taps on macOS

In the script, the Consul Agent is installed using HashiCorp’s tap, as described at:

- https://learn.hashicorp.com/tutorials/consul/get-started-install?in=consul/getting-started

Instead of the usual:

brew install consul

the script uses:

brew tap hashicorp/tap

brew install hashicorp/tap/consul

Notice the response caveats from brew install consul:

    The darwin_arm64 architecture is not supported for this product
    at this time, however we do plan to support this in the future. The
    darwin_amd64 binary has been installed and may work in
    compatibility mode, but it is not fully supported.

    To start hashicorp/tap/consul now and restart at login:
      brew services start hashicorp/tap/consul
    Or, if you don't want/need a background service you can just run:
      consul agent -dev -bind 127.0.0.1
    ==> Summary
    🍺  /opt/homebrew/Cellar/consul/1.12.0: 4 files, 117.1MB, built in 3 seconds

-bind is the address of the interface that the Consul agent itself listens on.

-advertise is the address that the Consul agent asks others to use to connect to it. This is useful when the agent has multiple interfaces, or when it is reached through the IP of a NAT device.
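A small sketch of how the two flags combine on a host that sits behind NAT. The addresses 10.0.0.5 and 203.0.113.10 are placeholders, and the helper name is hypothetical. When no advertise address is given, the bind address is reused, which mirrors the agent's own default.

```shell
#!/bin/sh
# Sketch: print the agent command for a given bind and advertise address.
# agent_command <bind-addr> [<advertise-addr>]
agent_command() {
  bind_addr="$1"
  advertise_addr="${2:-$1}"   # advertise falls back to the bind address
  echo "consul agent -dev -bind $bind_addr -advertise $advertise_addr"
}

# Private interface 10.0.0.5, reached by others through NAT at 203.0.113.10:
agent_command 10.0.0.5 203.0.113.10
```

Printing the command first, rather than running it, gives a chance to review the addresses before starting the agent.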

Consul CLI commands

- Verify install:

consul version

Alternately:

consul --version

The response if the Enterprise (“+ent”) version was installed:

    Consul v1.12.2+ent
    Revision 0a4743c5
    Protocol 2 spoken by default, understands 2 to 3 (agent will automatically use protocol >2 when speaking to compatible agents)

The response if the Open Source version was installed:

    Consul v1.12.2
    Revision 19041f20
    Protocol 2 spoken by default, understands 2 to 3 (agent will automatically use protocol >2 when speaking to compatible agents)
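Since the “+ent” suffix is what distinguishes the two responses, a script can tell the editions apart from the first line of output. A minimal sketch; the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: report which edition a `consul version` output describes.
# Reads the command output on stdin.
consul_edition() {
  first_line="$(head -n 1)"
  case "$first_line" in
    "Consul v"*"+ent"*) echo "enterprise" ;;
    "Consul v"*)        echo "oss" ;;
    *)                  echo "unknown"; return 1 ;;
  esac
}

# Example: consul version | consul_edition
```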

- Obtain the menu of 31 command keywords listed alphabetically:

consul

(--help is not needed)

    Usage: consul [--version] [--help] <command> [<args>]

    Available commands are:
        acl            Interact with Consul's ACLs
        agent          Runs a Consul agent
        catalog        Interact with the catalog
        config         Interact with Consul's Centralized Configurations
        connect        Interact with Consul Connect
        debug          Records a debugging archive for operators
        event          Fire a new event
        exec           Executes a command on Consul nodes
        force-leave    Forces a member of the cluster to enter the "left" state
        info           Provides debugging information for operators.
        intention      Interact with Connect service intentions
        join           Tell Consul agent to join cluster
        keygen         Generates a new encryption key
        keyring        Manages gossip layer encryption keys
        kv             Interact with the key-value store
        leave          Gracefully leaves the Consul cluster and shuts down
        lock           Execute a command holding a lock
        login          Login to Consul using an auth method
        logout         Destroy a Consul token created with login
        maint          Controls node or service maintenance mode
        members        Lists the members of a Consul cluster
        monitor        Stream logs from a Consul agent
        operator       Provides cluster-level tools for Consul operators
        reload         Triggers the agent to reload configuration files
        rtt            Estimates network round trip time between nodes
        services       Interact with services
        snapshot       Saves, restores and inspects snapshots of Consul server state
        tls            Builtin helpers for creating CAs and certificates
        validate       Validate config files/directories
        version        Prints the Consul version
        watch          Watch for changes in Consul

Links have been added above.

CLI commands are used to start and stop the Consul Agent.

- Get a list that all fits on the screen by typing consul then pressing the Tab key on the keyboard:

    acl       event        keygen   logout    rtt       watch
    agent     exec         keyring  maint     services
    catalog   force-leave  kv       members   snapshot
    config    info         leave    monitor   tls
    connect   intention    lock     operator  validate
    debug     join         login    reload    version

The above appears only if ~/.zshrc or ~/.bashrc contains:

complete -o nospace -C /usr/local/bin/consul consul

That line is inserted to the correct file by:

consul -autocomplete-install
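A sketch for checking whether a startup file already carries that line before running the installer. The function name is hypothetical, and the pattern assumes the line has the form shown above.

```shell
#!/bin/sh
# Sketch: report whether a shell startup file already enables Consul completion.
# has_consul_completion <rc-file>
has_consul_completion() {
  rc_file="$1"
  [ -f "$rc_file" ] && grep -Eq '^complete .*-C .*consul consul$' "$rc_file"
}

# Example: has_consul_completion ~/.zshrc || consul -autocomplete-install
```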

Each command in the list above is defined by code within the GitHub repository at:

https://github.com/hashicorp/consul/tree/main/command

NOTE: Subcommand force-leave is in folder forceleave

In addition to the subcommand folders, that directory contains the folders cli, flags, and helpers.

Since Consul is written in the Go programming language, each command is processed by a Go language file in its folder.

The GUI is in JavaScript with Handlebars templating, SCSS, and Gherkin.

Consul Keyboard shortcuts

Below are the most commonly used command statements typed on Terminal.

PROTIP: If you find it tedious to repeatedly type out long commands every time, consider memorizing these keyboard shortcuts, defined as aliases in a file that executes every time your Terminal starts (https://github.com/wilsonmar/mac-setup/blob/master/aliases.zsh). You may change your alias key to anything that is not already used by another program.