A deep dive on how to orchestrate containers, especially in clouds, including OpenShift. Pass the CKAD and CKA exams.

Overview

- TL;DR My contribution

- Why Kubernetes?

- Index: Kubernetes Vocabulary

- Insider Knowledge

- Predecessor to K8s

- Distributions of Kubernetes

- CNCF Versions

- Kubernetes is complex

- What Kubernetes contributes

- Organize for Kubernetes as a Team Sport!

- Self-Healing Automation

- This article: Automation

- TL;DR Professional certifications in Kubernetes

- Kubernetes CSPs

- Cloud Playgrounds

- Hands-on Demos/labs

- Control Plane Orchestration within a Master Node

- Core K8s Internals

- Kubernetes Security Hardening

- Sidero Talos OS on Bare Metal

- Cloud K8s GUI & CLI

- CLI Setup

- On-premise

- Kubectl Commands

- Docker and Alternative Runtimes

- Inside each Node

- Networking

- Endpoints

- Aggregation Layer

- What’s CNCF?

- Container Orchestration?

- KCNA Exam

- CKAD Exam

- Exam Preparations

- Social media communities

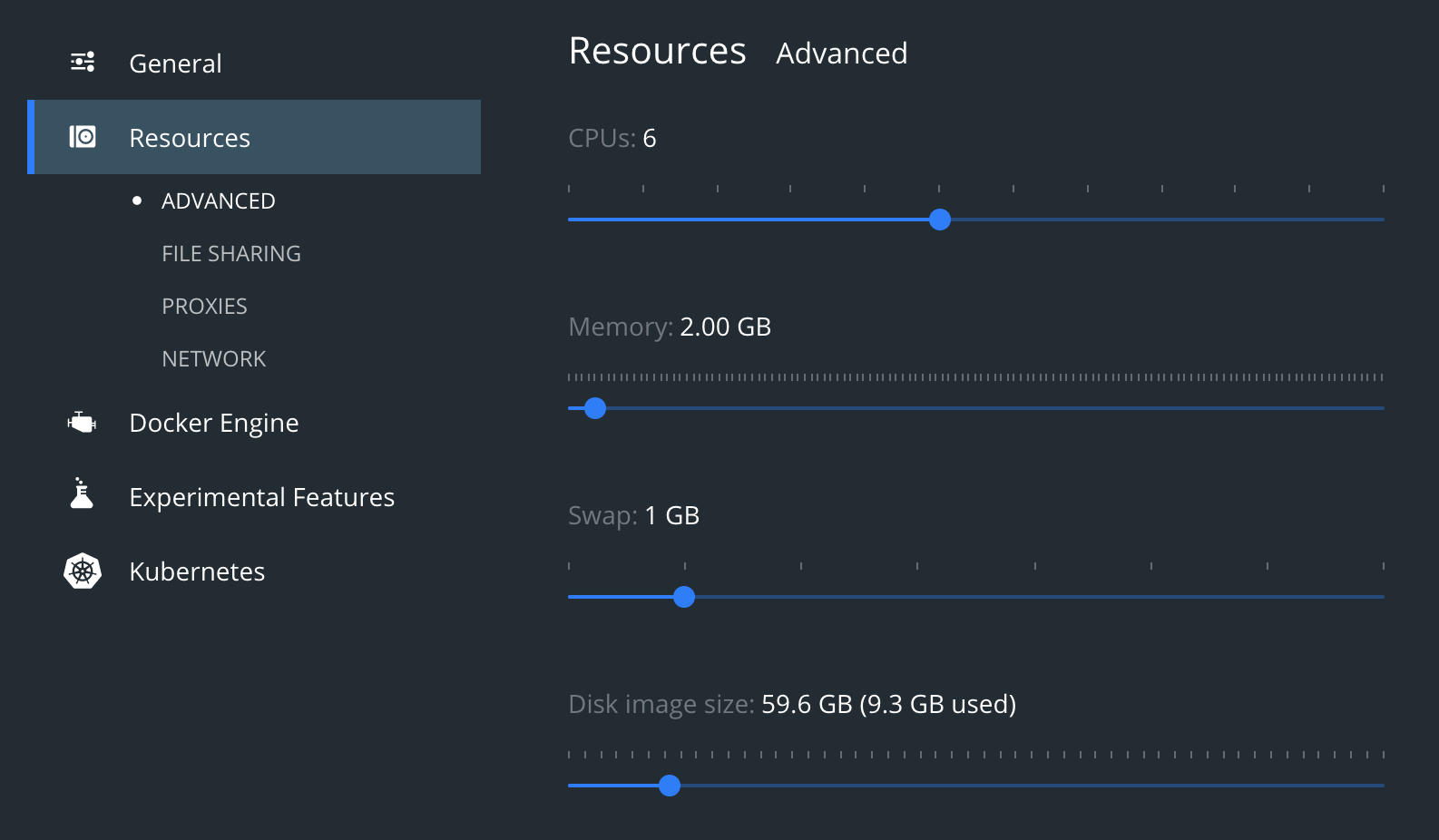

- MacOS Laptop Installation

- Docker Desktop install on macOS

- Prep standalone SSH client on macOS

- CRI = Container Runtime Interface

- Cluster Configuration

- Customize Terminal

- K command tips and tricks

- Declarative Kubernetes Commands

- Namespaces

- Namespaces

- imperative kubectl run command

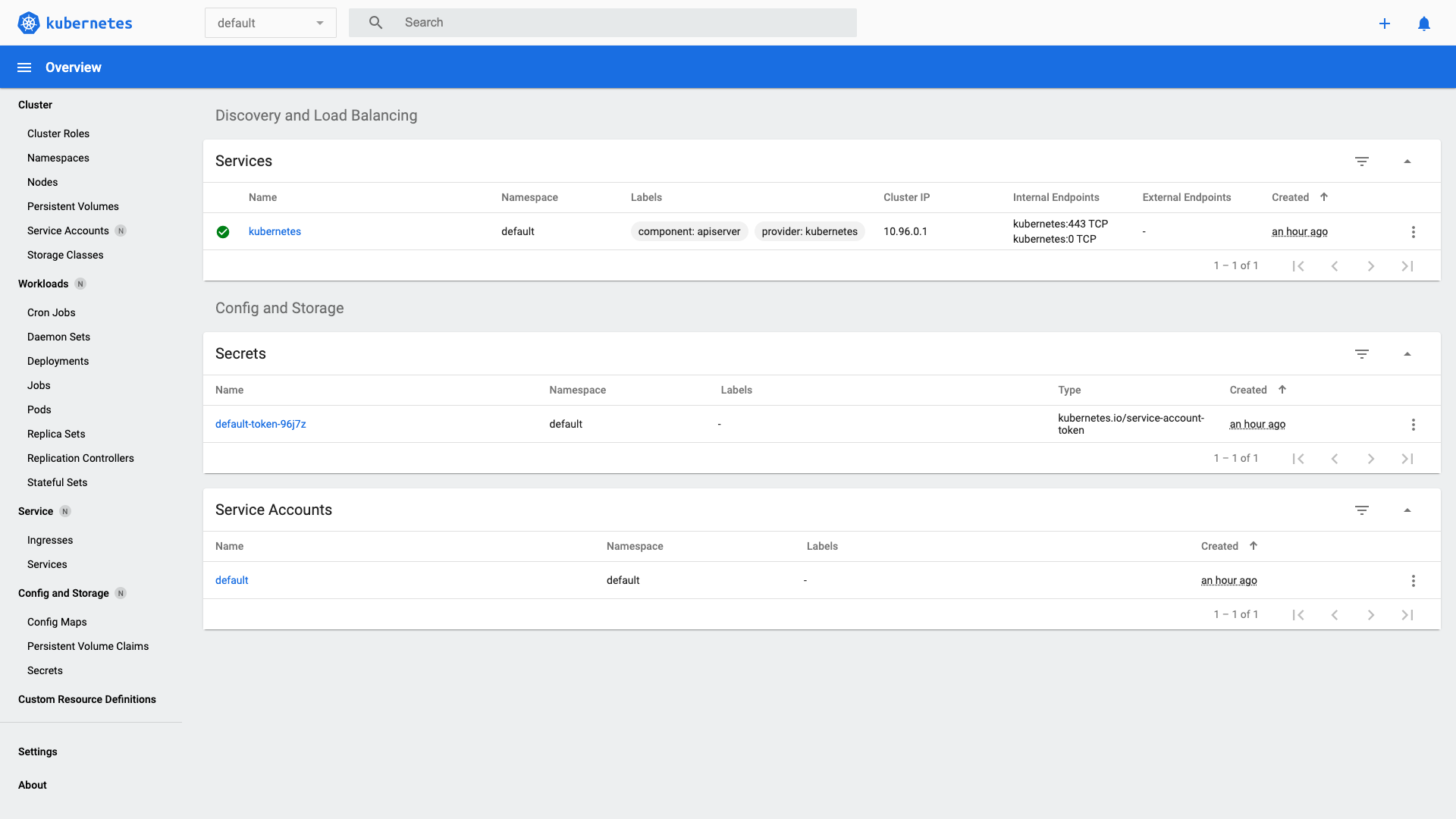

- Add-on Dashboard

- Declarative yaml

- OpenShift project wall namespaces

- Kustomize templating utility

- Jsonnet

- Dockerfile to Pod yaml correspondance

- Imperative one web server:

- Naked Pod command & yaml

- Declarative yaml

- kind: abbreviations

- CRD (Custom Resource Definitions)

- metadata:

- spec:

- Multi-Container Pods

- Controller objects

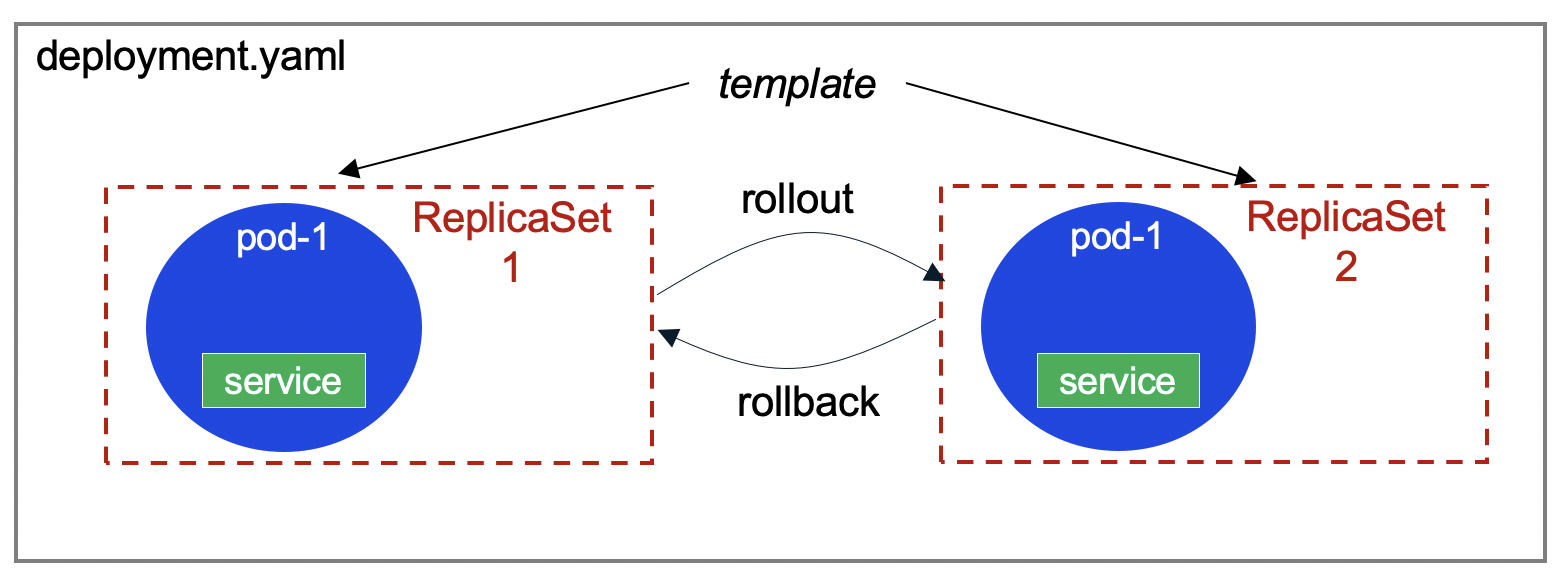

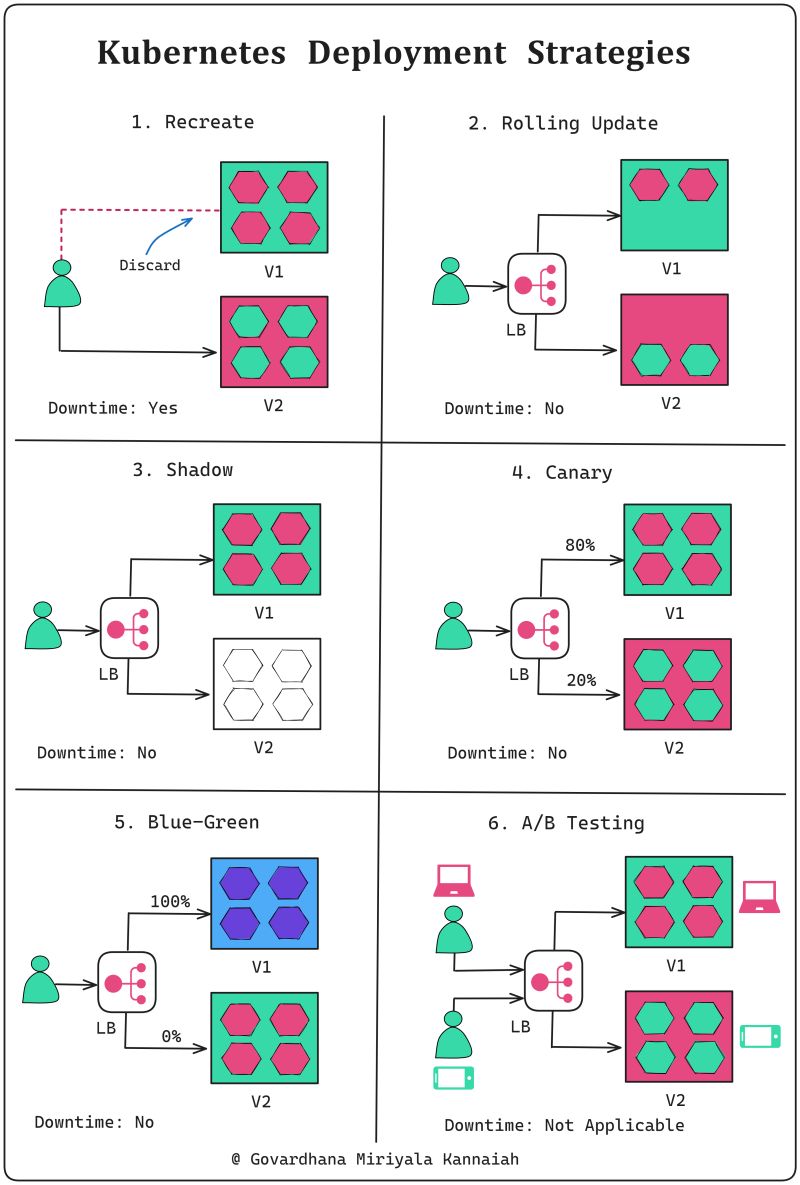

- Deployments

- Stateless apps

- Stateful Apps

- Stateful Sets

- DaemonSets (ds)

- svc = Services

- sa = ServiceAccounts

- Misc. List:

- Deleting Pods

- Plug-in manager

- Add-ons to Kubernetes

- Kapp

- netpol = NetworkPolicies

- HA Proxy cluster

- Autoscaler

- Health Checks

- Probes

- Multi-cloud

- GKS (Google Kubernetes Service)

- Google Kubernetes Threat Detection

- AWS CLI

- Microsoft’s Azure Kubernetes Service (AKS)

- Other Orchestration systems managing Docker containers

- Competing Orchestration systems

- Nodes

- Master Node (Control Plane)

- Volumes

- Taints and Tolerations

- Extract pod yaml from running podspec

- cAdvisor

- Testing K8s

- Installation options

- OS for K8s

- Architectural Details

- Admission Controller

- Container Storage Interface (CSI)

- Configmap

- Cloud Volumes (Geo-replicated)

- Storage Classes

- Sample micro-service apps

- Infrastructure as code

- pod.yml manifests

- Delete pods using selector

- Annotations

- Kubelet Daemonset.yaml

- Make your own K8s

- Kubeflow

- References

- Jobs for you

- Learning, Video and Live

- Kubernetes for Machine Learning

- Resources

- Secrets

- Debugging

- Blogs

- Production

- Quotas

- References

- Latest videos about K8s

- Video courses

- Rockstars

- Tutorials for sale

- Fun facts

- References

- Sample apps

- More on DevOps

TL;DR My contribution

Text here are my notes on how to use Kubernetes – a carefully sequenced presentation of complex material so it’s both easier to understand quickly yet more deeply.

NOTE: Content here are my personal opinions, and not intended to represent any employer (past or present). “PROTIP:” here highlight information I haven’t seen elsewhere on the internet because it is hard-won, little-know but significant facts based on my personal research and experience.

Why Kubernetes?

Kubernetes is called a “orchestration” system for automating application deployment, scaling, and management.

Kubernetes is needed to run multiple instances of an application on a single machine, and run multiple machines in a cluster. This is called horizontal scaling, typically in a server cloud such as AWS, Azure, GCP, etc.

Kubernetes manages Docker containers. That is because developers create their apps within Docker images for less “it works on my machine” issues of portability across environments. That is a huge time saver.

As my article on Docker describes, Docker images are much faster and smaller than VMware images previously used.

Index: Kubernetes Vocabulary

Below are technical terms and abbreviations you need to know, listed alphabetically in one page, with bolded words being abbreviations assigned by Kubernetes:

Admission Control, Annotations, APIs, API Server, apply, Auto-scaling, CKAD, Clusters, ClusterRoles, cm=configmaps, Contexts, Control Plane, Controllers, CRD (Custom Resource Definition), CronJobs, Declarative, Discovery, ds=DaemonSets, deployment/, ep=endpoints, Environment Variables, Expose, hashes, health checks, hpa=HorizontalPodAutoscaler, Imperative, Init Containers, Ingress, JSONPath, KEDA, Kind, Kubelet, kube-proxy, Labels, LoadBalancer, Logging, Metadata, ns=Namespaces, Networking, netpol=NetworkPolicies, no=Nodes, NodePort, OpenShift, po=Pods, Podspecs, Readiness Probes, Liveness Probes, Probes, Persistent Volumes, Port Forwarding, PVC (Persistent Volume Claim), Replication, Replicas, rs=ReplicaSets, Rollbacks, Rolling Updates, Secrets, Selectors, svc=Services, sa=ServiceAccounts, Service Discovery, sts=StatefulSets, Storage Classes, Teamwork, Taints, Tolerations, Vim (tool), Volumes, VPA, Workloads API

REMEMBER: Memorizing and using abbreviations while manually typing commands will save you much time.

They will come up during basic interview questioning.

Insider Knowledge

The English word “kubernetes” is from the ancient Greek word based on “to steer” for people who pilot cargo ships –- “helmsman” or “pilot” in English. That’s why Docker/Kubernetes experts are called “captains”. Docker Captains work together on github.com/docker-captains/

Associated products have nautical themes, such as Helm (the package manager for Kubernetes).

The registered trademark for the logo of a sailing ship’s wheel, and Kubernetes code, are owned and maintained by the Linux Foundation (founded in 2000). The Linux Foundation created CNCF in 2015 to advance container technology.

Kubernetes is often abbreviated as k8s (pronounced “kate”), with 8 replacing the number of characters between k and s. Thus, https://k8s.io redirects you to the home page for Kubernetes software:

Predecessor to K8s

Kubernetes was created inside Google (using the Golang programming language). Kubernetes was used inside Google for over a decade before being open-sourced in 2014 to the Cloud Native Computing Foundation (cncf.io) collective.

This blog and podcast revealed that the predecessor to Kubernetes was the “Project 7” which built “The Borg” because initial developers were fans of the “Star Trek Next Generation” TV series 1987-1994 and related films. In the series, the “Borg” society subsumes all civilizations it encounters into its “collective”.*

The logo for Kubernetes inside the 6 sided hexagons representing each Google service has 7 sides. This is because a beloved character in the U.S. TV series (played by Jeri Ryan) is a converted Borg called “7 of 9”.

See Timeline of Kubernetes

The logo for Kubernetes inside the 6 sided hexagons representing each Google service has 7 sides. This is because a beloved character in the U.S. TV series (played by Jeri Ryan) is a converted Borg called “7 of 9”.

See Timeline of Kubernetes

The Kubernetes community repo provides icon image files (resources) labeled and unlabeled, in png and svg formats in 128 and 256 pixels.

Distributions of Kubernetes

The “k0s” distribution, open sourced at https://docs.k0sproject.io/stable, is an “all-inclusive Kubernetes distribution, which is configured with all of the features needed to build a Kubernetes cluster and packaged as a single binary for ease of use. Due to its simple design, flexible deployment options and modest system requirements, k0s is well suited for Any cloud, Bare metal, Edge and IoT” because it has no host OS external runtime dependencies.

CNCF Versions

-

The complete list of CNCF-certified releases is where Kubernetes source code is open-sourced, at:

-

On that page, notice that Kubernetes uses Semantic Versioning:

- v1.0 (first commit by Joe Beda within GitHub) for first release on July 21, 2015

- v1.6 was led by a CoreOS developer

- v1.7 was led by a Googler

- v1.8 was led by Jaice Singer DuMars (@jaicesd) after Microsoft joined the CNCF July 2017 VIDEO

- v1.22 - containerD replaces Docker as the default container runtime (Red Hat uses CRI-O instead)

- version 1.28 introduces robust sidecar support and independent upgrade of Control Plane components.

- 1.34

-

Scroll down to see the release marked “Latest” as a green sausage.

-

Open another browser tab to get that “Latest” release at:

- On a Terminal CLI, get that release as a System Variable:

K8_LATEST_VER=$( curl -L -s https://dl.k8s.io/release/stable.txt ) echo $K8_LATEST_VER-L tells curl to follow redirects.

-s runs it in silent mode (no progress bar, only output).

Alternately,

K8_LATEST_VER=$( wget -qO- https://dl.k8s.io/release/stable.txt ) echo $K8_LATEST_VER-q runs quietly (no logs).

-O- directs the output to standard output (stdout).

-

On a Terminal CLI, get the latest stable release defined in a single-line file at either of two locations:

K8_VERSION=$( curl -sS https://storage.googleapis.com/kubernetes-release/release/stable.txt ) echo $K8_VERSION

-

Get the version from Google, which is usually less recent (such as v1.31.0):

https://storage.googleapis.com/kubernetes-release/release/stable.txt

Kubernetes is complex

The power and flexibility provided by Kubernetes means there is a lot to learn.

Additionally, Kubernetes is typically run within one or more clouds, which require considerable time to learn fully.

Ideally, those who use Kubernetes would have specialists pave the way at each of the necessary steps to adopting Kubernetes. But that’s not the case in most organizations.

The (now legacy Cloud Native Trail Map summarizes the sequence of Kubernetes adoption:

- Containerization

- CI/CD (automation)

- Orchestration & Application Definition

- Observability & Analysis

- Service Proxy, Discovery, Mesh

- Networking, Prolicy, Security

- Distributed database & storage

- Streaming & messaging

- Container Registry & runtime

- Software Distribution

There are dozens of projects and products in the Kubernetes ecosystem, as shown in this landscape map:

What Kubernetes contributes

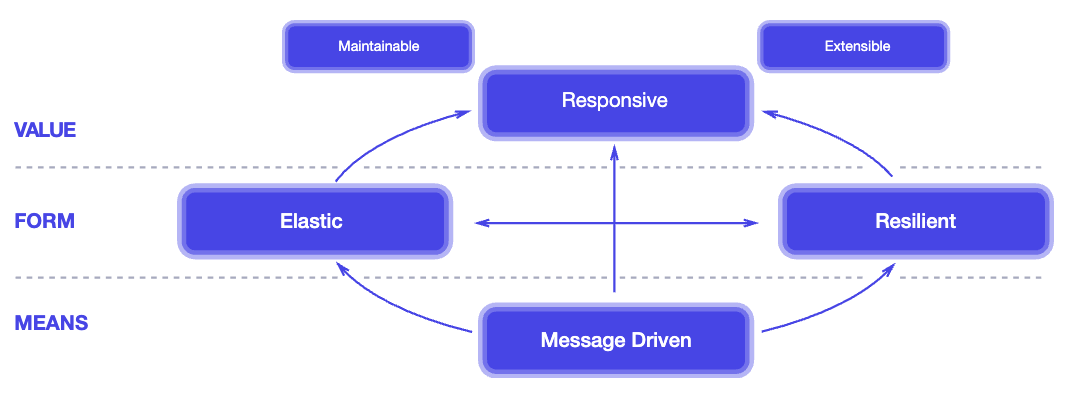

Kubernetes applies principles of the Reactive Manifesto of 2014:

- Infrastructure as code (IAC)

- Manage containers

- Naming and discovery

- Mounting storage systems

- Balancing loads

- Rolling updates

- Distributing secrets/config

- Checking application health

- Monitoring resources

- Accessing and ingesting logs

- Replicating application instances

- Horizontal autoscaling

- Debugging applications

Organize for Kubernetes as a Team Sport!

Kubernetes running in clouds has many moving parts that must be intricately configured and tuned to keep them reliable and secure.

Kubernetes was supposed to improve corporate agility and faster time to market.

However, most tutorials and courses are written to merely introduce individuals to learn Kubernetes basics rather than enabling a complex team to work together.

PROTIP: My assertion is that no one person can know everything about Kubernetes to operate it reliably and securely in a complex production enterprise. Hiring for superhumans expected to “know everything” is resulting in unnecessary turnover and down time.

PROTIP: Kubernetes in the cloud is so complex that most enterprises need to build a team of various specialists to ensure that Kubernetes is operated reliably and securely.

Kubernetes provides and enforces “significant decoupling between the layers of the serving stack: machine, operating system, application manager, and application code. This decoupling enables the development of specialized teams with agility and freedom to operate on their parts of the stack, thanks to separation of concerns:”

- hardware operations

- kernel operations

- cluster operations

- application operations

– From “How Kubernetes Changes Operations” by Brandan Burns.

BLAH: Most job descriptions for Kubernetes positions are written by people who don’t know Kubernetes enough to properly build a team around the complexity. So they search for people based only on technical certifications passed or based on counting years with “Kubernetes” in resumes.

Those who have a team exacerbate reliability by efforts to “protect” Kubernetes in production by limiting too-small a team of Operations specialists (separated from developers) who become a bottleneck to fast progress.

REMEMBER: Unlike other systems, in Kubernetes there are no “users”.

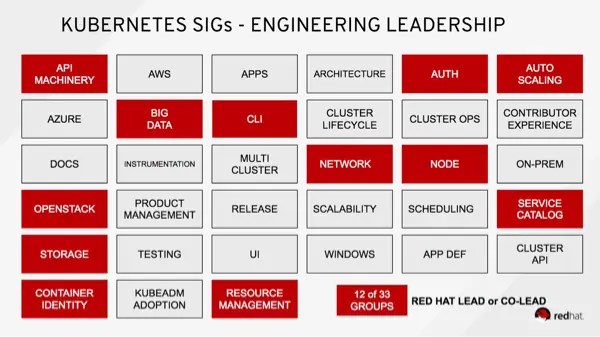

K8s API Special Interest Groups

Those who work on Kubernetes APIs are split into SIGs (Special Interest Groups), each with its own leadership structure. See https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.19/ (which is one big page):

-

Service APIs: Endpoints, Ingress, Service

-

Workloads APIs: Container, Job, CronJob, Deployment, StatefulSet, ReplicaSet, Pod, ReplicationController

-

Config and storage APIs: ConfigMap, CSIDriver, Secret, StorageClass, Volume

-

Metadata APIs: Controller, CRD, Event, LimitRange, HPA (HorizontalPodAutoscaler), PodDistributionBudget, …

-

Cluster APIs: APIService, Binding, CSR, ClusterRole, Node, Namespace, Lease, PersistantVolume -> HostPathVolume.

After Red Hat (within IBM) bought CoreOS, it bragged that its people lead “11 of 29” SIGS:

How to build a Kubernetes team

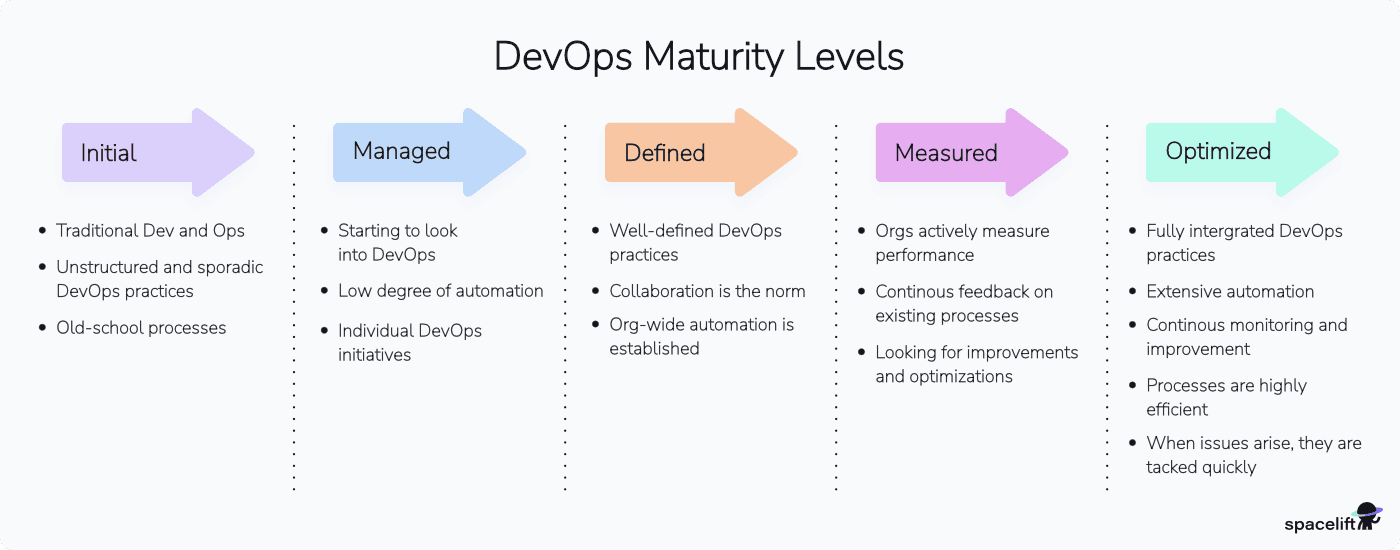

To achieve high maturity level:

-

Ensforce on-going use of a Team Calandar, Team KanBan, and Team file folders.

-

Schedule and hold sessions to sharing lessons learned and identify improvements as the only objective. Get a facilitator to enforce rules such as not criticizing ideas until it’s time.

This builds a culture that incentivizes helpfulness rather than competition and shaming.

-

Track unplanned work and categorize them by different dimensions in order to better allocate a budget instead of always being reactive (under seige). Having a list of projects enables better prioritization, and gives a complete picture for funding the team for 24/7 readiness that they are not just sitting around.

Assess the likelihood of its occurrence and the resulting level of impact if it does.

-

Identify barriers and dis-incentive for deep and well-rounded skills acquisition around Kubernetes and related skills. For example, work on defensive security is often neglected. A team that discusses lessons learned and innovations from other organizations can better avoid catastrophies.

Some like isolated Kubernetes skills because the shortage of Kubernetes skills has resulted in $300,000/year salaries for top Kubernetes jobs.

-

Form “buddy” sessions for paired work on problems together. This enables each to learn techniques that are learned through observation. Learnings are not just about debugging, but also optimal time management to maintain focus. This also what enables rotation among specialties so individuals are truely capable at many tasks.

-

Define a thorough on-boarding for those who join the team. Pre-install as much on each laptop as possible so work can begin immediately. This refines and standardizes tools and processes used by the team. Ensure joiners meet with everyone and can recall key info about the systems and others.

-

Clarify the basis for performance reviews, salary increases, and promotions – and what blocks advancement. Identify artifacts that individuals and teams can and should keep private (such as burndown charts, refactoring, automation, etc.).

-

Pay for on-line training and certifications to build knowledge and skills (in operating systems, security, networking, cloud, etc.). Vendors include KodeKloud.com. This helps build a “bench” of talent.

-

Managers track learning time and achievement versus budget for those they manage.

Many managers do not encourage skill advancement ahead of need because they are afraid of losing their people to other teams or companies. So this needs to be imposed on them by executives.

Many managers discourage skill advancement ahead of need to save money. This is reflected in hiring of contract workers (without benefits) who are not expected to be around long enough to learn.

-

Install full monitoring/observability tools and expose production analytics to everyone as the first step to develop understanding of how Kubernetes works. Evaluating what to use is a project. The “LGTM” stack is widely adopted because it’s fully open source, Kubernetes‑native, and flexible for self‑hosting or hybrid managed setups. LGTM stands for (Loki–Grafana–Tempo–Mimir/Prometheus): Loki (or OpenObserve) for logging, Grafana for visualization, Tempo (Jaeger) for tracing, Mimir and Prometheus for metrics. Others are Opentelemetry (OT) for instrumentation, SigNoz, Checkmk for Root Cause Analysis, OpenObserve, etc. Licensed options providing integrated functionality include Splunk, New Relic, Datadog, Dynatrace, Groundkover, Kloudfuse, etc. [Comparison] Opsgenie,

-

Define an error budget that matches mechanisms to establish redundancy. An enterprise which, every 3 months, redeploy everything in a different data enter in another region to ensure failover works, would have a smaller error budget. An error budget reflects the risks accepted by inaction. The great the risk, the more issues and longer downtime should be expected.

Expecting zero defects and no download without staffing for mechanisms described here is like turkeys burying their head in the sand. That leads to preventable turnover and unecessary office politics.

-

Maintain a duplicate environment to safely test making changes to production at scale. It’s needed to provide a “data-driven” approach to tune Kubernetes. For example, to ensure that the “thundering herd” problem does not occur when services come back up at scale.

This can be a waste of time if assets are not kept up to date with the latest version of Kubernetes and the latest versions of the many components that make up Kubernetes.

-

Compile playbooks of manual steps and tools in response to issues that occur, then automate them with self-healing tools that automatically apply fixes. VIDEO: Use n8n AI Article

-

Conduct regular “chaos engineering” exercises that purposefully injects faults to identify the timeliness and completeness of incident response. This is how the organization proves that it can handle problems, that alerts are issued based on trends and reach the appropriate people when needed.

Security people have defined their “Red Teaming” methodology, in which a team of testers deliberately probes the solution for weaknesses and attempts to produce harmful results. Although these are mandated by many audit requirements, this can be a waste of time if the exercises are not organized properly and results are hidden.

-

Construct “self-service” portals and utilities to simplify frequent processes and save developer’s time for common activities.

But portals can become “anti-patterns” because they can also limit innovation (new features) and block individual developers from learning to work quickly and troubleshoot independently.

Self-Healing Automation

One of the reasons for adopting Kubernetes is that it can automatically restore cluster health: * Looking at each level:

-

The kubelet service ensures containers are running, and restarts those that fail per the defined restartPolicy. Kubernetes automatically removes it from the Service’s endpoints. This ensures that traffic is routed only to healthy Pods.

-

If a node is running a Pod with a PersistentVolume (PV) attached, and the node fails, Kubernetes can reattach the volume to a new Pod on a different node.

However, if a persistent volume becomes unavailable (due to being full) or hardware failure, action outside of Kubernetes would be needed.

-

If a Pod in a Deployment or StatefulSet that is part of a DaemonSet fails, to maintain the specified number of replicas, the control plane creates a replacement Pod to run on the same node.

-

Application programming errors within underlying application issues must be addressed separately.

Add-on tools such as Komodor monitors it all and suggests actions, like a human expert would.

For “full node lifecycle management” to minimize downtime, reduce manual intervention, and improve security, the Devtron Resource Watcher detects and remediates common Kubernetes issues automatically in self-healing Kubernetes clusters created by Kured (Kubernetes Reboot Daemon), a CNCF Sandbox project. Kured manages node reboots safely after updates or failure detection, coordinating Pod rescheduling to minimize downtime.

Netdata stack provides real-time monitoring, anomaly detection, and automated alerts that can trigger remediation workflows with Ansible or Node-RED.

K9s Auto-Remediation Workflows automates recovery actions and integrates with observability tools for dynamic issue resolution.

This article: Automation

Because of the above issues, I am creating scripts that can, with one command, invoke a CI/CD workflow (on GitHub.com) that uses Terraform IaC and Sentinel PaC to stand up a Kubernetes cluster within AWS (after installing clients and establishing credentials), then identify the optimal Kunbernetes specifications by running tests of how quickly it takes Kubernetes to scale horizonatally and vertically.

But that’s just the beginning.

The contribution of this article is a carefully sequenced presentation of complex material so it’s both easier to understand quickly yet more deeply. “PROTIP” flags insightful commentary while hands-on activities automated in a shell script – an immersive step-by-step “deep dive” tutorial to both prepare for Kubernetes exams and to work as an SRE in production use.

TL;DR Professional certifications in Kubernetes

VIDEO:: If you’re here for advice on how to pass the KCNA, CKAD, here is my advice:

-

PROTIP: Instead of trying to memorize everything, during the test you’re given access only to Kubernetes official documentation, get used to navigating those set of pages to look stuff up. NOTE: There is support for other languages other than English. Foresake all other docs and utilities until after you pass (and get a real job using Kubernetes where you would consider Service Mesh, Kustomize, Jsonnet, etc.).

-

PROTIP: Instead of multiple choice questions, K8s exam consists of task-based practical responses while SSH’d into live clusters . So first minute into the exam, configure your Terminal with keyboard shortcuts (such as k instead of kubectl), and use command abbreviations.

-

PROTIP: Learn to be proficient at the text editor that come with Ubuntu Linux, which is vi.

-

PROTIP: Study using KodeKloud’s gamified hands-on troubleshooting pedagogy that moves you logically through 49 topics. Quizzes follow each topic. You can access it via Udemy.com.

-

Each exam includes one free fail retake.

Kubernetes CSPs

Kubernetes can run within private on-premises data centers on “bare metal” machines.

But being open-source has enabled Kubernetes to flourish on multiple clouds*

- ACK = Alibaba Cloud Kubernetes

- AKS = Azure Kuberntes Service using az aks commands

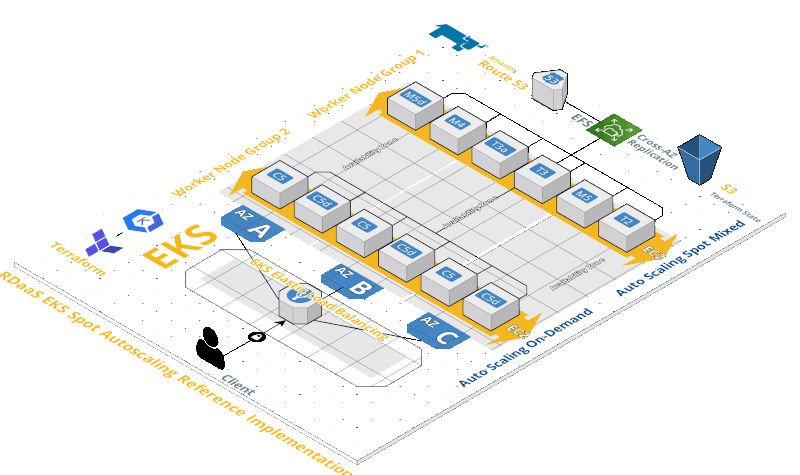

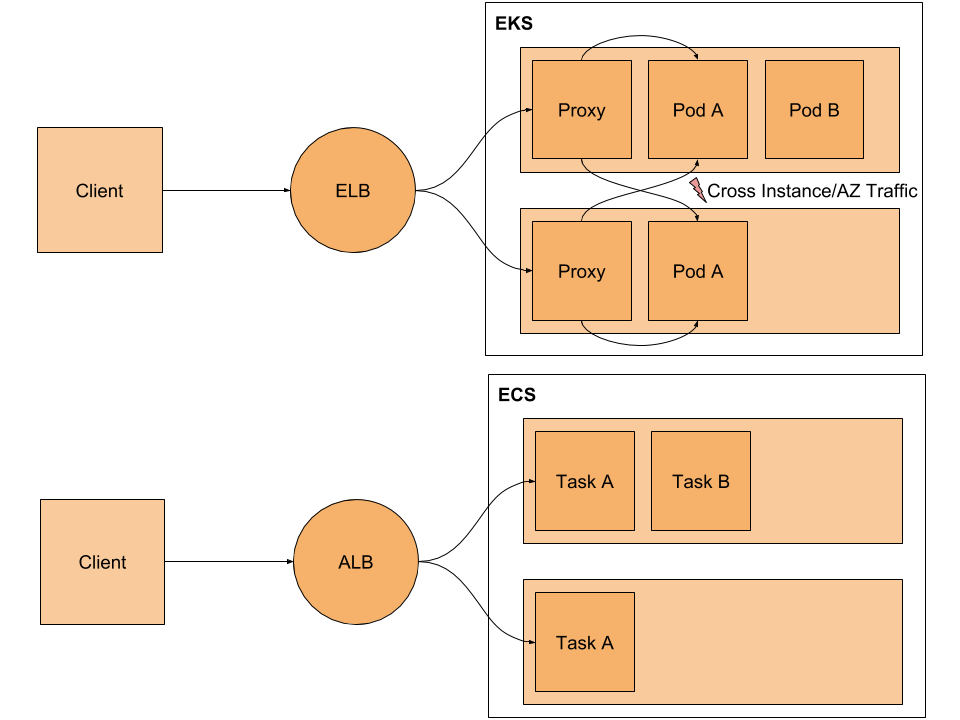

- ECS = Elastic Container Service (in AWS)

- EKS = Elastic Kubernetes Service (in AWS) using eksctl commands

-

GKE = Google Kubernetes Engine gcloud container

- IBM cloud Kubernetes Service

- OKD = OpenShift (Red Hat) Enterprise platform as a service (PaaS) Origin community distribution - OpenShift Dedicated, OpenShift Online

-

KUBE2GO

- PKS = VMWare Tanzu purchase of Pivotal, Heptio (Joe Bada, Craig McLukie), morph from PCS

- RKE = Rancher Kubernetes Engine

-

Rackspace’s Kubernetes as a Service

- DOKS = Digital Ocean (Istio)

-

OKS = Oracle

- PKE = Bonzai

- MKE = D2iQ (Day two iQ) rebranded from Mesos DC/OS meta clusters

-

Canonical

-

https://github.com/kubernetes-sigs/kubespray

- And others

Cloud Playgrounds

PROTIP: To follow along many tutorials, Admin permissions are needed for the account being used. So it’s best you use a “play” account rather than a work account.

You can learn to use Kubernetes even on a Chromebook laptop if you use a cloud-based learning environment (sandbox). A subscription is needed on some.

- KataKoda [FREE]

- Qwiklabs

- ACloudGuru [Subscription]

- CloudAcademy [Subscription]

KataKode

-

PROTIP: I prefer KataKoda because it provides Two terminal sessions when other options I have mess with jumping back and forth.

-

When you see the terminal prompt, type uname -a for:

Linux controlplane 4.15.0-122-generic #124-Ubuntu SMP Thu Oct 15 13:03:05 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

Notice “controlplane”.

Notice that KataKoda runs Linux Ubuntu, so use the installer appropriate for it.

-

Get status:

kubectl cluster-info

Kubernetes master is running at https://172.17.0.17:6443 KubeDNS is running at https://172.17.0.17:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Notice “master”. That’s what runs the control plane. To be culturally sensitive, “Master Node” has been renamed to “Control Plane Node”.

“KubeDNS”

-

Launch a two-node Kubernetes cluster with a single command:

launch.sh

Waiting for Kubernetes to start... Kubernetes started

Next, consider [FREE] guided K8s Katas by Contino. It uses K8s Namespace “kube-system”. It also has a “kube-public” namespace where nothing runs, but contains a ConfigMap which contains the bootstrapping and certificate configuration for the Kubernetes cluster.

Now proceed to use other Kublectl commands.

ACloudGuru

-

https://acloudguru.com has both guided Labs and playground sandboxes on AWS, GCP, and Azure.

ACloudguru.com CKAD course by William Boyd has 3.5 hours of video organized according to exam domains, 13 hands-on labs, and 3 practice exams based on v1.13 (older version).

CloudAcademy.com

-

https://cloudacademy.com Playground lab enables you to skip all the install details

CloudAcademy’s 11-hour “Learning Path” course was updated August 27th, 2019 by Logan Rakai.

PROTIP: A browser-based session times out too quickly and is cumbersome to copy and paste. So use SSH instead.

KodeKloud

-

STAR: KodeKloud is especially effective because it’s gamified troubleshooting where it provides a situation you have to figure out the commands, just like in the certification tests.

A KodeKloud subscription includes access to a KataKoda-powered lab environment for one hour at a time, then you have to start over. The instances come up quickly, though.

KataKoda, Red Hat’s OpenShift Playground using its “oc” CLI program. The KataKoda playground environment is pre-loaded with Source-to-Image (S2I) builders for Java (Wildfly), Javascript (Node.JS), Perl, PHP, Python and Ruby. Templates are also available for MariaDB, MongoDB, MySQL, PostgreSQL and Redis.

PROTIP: The k alias for kubectl is already configured, so type k instead of kubectl.

Qwiklabs

-

Qwiklabs has several hands-on labs using Kubernetes on Google Cloud.

Occassionally, Google offers free time.

Try the FREE 30-minute Kubernetes Engine: Qwik Start which is one of the “quests” in Google’s Kubernetes in the Google Cloud Qwiklab quest. Tasks:

- Task 1: Set a default compute zone

- Task 2: Create a GKE cluster

- Task 3: Get authentication credentials for the cluster

- Task 4: Deploy an application to the cluster

- Task 5: Deleting the cluster

Deploying Google Kubernetes Engine Clusters from Cloud Shell

Qwiklabs QUEST: Secure Workloads in Google Kubernetes Engine consists of 8 labs covering 8 hours.

Qwiklabs are used in Coursera courses, which explains provides lab solutions videos such as:

- Accessing the Cloud Console and Cloud Shell

- Deploying GKE

- Implementing Role-Based Access Control with Google Kubernetes Engine

30 days free training instances after completing a Tour class.

Others

- https://play-with-k8s.com was provided free by Docker. Now defunct.

Hands-on Demos/labs

I think the quickest yet deepest way to learn Kubernetes is to follow step-by-step instructions after seeing guided Labs (“demos”) using pre-loaded data. Hands-on practice is how you develop the “muscle memory” need to operate Kubernetes confidently.

PROTIP: To pass Kubernetes exams, you need to master the many CLI commands that control the Native Kubernetes command line interface (CLI): kubectl (pronounced “cube cuddle” or “cube see-tee-el” or “cube control”).

https://kubernetes.io/docs/user-guide/prereqs

There are several options to run kubectl:

A. Just the Kubectl CLI program can be installed on your laptop for you to communicate directly to a K8s master.

B. More commonly in production use, you use SSH to tunnel into a “Bastion host” in the cloud. Cloud-based training vendor CloudAcademy provides training on how to setup Kubernetes.

C. In a Terminal on your laptop use the Cloud Shell/Console CLI programs provided by the cloud vendor (AWS, Azure, GCP, etc.), which may involve costly bills.

D. If you have a laptop with enough memory and CPU, install a Minimal distribution:

- Minikube (see below)

- AWS ECS & EKS Anywhere

- install from the K8s hypercube Docker container

- Rancher Desktop at https://rancherdesktop.io

- K3s

- Microk8s on Linux

- Minishift

- VIDEO: KIND (Kubernetes in Docker) https://kind.sigs.k8s.io/ builds K8s clusters out of Docker containers running Docker in Docker, good for integration with a CI/CD pipeline.

A K8s on Raspberry Pi run on separate computers that are cheap ($70 USD), silent, and private. So they don’t consume disk space on your laptop.

But Pi’s are limited to 8 GB of RAM and based on newer ARM architecture rather than Intel x86 architecture used by PC’s and MacOS.

On Sep 2021, Dan Tofan released on Pluralsight “How to build a Kubernetes Cluster on 3+ Raspberry Pi”.

Scott Hanselman built Kubernetes on 6 Raspberry Pi nodes, each with a 32GB SD card to a 1GB RAM ARM chip (like on smartphones). Hansel talked with Alex Ellis (@alexellisuk) keeps his instructions with shell file updated for running on the Pis to install OpenFaaS.

CNCF Ambassador Chris Short developed the rak8s (pronounced rackets) library to make use of Ansible on Raspberry Pi.

Others:

- https://blog.hypriot.com/getting-started-with-docker-on-your-arm-device/

- https://blog.sicara.com/build-own-cloud-kubernetes-raspberry-pi-9e5a98741b49

App containers within Pods

Kubernetes runs apps (application executables) that have been “dockerized” within a Container “image” folder stored for retrieval from a container image registry such as:

- Docker Hub cloud

- Docker Enterprise (on-prem)

- JFrog Artifactory

- Nexus

- Quay.io (operated by Red Hat)

Kubernetes runs apps containers within Pods.

Every app Container has its own unique port number to a unique internal IP assigned to each Pod.

App Containers within the same Pod share the same internal IP address, hostname, Linux namespaces, cgroups, storage Volumes, and other resources.

-

List all Container images in all namespaces:

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" |\ tr -s '[[:space:]]' '\n' |\ sort |\ uniq -c

Fetch all Pods in all namespaces using kubectl get pods –all-namespaces Format the output to include only the list of Container image names using -o jsonpath={.items[].spec.containers[].image}. This will recursively parse out the image field from the returned json. See the jsonpath reference for further information on how to use jsonpath. Format the output using standard tools: tr, sort, uniq Use tr to replace spaces with newlines Use sort to sort the results Use uniq to aggregate image counts

Sidecars within Pods

Those who choose to use the Service Mesh Istio architecture place an “Envoy proxy” in each Pod to facilitate communictions and retry logic from app containers with business logic.

Within each Pod, all Containers share the same lifecycle – get created and removed together.

Pod Replicas within Worker Nodes

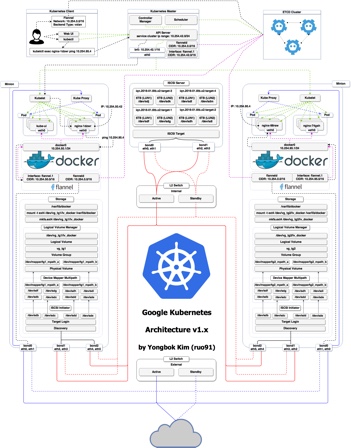

As illustrated on the right side of the diagram above:

“Worker Nodes”, the equivalent of physical host servers, are created to house various numbers of Pods.

LIMITS: Production setups have at least 3 nodes per cluster. K8s supports up to 5,000 node clusters of up to 150,000 pods (at v1.17).

Each Pod is “replicated” with the same set of Containers specified in a Deployment specification.

Again, “Replicas” of Pods are created within “Worker Nodes”.

PROTIP: “The median number of containers running on a single host is about 10.” – Sysdig, April 17, 2017. But there can be up to 100 pods per node (at v1.17)

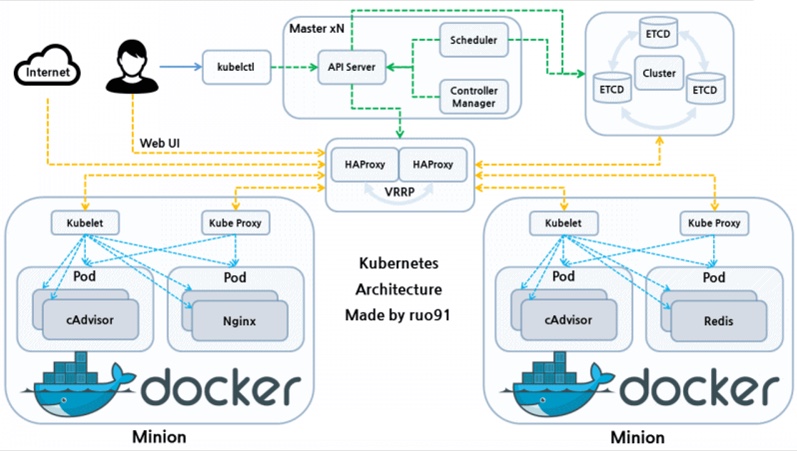

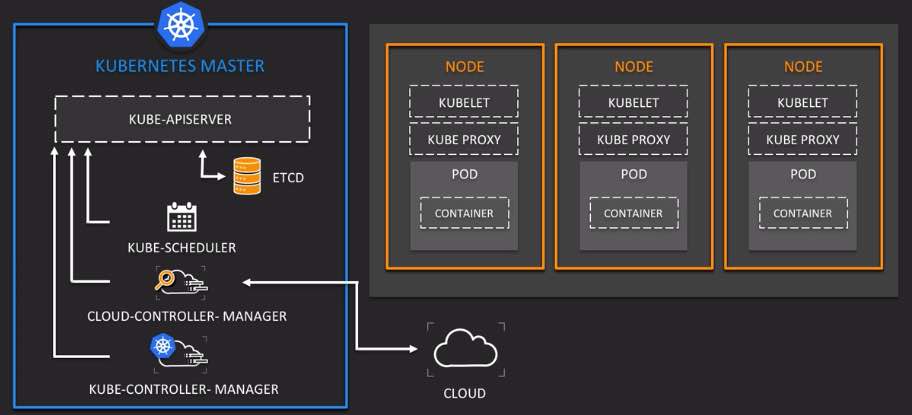

Control Plane Orchestration within a Master Node

To run and manage (orchestrates) several Worker Nodes, the Master Node of each cloud vendor maintains its “control plane” consisting of several key processes (aka service components):

-

The API server (named “kube-apiserver”) receives all administrative commands as REST API calls. So it’s called the “front-end”. Command-line programs communicating with Kubernetes do so by converting commands into REST API calls to the API server.

-

etcd is the database (key-value data store) within each cluster. PROTIP: etcd is the one stateful component, so many run it in a cluster separate for its own HA redundancy.

-

The Kube-Controller-Manager watches the state (status) of each cluster (as persisted in etcd) and attempts to move the current state towards the desired state (as defined in Yaml files). Various Controllers actually instantiate the actual resource represented by Kubernetes resource definitions.

-

The kube-scheduler assigns Pods to Nodes and communicates to the Kubelet to sechedule Pods.

-

The kublet agent on all nodes ensures containers are started.

-

The cloud-controller-manager optionally runs containers within Kubernetes.

-

Container runtime runs containers within Kubernetes.

Core K8s Internals

Kubernetes automates resilience by abstracting the network and storage shared by ephemeral replaceable pods which the Kubernetes Controller replicates to increase capacity.

This diagram (from CloudAcademy) illustrates core technical concepts about Kubernetes. In the center at the right:

Pods consume static Configmaps and Secrets.

Volumes of persistent data storage

Auto-Scaling

Unlike within AWS, where Auto Scaling Groups (ASGs) are used to scale nodes,

Within Kubernetes are these auto-scaling mechanisms:

- https://spot.io/resources/kubernetes-autoscaling-3-methods-and-how-to-make-them-great/

- https://spot.io/resources/kubernetes-autoscaling/kubernetes-replicaset-kubernetes-scalability-explained/

-

https://docs.openshift.com/container-platform/4.8/nodes/pods/nodes-pods-vertical-autoscaler.html

-

Cluster Autoscaler (CA) adjusts the number of nodes in a cluster. It automatically adds or removes nodes in a cluster when nodes have insufficient resources to run a pod (adds a node) or when a node remains underutilized, and its pods can be assigned to another node (removes a node).

-

HPA (Horizontal Pod Autoscaler) scales more copies of the same pod (assuming that the hosted application supports horizontal scaling via replication). HPA uses custom metrics such as the number of incoming session requests by end-users to a service load balancer.

-

Event-Driven Autoscaler is a Red Hat/Microsoft project that can scale a wide range of objects automatically.

-

VPA (Vertical Pod Autoscaler) analyzes standard CPU and memory resource usage metrics to provide recommendations CPU and memory resource configuration to align cluster resource allotment with actual usage. An example of its recommendation output:

status: conditions: - lastTransitionTime: "2020-12-23T10:33:13Z" status: "True" type: RecommendationProvided recommendation: containerRecommendations: - containerName: nginx lowerBound: cpu: 40m memory: 3100k target: cpu: 60m memory: 3500k upperBound: cpu: 831m memory: 8000kThe lowerBound limit defines the minimum amount of resources that containers need.

If configured, the VPA updater should be able to automatically restart nodes to carry out its recommendations and increase or decrease existing pod containers. But that is currently not recommended in production because of concerns about churn disrupting reliability – exceeding the Kubernetes PodDisruptionBudget (PDB) – minAvailable and maxUnAvailable.

Concerns about VPAs are: VIDEO:

- Complex YAML-based configuration for each container?

- Limited practicality at scale?

- Basic, reactive statistical modeling?

- Limited time-frame (looks at 8 days, which misses monthly, quarterly, yearly seasonality cycles)?

- Resource slack to reduce risk of throttling & OOM errors?

Thus, Google has come up with their service.

-

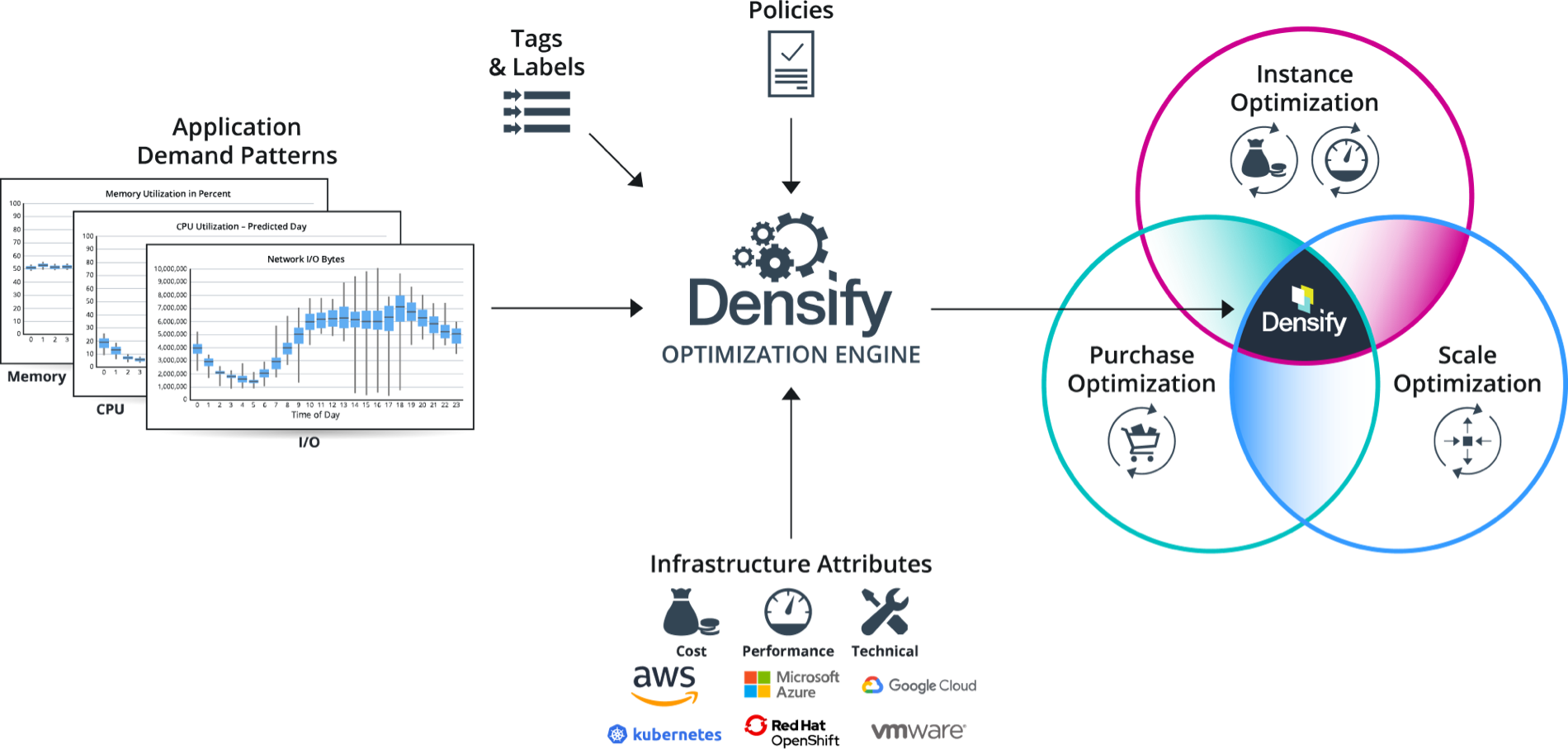

Densify.com provides a commercially available (paid) option which uses sophisticated Machine Learning mechanisms. It provides an API.

-

VIDEO: Stormforge.io uses Machine Learning to adjust based on experiments conducted in a dev instance.

- Prometheus Adapter for Kubernetes API?

VIDEO: Install the Metrics Server from https://github.com/kubernetes-sigs/metrics-server

VPA (Vertical Pod Autoscaler)

This section is based on several references:

- https://www.kubecost.com/kubernetes-autoscaling/kubernetes-vpa/ (the best description)

- https://www.densify.com/kubernetes-autoscaling/kubernetes-vpa (identifies issues with VPA)

- https://www.giantswarm.io/blog/vertical-autoscaling-in-kubernetes

- https://docs.aws.amazon.com/eks/latest/userguide/vertical-pod-autoscaler.html

- https://github.com/kubernetes/autoscaler/tree/master/vertical-pod-autoscaler

- https://cloud.google.com/kubernetes-engine/docs/concepts/verticalpodautoscaler

- BLOG:

- verticalpodautoscaler CRD

- https://blog.digitalis.io/kubernetes-capacity-planning-with-vertical-pod-autoscaler-7c1690dc38b3

- https://docs.oracle.com/en-us/iaas/Content/ContEng/Tasks/contengusingverticalpodautoscaler.htm

- https://www.alibabacloud.com/help/en/container-service-for-kubernetes/latest/vertical-pod-autoscaling

By default, the Kubernetes scheduler does not re-evaluate a pod’s resource needs after that pod is scheduled. As a result, over-allocated resources are not freed or scaled-down. Conversely, if a pod didn’t request sufficient resources, the scheduler won’t increase them to meet the higher demand. The implications of this is that:

-

If resources are over-allocated, unnecessary workers are added, waste unused resources, and monthly bills increase.

-

If resources are under-allocated, resources will get used up quickly, application performance will suffer, and the kubelet may start killing pods until resource utilization drops.

The Kubernetes Vertical Pod Autoscaler (VPA) VPA automatically adjusts the CPU and memory reservations for pods to “right size” applications. It analyzes resource usage over time to either down-scale pods that are over-requesting resources, and up-scale pods that are under-requesting resources. It maintains ratios between limits and requests specified in initial containers configuration. VPA frees users from manually adjusting resource limits and requests for containers in their pods.

To deploy the Vertical Pod Autoscaler to your cluster:

-

Define a Kubernetes Deployment that uses VPA for resource recommendations, using this sample YAML manifest:

apiVersion: apps/v1 kind: Deployment metadata: name: nginx-deployment labels: app: nginx spec: replicas: 2 selector: matchLabels: app: nginx template: metadata: labels: app: nginx spec: containers: - name: nginx image: nginx:1.7.8 ports: - containerPort: 80Note that the file above has no CPU or memory requests. The pods in the Deployment belong to the VerticalPodAutoscaler (shown in the next paragraph) as they are designated with the kind, Deployment and name, nginx-deployment.

-

Define a VPA resource:

apiVersion: autoscaling.k8s.io/v1 kind: VerticalPodAutoscaler metadata: name: app-vpa spec: targetRef: apiVersion: "apps/v1" kind: Deployment name: app resourcePolicy: containerPolicies: - containerName: '*' controlledResources: - cpu - memory maxAllowed: cpu: 1 memory: 500Mi minAllowed: cpu: 100m memory: 50Mi updatePolicy: updateMode: "Auto"The targetRef (reference) allows specification of which workload is subject VPA actions. The above example says kind: Deployment, it can also be any of Deployment, DaemonSet, ReplicaSet, StatefulSet, ReplicationController, Job, or CronJob.

- Create an existing Amazon EKS cluster.

- The Metrics server must be deployed in your cluster.

- Open a terminal window.

-

Upgrade openssl to at least version 1.1.1 (currently LibreSSL 2.8.3):

openssl version

- Navigate to a directory that corresponds to your GitHub account, where you want to download source code.

-

View the documentation

https://github.com/kubernetes/autoscaler/tree/master/vertical-pod-autoscaler

Autoscaling is configured with a Custom Resource Definition object called VerticalPodAutoscaler.

CAUTION: There is a VPA version specific to each version of Kubernetes.

-

Clone the kubernetes/autoscaler GitHub repository and change to directory:

git clone https://github.com/kubernetes/autoscaler.git cd autoscaler/vertical-pod-autoscaler/

-

unset environment variables $REGISTRY and $TAG unless you want to use a non-default version of VPA.

-

(Optional) If you have already deployed another version of the Vertical Pod Autoscaler, remove it:

If running on GKE, clean up role bindings created in Prerequisites:

kubectl delete clusterrolebinding myname-cluster-admin-binding

./hack/vpa-down.sh

If VPA stops running in a cluster, resource requests for the pods already modified by VPA will not change, but any new pods will get resources as defined in your controllers (i.e. deployment or replicaset) and not according to previous recommendations made by VPA.

-

This assumes your nodes have internet access to the k8s.gcr.io container registry. If not, pull the following images and push them to your own private repository. For more information about how to pull the images and push them to your own private repository, see https://docs.aws.amazon.com/eks/latest/userguide/copy-image-to-repository.html Copy a container image from one repository to another repository.

k8s.gcr.io/autoscaling/vpa-admission-controller:0.10.0 k8s.gcr.io/autoscaling/vpa-recommender:0.10.0 k8s.gcr.io/autoscaling/vpa-updater:0.10.0

If you’re pushing the images to a private Amazon ECR repository, then replace k8s.gcr.io in the manifests with your registry. Replace 111122223333 with your account ID. Replace region-code with the AWS Region that your cluster is in. The following commands assume that you named your repository the same as the repository name in the manifest. If you named your repository something different, then you’ll need to change it too.

sed -i.bak -e 's/k8s.gcr.io/111122223333.dkr.ecr.region-codeamazonaws.com/' ./deploy/admission-controller-deployment.yaml sed -i.bak -e 's/k8s.gcr.io/111122223333.dkr.ecr..dkr.ecr.region-codeamazonaws.com/' ./deploy/recommender-deployment.yaml sed -i.bak -e 's/k8s.gcr.io/111122223333.dkr.ecr..dkr.ecr.region-codeamazonaws.com/' ./deploy/updater-deployment.yaml

-

Check if all Kubernetes system components are running: 3 pods (recommender, updater and admission-controller) all in Running state:

kubectl --namespace=kube-system get pods|grep vpa

-

Check that the VPA service actually exists:

kubectl describe -n kube-system service vpa-webhook

Name: vpa-webhook Namespace: kube-system Labels: <none> Annotations: <none> Selector: app=vpa-admission-controller Type: ClusterIP IP: <some_ip> Port: <unset> 443/TCP TargetPort: 8000/TCP Endpoints: <some_endpoint> Session Affinity: None Events: <none>

-

Check that the VPA admission controller is running correctly:

kubectl get pod -n kube-system | grep vpa-admission-controller

Sample output:

vpa-admission-controller-69645795dc-sm88s 1/1 Running 0 1m

-

Check the logs of the admission controller using its id from the previous command, such as:

kubectl logs -n kube-system vpa-admission-controller-69645795dc-sm88s

If the admission controller is up and running, but there is no indication of it actually processing created pods or VPA objects in the logs, the webhook is not registered correctly.

-

Deploy the Vertical Pod Autoscaler to your cluster:

./hack/vpa-up.sh

CAUTION: Whenever VPA updates pod resources, the pod is recreated, which causes all running containers to be restarted. The pod may be recreated on a different node.

VPA recommendation might exceed available resources (e.g. Node size, available size, available quota) and cause pods to go pending. This can be partly addressed by using VPA together with Cluster Autoscaler.

-

Check that the VPA Custom Resource Definition was created:

kubectl get customresourcedefinition | grep verticalpodautoscalers

-

Verify that the Vertical Pod Autoscaler pods have been created successfully:

kubectl get pods -n kube-system

Sample output:

NAME READY STATUS RESTARTS AGE ... metrics-server-8459fc497-kfj8w 1/1 Running 0 83m vpa-admission-controller-68c748777d-ppspd 1/1 Running 0 7s vpa-recommender-6fc8c67d85-gljpl 1/1 Running 0 8s vpa-updater-786b96955c-bgp9d 1/1 Running 0 8s

The VPA project’s components:

-

Recommender monitors the current and past resource consumption and, based on it, provides recommended values for the containers’ cpu and memory requests.

-

Updater checks which of the managed pods have correct resources set and, if not, kills them so that they can be recreated by their controllers with the updated requests.

-

K8s Admission Plugin sets the correct resource requests on new pods (either just created or recreated by their controller due to Updater’s activity).

kind: Pod are defined by a PodSpec configuration file such as:

kind: Pod metadata: name: nginx spec: containers: - name: nginx image: nginx:1.14.2 ports: - containerPort: 80 -

-

To verify that it works, deploy the hamster.yaml Vertical Pod Autoscaler:

kubectl apply -f examples/hamster.yaml

Example contents at autoscaler/vertical-pod-autoscaler/examples/hamster.yaml

apiVersion: autoscaling.k8s.io/v1 kind: VerticalPodAutoscaler metadata: name: my-app-vpa spec: targetRef: apiVersion: "apps/v1" kind: Deployment name: my-app updatePolicy: updateMode: "Auto"

-

updateMode = auto applies the recommendations directly by updating/recreating the pods. CAUTION: When VPA applies the change, each pod restarts, which causes a workload disruption.

-

updateMode = initial applies the recommended values to newly created pods only.

-

updateMode = off simply stores the recommended values for reference. This is preferred in production environments. Feed the recommendations to a capacity monitoring dashboard such as Grafana, and apply the recommendations in the next deployment cycle.

-

-

Get pod IDs from the hamster sample application:

kubectl get pods -l app=hamster

Sample output:

hamster-c7d89d6db-rglf5 1/1 Running 0 48s hamster-c7d89d6db-znvz5 1/1 Running 0 48s

-

Describe one of the pods to view its cpu and memory reservation to replace “c7d89d6db-rglf5” with one of the IDs returned in from the previous step:

kubectl describe pod hamster-c7d89d6db-rglf5

Sample output:

Containers: hamster: Container ID: docker://e76c2413fc720ac395c33b64588c82094fc8e5d590e373d5f818f3978f577e24 Image: k8s.gcr.io/ubuntu-slim:0.1 Image ID: docker-pullable://k8s.gcr.io/ubuntu-slim@sha256:b6f8c3885f5880a4f1a7cf717c07242eb4858fdd5a84b5ffe35b1cf680ea17b1 Port:

Host Port: Command: /bin/sh Args: -c while true; do timeout 0.5s yes >/dev/null; sleep 0.5s; done State: Running Started: Fri, 27 Sep 2019 10:35:16 -0700 Ready: True Restart Count: 0 Requests: cpu: 100m memory: 50Mi </pre> See that the original pod reserves 100 millicpu of CPU and 50 mebibytes of memory. For this example application, 100 millicpu is less than the pod needs to run, so it is CPU-constrained. It also reserves much less memory than it needs. The Vertical Pod Autoscaler vpa-recommender deployment analyzes the hamster pods to see if the CPU and memory requirements are appropriate. If adjustments are needed, the vpa-updater relaunches the pods with updated values. -

Wait for the vpa-updater to launch a new hamster pod. This should take a minute or two. You can monitor the pods:

Note: If you are not sure that a new pod has launched, compare the pod names with your previous list. When the new pod launches, you will see a new pod name.

kubectl get --watch pods -l app=hamster

The response is a pod ID such as “hamster-c7d89d6db-jxgfv”.

-

When a new hamster pod is started, describe it and view the updated CPU and memory reservations.

kubectl describe pod hamster-c7d89d6db-jxgfv

Sample output:

Containers: hamster: Container ID: docker://2c3e7b6fb7ce0d8c86444334df654af6fb3fc88aad4c5d710eac3b1e7c58f7db Image: k8s.gcr.io/ubuntu-slim:0.1 Image ID: docker-pullable://k8s.gcr.io/ubuntu-slim@sha256:b6f8c3885f5880a4f1a7cf717c07242eb4858fdd5a84b5ffe35b1cf680ea17b1 Port: <none> Host Port: <none> Command: /bin/sh Args: -c while true; do timeout 0.5s yes >/dev/null; sleep 0.5s; done State: Running Started: Fri, 27 Sep 2019 10:37:08 -0700 Ready: True Restart Count: 0 Requests: cpu: 587m memory: 262144k

In the sample output above, see that the cpu reservation increased to 587 millicpu, which is over five times the original value. The memory increased to 262,144 Kilobytes, which is around 250 mebibytes, or five times the original value. This pod was under-resourced, and the Vertical Pod Autoscaler corrected the estimate with a much more appropriate value.

-

View the new recommendation:

kubectl describe vpa vpa/hamster-vpa

Sample output was shown above:

recommendation: containerRecommendations: - containerName: nginx lowerBound: cpu: 40m memory: 3100k target: cpu: 60m memory: 3500k upperBound: cpu: 831m memory: 8000k

-

View the new recommendation:

kubectl describe vpa/hamster-vpa

Sample output:

Name: hamster-vpa Namespace: default Labels:

Annotations: kubectl.kubernetes.io/last-applied-configuration: {"apiVersion":"autoscaling.k8s.io/v1beta2","kind":"VerticalPodAutoscaler","metadata":{"annotations":{},"name":"hamster-vpa","namespace":"d... API Version: autoscaling.k8s.io/v1beta2 Kind: VerticalPodAutoscaler Metadata: Creation Timestamp: 2019-09-27T18:22:51Z Generation: 23 Resource Version: 14411 Self Link: /apis/autoscaling.k8s.io/v1beta2/namespaces/default/verticalpodautoscalers/hamster-vpa UID: d0d85fb9-e153-11e9-ae53-0205785d75b0 Spec: Target Ref: API Version: apps/v1 Kind: Deployment Name: hamster Status: Conditions: Last Transition Time: 2019-09-27T18:23:28Z Status: True Type: RecommendationProvided Recommendation: Container Recommendations: Container Name: hamster Lower Bound: Cpu: 550m Memory: 262144k Target: Cpu: 587m Memory: 262144k Uncapped Target: Cpu: 587m Memory: 262144k Upper Bound: Cpu: 21147m Memory: 387863636 Events: <none> </pre> -

Print YAML contents with all resources that would be understood by kubectl diff|apply|… commands:

./hack/vpa-process-yamls.sh print

-

Delete it by running the same yaml file as used to create it:

kubectl delete -f examples/hamster.yaml

Kubernetes Security Hardening

BOOK: Hacking Kubernetes by Andrew Martin, Michael Hausenblas

LIVE: “Attacking and Defending Kubernetes” by Marco De Benedictis

Sidero Talos OS on Bare Metal

There is on-going debate about what Operating System to use with Kubernetes to reduce overhead and security exposures. Options are Ubuntu, Debian, CentOS, Red Hat Enterprise Linux (RHEL), Fedora. Operating systems compatible with Google Kubernetes Engine on Linux:

- https://cloud.google.com/migrate/containers/docs/compatible-os-versions

- https://computingforgeeks.com/minimal-container-operating-systems-for-kubernetes/

Reaching v1.0 April 2022, the open-source headless Talos Linux OS is purpose-built for Kubernetes.

Talos has no shell, no SSH, a read-only file system – making it small and secure.

To get the most performance possible out of hardware, with minimal overhead, run Kubernetes on bare-metal machines.

To simplify the creation and management of bare metal Kubernetes clusters, Sidero Labs, the company behind Talos Linux, also released Sidero Metal, a cluster API provider for bare metal, that installs Talos Linux based Kubernetes clusters, in a scalable and declarative way. (It’s built around the Cluster gRPC API (CAPI) project’s clusterctl CLI tool.

Replace failed node

PROTIP: Consider what happens if adding a new member results in an error and cannot join the cluster (due to a misconfiguration). See https://kubernetes.io/docs/tasks/administer-cluster/configure-upgrade-etcd/#replacing-a-failed-etcd-member

-

To remove a failed node, first remove the failed nodes before adding new ones.

If you have a 3-node etcd cluster, even if one failed, adding another node would make it a 4-node cluster (counting the down node). That results in upping the minimum quorum to 3 nodes to prevent “split brain” cluster failure. If the new member is misconfigured, and cannot join the cluster, you now then have two failed nodes, and not meet the required quorum of 3.

Use of Talos Linux makes recovery of Kubernetes simpler because Talos Linux has helper functions that automate the removal of down etcd nodes.

talosctl etcd remove-member ip-172-31-41-76 kubectl delete node ip-172-31-41-76

Without Talos, adding an extra node increases quorum, which is actually not a good thing. For example, if you have a 3-node etcd cluster, a quorum of 2 nodes is required for the cluster to continue processing writes. Removing a working node would remove fault tolerance. A failure during the transition before another node is successfully added would cause the cluster to be downed. So…

With Talos, adding an extra node does NOT increase quorum. Since Talos Linux uses the Learner feature of etcd — all new control plane nodes join etcd as non-voting learners. When they have caught up with all transactions, the node is automatically promoted to a voting member.

-

To add a new control plane node under Talos Linux, boot a new node with the controlplane.yaml used to create other control plane nodes.

Replace working node

If the node to be replaced is still working, the order of actions is the opposite of when the node has failed. For example, if you have a 3-node etcd cluster, a quorum of 2 nodes is required for the cluster to continue processing writes. Removing a working node would remove fault tolerance. A failure during the transition before another node is successfully added would cause the cluster to be downed. So…

-

BEFORE removing the working node, add the new node, (with a more preferable AWS server type for different CPU or memory). This would increase a 3-node cluster to increased to 4 nodes.

A failure during the transition would reduce the cluster to 3 nodes, still a viable cluster.

Add the new control plane node by booting using the controlplane.yaml file.

-

Remove the working node by telling the node that it is being replaced to leave the cluster. Using a sample IP address:

talosctl -n 172.31.138.87 reset kubectl delete node

Since Talos is aware that it’s in a control plane node, it knows to gracefully leave etcd (be erased) when it receives the reset command.

A multi-master configuration is required so a single Master does not become a single point of failure. Workers connect to and communicate with any master’s kube-apiserver via a high availability load balancer. See https://dominik-tornow.medium.com/kubernetes-high-availability-d2c9cbbdd864

Talos

The lifecycle of each Talos machine is managed by a SideroLabs (Videos, sidero.dev, @SideroLabs) workload cluster, open-sourced at github.com/siderolabs/sidero. Sidero provides bootstrap/controlplane providers for running Talos machines on bare-metal x86 or arm64 machines on-prem, or on Raspberry Pi (VIDEO by Spencer Smith: “metal-rpl_4-arm64.img.xz” on 16GB SD).

VIDEO: Try within Docker on MacOS.

Sidero offers a declarative way to provision, assign, wipe servers (“machines as cattle”).

Sidero uses port 8081 for a combined iPXE and gRPC service, UDP 69 for TFTP, and UDP 51821 for the IPv6 SideroLink Wireguard keyed point-to-point connections. Talos’ KubeSpan yaml config automates WireGuard encryption to securely add workers on remote networks. KubeSpan delivers a solution to the coordination and key exchange problem, allowing all nodes to discover and communicate in an encrypted channel with all other nodes – even across NAT and firewalls. It supports roaming of devices, and transparently handles the correct encryption of traffic that is destined to another member of the cluster, while leaving other traffic unencrypted.

All that enables Kubernetes to operate multi-region/multi-cloud. Arges is its multi-environment management service.

An ISC DHCP server (such as on Ubiquiti EdgeRouter products) is needed.

Sidero uses IPMI information to control Server power state, reboot servers and set boot order automation. Sidero also supports BMC automation.

Talos node sends over the SideroLink connection two streams: kernel logs (dmesg) and Talos events.

Cloud K8s GUI & CLI

Each cloud SaaS vendor listed above provides its own GUI on internet browser (such as Google Chrome) for Kubernetes Administrators to access.

Each cloud vendor also has its own CLI command program (such as AWS eksctl) to provide proprietary features.

kubctl processes manifests (in yaml format) by translating them to API calls to the Kubernetes API program, a part of the “k8s master”.

CLI Setup

PROTIP: Training vendors provide you a choice to use a browser without installing anything. But a more performant (satisfying) approach is to install credentials and work from your laptop’s Terminal program.

Use of a browser would require copy and pasting of accounts and passwords, bringing up CLI, creating environment variables, etc. every time.

However, using a CLI would require some installation and configuration work.

Use my step-by-step instructions to get CLI installed and configured on your laptop:

-

For AWS CLI, see https://wilsonmar.github.io/aws-onboarding/

-

For Azure CLI, see https://wilsonmar.github.io/azure-onboarding/

-

For GCP CLI, see https://wilsonmar.github.io/gcp/

On-premise

Kubernetes distributions with on-prem. focus:

- Canonical Kubernetes

- Google Anthos

- K3s

- Red Hat OpenShift

- SUSE Rancher

- VMware Tanzu

AWS ECS & EKS Anywhere

https://aws.amazon.com/ko/blogs/aws/getting-started-with-amazon-ecs-anywhere-now-generally-available/

https://aws.amazon.com/eks/eks-anywhere (open-sourced with an Apache-2.0 license at https://github.com/aws/eks-anywhere, tested using Prow) manages Kubernetes clusters using VMware vSphere on premises using AWS EKS Distro

https://anywhere.eks.amazonaws.com/

https://anywhere.eks.amazonaws.com/docs/getting-started/install/

brew install aws/tap/eks-anywhere eksctl anywhere version

Kubectl Commands

CAUTION: The trouble with lab enviornments is that you are given a limited amount of time each session – as little as 30 minutes.

Shell scripts in SSH

PROTIP: Because all work is lost at the end of each session, I have found it useful to create shell scripts I can paste in a Shell Conole.

-

On AWS: sample.sh

-

On Azure: https://github.com/wilsonmar/aws-quickly describes use of:

bash -c "$(curl -fsSL https://raw.githubusercontent.com/wilsonmar/DevSecOps/main/gcp/gks-cluster.sh)"

- On GCP:

bash -c "$(curl -fsSL https://raw.githubusercontent.com/wilsonmar/DevSecOps/main/gcp/gks-cluster.sh)"

The script downloads what it needs from the public GitHub and runs it automatically.

The programmatic approach of scripts mean that code is needed to check conditions before each command (to see if the command needs to run). Code is also needed to obtain values in variables passed to the next step.

Docker and Alternative Runtimes

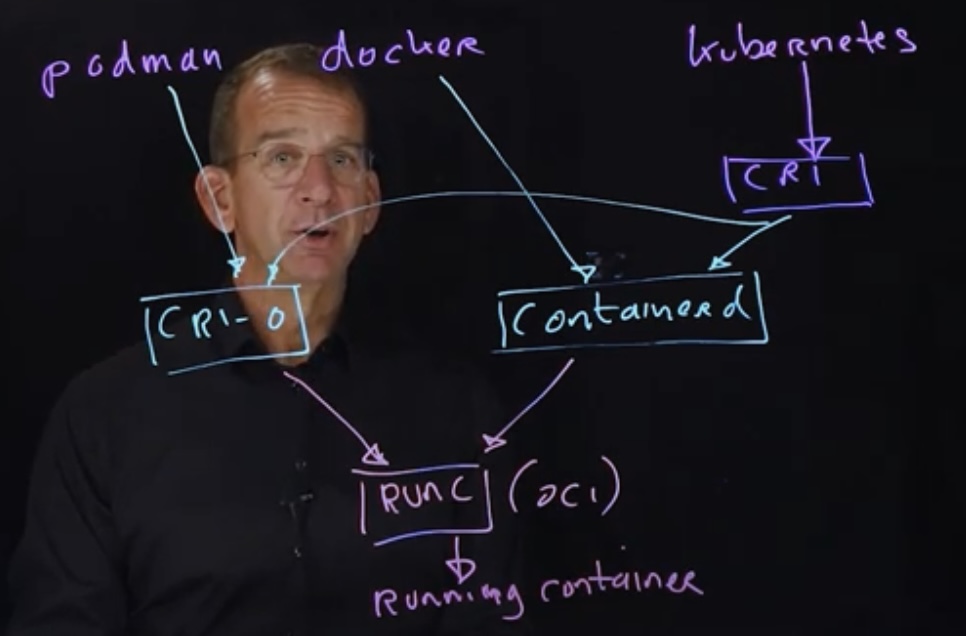

VIDEO: Kubernetes only need the Container Runtime from Docker’s Engine, which Kubernetes created a “dockershim” to use Docker’s Container Runtime. Then Docker extracted and gave to CNCF “containerd”.

This diagram of Sander on a lightboard:

Runc is supported by CRI-O, Docker, ContainerD. Runc is the low-level tool which does the “heavy lifting” of spawning a Linux container. (See CVE-2019-5736).

BTW Kubernetes had worked with rkt (pronounced “rocket”) containers, which provided a CLI for containers as part of CoreOS. Rkt became the first archived project of CNCF after IBM bought Red Hat and its competing cri-o technology used with OpenShift.

Inside each Node

- VIDEO: How Kubernetes Works explained by Brendan Burns (K8s co-founder)

Networking

Within Kubernetes are four types of network traffic:

- Container-to-container traffic is handled within the Pod.

- Pod-to-Pod traffic is handled by a SDN (Software-Defined Network).

- Pod-to-Service traffic is handled by kube-proxy and packet filters on the node.

- External-to-Service traffic is handled by kube-proxy and node-based packet filters (such as Consul)

Endpoints

Pods expose themselves to services via endpoints.

Kubernetes keeps a pool of endpoints

kubectl get endpoints

Endpoint Slices breakup endpoints into smaller, more manageable segments.

Each endpoint Slice is limited to 100 pods.

Aggregation Layer

The aggregation layer lets you install additional Kubernetes-style APIs in your cluster.

- Load balancing for Compute Engine instances

- Node pools to designate subsets of nodes within a cluster for additional flexibility

- Automatic scaling of your cluster’s node instance count

- Automatic upgrades for your cluster’s node software

- Node auto-repair to maintain node health and availability

- Logging and Monitoring with Cloud Monitoring for visibility into your cluster

What’s CNCF?

CNCF DEFINITION: “Cloud Native technologies” emplower organizations to build and run scalable applications in modern, dynamic, environments such as public, private, and hybrid clouds. Containers, services, meshes, microservices, immutable infrastructure, and declarative APIs exemplify this approach.

These techniques enable loosely coupled systems that are resilient, manageable, and observable.

Combined with robust automation, they allow engineers, to make high impact changes, frequently and predictably with minimal toil.

The Cloud Native Foundation seeks to drive adoption of this paradigm by fostering and sustaining an ecosystem of open source, vendor-neutral projects.

We democratize state-of-the-art patterns to make these innovations acdessible for everyone.

Container Orchestration?

Kubernetes is called “container orchestration” software because it automates the deployment, scaling, and management of containerized applications[Wikipedia].

- Authentication -> Authorization -> Admission Control

- Load balancing

- Mixed operating systems (Ubuntu, Alpine, Talos, etc.)

- Using images in Docker avoids the “it works on my machine” troubleshooting of setup or dependencies

- Unlike Elastic Beanstalk, the k8s master controls what each of its nodes do

KCNA Exam

The Kubernetes and Cloud Native Associate is the entry level certification in Kubernetes. But it’s very hands-on with Terminal kubctl command experience.

https://www.cncf.io/certification/kcna

FAQ: The $250 closed-book exam is proctored by PSI online for two tries. Allocate 120 minutes of seat time. Answer 75% for 90 minutes to answer 60 multiple-choice questions in 1.5 hours (1.5 minutes/question). Good for 3 years.

The PDF exam Handbook lists:

- Kubernetes Fundamentals 46% (27-28 questions)

- Kubernetes Resources

- Kubernetes Architecture

- Kubernetes API

- Containers

- Scheduling

- Container Orchestration 22% (25 questions)

- Container Orchestration Fundamentals

- Runtime

- Security

- Networking

- Service Mesh

- Storage

- Cloud Native Architecture 16% (25 questions)

- Autoscaling

- Serverless

- Community and Governance

- Roles and Peronas

- Open Standards

-

Cloud Native Observability 8% (4-5 questions)

- Cloud Native Application Delivery 8% (4-5 questions)

- Application Delivery Fundamentals

- GitOps

- CI/CD

The CNCF Curriculum page recommends these resources:

- https://training.linuxfoundation.org/training/kubernetes-and-cloud-native-essentials-lfs250/

- https://www.edx.org/course/introduction-to-cloud-infrastructure-technologies

- https://www.edx.org/course/introduction-to-kubernetes

- https://www.edx.org/course/introduction-to-kubernetes-on-edge-with-k3s

- https://kube.academy

- https://www.edx.org/course/introduction-to-linux

- https://civo.com/academy

- KCNA Course Overview *KCNA study course on freeCodeCamp

Andrew Brown (FreeCodeCamp)

Andrew Brown (@andrewbrown) creates/sells Exampro practice exams, but he created in May 2022 a 14-hour VIDEO associated with his curriculum at FreeCodeCamp.com.

CKAD Exam

Here is the full text of the CNCF’s exam curriculum

13% Core Concepts (APIs, Create and configure basic pods, namespaces)

- Understand Kubernetes API primitives

18% Configuration (ConfigMaps, SecurityContexts, Resource Requirements, Create & consume Secrets, ServiceAccounts

10% Multi-Container Pods design patterns (e.g., ambassador, adapter, sidecar)

18% Observability (Liveness & Readiness Probes, Container Logging, Metrics server, Monitoring apps, Debugging)

20% Pod Design (Labels, Selectors, Annotations, Deployments, Rolling Updates, Rollbacks, Rollbacks, Jobs, CronJobs)

13% Services & Networking (NetworkPolicies)

08% State Persistence (Volumes, PersistentVolumeClaims) for storage

CKA Exam Domains

3-hour Certified Kubernetes Administrator (CKA) exams CNCF first announced November 8, 2016.

|

19% Core Concepts 12% Installation, Configuration & Validation 12% Security 11% Networking 11% Cluster Maintenance 10% Troubleshooting 08% Application Lifecycle Management 07% Storage 05% Scheduling 05% Logging / Monitoring |

+https://github.com/walidshaari/Kubernetes-Certified-Administrator lists links by exam domain.

Certificed Kubernauts.io Practioner (CKP)

https://trainings.kubernauts.sh/ describes a certification offered independently by https://kubernauts.de/en/home/ (@kubernauts in Germany) which also provides free namespaces (using Rancher) at https://kubernauts.sh

CKS Exam Domains

Starting November, 2020 at the KubeCon North America pre-conference: CKS exam is $300 for 2 hours.

It’s for those who hold a CKA certification.

- 10% Cluster Setup - Best practice for configuration to control environment access, rights, and platform conformity.

- 15% Cluster Hardening - to protect K8s API and utilize RBAC

- 15% System Hardening - to improve the security of OS & Network; restrict access through IAM

- 20% Minimize Microservice Vulnerabilities - to use various mechanisms to isolate, protect, and control workload.

- 20% Supply Chain Security - forcontainer-oriented security, trusted resources, optimized container images, CVE scanning

- 20% Monitoring, Logging, and Runtime Security - to analyse and detect threads

DockerDocker (specifically, Docker Engine) provides operating-system-level virtualization in containers.

-

Kubernetes Installation and Configuration Fundamentals” by Anthony Nocentino (@nocentio, centinosystems.com).

-

Pluralsight “Configuring and Managing Kubernetes Security” by Anthony Nocentino (@nocentio, centinosystems.com) is the one to take.

-

https://github.com/NodyHub/docker-k8s-resources/tree/master/k8s-pods sample Security yaml

-

https://ravikirans.com/cks-kubernetes-security-exam-study-guide/

-

https://github.com/walidshaari/Certified-Kubernetes-Security-Specialist by Walid Shaari (author in Medium).

-

https://github.com/ijelliti/CKSS-Certified-Kubernetes-Security-Specialist

-

https://ravikirans.com/cks-kubernetes-security-exam-study-guide

Whizlabs.com

Known for their sample exams, $99/year on sale from $199 for all courses, by instructors from India. If you want faster video playback, you have to set it for every video. Annoying.

-

Certified Kubernetes Application Developer (CKAD) [05:25:21]

-

Certified Kubernetes Administrator(CKA) [08:09:07]

-

* Learn Kubernetes with AWS And Docker [04:01:16

Exam Preparations

PROTIP: CAUTION: Whatever resource you use, ensure it is to the version of Kubernetes being tested (e.g., v1.19 as of 1 Sep 2020).

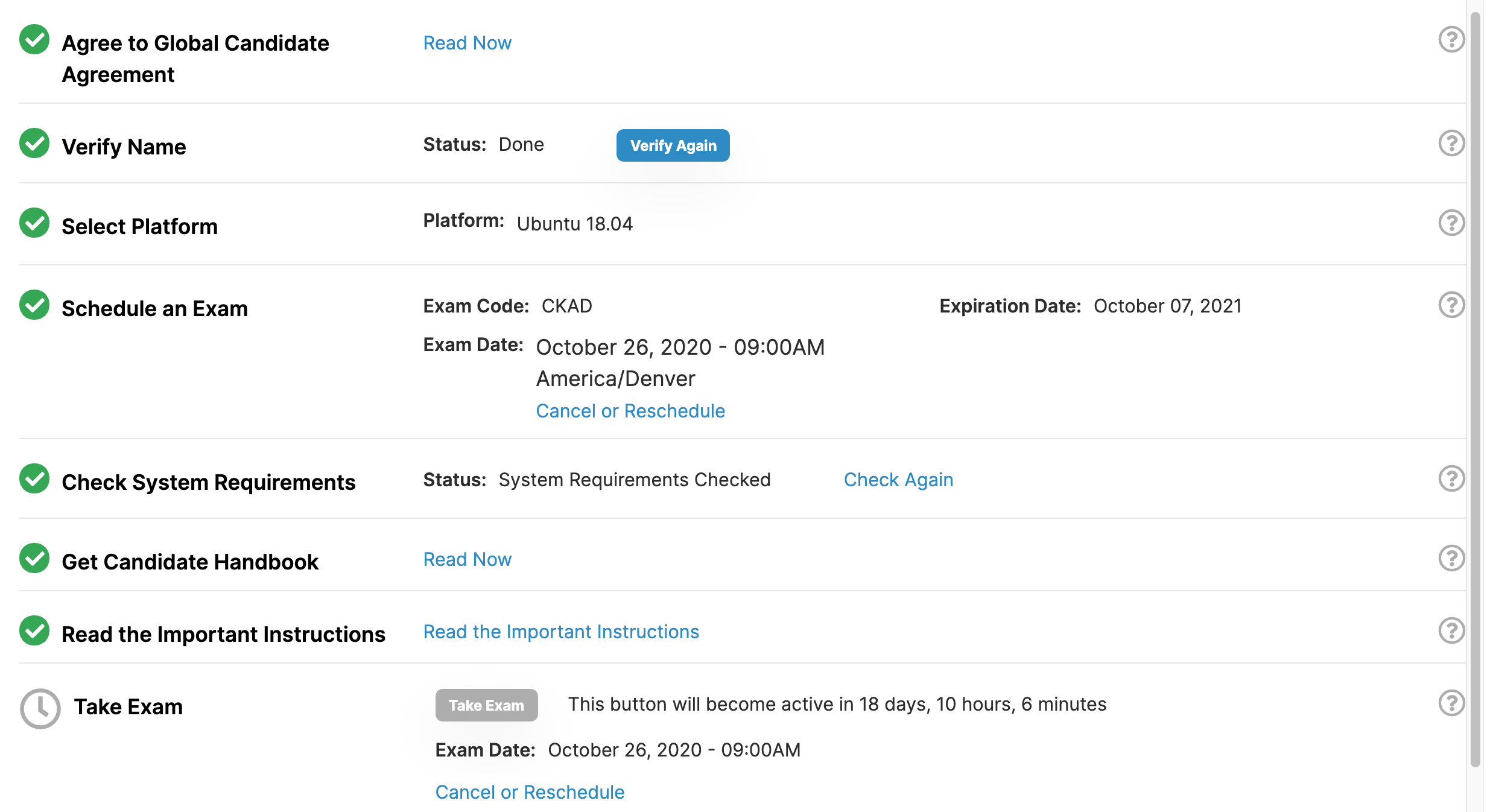

Sign up for exam

CNCF is part of the Linux Foundation, so…

-

Get an account (Linux Foundation credentials ) at https://identity.linuxfoundation.org. https://myprofile.linuxfoundation.org/

NOTE: It’s a non-profit organization, thus the “.org”.

https://docs.linuxfoundation.org/tc-docs/certification/lf-candidate-handbook

https://docs.linuxfoundation.org/tc-docs/certification/faq-cka-ckad-cks

https://docs.linuxfoundation.org/tc-docs/certification/tips-cka-and-ckad

-

Login to linuxfoundation.org and join as a member for a $100 discount toward certifications.

-

Go to https://training.linuxfoundation.org/linux-courses/system-administration-training/kubernetes-fundamentals and pay for the $300 exam plus $199 more if you want to take their class.

Alternately, if you have a Registration code: https://trainingportal.linuxfoundation.org/redeem

-

Find dates and times when you’re in a quiet private indoor place where no one else (co-workers) are near.

Select a date when your mental and physical are in peak Biorythm

- Open a Chrome browser.

-

Use your Linux Foundation credentials to create an account at

- Select the date, your time zone. The website is incredibly slow.

-

Click the date again in orange. Click the time range.

-

[Terraform] Install the Chrome extension used to take exams, verified during exam scheduling.

Click the green “I agree”, then “Confirm Reservation”.

-

Pick a date when your Biorythms are positive on Intellectual and Physical, not hitting bottom or crossing from positive to negative:

-

Sign-in at examslocal.com. For “Sponsor and exam”, type one of the following:

- Linux Foundation : Certified Kubernetes Application Developer (CKAD) - English

- Linux Foundation : Certified Kubernetes Administrator (CKA) - English

- Linux Foundation : Certified Kubernetes Security (CKS) - English ?

Click on the list, then Click “Next”.

Click the buttons in the Checklist form and select time of exam until you get all green like this:

pod-overview Docs and tutorials from Kubernetes.io.

- Click “Or Sign In With” tab and select “Sign in for exams powered by the Linux Foundation”.

- Log in using your preferred account.

-

Click “Handbook link” to download it.

https://trainingportal.linuxfoundation.org/learn/course/certified-kubernetes-application-developer-ckad/exam/exam

-

PROTIP: You’ll need a corded (Logitech) webcam (not one built-in).

-

Setup your home computer to take the exam Compatibility Checka using the Chrome extension from “Innovative Exams”, which uses your laptop camera and microphone watching you use a virtual Ubuntu machine.

Sample exam questions

-

[Terraform] https://github.com/dgkanatsios/CKAD-exercises by Dimitris-Ilias Gkanatsios (of Microsoft) provides sample exercises to prepare for the CKAD exam.

-

Build speed

-

See 3 preview exam questions (with answer explained) after signing up at https://killer.sh (Killer Shell’s) CKA/CKAD Simulator provides close replica of the CKAD exam browser terminal with 20 CKAD and 25 CKA questions, at 29.99€ for two sessions (before 10% discount). Each session includes 36 hours of access to a cluster environment. They recommend you start the first session when you’re at the beginning of your CKA or CKAD journey.

-

Practice Keyboard shortcuts for Bash

-

Get proficient with the vim editor so that commands are intuitive (where you don’t have to pause for remembering how to do things in vim). Use the vimtutor program that usually gets installed when you install the normal vim/gvim package.

Bookmarks to docs

You are allowed one browser window: kubernetes.io, so:

-

PROTIP: Rather than typing from scratch, copy and paste commands from pages in Kubernetes.io.

Key sections of kubernetes.io are:

- Documentation

- Getting started

- Concepts

- Tasks

- Tutorials

- Reference

PROTIP: Create bookmarks in Chrome for links to ONLY kubernetes.io pages

Day before exam

- Arrange to sleep well the night before the exam.

-

If you travel, make sure you are living in the correct time zone.

- Move files from your Downloads and Documents folder.

- Clear your desk of papers, books. The proctor will be checking.

Before start of exam questions

- Take a shower. Put on a comfortable outfit. Brush your teeth. Make your bed.

- Eat proteins rather than carbohydrates and sugar before the exam.

-

Fill a clear bottle with no labels holding clear liquids (water). You’re not allowed to eat snacks.

-

Put on music that helps you concentrate. Turn it off before starting the test.

-

Start calm, not rushed. Be setup and be ready a half hour before the scheduled exam.

-

You may start your exam up to 15 minutes prior to your scheduled appointment time.

-

Have your ID out and ready to present to the video camera.

- The exam takes 180 minutes (3 hours), so before you start, go to the bathroom.

- To the proctor, show your ID and pan all the way around the room.

Start of exam

-

Enter website and click grey “Take Exam” button.

-

19 questions means less than 10 minutes per question. So avoid getting bogged down on the longer complex questions. First go through all the questions to answer the easiest ones first. Along the way, mark ones you want to go back to.

NOTE: Although there are 19 objectives, not all objectives planned are in every exam.

-

PROTIP: Avoid writing yaml by scratch.

PROTIP: Learn to search within kubernetes.io to copy code.

Generate a declarative yaml file from an imperative command:

-

Create yaml file as well as pod:

kubectl create -f file.pod.yaml --record

-

Paste to the Notpad available during the exam. Save commands there for copy rather than retype.

k -n pluto get all -o wide

-

Use kubectl explain.

-

Use help as in kubectl create configmap help .

-

Run a busybox web server to test retrieval of externally (using wget):

k run tmp --restart=Never --rm --image=busybox -i -- wget -O- 10.12.2.15

Notice “Never” is title cased.

-

Do not delete/remove what you have done! People/robots review your servers after the test.

After exam

- Create an Acclaim.com account to manage publicity across many certifications.

-

If you pass the exam (score above 66%), go to acclaim to get your digital badge to post on social media.

https://trainingportal.linuxfoundation.org/pages/exam-history

Social media communities

-

community.cncf.io/manly to sign up for access to the “cncf-community-manly” community for discussions about KubeCon takeaways.

-

https://discuss.kubernetes.io is where issues with each version of K8s are discussed.

- https://kubeweekly.io, a weekly podcast [subscribe!]

-