How to provide enterprises multi-dimensional offerings with no limits of scale, geography, and complexity

Overview

- 1. Automation for large numbers of people and data

- 2. Hierarchy of groups

- 3. Analytic breakdowns and summaries across several dimensions

- 4. Global scale

- 5. Time sensitive

- 6. Central yet distributed planning and approvals

- 7. High Security

- 8. Round-the-clock SOC using SIEM and SOAR

- 9. Quick and complete recovery from disasters

- Summary

To successfully cater to enterprises, software vendors must incorporate features enterprises want and need. A salesperson from a software company once actually said in a meeting (unconvincingly):

“We’re enterprise software because we have enterprise users”

The stinging rebuff was:

“I think your software will actually be an enterprise offering when those specific features are implemented.”

Here are the concerns enterprises have that need to be addressed by vendors:

- Automation for large numbers of people and data

- Hierarchy of groups

- Analytic breakdowns and summaries across several dimensions

- Global scale

- Time sensitive

- Central yet distributed planning and approvals

- High Security

- Round-the-clock SOC using SIEM, IDS, SOAR

- Quick and complete recovery from disasters

NOTE: Content here reflects my personal opinions and is not intended to represent any employer (past or present). “PROTIP:” here highlights information I haven’t seen elsewhere on the internet because it consists of hard-won, little-known but significant facts based on my personal research and experience.

1. Automation for large numbers of people and data

Many rank enterprises based on financial measures. The “Fortune 500” ranks the 500 largest US companies by revenue. The Standard and Poor’s “S&P 500” index tracks 500 large US-listed companies weighted by market capitalization (share price times the number of shares). There is also a Russell index of the top 3000 US stocks. There are also many large privately-held corporations. At the top of the list of the largest employers in the world is the U.S. Department of Defense at 3.2 million people, followed by China’s military, then Walmart at 2.2 million (1.3 million in the United States), about the same as Amazon.

Sheer scale means enterprise workers need batch (bulk) export, import, and processing. With large amounts of data, manual fixes are impractical.

Enterprise-level software needs to appropriately isolate data and customize workflows used by each individual worker AND be sophisticated enough to logically summarize trends for executives. Many managers are overwhelmed by dashboards requiring expert manual navigation.

All organizations need to simplify operations and increase agility (through innovation). Issues related to large companies in these industries:

- Healthcare - HIPAA regulations apply to their work. These organizations need infrastructure and operations that let doctors and nurses focus on delivering better patient outcomes, not paperwork.

- Retail - Squeezing profit from razor-thin margins, these enterprises need to build a better, distinctive shopping experience while keeping freedom of choice in technologies and applications. Widely distributed stores and warehouses need to be coordinated with logistics impacted by weather and other factors.

- Financial Organizations - Deal with highly regulated processes.

- Manufacturing - Industrial IoT, robots, and digital manufacturing initiatives across the enterprise aim to create smoother workflows and manage supply chain disruptions.

- Federal Agencies - Advancing innovation quickly despite bureaucracies that build up over time.

Can your enterprise app (and salespeople) cut through the bureaucracy causing waits for approvals?

To minimize support costs, self-service apps are a big deal.

Extensive testing is crucial to keep rework from being unsustainable.

Specific, actionable alerts are important for troubleshooting.

The more managers in an organization, the more complexity and variations will be requested. That means an explosion of divergent components, databases, and technologies which drive them.

2. Hierarchy of groups

To manage large amounts of data and people, enterprises group them in various ways, as in an organization chart.

Enterprises are more used to hierarchical groups which reflect the traditional organization chart of vice presidents above directors above managers, etc. Effective or not, all data needs to fit into such an arrangement. “Conway’s Law” was coined for the observation that systems tend to look like the organizational structure of the people building them.

So enterprise workers need to make complex queries of data in order to filter out irrelevant parts of the large organization. For example, Microsoft Azure provides KQL (Kusto Query Language) for querying logs, and the Azure CLI accepts JMESPath expressions to select specific values from within a sea of data.

Enterprise software needs to accommodate complex organizational structures, so its reports need to show an indented hierarchy rather than a mere flat list.
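To make the indented report concrete, here is a minimal sketch in Python. It assumes the organization is stored as (name, parent) pairs with no cycles; the `render_hierarchy` function and the sample `ORG` data are hypothetical and only for illustration.

```python
from collections import defaultdict


def render_hierarchy(rows, root=None, indent="  "):
    """Return report lines with each unit indented under its parent.

    rows is a list of (name, parent_name) pairs; parent_name is None at the top.
    Assumes the data contains no cycles.
    """
    children = defaultdict(list)
    for name, parent in rows:
        children[parent].append(name)

    lines = []

    def walk(parent, depth):
        # Visit siblings alphabetically so the report is stable between runs.
        for name in sorted(children[parent]):
            lines.append(f"{indent * depth}{name}")
            walk(name, depth + 1)

    walk(root, 0)
    return lines


# Hypothetical organization chart, for illustration only.
ORG = [
    ("CEO", None),
    ("VP Engineering", "CEO"),
    ("VP Sales", "CEO"),
    ("Director Platform", "VP Engineering"),
    ("Manager SRE", "Director Platform"),
]

if __name__ == "__main__":
    print("\n".join(render_hierarchy(ORG)))
```

The same parent-pointer layout works for any hierarchy (cost centers, locations, product lines), which is why it is a common starting point.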

To support innovation, additional ways to group people and data are needed. For example, each person may be in one or more projects, teams, locations, roles, and levels, and have several skills, certifications, security clearances, etc. Tags are often used, but require management, so dynamic assignment of groups based on various attributes is needed.
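One way to picture dynamic group assignment is as a set of rules evaluated against each person's current attributes. The sketch below is only an illustration: the group names, attribute keys, and the `groups_for` helper are all assumptions, not any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class Person:
    name: str
    attributes: dict = field(default_factory=dict)


# Each rule maps a group name to a predicate over a person's attributes.
# Group names and attribute keys are hypothetical.
GROUP_RULES = {
    "emea-staff": lambda a: a.get("location") in {"Berlin", "London"},
    "cleared-engineers": lambda a: a.get("role") == "engineer"
    and a.get("clearance") == "secret",
    "aws-certified": lambda a: "aws-sa" in a.get("certifications", ()),
}


def groups_for(person, rules=GROUP_RULES):
    """Compute membership from current attributes instead of hand-kept tags."""
    return sorted(
        group for group, matches in rules.items() if matches(person.attributes)
    )


if __name__ == "__main__":
    ana = Person("Ana", {"location": "Berlin", "role": "engineer", "clearance": "secret"})
    print(groups_for(ana))
    ana.attributes["location"] = "Austin"  # membership follows the attribute change
    print(groups_for(ana))
```

Because membership is computed rather than stored, nobody has to remember to remove a tag when a person changes location or role.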

Due to the greater complexity within enterprises, every field on enterprise data entry forms needs to be searchable. It’s not enough for vendors to simply provide a “Next” button for users to hunt for a value within a long list.

And one other thing: executives at enterprises are paid a lot of money so many of them expect to be treated with deference, like VIP rock stars in 3-Michelin star restaurants.

3. Analytic breakdowns and summaries across several dimensions

Since there are different people in each box in the hierarchy, each box in each hierarchy is likely to want its own set of reports with unique filters and visualizations with its own variations. Such reporting is needed on a daily, weekly, monthly, quarterly, and yearly basis as well as custom-defined periods within dimensions of time, location, and other values.
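As a rough sketch of what "the same data, broken down by period and by dimension" means in code, the example below groups records by a reporting period and one other dimension. The record layout, the `period_key` and `summarize` names, and the sample figures are assumptions made up for illustration.

```python
from collections import defaultdict
from datetime import date


def period_key(day, period):
    """Map a date to the label of the reporting period that contains it."""
    if period == "daily":
        return day.isoformat()
    if period == "weekly":
        iso_year, iso_week, _ = day.isocalendar()
        return f"{iso_year}-W{iso_week:02d}"
    if period == "monthly":
        return f"{day.year}-{day.month:02d}"
    if period == "quarterly":
        return f"{day.year}-Q{(day.month - 1) // 3 + 1}"
    if period == "yearly":
        return str(day.year)
    raise ValueError(f"unknown period: {period}")


def summarize(records, period, dimension):
    """Total the 'amount' of each record by (period label, dimension value)."""
    totals = defaultdict(float)
    for rec in records:
        totals[(period_key(rec["date"], period), rec[dimension])] += rec["amount"]
    return dict(totals)


# Hypothetical records, for illustration only.
SALES = [
    {"date": date(2024, 1, 15), "location": "EMEA", "amount": 100.0},
    {"date": date(2024, 2, 3), "location": "EMEA", "amount": 50.0},
    {"date": date(2024, 2, 20), "location": "APAC", "amount": 70.0},
    {"date": date(2024, 4, 1), "location": "EMEA", "amount": 30.0},
]

if __name__ == "__main__":
    for key, total in sorted(summarize(SALES, "quarterly", "location").items()):
        print(key, total)
```

Calling `summarize` with a different `period` or `dimension` gives each box in the hierarchy its own variation without new code.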

Results often need to have a financial component that meets cost accounting principles.

Because the size and complexity of enterprise organizations make decisions time-consuming to propagate, enterprises must strive to move from reactive to proactive to predictive.

So enterprise visualizations over time need to look ahead to identify trends rather than just looking backward.

Additionally, enterprise users and managers need to create their own reports and visualizations.

4. Global scale

Global operations mean that translations in various languages need to become available together when the product ships. That requires massive coordination.

When a large business goes down, a lot of money is lost. So to reduce recovery time when live databases falter, an enterprise would log-ship every single add or update across the sea to a duplicate hot site ready to take over. However, several countries (such as Germany, Singapore, etc.) mandate that their citizens’ data not leave their sovereign territory.

Background knowledge about international commerce is important for work in enterprises.

An enterprise that operates only in one country may be satisfied with redundancy from running two or three separate cloud Availability Zones within a single region. This has lower cost because cloud vendors charge for network traffic between regions. To save even more money, some enterprises contract with “warm” data centers which wait until a disaster to install servers, or “cold” centers which don’t have communications wired. This strategy would extend the time to recovery.

The formation of a CPPT (Continuity Planning Project Team) and setup of an EOC (Emergency Operations Center) are defined by ISO 27001 Section 14, ISO 27002, NIST 800-34, NFPA 1600 & 1620, and HIPAA. These specify that enterprises have written DRP (Disaster Recovery Procedures) for emergency triage and management of information technology based on normal Business Management Procedures. DRP is the technical extension of longer-term strategic Risk Assessments and the Business Continuity Plan (BCP) for the business as a whole, to ensure immediate survivability.

5. Time sensitive

Enterprise developers, especially, need tools to efficiently wade through massive amounts of data and complex code while they are working on them in real time (rather than days or weeks after they have moved on to other issues).

Enterprises duplicate data and workloads to several regions around the world within a CDN (Content Delivery Network) so that workers and users in South Africa and New Zealand can access systems as quickly as users in Virginia.

Complying with some standards requires that redundant capabilities be proven dependable, regularly – such as every year, when the RTA (Recovery Time Actual) statistic is captured. That’s to identify whether the organization takes too long to activate restore or is too clumsy with restore procedures.

Systems that are not set up for instant recovery nevertheless need to “fail safe” to a secure state rather than to a hackable state.

Sharing “cloud-scale” computing, storage, and network facilities in clouds enables use of the “blue/green” strategy for deployment, which creates (in a cloud) a complete replacement set of components for “canary” and capacity testing before a full switch to production.

Quick response requires automation for building and testing.

Each server needs to be individually added or removed automatically within a “cluster” (within Kubernetes). That requires IaC (Infrastructure as Code such as Terraform) which defines all the components (compute, storage, and networking) in version-controlled text files.

6. Central yet distributed planning and approvals

Managers in enterprises desire to be able to centrally define policies (what is allowed or denied) distributed automatically to control everything. Software vendors are enabling a fundamental shift in governance where policy enforcement decisions occur instantly in automated pipelines rather than by manual inspections and meetings holding up progress.
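A minimal sketch of that idea: policies are defined once, centrally, as data plus small checks, and a pipeline step evaluates every resource against them. Everything here (the policy names, the resource fields, the `evaluate` and `gate` functions) is hypothetical and is not the interface of any real policy engine.

```python
# Centrally defined policies: (name, check) pairs where check returns True
# when the resource complies. Names and resource fields are hypothetical.
POLICIES = [
    (
        "storage-must-be-encrypted",
        lambda r: r.get("type") != "bucket" or r.get("encrypted") is True,
    ),
    ("no-public-access", lambda r: not r.get("public", False)),
    ("must-have-cost-center-tag", lambda r: "cost_center" in r.get("tags", {})),
]


def evaluate(resource, policies=POLICIES):
    """Return the names of the policies this resource violates."""
    return [name for name, check in policies if not check(resource)]


def gate(resources, policies=POLICIES):
    """Return {resource name: violations} for every non-compliant resource.

    A pipeline would block the deployment when this dict is not empty.
    """
    failures = {}
    for resource in resources:
        violated = evaluate(resource, policies)
        if violated:
            failures[resource["name"]] = violated
    return failures


if __name__ == "__main__":
    planned = [
        {"name": "logs", "type": "bucket", "encrypted": True, "tags": {"cost_center": "42"}},
        {"name": "scratch", "type": "bucket", "encrypted": False, "public": True, "tags": {}},
    ]
    print(gate(planned))
```

The decision happens in the pipeline in milliseconds, and the policy list itself can be version-controlled and reviewed like any other code.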

Many enterprises have tried to set up PMOs, hire outside consultants, and install Agile Scrum Masters to overcome the headwind from entrenched “fiefdoms” of independent departments for compute, storage, networking, etc. which may not feel compelled to collaborate with others. Additionally, enterprises require Master Services Agreements (MSA) with vendors, managed by central purchasing and Security departments which sometimes operate on their own timelines.

So work in enterprises requires social intelligence (self-control and guile) to deal with intricate corporate politics. That’s one reason why enterprise salespeople and technicians fetch top dollar.

The other reason for a shortage of enterprise specialists is the entrenchment of “separation of duties” and “least privilege” principles. Very few are able to cross fiefdoms to build the multi-tool and multi-disciplinary skills needed today.

To achieve competitive speed, many HR, marketing, and other “user” departments need to go outside on-premise data centers by running “Shadow IT” operations using enterprise software such as Salesforce, AWS, Microsoft, GCP, and others. Enlightened enterprise software vendors provide a way to get licenses using a personal credit card because it is sometimes necessary to bridge the gap to ultimately enable the bottom-up achievement of enterprise agility objectives.

The good news today is that individuals and small businesses can now use the same core cloud infrastructure (at AWS, Azure, GCP, etc.). However, many software companies make the bulk of their profit on additional-charge enterprise-level subscriptions. Such features are usually not free, even for a temporary amount of time.

Vendor flexibility is especially important if competitors have similar features. Being able to run competing products in parallel in near-production mode is often the only effective way to truly evaluate the actual value between similar products. BTW, this is why it is often counter-productive for vendors to artificially limit evaluation periods to a mere two weeks. Many such vendors are eliminated prematurely because evaluation periods are usually much longer due to organizational complexities.

The trend is for enterprises to migrate from on-premise data centers to the cloud – many on multiple clouds. That means that enterprises need to be able to prove their software can run on different clouds. Hyperscalers (such as AWS, Azure, GCP, etc.) provide innovation in AI/ML, low-code coding, datalakes, IoT, etc.

This diagram (adapted from a sample recycling app by Neal Ford) illustrates the complexity of tradeoffs in enterprise component design.

On the lower right, the instant and wide availability of storage housing modern Parquet-format files within delta lakes (Databricks, Snowflake, Microsoft Fabric, etc.) combines streaming and all models of databases (SQL, NoSQL, Graph, etc.). To cleanse and prepare data for AI/ML and analytics, instead of ETL processing which copies analytic data into another datastore, ELT processing occurs within a “Medallion” arrangement within a single datastore.

That modern way involves synchronization with a central data lake using Change Data Capture (CDC), which forwards only the rows that changed rather than the whole data set, together with Slowly Changing Dimension (SCD) modeling, which records how attribute values change over time.
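To illustrate the "only changes" idea, the sketch below applies a stream of change events to a target keyed by primary key. The event shape (`op`, `key`, `row`) and the `apply_changes` function are assumptions for illustration; real CDC tools each define their own event formats.

```python
def apply_changes(target, changes):
    """Apply ordered change events to a dict keyed by primary key.

    Each event is a dict with 'op' ('insert', 'update', or 'delete'),
    'key', and, for inserts and updates, a 'row' holding only the
    columns that changed. Returns the number of events applied.
    """
    applied = 0
    for event in changes:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Merge changed columns over whatever is already stored.
            target[key] = {**target.get(key, {}), **event["row"]}
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown op: {op}")
        applied += 1
    return applied


if __name__ == "__main__":
    lake = {1: {"name": "Ana", "city": "Berlin"}}
    events = [
        {"op": "update", "key": 1, "row": {"city": "Austin"}},
        {"op": "insert", "key": 2, "row": {"name": "Bo", "city": "Oslo"}},
        {"op": "delete", "key": 2},
    ]
    apply_changes(lake, events)
    print(lake)
```

Three small events cross the network here instead of a full copy of the table, which is the saving the paragraph above describes.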

These transitions up-end the traditional approach of extracting filtered datasets from a central database for movement to remote locations. Enterprises now see moving copies of data to remote locations as more expensive, less secure, and less flexible than keeping a central data store, even though local copies may be a bit faster for users. A central store also better supports those who roam among locations. Refactoring is quicker as well, because a centralized data store uses “schema on read” rather than “schema on write”.

That means more centralized control of data governance, with more attention to access controls.

7. High Security

Because enterprises are tempting targets, defensive security is important. So every piece of software and every service needs vetting – a thankless, tedious endeavor. So many use specialist consultants and whistic.com, which pool security questionnaires and answers to reduce duplicate work.

When working with cloud vendors, many enterprises prefer to generate their own customer-owned keys for the encryption of data at rest instead of having cloud vendors provide the keys.

Many enterprises need to use strong encryption on data. In transit, mTLS (mutual TLS) uses certificates to authenticate both sides of a connection and encrypt what passes between them. At rest on Windows, BitLocker encrypts whole hard drives. On Linux, MCrypt, PGP, TrueCrypt, and others are options. If an encrypted hard drive is removed from a machine, the data on it would require many years of brute-force attacks to crack. Faster computers (and quantum computing) may shorten that time, so very strong algorithms are needed. That is also why frequent re-authentication is required, and why, instead of having static passwords waiting to be hacked, enterprises are moving to dynamic passwords and user accounts which are generated for each session and then destroyed after a short time. The best password storage is no password at all.
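The "generated for each session and then destroyed" idea can be sketched as a credential with a built-in expiry. This is a toy illustration under stated assumptions: the `SessionCredentials` class, its 15-minute default, and keeping tokens in memory are all hypothetical choices, not how any particular secrets manager works.

```python
import secrets
import time


class SessionCredentials:
    """Issue random per-session tokens that stop working after a short time."""

    def __init__(self, ttl_seconds=900, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._expires = {}   # token -> expiry time

    def issue(self):
        """Create a new unguessable token valid for ttl_seconds."""
        token = secrets.token_urlsafe(32)
        self._expires[token] = self._clock() + self._ttl
        return token

    def is_valid(self, token):
        """Return True only for known tokens that have not yet expired."""
        expiry = self._expires.get(token)
        if expiry is None:
            return False
        if self._clock() >= expiry:
            del self._expires[token]  # destroy the credential once expired
            return False
        return True

    def revoke(self, token):
        """Destroy a credential early, for example at logout."""
        self._expires.pop(token, None)


if __name__ == "__main__":
    store = SessionCredentials(ttl_seconds=900)
    token = store.issue()
    print(store.is_valid(token))
```

A stolen token of this kind is only useful for minutes, which is the point of replacing static passwords.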

Enterprises usually provide their users a VPN (Virtual Private Network) to create an encryption-protected tunnel through the public internet to defeat man-in-the-middle attacks. Enterprise editions of the Windows 10 operating system enable “DirectAccess”, which keeps a secured connection to the corporate network in place all the time. Enterprise editions can also block apps from being installed.

Enterprises want vendors to provide Long-Term Servicing Channel (LTSC) versions which contain just security updates but no new features – to maintain consistency of training and support materials used.

The most sophisticated edition of Windows 10 – Enterprise E5 – adds Windows Defender ATP (Advanced Threat Protection) which runs virus scans and details the machine’s security posture in sophisticated visualizations. It also provides a “sandbox” to run suspicious programs in isolation.

Enterprise “DevSecOps” tooling includes scanner programs to identify:

- secrets exposed in open-source code (using GitLeaks, etc.)

- security vulnerabilities in Terraform Infrastructure as Code (using Policy as Code TFSec, Checkov, etc.)

- OWASP vulnerabilities in custom code (using Veracode, Fortify, etc.)

- referenced open-source packages known to have vulnerabilities listed as CVEs in the US NIST National Vulnerability Database (using XRay, Sonatype, etc.)

- malicious code within packages (identified by socket.dev)
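To give a feel for the first kind of scanner in the list above, here is a deliberately tiny sketch of pattern-based secret detection. The three patterns and the `scan_text` function are illustrative assumptions; real scanners use far larger rule sets plus entropy checks, and this is not how any named tool is implemented.

```python
import re

# A few illustrative patterns; real tools ship hundreds of rules.
PATTERNS = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private-key-header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "hardcoded-password": re.compile(
        r"(?i)\bpassword\s*=\s*['\"][^'\"]{4,}['\"]"
    ),
}


def scan_text(text):
    """Return (line number, rule name) for each line that matches a pattern."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


# The key below is the placeholder value used in AWS documentation.
SAMPLE = '''region = "us-east-1"
aws_key = "AKIAIOSFODNN7EXAMPLE"
password = "hunter2hunter2"
'''

if __name__ == "__main__":
    for lineno, rule in scan_text(SAMPLE):
        print(f"line {lineno}: {rule}")
```

Running a check like this before every commit is cheap, which is why such scanners usually sit at the very front of a DevSecOps pipeline.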

Higher security means more granularity in controlling which specific users can access which specific data, based on each person’s role and group/department membership. New technologies enable Dynamic Attribute-Based Access Control (DABAC), which uses contextual attributes (such as time of day, location, etc.) to determine whether a user can access data.
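A small sketch shows how contextual attributes enter the decision. The attribute names, the 07:00 to 19:00 window, and the `can_access` function are assumptions chosen for illustration only.

```python
from datetime import time


def can_access(user, resource, context):
    """Decide access from user, resource, and contextual attributes together."""
    same_department = user["department"] == resource["department"]
    cleared = user["clearance"] >= resource["sensitivity"]
    # Contextual attributes: these can change between two requests
    # by the same user for the same data.
    in_hours = time(7, 0) <= context["local_time"] <= time(19, 0)
    allowed_location = context["country"] in resource["allowed_countries"]
    return same_department and cleared and in_hours and allowed_location


if __name__ == "__main__":
    user = {"department": "finance", "clearance": 3}
    ledger = {
        "department": "finance",
        "sensitivity": 2,
        "allowed_countries": {"DE", "US"},
    }
    print(can_access(user, ledger, {"local_time": time(10, 30), "country": "DE"}))
    print(can_access(user, ledger, {"local_time": time(23, 0), "country": "DE"}))
```

The same user is allowed in the morning and refused late at night, something a purely role-based check cannot express.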

Need for security has led enterprises to segment access in different ways.

8. Round-the-clock SOC using SIEM and SOAR

Enterprise support typically has SLAs (Service Level Agreements) with quicker response times (at higher cost) than other tiers. Both Azure and AWS refund 100% of their billing on periods that do not achieve at least 95% availability (which would allow about 18 days of downtime a year). Achieving 99.99% (down less than an hour per year) requires self-diagnosing and self-healing.
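The downtime figures behind those percentages are simple arithmetic, sketched here assuming a 365-day year. The function name is just for illustration.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, assuming a non-leap year


def allowed_downtime_hours(availability_percent):
    """Hours per year a service may be down and still meet the percentage."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


if __name__ == "__main__":
    for pct in (95, 99, 99.9, 99.99):
        hours = allowed_downtime_hours(pct)
        print(f"{pct}% -> {hours:.2f} hours/year ({hours / 24:.2f} days)")
    # 95% allows roughly 438 hours (about 18.25 days) per year.
    # 99.99% allows roughly 0.88 hours (about 52.6 minutes) per year.
```

Each extra "nine" cuts the allowance by a factor of ten, which is why the jump to 99.99% leaves no room for manual diagnosis.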

Most large enterprises operate a SOC (Security Operations Center) around the clock, every day of the year, to quickly respond to trouble detected among logs gathered from the many software programs the organization runs. IDS (Intrusion Detection Systems) repeatedly analyze files to detect indicators of compromise.

The large number of people and data in enterprises means that automated tools are needed to identify and respond to threats.

Such utilities need developers to configure each application built to send logs and metrics from all machines into a SIEM (Security Information and Event Management) system such as Splunk, Azure Sentinel, etc. Such systems commonly maintain several times more data than the systems themselves. Machine Learning techniques and advanced statistical analysis are becoming common with such systems. All that enables the SOC team to correlate events across the enterprise to detect intrusion and exploits.

Logs, especially, are also used by external auditors to determine actual compliance with policies. Those with access to SIEM data can elicit actual, detailed, real-time insights on inflows and outflows between different parts of the organization and systems – a magical tool to identify bottlenecks and predict trends. We look forward to 3D dynamic projections in Mixed reality glasses from Microsoft, Apple, Facebook, etc.

The complexity of SIEM systems requires utilities (such as Cardinal) to audit whether detection mechanisms are actually responding to various threats.

Data to track the Security Posture of the whole Enterprise means obtaining “metadata” (data about data). Enterprise apps need to provide central ServiceNow systems with a detailed history of auditable user activities linked to specific charge codes and approval events. This is especially important for financial and healthcare organizations. This means that software vendors need to provide an additional overlay of manual procedures into every workflow. For example, in GitHub, when someone creates a new repository (since GitHub doesn’t track charge codes), enterprise Security may want the user to exit out temporarily to another system to specify that charge code or request permission associated with the request. Detailed metadata and audit logs enable enterprises to perform forensics (after the fact) to identify who did what and when, which is needed for legal proceedings.

9. Quick and complete recovery from disasters

Many legacy applications were created when hardware capacity was static. It took months to obtain additional capacity. Even with over-bought capacity, such systems were designed to fail when overwhelmed.

The use of shared public clouds enables enterprises to use High Availability (HA) features, which means running simultaneously in multiple locations. Such operations require real-time coordination of data created across multiple sites.

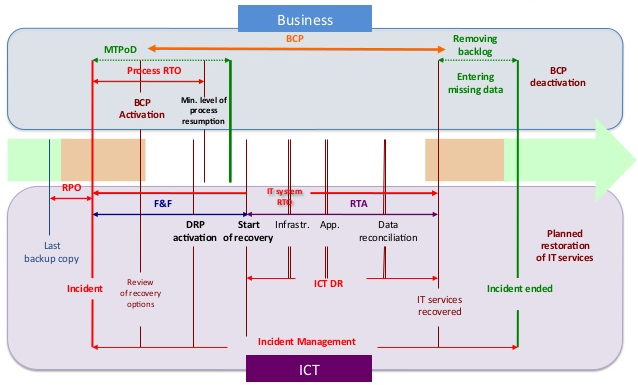

The timeline above illustrates the complexity ignored by other diagrams on the same topic, especially the interplay between customer-facing business teams and ICT (Information and Communication Technology) organizations.

The success of a BCP (Business Continuity Plan) for Continuity of Operations (COOP) depends on meeting, for each incident, the MTPoD (Maximum Tolerable Period of Disruption), aka MTD (Maximum Tolerable Downtime), to reach the minimal level of business process resumption. MTD is the maximum number of hours an organization has to recover from a disaster before it passes the point of no return.

RTO (Recovery Time Objective) is the maximum number of hours an organization has to recover from a disaster, without suffering too much damage.

RPO (Recovery Point Objective) refers to how far back to recover. This should be defined based on what processes can achieve. An organization that takes backups weekly stands to lose up to a week of data. Thus, enterprises typically set up their databases to perform “log shipping” where individual changes to each database are forwarded to keep a mirror database on standby in another region.

All these are ideally defined before a disaster (such as receiving a ransomware notice).

Notice in that green line a possible disconnect between the two organizations’ measurements? The technical definitions of the measurable “Start of recovery” and “Incident ended” points can be very different due to manual processes. What is the DRP (Disaster Recovery Plan) for business personnel? How do they participate and coordinate during F&F (Fail and Fix) events?

Do a dry run to actually restore from the last (most recent) backup copy to measure whether the RTA (Recovery Time Actual) meets the wishful RTO (Recovery Time Objective) for how long recovery takes.

In a dry run of systems going down suddenly, how much data was actually lost compared to the RPO (Recovery Point Objective)? An organization that takes incremental backups once a day cannot promise an RPO better than 24 hours, since any data processed after the last backup would be lost. The RTO, in turn, needs to include time to run and verify restores from backups.
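The two comparisons a dry run produces can be written down in a few lines. This is a sketch under assumptions: the `evaluate_drill` function, its parameter names, and the sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta


def evaluate_drill(outage_start, service_restored, last_recoverable_write, rto, rpo):
    """Compare what a recovery drill actually achieved against its objectives."""
    rta = service_restored - outage_start                 # Recovery Time Actual
    data_loss_window = outage_start - last_recoverable_write
    return {
        "rta": rta,
        "meets_rto": rta <= rto,
        "data_loss_window": data_loss_window,
        "meets_rpo": data_loss_window <= rpo,
    }


if __name__ == "__main__":
    # Hypothetical drill: nightly backup at 02:00, outage at 10:00,
    # service verified as restored at 15:30.
    result = evaluate_drill(
        outage_start=datetime(2024, 3, 1, 10, 0),
        service_restored=datetime(2024, 3, 1, 15, 30),
        last_recoverable_write=datetime(2024, 3, 1, 2, 0),
        rto=timedelta(hours=4),
        rpo=timedelta(hours=24),
    )
    for name, value in result.items():
        print(name, value)
```

In this made-up drill the data loss is within the objective but the recovery time is not, so the restore procedure, rather than the backup schedule, is what needs work.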

SnowflakeDB and Microsoft’s CosmosDB send database changes continuously to several regions so data is not lost if one region goes down. Users of the global service can choose to wait for confirmation on every transaction or continue without confirmation by assuming “eventual consistency”.

Summary

So here is what makes software enterprise-worthy:

- Automation for large numbers of people and data

- Hierarchy of groups

- Analytic breakdowns and summaries across several dimensions

- Global scale

- Time sensitive

- Central yet distributed planning and approvals

- High Security

- Round-the-clock SOC using SIEM, IDS, SOAR

- Quick and complete recovery from disasters

Incorporating the above is not just for enterprises, but any organization that wants to be prepared to become massive with fewer issues. Building systems that inherently address the above enterprise concerns would save vendors and implementers the embarrassment of having to add them at the request of end-users. And it’s a lot easier to incorporate enterprise features during development rather than as an afterthought.

Let me know your thoughts.

// Wilson Mar