How to quickly learn and use the cloud-native Snowflake SQL database’s AI/ML features on top of AWS, Azure, GCP clouds

Overview

- Snowflake’s Value-Add

- Cloud Data Lakehouses

- Sign-up for a live account

- Use cases

- Snowflake’s Competition

- Concerns

- Architectural Innovations

- Snowflake the company

- Host Names (URLs)

- SnowPro Certifications

- Video Training

- Community

- Automation

- Language Support

- Futures

- Video Tutorials

- More about databases

The scene is in snow because Snowflake founders are fond of snow.

The photo at the top shows a large lake house because this article is a deep-dive hands-on approach to learning and using Snowflake, which provides what the IT (Information Technology) world calls a “Data Lakehouse”. That analogy takes some explanation.

NOTE: Content here are my personal opinions, and not intended to represent any employer (past or present). “PROTIP:” here highlight information I haven’t seen elsewhere on the internet because it is hard-won, little-know but significant facts based on my personal research and experience.

- Value Add

- Competition

- Architecture

- Host Names (URLs)

- Occupations for certification

- Automation: CLI, Python APIs

- Futures Roadmap

- Resources

- More about databases

Snowflake’s Value-Add

In the 1980s, IBM defined the SQL (Structured Query Language) to run on their corporate mainframes. Memory and disk space were expensive and required months to obtain. To ensure that servers don’t crash due to lack of space or not enough CPU, corporations hired DBAs (DataBase Administrators) as a protective bureaucracy around databases, blocking application development teams. Frustrating.

So Oracle and Microsoft made SQL run on early Personal Computers as well. MS-SQL and open-source alternatives MySQL, MariaDB, and PostgreSQL enabled the growth of database-backed websites on the internet.

In the 90’s, SQL-based relational databases were at the heart of “Big Data” made big by Hadoop and Apache Spark software. They enabled the expansion of “Enterprise Data Warehouses” (EDWs) designed for Analytical Processing (OLAP) by summarizing data refreshed from source systems.

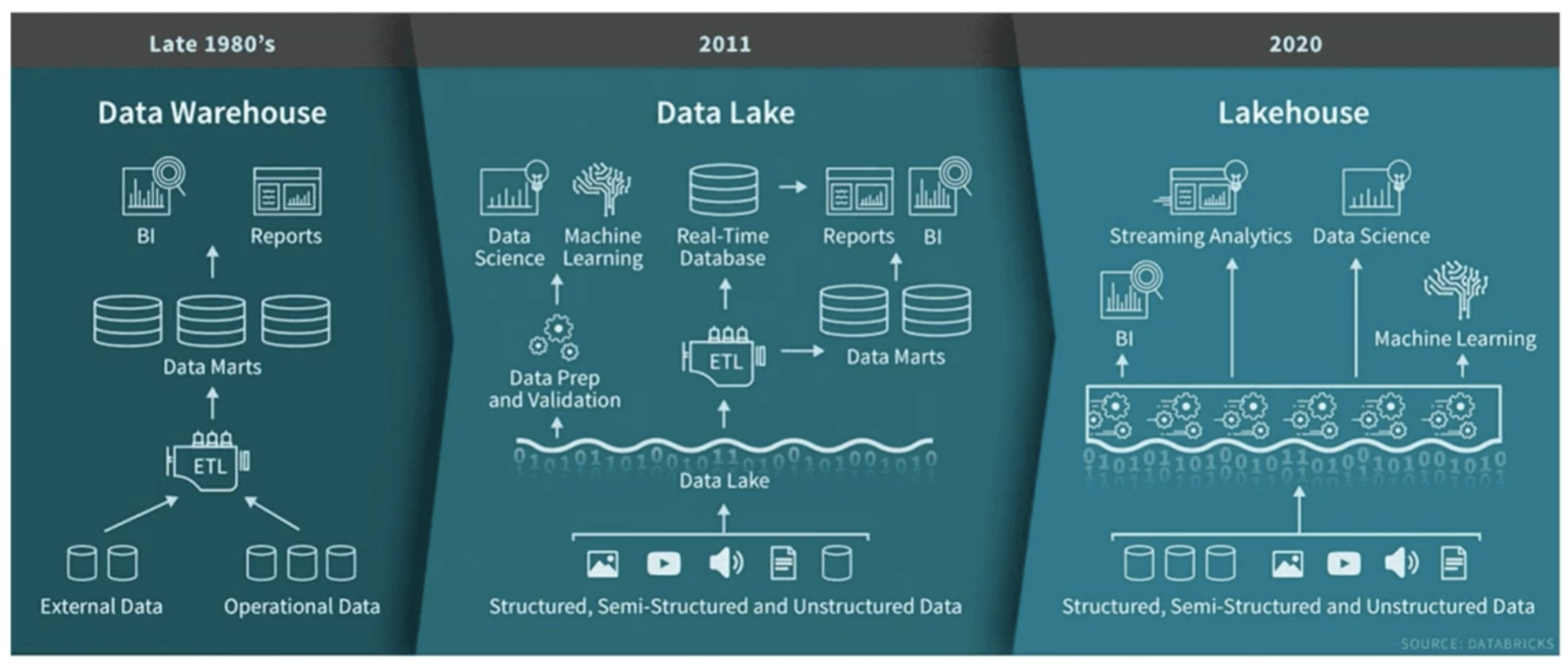

VIDEO: A “Data Lake” is a centralized collection of different data warehouses.

A “Data Mart” makes data searchable.

Memory limitations had resulted in OLTP (Online Transactional Processing) data update features being removed from data warehouses providing “write-once, read-many” Analytics Processing (OLAP) containing historical (denormalized) summary data.

And expensive disk space required that some data be thrown away as batch-mode “ETL” programs that load data into databases that have a rigid schema. Data warehouses also typically didn’t support semi-structured or unstructured data types.

VIDEO: A “Data Lakehouse” is designed to capture raw data (structured, semi-structured, unstructured) for integration with several Enterprise Data Warehouses, for “data science” AI/ML data processing.

PROTIP: Through acquisitions and internal R&D, Snowflake has quickly incorporated AI (Artificial Intelligence) in its offerings (Generative AI using LLMs).

“Data Lakehouses” provide an intelligent metadata layer that acts as a sort of middleman between unstructured data and data users.

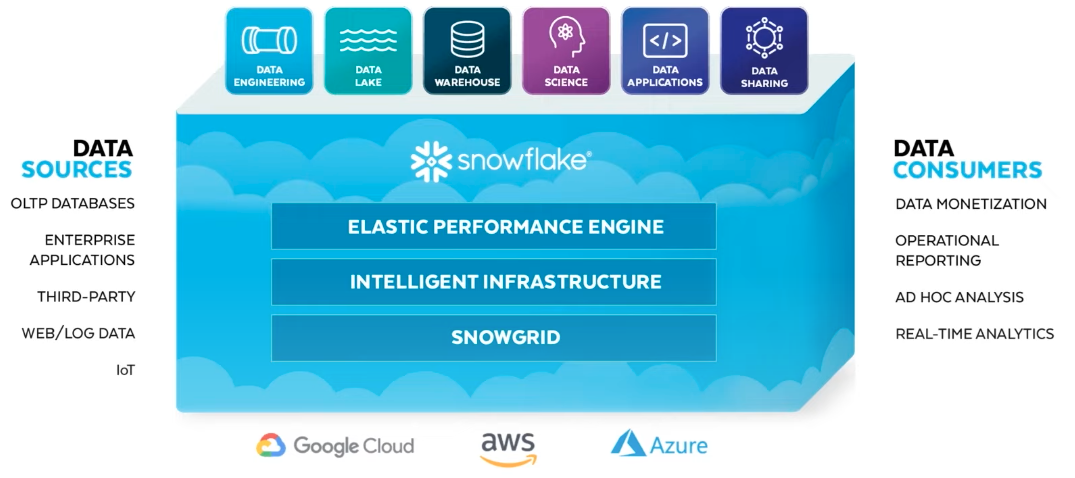

Snowflake is that middleman. Snowflake’s offers a cloud-native database – designed from the ground up to be a “Serverless” cloud-based database Data-Warehouse-as-a-Service” (DWaaS) on hardware and networks provided by public cloud service providers (CSPs AWS, Azure, GCP):

- on Amazon S3 storage since 2014

- on Microsoft Azure Blob Storage</a> (Data Lake Gen2) since 2018

- on Google Cloud Platform (GCP) since 2019

- QUESTION: Apache Iceberg? Salesforce? SAP?

-

View a demo to see its GUI for yourself:

https://www.snowflake.com/en/resources/video/data-lake-live-demo-emea/

-

If you don’t want to wait for a live session, select EMEA ON-demand demo:

Use of public clouds enables Snowflake to overcome limitations of limited memory and CPU.

PROTIP: By also putting an easy-to-use GUI in front use of public clouds, developers can now create databases, bypassing the DBA bureaucracy necessary before.

Snowflake’s GUI and database design also enables its data warehouse to be updated. Thus:

Data Lake + Data Warehouse = Data Lakehouse

Salesforce, also a SaaS company in San Franciso, and Warren Buffett’s Berkshire Hathaway each bought $250 million in Snowflake stock in private placements following the IPO. VIDEO: How Snowflake Broke Warren Buffet’s Lifelong Rule of not investing in tech.

Cloud Data Lakehouses

Cloud Data Lakehouses include:

- Snowflake

- AWS Redshift (first in market share)

- Google BigQuery

- Databricks Delta Lake

- Azure Synapse (based on Spark)

In VIDEO: Databricks v. Snowflake, Seattle Data Guy notes how Databricks starting off in Berkely using Jupyter Notebooks.

Scaling

Snowflake can be set to autoscale across their “t-shirt sizes” of credit usage per hour:

- 1 = X-Small

- 2 = Small

- 4 = Medium

- 8 = Large

- 16 = X-Large

- 32 = 2X-Large

- 64 = 3X-Large

- 128 = 4X-Large

- 256 = 5X-Large

- 512 = 6X-Large

References:

- What’s the difference?

- https://www.fivetran.com/blog/warehouse-benchmark concludes that performance is similar among Cloud Data Warehouses.

-

Snowflake’s corporate landing page is at:

Snowflake’s Data Lake House NOT open-sourced

Snowflake uses a columnar store (C-store) with a unique and proprietary SQL engine. A “columnar” database is structured to efficiently read specific fields across various rows, but without doing the I/O to read the entire row just to get to those fields.

Snowflake combines traditional “Data Lake” and “Data Warehouse” into a Data Lake House – a structure that’s useful for AI/ML (Machine Learning) as well as traditional analytics.

Snowflake can operate like Oracle, MySQL, Postgres, Teradata, or other traditional database. Connectors enable the interchange of data between Snowflake and Microsoft’s Power Platform, Qlik, etc.

References:

- https://www.youtube.com/watch?v=Muyq3qtHzzo&list=PL7_h0bRfL52pOai_ih3HSu2WCgPXmNHzH by Bryan Cafferky

- https://www.youtube.com/watch?v=FAnR4R5JMM8 by Pragmatic Works

- https://www.youtube.com/watch?v=-bSkREem8dM by Alex the Analyst

- https://www.youtube.com/watch?v=FxpRL0m9BcA by Seattle Data Guy

- https://www.youtube.com/watch?v=cnCIoNDaGvg by Bernard Marr

- https://www.youtube.com/watch?v=WgIbvkyY4mI

- VIDEO

Sign-up for a live account

-

Enroll in the “Badge 1: Data Warehousing Workshop” at

https://learn.snowflake.com/courses/course-v1:snowflake+ESS_DWW_101+2021/about

-

Snowflake offers 30-day trials, so many simply sign up using a different name each month (such as JOHNDOE_231230 for the end date.

They use automation to populate each new account.

PROTIP: Create a different browser profile associated with each account.

-

Create an account to begin a 30-day trial use:

6 Predefined Roles

ACCOUNTADMIN is the default role for new Trial Accounts.

In productive use, switch to SYSADMIN because it has less permissions, for a small “blast radius” in case of account compromise.

The Snowflake has an ORGADMIN predefined role used to generate new accounts and tie multiple Snowflake accounts together.

PUBLIC, SECURITYADMIN, USERADMIN

There is an inheritance

CSP Region choice

Snowflake cannot select all regions of each CSP.

PROTIP: To meet data sovereignty laws in the EU, Singapore, etc., Snowflake customers are limited to using specific CSP data center regions. These regions are what Snowflake makes available:

Theater Azure AWS GCP Canada Canada (Central) Canada Central (Toronto) - USA US East (North Virginia, Ohio)

US West (Oregon)East US 2 (Virginia)

Centrl US (Iowa)

South Central US (Texas)

West US 2 (Washington)US East 4 (N. Virginia)

US Central (Iowa)South America Sao Paulo - - EU North Europe (Ireland)

UK South (London)

West Europe (Netherlands)

Switzerland North (Zurich)Europe West (London)

Europe West (Netherlands)EMEA (Ireland, London, Paris, Frankfurt, Stockholm) UAE North (Dubai) - Asia Asia Pacific (Tokyo, Seoul, Osaka, Mumbai, Singapore, Jakarta, Sydney) Japan East (Tokyo)

Central India (Pune)

Southeast Asia (Singapore)

Australia East (New South Wales)- PROTIP: Instead of leaving data in a single CSP (such as AWS), Snowflake provides the basis for multi-cloud operation, to avoid CSP vendor lock-in.

Because data is valuable, a lot of barriers have been put around Oracle databases in on-prem data centers. This creates “data silos” which limits creative uses. But cloud-based databases can be accessed anywhere in the world, so can be used more creatively. Clouds can be used to enable teamwork around governed data.

-

??? Turn off after use.

-

Setup an alert when charges reach a threshold.

-

Set a way to turn off usage automatically.

Decoupled compute & storage

PROTIP: Unlike traditional databases (and "monolithic" database machines such as Terradata) which run continuously and racking up charge, Snowflake customers don't pay for compute charges unless jobs are being run. This is because Snowflake has <strong>decoupled compute from storage</strong>.

This decoupling also means that you're free to amass data without having to buy larger machines.

Snowflake’s customers don’t see its proprietary compute layer running on commodity virtual machines (AWS, GCP or Azure). It’s rumored to have intelligent predicate pushdown + smart caching. Hybrid columnar system inspired by C-Store, MonetDB among others.

Snowflake’s data is stored in a hybrid columnar format (PAX) with aggressive metadata caching. Snowflake’s proprietary columnar object store format runs in-memory / SSD.

https://www.dremio.com/open-source/nessie/

Use cases

-

One step during sign-up is “what will you use Snowflake for?”

- Build an application or data product for customers

- Develop machine-learning model or another data science initiative

- Run analytics or connect to the visualization provider

- Migrate an existing data warehouse

- Create a data lake, data mesh, or federated data source

- Other

Snowflake’s “Data Marketplace” platform supports a range of use cases, including data warehousing, data lakes, data engineering, data science, data application development, and data sharing.

Workloads

-

Pull down the list of Workloads:

- Data Cloud

- Applications

- Collaboration

- Marketplace

- Data Lakes

- Data Warehouse

AI/ML use cases

- VIDEO: DICOM Image Analysis With Snowpark (to detect Pneumonia in Xrays using Machine Learning).

-

The activate email sent to you contains a unique URL to the GUI dashboard associated with your account, such as:

https://abc-123.snowflakecomputing.com/console/login

-

Save the URL in a Browser Bookmark.

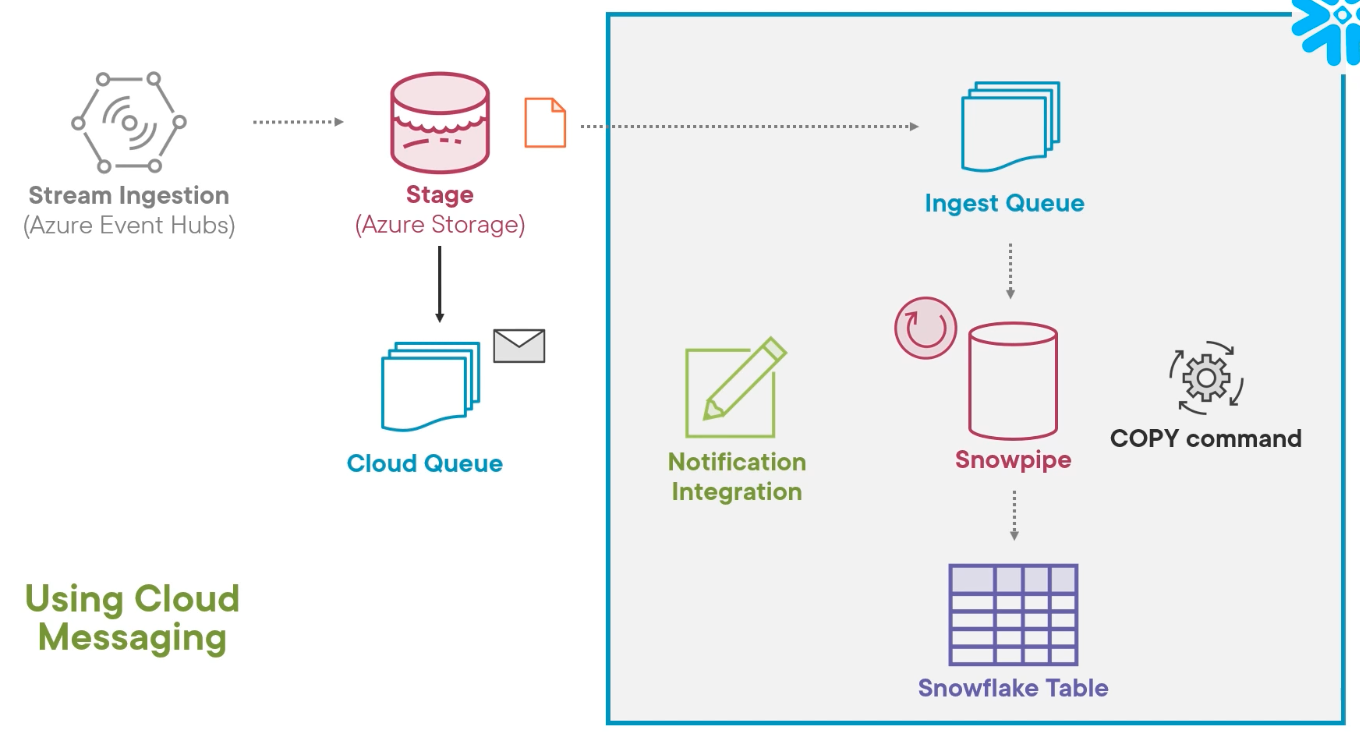

Snowpipe continuous streaming load

Snowflake’s ability to steam data makes for faster ingestion than traditional batch approaches.

NOTE:

This is used by IoT.

Snowflake’s “Snowpipe” “continuosly” (every few seconds) checks whether there is a file in specified folders within Azure or other cloud. When a file is found, an event notification is sent to Queue Storage.

- On AWS, use S3 event notifications or SQS notifications

- On Azure, use Storage Queue and Event Grid

- On GCP, use Pub/Sub for GCS buckets

A “Serverless” load process performs load into a Snowflake DB.

Load files are held for 14 days, by default.

Snowpipe ELT

ELT (Extract, Load, Transform) are the steps to load “raw data” directly from a source server into a target data warehouse. Business rules and data integrity checks occur in the data warehouse after data is loaded.

The larger capacity possible (perhaps for a short time) on cloud vendors enables innovation from the traditional

ETL (Extract, Transform, Load) approach in which transformation takes place on an intermediate server before it is loaded into the target. Transformations before load often render data unusable for purposes not originally designed.Snowflake Professional Services have a “Data Cloud Deployment Framework (DCDF)”.

Customer references

https://www.snowflake.com/en/why-snowflake/customers/

Snowflake’s Competition

Snowflake’s “Cloud Data Warehouse” competes in a crowded field of cloud-based databases from its cloud vendors:

- Amazon Aurora, MySQL-based service

- Amazon Relational Database Service

- Microsoft Azure SQL Database (MS SQL)[30]

- Google Cloud SQL

One trainer notes that

“Snowflake is easy to use, flexible, scalable, and highly suitable to the data-driven businesses of today. However, this cloud database cannot be deployed across all available cloud platforms and so is not very popular in use.”

Snowflake’s programmatic interfaces enable its data to be accessed from even competitors:

- Tableau analytics dashboard (owned by SAP)

- Google BigQuery

Other cloud databases:

- Clustrix Database as a Service[25]

- CockroachDB-as-a-Service[26]

- EnterpriseDB Postgres Plus Cloud Database[27]

- Heroku PostgreSQL as a Service (shared and dedicated database options)[29]

- Oracle Database Cloud Service[31]

- SkySQL MariaDB

- Xeround Cloud Database* – MySQL front-end (*service no longer available)[32]

- YugabyteDB

Other SaaS data competitors:

- Airtable

- Splunk

- Notion (notebooks)

Like other cloud vendors, Snowflake provides Authentication, Access Control, Infrastructure, and Optimization.

Reference:

- https://poplindata.com/data-warehouses/2021-database-showdown-bigquery-vs-redshift-vs-snowflake/

- https://medium.com/2359media/redshift-vs-bigquery-vs-snowflake-a-comparison-of-the-most-popular-data-warehouse-for-data-driven-cb1c10ac8555

Concerns

Snowflake users write SQL according to ANSI standards, so proprietary features from Oracle and Microsoft T-SQL are not available in Snowflake.

Snowflake is adding custom features to SQL, such as the very useful MATCH_RECOGNIZE (March 2021).

Snowflake support geospatial data using native type GEOGRAPHY (like BigQuery).

Snowflake does not support Foreign Keys.

VIDEO: Applying Architectural Patterns to Solve Business Questions by Greg Sitzman, Principal Solutions Architect and Melinda Webster

-

Menu items:

Worksheets (for running SQL commands) Dashboards Data * Databases * Private Sharing * Provider Studio Marketplace Activity * Query History * Copy History * Task History Admin * Usage * Warehouses * Resource Monitors * Users & Roles * Security * Billing & Terms * Contacts * Accounts * Partner Connect Help & Support

Seattle Data Guy answers“Why Everyone Cares about Snowflake” by saying Snowflake “has the most clout” despite market share of 12-13%.

PROTIP: Snowflake is acknowledged as user-friendly, easy scaling up/down with flexible “pay-as-you-go” pricing.

Snowflake also has powerful and convenient data management features.

Snowflake is fast: “A complex query takes more than 6 hours in MySQL, 2 hours in Oracle, and just 10 minutes in snowflake.”

References:

-

https://seekingalpha.com/article/4429909-data-warehouse-wars-snowflake-vs-google-bigquery

Architectural Innovations

- https://docs.snowflake.com/user-guide/intro-key-concepts

Snowflake’s cloud-native architecture consists of three independently scalable layers across storage, compute, and cloud services:

-

The storage layer ingests massive amounts and varieties of structured and semi-structured data to create a unified data record.

-

The compute layer provides dedicated resources to enable users to simultaneously access common data sets for many use cases without latency.

-

The cloud services layer optimizes each use case’s performance requirements with no administration.

“What makes Snowflake different is its multi-cluster shared architecture” –VIDEO: What is Snowflake (by a non-user)

Unique:

- Metadata

- Zero Copy Cloning

- Time Travel

- Zero Copy Cloning + Time Travel

- Data Sharing

Dynamic Data Masking & External Tokenization

SnowGrid?

SnowSight

SnowPipe

Automatic register new files with auto-refresh of partitions

File formats

Snowflake can encode in UTF8, UTF16.

Snowflake can intelligent ingest many types of string data formats:

- CSV text delimited by commas or tabs

- JSON

- XML

Serialization file formats of Big Data:

-

Apache Avro (row-based, self-describing) introduced 2009 for Hadoop, with dynamic data definitions in JSON and data in binary that’s uncompressed, Snappy, deflate, bzip2, or xz. Supports complex data structures (arrays, enums, maps, and unions).

-

ORC (Optimized Row Columnar) file introduced in 2013 for Hadoop Hive as the successor to RCFile (Record Columnar File) formats. Supports Hive ACID transactions. Support complex data types (DateTime, decimal, struct, list, map, and union). Stores “row collections” across a cluster as a single NameNode file. It achieves higher throughput through parallel processing using parallel reads “predicate pushdown” that checks a query or condition against file metadata to see whether rows can be skipped insteaad of read. Splits files without scanning for markers.

-

Apache Parquet Columnar File Format introduced 2013. See

https://parquet.apache.org/ - is language agnostics (supporing Java, C++, Python). Support fast data processing for complex nested data structures (such as log files and event streams at scale) with flexible encoding schemes to handle columns containing different data types. Its columnar compression saves on cloud storage space, with compression schemes specified on a per-column basis. That makes Parquet “future-proof”. Parquet supports automatic schema merging for schema evolution, so you can start with a simple schema and gradually add more columns as needed.

Like ORC, Parquet files are splittable as they store file footer metadata containing information on block boundaries for the file. Systems access this block boundary information to determine whether to skip or read only specific parts (blocks) of the file – allowing for more efficient reads – or to more easily submit different blocks for parallel processing.

Parquet supports many query engines, including Amazon Athena for Serverless SQL, Amazon Redshift Spectrum, Qubole, Google BigQuery, Microsoft Azure Data Explorer and Apache Drill). AWS RDS for Aurura NoSQL databases (DynamoDB, MongoDB, and DocumentDB) use Parquet for data lake storage.

Thus, Parquet files are often most appropriate for “write-once, read-many” analytics (OLAP) use cases, typically when traditional OLTP databases are the source. Used with Spark.

References:

- https://bryteflow.com/how-to-choose-between-parquet-orc-and-avro/

New table formats emerging to support substantial increases in the volume and velocity of (particularly, streaming) data:

- Apache Iceberg

- Apache Hudi

- Databricks Delta Lake

Delta Lake tables store DAGs (Directed Acyclic Graphs) which store ACID transactions like Git commits do. That enables Time Travel – the ability to return the database to any point in the past.

- 2-hour Data Management with Databricks: Big Data with Delta Lakes (Guided Project)

https://aws.amazon.com/resources/analyst-reports/forrester-wave-data-warehouse-2021/

Snowflake the company

Snowflake Inc. was founded in 2012 by French founders: VIDEO:

- Benoît Dageville President of Product, living in San Francisco after 16 years at Oracle,

- Thierry Cruanes of San Mateo

- Marcin Żukowski

Snowflakes lists “Silicon Valley” jobs at (not remote) 450 Concar Drive, San Mateo, California 94402.+1 844.766-9355.

Snowflake is a Delaware corporation.

That’s according to https://www.wikiwand.com/en/Snowflake_Inc. which

lists 5,884 employees in 2023.

https://www.linkedin.com/company/snowflake-computing/

lists 7,230 employees and 664,151 followers (on July 23, 2023)

After 6 years as CEO of Service Now, Frank Slootman has been CTO since April 2019.

- VIDEO: “Leadership is not a popularity contest”.

Slootman lives in Bozeman, Montana, so also headquarters the company in a one-story building at 106 East Babcock Street, Bozeman, Montana. +1 844.766-9355

On Glassdoor, Slootman received from employees a 90% rating, with a high 4.0 rating and 75% of employees saying they would recommend to a friend.

The company mascot is a white bear called “___”.

People who work in the company Snowflake are called “Snowflakes”.

Snowflake was ranked at the top of the Forbes Cloud 100 list of private companies in 2019. It fell off that list when Snowflake went public.

Snowflake IPO’d (during the pandemic) on September 2020 as NYSE ticker SNOW, raising $3.4 billion, one of the largest software IPOs in history.

SNOW is a component of the Russell 1000 index.

On the board since April, 2023 is Mark McLaughlin, retired Chairman of Qualcomm, Palo Alto, Verisign.

SNOW stock peaked on December 2020 at $429. But on July 27, 2023, was at $170/share, for a market value of $55.4B with 4.61 Debt-to-Equity. In 2023, its $1.31B Gross Income and $2.15B Expenses yielded a -$796.71M Loss for -38.57% Net Margin and -$2.67 EPS and -15.17% ROE based. Although declining, a 73.97% Income Growth was reported for 2023, which is still impressive for any company.

Snowflake reported having 7,828 total customers, including 330 customers with trailing 12-month product revenue greater than $1 million, and remaining performance obligations of $3.7 billion, representing 38% year-over-year growth.

The company’s Growth Story aims for “$10B in revenue by FY2029”, partly through acquisitions of Polidea, Pragmatists, Streamlit, Myst.ai.

On June 27, 2023, a partnership with NVIDIA was announced.

/#MakeItSnow

Host Names (URLs)

-

https://www.snowflake.net

-

https://www.youtube.com/@snowflakedevelopers - 5k subscribers led by Chanin Nantasenamat, Streamlit Sr. Dev Advocate living in Thailand.

-

https://…/ is Snowflake’s URL shortener

-

https://docs.snowflake.com/Documentation

-

https://bots.snowflake.com for hands-on experiences using Snowflake security products.

-

https://github.com/snowflakedb contains open-source repos by the company (858 followers on July 27, 2023)

SnowPro Certifications

Snowflake’s certification exams are delivered through Pearson Vue (844.914-0562) through Snowflake’s Certification Portal at

https://snowflake.useclarus.com/

https://www.snowflake.com/certifications

(Shortened $88 recertification exam COF-R02 to maintain status)

Core Certification COF-C02

$175 SnowPro Core Certification COF-C02

$175 SnowPro Core Certification COF-C02

-

75% of 100 questions in 110 minutes (recerts have 60 questions in 85 minutes):

- Snowflake Data Cloud Features & Architecture: 25%

- Account Access and Security: 20%

-

Performance Concepts: 15%

- Data Loading and Unloading: 10%

- Data Transformations: 20%

- Data Protection and Data Sharing: 10%

Practice tests:

- 10-question $26.90 https://www.vmexam.com/exam-store/cof-c02-snowflake-snowpro-core-certifications

Advanced Certifications

$375 for each of five SnowPro Advanced Certifications and recerts

- 75% of 65 questions in 115 minutes :

- ADA-C01 (ADA-R01) - Advanced Administrator

- Snowflake Security, RBAC, & User Administration: 30%

- Account Management & Data Governance: 25%

- Performance Monitoring & Tuning: 20%

- Data Sharing, Data Exchange & Snowflake Marketplace: 10%

- Disaster Recovery, Backup & Data Replication: 15%

- DEA-C01 (DEA-R01) - Advanced Data Engineer

- Data Movement: 28%

- Performance Optimization: 22%

- Storage and Data Protection: 10%

- Security: 10%

- Data Transformation: 30%

- DAA-C01 (DAA-R01) - Advanced Data Analyst

- Data Ingestion and Data Preparation: 17%

- Data Transformation and Data Modeling: 22%

- Data Analysis: 32%

- Data Presentation and Data Visualization: 29%

- ARA-C01 (ARA-R01) - Advanced Architect:

- Accounts and Security: 25%

- Snowflake Architecture: 30%

- Data Engineering: 25%

- Performance Optimization: 20%

- DSA-C01 (DSA-R01) - Advanced Data Scientist

- Data Science Concepts: 10%

- Data Pipelining: 15%

- Data Preparation and Feature Engineering: 30%

- Model Development: 30%

- Model Deployment: 15%

As of this writing, practice exams are available only for Core, Architect, and Data Engineer.

After passing one expert exam, SnowPro SME to work on exams.

References:

- https://www.chaosgenius.io/blog/snowflake-certifications/

Video Training

Snowflake Documentation: overviews, tutorials and detailed references</a> https://docs.snowflake.com/en/

Snowflake offers both free and paid on-demand training at

https://learn.snowflake.com/

- https://learn.snowflake.com/en/courses/uni-essdww101/

Learn Snowflake best practices with complete hands-on labs at

instructor-led training courses at

https://training.snowflake.com/

Community

TODO: Create an account at

https://community.snowflake.com/s/login/

https://usergroups.snowflake.com/chapters/

Automation

REMEMBER: Snowflake is purely a SaaS product. Unlike other vendors, its “Enterprise” offering does not mean where customers install servers and upgrade versions.

Snowflake does have a client-side CLI to install:

- CLI (snowsql client-side command utility)

- DML (Data Markup Language) to create SQL database schemas

- Python/Go/Java/Scala client-side programs

- Java/Scala/NodeJs server-side programs

Install SnowSQL CLI

DBeaver

-

On macOS

brew install --cask snowflake-snowsql

==> Downloading https://sfc-repo.snowflakecomputing.com/snowsql/bootstrap/1.2/da ######################################################################### 100.0% ==> Installing Cask snowflake-snowsql ==> Running installer for snowflake-snowsql; your password may be necessary. Package installers may write to any location; options such as `--appdir` are ignored. Password: ____ installer: Package name is Snowflake SnowSQL installer: Installing at base path / installer: The install was successful. 🍺 snowflake-snowsql was successfully installed!

PROTIP: Even though there is a “SnowSQL.app” installed, it is accessed only by the CLI.

PROTIP: The installer creates in the root folder file ~/.zprofile with this content:

printf "%s\n" "Hello from .zprofile" # added by Snowflake SnowSQL installer v1.2 export PATH=/Applications/SnowSQL.app/Contents/MacOS:$PATH

-

The first time you invoke the app, click “OK” to the warning.

-

Verify:

snowsql --version Version: 1.2.27

-

Run snowsql by itself to get a long (and wide) menu of options:

Usage: snowsql [OPTIONS] Options: -a, --accountname TEXT Name assigned to your Snowflake account. If you are not on us-west-2 or AWS deployement, append the region and platform to the end, e.g.,. or . . Honors $SNOWSQL_ACCOUNT. -u, --username TEXT Username to connect to Snowflake. Honors $SNOWSQL_USER. -d, --dbname TEXT Database to use. Honors $SNOWSQL_DATABASE. -s, --schemaname TEXT Schema in the database to use. Honors $SNOWSQL_SCHEMA. -r, --rolename TEXT Role name to use. Honors $SNOWSQL_ROLE. -w, --warehouse TEXT Warehouse to use. Honors $SNOWSQL_WAREHOUSE. -h, --host TEXT Host address for the connection. Honors $SNOWSQL_HOST. -p, --port INTEGER Port number for the connection. Honors $SNOWSQL_PORT. --region TEXT (DEPRECATED) Append the region or any sub domains before snowflakecomputing.com to the end of accountname parameter after a dot. e.g., accountname= . -m, --mfa-passcode TEXT Token to use for multi-factor authentication (MFA) --mfa-passcode-in-password Appends the MFA passcode to the end of the password. --abort-detached-query Aborts a query if the connection between the client and server is lost. By default, it won't abort even if the connection is lost. --probe-connection Test connectivity to Snowflake. This option is mainly used to print out the TLS/SSL certificate chain. --proxy-host TEXT Proxy server hostname. Honors $SNOWSQL_PROXY_HOST. --proxy-port INTEGER Proxy server port number. Honors $SNOWSQL_PROXY_PORT. --proxy-user TEXT Proxy server username. Honors $SNOWSQL_PROXY_USER. Set $SNOWSQL_PROXY_PWD for the proxy server password. --authenticator TEXT Authenticator: 'snowflake', 'externalbrowser' (to use any IdP and a web browser), 'oauth', or https:// .okta.com (to use Okta natively). -v, --version Shows the current SnowSQL version, or uses a specific version if provided as a value. --noup Disables auto-upgrade for this run. If no version is specified for -v, the latest version in ~/.snowsql/ is used. -D, --variable TEXT Sets a variable to be referred by &. -D tablename=CENUSTRACKONE or --variable db_key=$DB_KEY -o, --option TEXT Set SnowSQL options. See the options reference in the Snowflake documentation. -f, --filename FILE File to execute. -q, --query TEXT Query to execute. --config FILE Path and name of the SnowSQL configuration file. By default, ~/.snowsql/config. -P, --prompt Forces a password prompt. By default, $SNOWSQL_PWD is used to set the password. -M, --mfa-prompt Forces a prompt for the second token for MFA. -c, --connection TEXT Named set of connection parameters to use. --single-transaction Connects with autocommit disabled. Wraps BEGIN/COMMIT around statements to execute them as a single transaction, ensuring all commands complete successfully or no change is applied. --private-key-path PATH Path to private key file in PEM format used for key pair authentication. Private key file is required to be encrypted and passphrase is required to be specified in environment variable $SNOWSQL_PRIVATE_KEY_PASSPHRASE -U, --upgrade Force upgrade of SnowSQL to the latest version. -K, --client-session-keep-alive Keep the session active indefinitely, even if there is no activity from the user.. --disable-request-pooling Disable request pooling. This can help speed up connection failover --token TEXT The token to be used with oauth authentication method --query_tag TEXT Tags to be applied to the queries run --generate-jwt Generate a jwt token, which will be printed out and displayed. Requires values for user, account, and private-key-path. -?, --help Show this message and exit. </pre> ### snowsql variables $SNOWSQL_ACCOUNT $SNOWSQL_USER $SNOWSQL_PWD $SNOWSQL_SCHEMA $SNOWSQL_HOST $SNOWSQL_PORT $SNOWSQL_PRIVATE_KEY_PASSPHRASE

-

Configure

-

Open a new Terminal or Finder window navigate to the folder:

$HOME/.snowsql

On Windows: %USERPROFILE%.snowsql\

Language Support

VIDEO: Exploring Snowflake’s Open Source Drivers & API Ecosystem | Summit 2023

VIDEO: “Snowpark” APIs was introduced in 2022 to provide pre-integrated DataFrame style programming inside the Snowflake engine (for ML) online. Java/Scala, (Anaconda) Python client-side plus also NodeJs server-side.

Python

Python-centric repos on SnowflakeDB on GitHub:

- https://github.com/snowflakedb/snowflake-connector-python - 471

- https://github.com/snowflakedb/snowflake-ml-python - 6

- https://github.com/snowflakedb/snowflake-sqlalchemy - 191

- https://github.com/snowflakedb/libsnowflakeclient - 15

- https://github.com/snowflakedb/snowpark-python - 174

- https://github.com/snowflakedb/SnowAlert - 163 Security Analytics Using The Snowflake Data Warehouse

C/C++

- https://github.com/snowflakedb/libsnowflakeclient - 16

C#

- https://github.com/snowflakedb/snowflake-connector-net - 138

Go

- https://github.com/snowflakedb/gosnowflake -230

JavaScript NodeJS

- https://github.com/snowflakedb/snowflake-connector-nodejs - 109

Java

- https://github.com/snowflakedb/snowflake-ingest-java - 45

- https://github.com/snowflakedb/snowflake-jdbc - 150

- https://github.com/snowflakedb/snowflake-kafka-connector - 103

- https://github.com/snowflakedb/spark-snowflake - 186

PHP

- https://github.com/snowflakedb/pdo_snowflake - 52

Futures

modern Graph database structures.

Video Tutorials

On Snowflake University

Snowflake provides 4 “Badge” video tutorials for free.

- Signup for a trial account here.

-

Select AWS, Canada (Central) region in Badge 3.

-

Copy and submit your LOCATOR number.

- To complete the workshop you will run scripts that are autograded by DORA, the grading robot.

Badge 1: Data Warehousing Workshop (DWW) Lesson 2: Identity and Access Lesson 3: Data Containers Lesson 4: Tables, Data Types, and Loading Data Lesson 5: Worksheets & Warehouses Lesson 6: File Formats & the Load Wizard Lesson 7: Meet DORA! Lesson 8: Staging Data Lesson 9: Data Storage Structures Lesson 10: Intro to Semi-Structured Data Lesson 11: Nested Semi-Structured Data

- Benefits of a Cloud-based Database

- Account Editions, Regions & Clouds

- Snowflake Identity, Access, Users, & Roles

- Databases, Ownership and Context

- Worksheets and Warehouses

- Loading Tables Using SQL Insert Statements

- File Formats, Stages, and Copy Into Loading

- Semi-Structured Data including XML and JSON

- Querying Nested Semi-Structured Data

Badge 2: Data Application Builders Workshop

Almost like a full-stack developer bootcamp, this course covers a wide array of technologies that will help you build applications that use Snowflake as a back end. Including: Streamlit (Python), SnowSQL, REST APIs, and much more.

Badge 3: Sharing, Marketplace, & Exchanges Workshop

Badge 4: Data Lake Workshop

- Get an Enterprise Edition.

Badge 5: Data Engineering Workshop (DNGW)

On LinkedIn.com Learning:

By Lynn Langit who has worked on various clouds, Analytics, and Snowflake in production, presents a rich yet succinct tutorial:

1h 41m Learning SnowflakeDB 3/25/2022

It references her https://github.com/lynnlangit/learn-snowflakedb

On Coursera.com

By Nikolai Schuler residing in Bulgaria:

By UAE resident Mohamed Touiti, Snowflake Sales Engineer since 2021.

-

2-hour Snowflake for Beginners: Make your First Snowsight Dashboard

-

2-hour Data Cleaning in Snowflake: Techniques to Clean Messy Data

By Google:

-

Course BigQuery Fundamentals for Snowflake Professionals provides quizzes and labs:

On Pluralsight.com:

Path: Path: Snowflake for Data Analyst

Beginner:

- 1h 16m Snowflake Architecture and Overview: Getting Started Dec 23, 2021 by Alejandro Romero (@aromero77)

By Mohit Batra (CrystalTalks) residing in Hyderabad:

-

1h 22m Moving Data with Snowflake Nov 21, 2021

-

1h 56m Querying Data with Snowflake Feb 13, 2023

Intermediate:

-

57m Setting up Cloud Databases and Tables with Snowflake Apr 28, 2022 by Thomas LeBlanc (@TheSmilingDBA, Thomas-LeBlanc.com)

-

35m Query Caching Performance Features with Snowflake Dec 6, 2021 by Rushabh Doshi in Redmond.

-

1h 29m Performing Data Analytic Tasks with Snowflake Oct 4, 2021 by Warner Chaves

-

1h 2m SQL Extensibility Features with Snowflake 5 Oct 3, 2021 by Pinal Dave in India.

Advanced:

-

1h 37m Working with Semi-structured Data with Snowflake by Warner Chaves (Datascape podcast, @warchav, createdatapros.com) Nov 17, 2021

-

1h 2m Visualizing Data via Snowflake by Alejandro Romero (@aromero77) at U of Utah and Elastic Mar 1, 2022

On OReilly.com

on Udemy.com

VIDEO: Snowflake Architecture - Learn How Snowflake Stores Table data Aug 17, 2019 by the anonymous @scholarnest who has paid courses about Kafka on https://www.learningjournal.guru/courses/

on YouTube

What is Snowflake? 8 Minute Demo by Peter Mebane

Understanding Snowflake Data Platform for Beginners by Peter Morton

Why is Snowflake so popular? Data warehouse vs. data lake. // What you should know about software

Zero to Snowflake in 58 minutes by Datalytyx

Snowflake Real Time Project Flow || What is Snowflake || Snowflake Features by Praveen Kumar Bommisetty

What is a Data Lakehouse by Shreya Pal

Resources

https://www.snowflake.com/summit/ June 3-6, 2024 in San Francisco Moscone Center

https://www.youtube.com/watch?v=-aHF1eseweY&t=5m Snowflake End-to-End ETL Project by MultiCloud4U’s Theresal Thangapazham

More about databases

This is one of a series about databases:

- Azure cloud introduction

- Azure Cloud Onramp (Subscriptions, Portal GUI, CLI)

- RDP client to access servers

- Bash Windows using Microsoft’s WSL (Windows Subsystem for Linux)

- Microsoft PowerShell ecosystem

- Azure Cloud Powershell

- PowerShell DSC (Desired State Configuration)

- PowerShell Modules

- Azure Networking

- Azure Storage

- Azure Compute

- Azure cloud DevOps

- Dockerize apps

- Kubernetes container engine

- Hashicorp Vault and Consul for keeping secrets

- Hashicorp Terraform

- Ansible

- Microsoft AI in Azure cloud

- Azure Monitoring

- Azure KSQL (Kusto Query Language) for Azure Monitor, etc.

- Dynatrace cloud monitoring

- Cloud Performance testing/engineering

- Cloud JMeter