Master the cloud that runs on fast Google Fiber and Big AI, from the folks who created Kubernetes, Gmail, Google Docs/Sheets, etc.

Overview

- Why Google?

- Personas

- Google Certified Professional (GCP) Certification Exams

- Digital Leader certification

- Associate Cloud Engineer certification

- Cloud Architect certification

- Data Engineer certification

- Cloud DevOps Engineer certification

- Cloud Developer certification

- Cloud Network Engineer certification

- Cloud Security Engineer certification

- Machine Learning Engineer certification

- Cloud Engineer certification

- Documentation

- Cloud Adoption Framework

- Types (Models) of product offerings

- Maturity Assessment

- SLAs

- Google’s Hundreds of Products

- Google Support

- Social Community

- Hands-on training

- How to get free cloud time

- A. Free $300 account for 60 days

- Ways of interacting with Google Cloud

- CLI programs & commands on your Terminal

- Google Shell

- REST APIs

- Google IaC (Infra as Code)

- New Project

- Principals

- Credentials for Authentication

- IAM

- Create sample Node server

- Source Code Repository

- REST APIs

- Google Networking

- Google COMPUTE Cloud Services

- GKE (Google Kubernetes Engine)

Here is a hands-on introduction to learning the Google Cloud Platform (GCP) and getting certified as a Google Certified Professional (GCP).

NOTE: Content here reflects my personal opinions and is not intended to represent any employer (past or present). “PROTIP:” here highlights information I haven’t seen elsewhere on the internet because it is hard-won, little-known but significant facts based on my personal research and experience.

TLDR: My contributions (under construction):

- A Bash shell file (gcpinfo.sh) that installs what’s needed for

- A multi-cloud Python program interacting across GCP, Azure, AWS

- A Terraform repo (gcp-tf) containing HCL to create GCP resources safely within enterprises

- A Google Sheet providing metadata about APIs collected over several Google websites and commands

Why Google?

- PROTIP: Because fewer people work on GCP, individual GCP professionals are likely to be paid better than AWS and Azure pros. The flip side is that there are fewer GCP jobs than AWS and Azure jobs.

- Visit Google Cloud’s marketing home page at:

Google is based in Mountain View, California (“Silicon Valley”). It grew big because its search engine (first appearing in 1996) and the associated advertising catapulted it into one of the most valuable companies in the world.

Major named clients of the Google Cloud Platform (GCP) include HSBC, PayPal, 20th Century Fox, Bloomberg, Domino’s, etc.

- Click on Solutions to see that Google has software specific to industries such as Retail, Healthcare, Supply Chain, even Manufacturing. In those industries, Amazon competes directly with some of its own AWS cloud customers.

Google also supports “Modernization” initiatives such as Open Banking, SRE, DevOps, Day 2 Operations, and Multicloud.

Google says it’s the first company to be carbon-free, running on 90% carbon-free energy.

- On the left side of the Solutions page, » marks category names that are also in the Products menu (below):

- Application modernization (CI/CD » DevOps, API Management, Multicloud)

- Artificial Intelligence »

- APIs and applications

- Databases »

- Data cloud

- Digital transformation

- Infrastructure modernization

- Productivity and collaboration (Google Workspace, Chrome Enterprise, Cloud Identity, Cloud Search)

- Security »

- (Smart) Analytics » (previously “BIG DATA”)

- Startups and SMB (Web3, Startup Program)

- Featured partner solutions

- Google has innovated in its aggressive pricing among CSPs:

- first to have per-second vs. per-minute billing.

- Sustained use discounts are applied automatically after an instance runs for more than 25% of a month.

- Google encrypts data automatically at no additional charge. However, AWS charges extra for the stronger encryption for security – an expensive hassle.

- Forrester rated GCP highest on “Strategy” in its “IaaS Platform Native Security” evaluation, even though Microsoft got a 5 vs. 3 for “Roadmap” and “Market approach”. Some may argue for rating Google over Amazon on “Innovation”.

Pricing is especially important as more usage is made of AI and Machine Learning (Vertex), which use a voracious amount of compute power.
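The sustained use discount mentioned above is applied in tiers. This sketch assumes the schedule Google has published for N1 machine types: each successive quarter of the month is billed at 100%, 80%, 60%, then 40% of the base rate, for at most a 30% discount. Other machine families (such as N2) use different percentages, and `sustained_use_multiplier` is a hypothetical helper, not a Google API.

```python
"""Sketch: effective price multiplier under tiered sustained use discounts."""

# Assumed N1 schedule: (width of tier as a fraction of the month, price multiplier).
N1_TIERS = [(0.25, 1.00), (0.25, 0.80), (0.25, 0.60), (0.25, 0.40)]


def sustained_use_multiplier(fraction_of_month: float, tiers=N1_TIERS) -> float:
    """Average price multiplier for a VM that ran for fraction_of_month (0 < f <= 1)."""
    if not 0 < fraction_of_month <= 1:
        raise ValueError("fraction_of_month must be in (0, 1]")
    remaining = fraction_of_month
    weighted = 0.0
    for width, rate in tiers:
        used = min(remaining, width)  # portion of the usage that falls in this tier
        weighted += used * rate
        remaining -= used
        if remaining <= 0:
            break
    return weighted / fraction_of_month


for f in (0.25, 0.5, 0.75, 1.0):
    discount = 1 - sustained_use_multiplier(f)
    print(f"{f:.0%} of month -> {discount:.1%} discount")
```

Under this schedule, a VM that runs the whole month pays 70% of the base price, which is the 30% maximum for N1.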

- My cloud vendor comparison article describes how Google’s fast fiber network of underground and undersea cables connects machines at high capacity and speed across the world.

Even if you don’t use Google Cloud, you can use Google’s fast DNS at 8.8.8.8.
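8.8.8.8 is an ordinary DNS resolver that you can query directly. Below is a minimal standard-library sketch that sends one A-record query over UDP and parses the IPv4 answers. It assumes a plain (non-DNSSEC, UDP-only) response that fits in 512 bytes and does only minimal error handling. The function names are illustrative.

```python
"""Sketch: query Google Public DNS (8.8.8.8) for A records using only the stdlib."""
import random
import socket
import struct


def build_query(hostname: str, query_id: int) -> bytes:
    """Build a DNS query packet asking for the A (IPv4) records of hostname."""
    # Header: ID, flags 0x0100 (standard query, recursion desired), QDCOUNT=1, others 0.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, terminated by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in hostname.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN


def skip_name(packet: bytes, offset: int) -> int:
    """Return the offset just past a (possibly compressed) domain name."""
    while True:
        length = packet[offset]
        if length == 0:
            return offset + 1
        if length & 0xC0 == 0xC0:  # compression pointer occupies 2 bytes
            return offset + 2
        offset += 1 + length


def parse_a_records(packet: bytes) -> list[str]:
    """Extract IPv4 addresses from the answer section of a DNS response."""
    _, _, qdcount, ancount, _, _ = struct.unpack("!HHHHHH", packet[:12])
    offset = 12
    for _ in range(qdcount):
        offset = skip_name(packet, offset) + 4  # skip QTYPE and QCLASS
    addresses = []
    for _ in range(ancount):
        offset = skip_name(packet, offset)
        rtype, rclass, _ttl, rdlength = struct.unpack("!HHIH", packet[offset:offset + 10])
        offset += 10
        if rtype == 1 and rclass == 1 and rdlength == 4:
            addresses.append(socket.inet_ntoa(packet[offset:offset + 4]))
        offset += rdlength
    return addresses


def resolve_via_google(hostname: str, timeout: float = 3.0) -> list[str]:
    """Send one UDP query to 8.8.8.8 port 53 and return the A records."""
    query_id = random.randint(0, 0xFFFF)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(hostname, query_id), ("8.8.8.8", 53))
        response, _ = sock.recvfrom(512)
    if struct.unpack("!H", response[:2])[0] != query_id:
        raise ValueError("DNS response ID does not match query ID")
    return parse_a_records(response)
```

Calling `resolve_via_google("example.com")` needs outbound UDP port 53. Some corporate networks block that, so fall back to the system resolver there.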

- Google was the first cloud vendor to offer a VPC that spans several regions (until late 2018, when AWS began offering something similar). Even so, the global scope of a Google VPC eliminates the cost and latency of VPNs between regions (plus a router for each VPN for BGP). It also enables configuration to be shared between projects.

- As with AWS, Google has Preemptible VMs, which run for at most 24 hours and have fewer features than Spot VMs. Both are priced the same. No “pre-warming” is required for load balancing.

- Largest servers? As of May 11, 2022, Google’s Cloud TPU (Tensor Processing Unit) v4 Pods, in 2048-chip and 1024-chip configurations, combine for 9 exaflops of peak aggregate performance (equivalent to the computing power of 10 million laptops combined), making up the largest publicly available ML hub. Google is also working on Quantum AI.

See https://cloud.google.com/why-google
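The Preemptible and Spot VMs mentioned above are requested with flags on `gcloud compute instances create`. This sketch only assembles the command. It uses `--provisioning-model=SPOT` and `--instance-termination-action`, which current gcloud releases accept; the older Preemptible model uses `--preemptible` instead. The instance name, zone, and machine type are placeholder assumptions, and nothing runs unless you call `create_spot_vm`.

```python
"""Sketch: build (and optionally run) a gcloud command that creates a Spot VM."""
import shlex
import subprocess


def spot_vm_create_args(name: str, zone: str, machine_type: str = "e2-medium",
                        termination_action: str = "STOP") -> list[str]:
    """Return the argv list for creating a Spot VM with gcloud."""
    if termination_action not in ("STOP", "DELETE"):
        raise ValueError("termination_action must be STOP or DELETE")
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        "--provisioning-model=SPOT",  # Spot instead of standard provisioning
        f"--instance-termination-action={termination_action}",  # what happens on preemption
    ]


def create_spot_vm(name: str, zone: str) -> None:
    """Actually run gcloud (requires an authenticated gcloud CLI with a project set)."""
    subprocess.run(spot_vm_create_args(name, zone), check=True)


# Print the command so it can be reviewed before running it.
print(shlex.join(spot_vm_create_args("demo-spot-vm", "us-central1-a")))
```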

As with other clouds:

- It’s costly and difficult for individual companies to keep up with the pace of technology, especially around hardware.

- “Pay as you go” rather than a significant up-front purchase, which eats time.

- No software to install (and go stale, requiring redo work).

- Google scale - 9 cloud regions in 27 zones. 90 Edge cache locations.

cloud.google.com/about/locations lists the current number of regions, zones, and network edge locations, plus the countries served by the Google Front End (GFE), which provides DoS protection.

Google was the first CSP to get an ISO 14001 certification.

Personas

The major services associated with each major persona (job/profession category), from a VIDEO by Ashutosh Mishra:

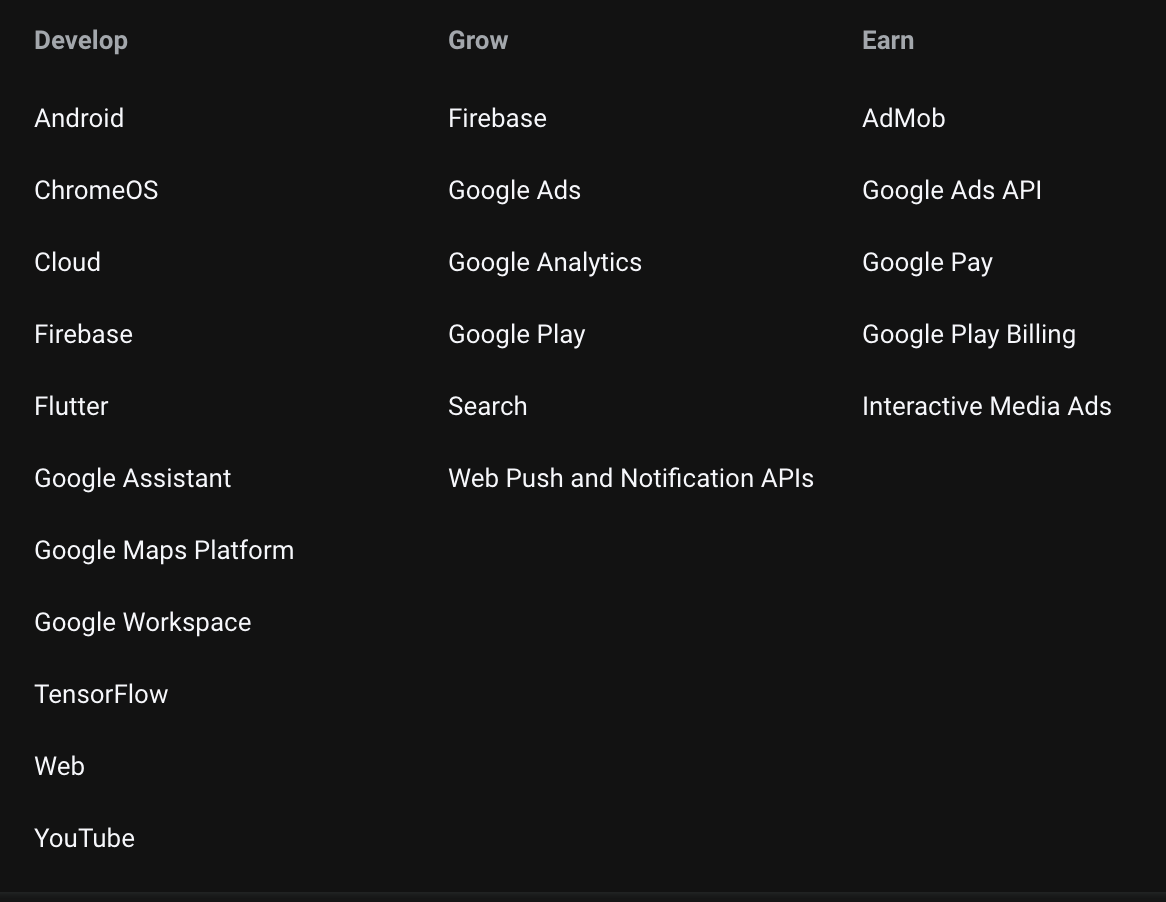

Products for Developers

At https://developers.google.com, the Products are:

This article addresses only Google’s Cloud products.

Google’s non-cloud Products & APIs

In addition to Google Cloud, Google offers SaaS (Maps, etc.), Workspace (Gmail, Calendar, Sheets, Drive, etc.), Social (Contacts, etc.), YouTube, a mobile hardware line (Android, Pixel, etc.), Search, and AdSense.

Google Certified Professional (GCP) Certification Exams

After certification, you are listed on the Google Cloud Certified Directory.

- https://www.coursera.org/collections/googlecloud-offer-expired

- https://support.google.com/cloud-certification/answer/9907748?hl=en

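Google lists its certifications at:

Professional and Associate certifications are valid for 2 years; the foundational Cloud Digital Leader certification is valid for 3 years.

$99 for the Cloud Digital Leader exam: answer 70% of 50-60 questions correctly in 90 minutes. My notes:

$125 for the Associate Cloud Engineer exam: answer 50-60 questions in 2 hours:

$200 to answer 50-60 questions in 2 hours for professional-level Cloud exams: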

- Cloud Architect

- Data Engineer

- Cloud Developer

- Cloud DevOps Engineer

- Cloud Network Engineer

- Cloud Security Engineer

- Machine Learning Engineer
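The exam figures above reduce to simple pacing arithmetic. This sketch applies the 70% pass threshold quoted above to every exam; treat that number as this article’s estimate rather than an official Google figure. It uses integer math so that rounding up is exact. `exam_plan` is a hypothetical helper.

```python
"""Sketch: how many correct answers are needed and how much time each question gets."""


def exam_plan(num_questions: int, minutes: int, pass_percent: int = 70) -> tuple[int, float]:
    """Return (minimum correct answers, minutes per question)."""
    # Integer ceiling division avoids floating-point rounding surprises.
    min_correct = -(-num_questions * pass_percent // 100)
    return min_correct, minutes / num_questions


for label, minutes in (("Digital Leader, 90 min", 90), ("Associate/Professional, 120 min", 120)):
    for questions in (50, 60):
        correct, pace = exam_plan(questions, minutes)
        print(f"{label}: {questions} questions -> {correct} correct, {pace:.1f} min each")
```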

Certifications on non-cloud Google products:

- Google Workspace Administrator (Collaboration Engineer)

- G Suite Administrator (Gmail, Google Drive, etc.)

- Associate Android Developer

- Mobile Web Specialist

- Apigee (API Management) certification

PROTIP: Google uses Webassessor (by Kryterion), which amazingly requires a different email for each exam subject. In other words, if you want to get certified in Salesforce, DevOpsInstitute, and Google, you’ll need 3 emails. Absolutely crazy! And they consider addresses such as “johndoe+google@gmail.com” invalid.

Tests can be taken online or in-person at a Kryterion Test Center. PROTIP: Testing centers go in and out of business or have limitations such as COVID, so call (602) 659-4660 in Phoenix, AZ ahead of time to verify that yours is open and to confirm parking instructions. Copy the address and parking instructions to your Calendar entry.

Kryterion’s online-proctoring (OLP) solution is not affected by COVID-19 and may be a suitable testing alternative to taking exams at a test center.

Register for your exam through your Test Sponsor’s Webassessor portal. There you get a Test Taker Authorization Code needed to launch the test.

- thecloudgirl.dev (Priyanka Vergadia) provides 101 sketchnotes (cartoon diagrams) BOOK

Digital Leader certification

Foundational certification as a “Cloud Digital Leader”

- 8-hour Google Cloud Fundamentals: Core Infrastructure

- 7-hour Essential Google Cloud Infrastructure: Foundation

- 11-hour Essential Google Cloud Infrastructure: Core Services

- 7-hour Elastic Google Cloud Infrastructure: Scaling and Automation

- 8-hour Architecting with Google Kubernetes Engine: Foundations

- 3-hour Preparing for Your Associate Cloud Engineer Journey

6-hour FreeCodeCamp.org (GCP-CDL) by Andrew Brown of $60 exampro.co

Others:

Associate Cloud Engineer certification

Cloud Architect certification

Cloud Architect – design, build and manage solutions on Google Cloud Platform.

- 10 week Google Cloud Fundamentals: Core Infrastructure

- Essential Google Cloud Infrastructure: Foundation

- Essential Google Cloud Infrastructure: Core Services

- Elastic Google Cloud Infrastructure: Scaling and Automation

- Reliable Google Cloud Infrastructure: Design and Process

- Architecting with Google Kubernetes Engine: Foundations

- Preparing for your Professional Cloud Architect Journey

PROTIP: The exam references these case studies (listed at https://cloud.google.com/certification/guides/professional-cloudarchitect/), so get to know them beforehand to avoid wasting time during the exam:

- EHR Healthcare

- Helicopter Racing League

- Mountkirk Games (rev2): online multiplayer games running MySQL on Google Compute Engine, needing to scale globally rather than rely on batch ETL.

- TerramEarth (rev2) CASE: collecting field data from mining vehicles over a cellular network to reduce downtime and get quicker data visibility. SOLUTION

The above are covered by Google’s “Preparing for the Google Cloud Professional Cloud Architect Exam” course on Coursera, which is $49 if you want the quizzes and certificate. It covers these previous case studies:

- JencoMart: a retailer migrating LAMP stacks to the cloud (LAMP = Linux OS, Apache HTTP server, MySQL database, PHP)

- Dress4Win (rev2) CASE: a clothing website with a social network, needing dev/test/DR environments with CI/CD and a lift and shift from Ubuntu, MySQL, Nginx, and Hadoop. SOLUTION

More about this certification:

- https://medium.com/@earlg3/google-cloud-architect-exam-study-materials-5ab327b62bc8

- KC = Coursera “Architecting with Google Cloud Platform Specialization” (6 courses for $79 USD per month via qwiklabs):

https://www.coursera.org/specializations/gcp-architecture

Data Engineer certification

From Google: Data Engineer certification Guide for Analytics (big data)

https://cloud.google.com/training/courses/data-engineering is used within the Data Engineering on Google Cloud Platform Specialization on Coursera. It is a series of five one-week classes ($49 per month after 7 days). These have videos that sync with transcript text, but no hints to quiz answers or live help.

- Building Resilient Streaming Systems on Google Cloud Platform $99 USD

- Leveraging Unstructured Data with Cloud Dataproc on Google Cloud Platform $59 USD

- Google Cloud Platform Big Data and Machine Learning Fundamentals $59 USD by Google Professional Services Consultant Valliappa Lakshmanan (Lak) at https://medium.com/@lakshmanok, who previously worked on weather predictions at NOAA.

- Serverless Data Analysis with Google BigQuery and Cloud Dataflow $99 USD

- Serverless Machine Learning with TensorFlow on Google Cloud Platform $99 USD by Valliappa Lakshmanan uses the TensorFlow Cloud ML service to learn a map of New York City by analyzing taxi cab locations.

- Vision image sentiment

- Speech recognizes 110 languages, dictating,

- Translate

- personalization

Cloud DevOps Engineer certification

From Google: Cloud DevOps Engineer certification

5-course Coursera (Core Infra, SRE Culture, Design & Process, Logging, GKE):

- Google Cloud Platform Fundamentals: Core Infrastructure

- Developing a Google SRE Culture

- Reliable Google Cloud Infrastructure: Design and Process

- Logging, Monitoring and Observability in Google Cloud

- Getting Started with Google Kubernetes Engine

Coursera’s video Architecting with GKE Specialization course.

Previously:

- Architecting with GKE: Foundations by Brian Rice (Curriculum Lead) provides hands-on Qwiklabs: Lab: Working with Cloud Build; Quiz: Containers and Container Images; The Kubernetes Control Plane (master node); Kubernetes Object Management; Lab: Deploying to GKE; Migrate for Google Anthos.

Coursera’s video courses toward a Prep Professional Cloud DevOps Engineer Professional Certificate

- Google Cloud Platform Fundamentals: Core Infrastructure (1 “week”)

- Developing a Google SRE Culture

In many IT organizations, incentives are not aligned between developers, who strive for agility, and operators, who focus on stability. Site reliability engineering, or SRE, is how Google aligns incentives between development and operations and does mission-critical production support. Adoption of SRE cultural and technical practices can help improve collaboration between the business and IT. This course introduces key practices of Google SRE and the important role IT and business leaders play in the success of SRE organizational adoption.

Primary audience: IT leaders and business leaders who are interested in embracing SRE philosophy. Roles include, but are not limited to CTO, IT director/manager, engineering VP/director/manager. Secondary audience: Other product and IT roles such as operations managers or engineers, software engineers, service managers, or product managers may also find this content useful as an introduction to SRE.

- Reliable Google Cloud Infrastructure: Design and Process, by Stephanie Wong (Developer Advocate) and Philipp Mair (Course Developer), equips students to build highly reliable and efficient solutions on Google Cloud using proven design patterns. It is a continuation of the Architecting with Google Compute Engine or Architecting with GKE courses and assumes hands-on experience with the technologies covered in either of those courses. Through a combination of presentations, design activities, and hands-on labs, participants learn to define and balance business and technical requirements to design Google Cloud deployments that are highly reliable, highly available, secure, and cost-effective.

- Apply a tool set of questions, techniques, and design considerations

- Define application requirements and express them objectively as KPIs, SLOs and SLIs

- Decompose application requirements to find the right microservice boundaries

- Leverage Google Cloud developer tools to set up modern, automated deployment pipelines

- Choose the appropriate Cloud Storage services based on application requirements

- Architect cloud and hybrid networks

- Implement reliable, scalable, resilient applications balancing key performance metrics with cost

- Choose the right Google Cloud deployment services for your applications

- Secure cloud applications, data, and infrastructure

- Monitor service level objectives and costs using Google Cloud tools

Prerequisites:

- Completion of prior courses in the

CERTIFICATE COMPLETION CHALLENGE to unlock benefits from Coursera and Google Cloud: enroll in and complete the Cloud Engineering with Google Cloud, Cloud Architecture with Google Cloud, or Data Engineering with Google Cloud Professional Certificate before November 8, 2020 to receive the following benefits:

- A Google Cloud t-shirt for the first 1,000 eligible learners to complete, while supplies last.

- Exclusive access to Big Interview ($950 value) and career coaching.

- 30 days of free access to Qwiklabs ($50 value) to earn Google Cloud recognized skill badges by completing challenge quests.

- Logging, Monitoring, and Observability in Google Cloud teaches techniques for monitoring, troubleshooting, and improving infrastructure and application performance in Google Cloud. Guided by the principles of Site Reliability Engineering (SRE), and using a combination of presentations, demos, hands-on labs, and real-world case studies, attendees gain experience with full-stack monitoring, real-time log management, and analysis, debugging code in production, tracing application performance bottlenecks, and profiling CPU and memory usage.

Cloud Developer certification

- Google Cloud Fundamentals: Core Infrastructure

- Getting Started with Google Kubernetes Engine

- 7-week Getting Started with Application Development

- Securing and Integrating Components of your Application

- App Deployment, Debugging, and Performance

- Application Development with Cloud Run

Cloud Network Engineer certification

- Google Cloud Platform Fundamentals: Core Infrastructure

- Developing a Google SRE Culture

- Reliable Google Cloud Infrastructure: Design and Process

- Logging, Monitoring and Observability in Google Cloud

- Getting Started with Google Kubernetes Engine

Cloud Security Engineer certification

Pluralsight’s 2-hour course (highlights) was “prepared by Google”.

PROTIP: 8 courses on Coursera:

- Preparing for Your Professional Cloud Security Engineer Journey

- Google Cloud Platform Fundamentals: Core Infrastructure

- LAB: Getting Started with VPC Networking and Google Compute Engine (Routes, Firewalls)

- Networking in Google Cloud: Defining and Implementing Networks

- Google Cloud VPC Networking Fundamentals

- Controlling Access to VPC Networks

- Sharing Networks across Projects

- Load Balancing

- Networking in Google Cloud: Hybrid Connectivity and Network Management

- Hybrid Connectivity

- Networking Pricing and Billing

- Network Design and Deployment

- Network Monitoring and Troubleshooting

- Managing Security in Google Cloud

- Security Best Practices in Google Cloud

- Mitigating Security Vulnerabilities on Google Cloud

- Hands-on Labs in Google Cloud for Security Engineers

- IAM Custom Roles GSP190

- Lab: VPC Network Peering

- Lab: Setting up a Private Kubernetes Cluster

- Lab: How to Use a Network Policy on Google Kubernetes Engine

- Lab: Using Role-based Access Control in Kubernetes Engine

Features of particular interest:

- Cloud Shell

- Network Service Tiers

- VPC, Routes, Firewall, DNS

- Cloud Network

- Cloud Armor (perimeter and boundary)

- Cloud CDN

- Cloud Deployment Manager

- Cloud Interconnect

- Cloud Load Balancing

- Compliance requirements

https://www.cloudskillsboost.google/course_templates/382 Managing Security in Google Cloud

- https://drive.google.com/file/d/1ZRwbL_M33GMPFnBG2QQ5RSIvPUgxMqRl/view?pli=1

https://www.cloudskillsboost.google/quests/150 Ensure Access & Identity in Google Cloud

https://www.cloudskillsboost.google/course_templates/87 Security Best Practices in Google Cloud

- https://www.cloudskillsboost.google/course_sessions/3783064/documents/379433

Machine Learning Engineer certification

8-course Coursera (TensorFlow)

Cloud Engineer certification

- 8-hour Google Cloud Fundamentals: Core Infrastructure

- 7-hour Essential Google Cloud Infrastructure: Foundation

- 11-hour Essential Google Cloud Infrastructure: Core Services

- 7-hour Elastic Google Cloud Infrastructure: Scaling and Automation

- 8-hour Architecting with Google Kubernetes Engine: Foundations

- 3-hour Preparing for Your Associate Cloud Engineer Journey

Documentation

- https://cloud.google.com/architecture

- https://cloud.google.com/docs

- https://cloud.google.com/docs/samples (code samples)

- https://cloud.google.com/compute/docs/reference/rest/v1/instances/start
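The instances.start reference linked above is a plain HTTPS POST. Below is a minimal standard-library sketch. It assumes you already have an OAuth access token, for example from `gcloud auth print-access-token`. The project, zone, and instance names are placeholders, and production code would normally use Google’s client libraries instead.

```python
"""Sketch: call the Compute Engine v1 instances.start REST method."""
import json
from urllib.parse import quote
from urllib.request import Request, urlopen

COMPUTE_V1 = "https://compute.googleapis.com/compute/v1"


def build_start_request(project: str, zone: str, instance: str, access_token: str) -> Request:
    """Build (but do not send) the POST request that starts a stopped VM."""
    url = (f"{COMPUTE_V1}/projects/{quote(project)}/zones/{quote(zone)}"
           f"/instances/{quote(instance)}/start")
    return Request(url, data=b"", method="POST",
                   headers={"Authorization": f"Bearer {access_token}"})


def start_instance(project: str, zone: str, instance: str, access_token: str) -> dict:
    """Send the request; the JSON response body is a zonal Operation resource."""
    with urlopen(build_start_request(project, zone, instance, access_token)) as resp:
        return json.load(resp)
```

The returned Operation has a `status` field (PENDING, RUNNING, or DONE). Poll the operation until it reports DONE before assuming the VM is up.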

Cloud Adoption Framework

VIDEO: The Google Cloud Adoption Framework (GCAF) themes: white paper PDF

- https://dzone.com/articles/cli-for-rest-api

- https://medium.com/google-cloud = curated articles for developers

Google Cloud Deployment Manager vs. Terraform vs. Pulumi vs. AWS Copilot (AWS CloudFormation) vs. Azure Resource Manager: you still ‘describe’ your desired state, but because you write it in a programming language, you can use complex logic, factor out patterns, and package it up for easier consumption.
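As an example of ‘describing’ desired state with a programming language, Deployment Manager accepts Python templates. A template defines a `GenerateConfig(context)` function that returns a dict of resources. This sketch loops over a list of zones to create one VM per zone. The template property names (`zones`, `machineType`), the image family, and the default machine type are illustrative assumptions. The dry run substitutes a simple stand-in for the `context` object that Deployment Manager supplies.

```python
"""Sketch of a Deployment Manager Python template: one VM per requested zone."""
from types import SimpleNamespace


def GenerateConfig(context):
    """Deployment Manager calls this and expects {'resources': [...]} back."""
    project = context.env["project"]
    deployment = context.env["deployment"]
    machine_type = context.properties.get("machineType", "e2-small")
    resources = []
    for zone in context.properties["zones"]:  # a loop that static YAML cannot express
        resources.append({
            "name": f"{deployment}-vm-{zone}",
            "type": "compute.v1.instance",
            "properties": {
                "zone": zone,
                "machineType": f"zones/{zone}/machineTypes/{machine_type}",
                "disks": [{
                    "deviceName": "boot",
                    "type": "PERSISTENT",
                    "boot": True,
                    "autoDelete": True,
                    "initializeParams": {
                        "sourceImage": "projects/debian-cloud/global/images/family/debian-12",
                    },
                }],
                "networkInterfaces": [{
                    "network": f"projects/{project}/global/networks/default",
                }],
            },
        })
    return {"resources": resources}


if __name__ == "__main__":
    # Local dry run with a stand-in for the context object Deployment Manager passes in.
    demo_context = SimpleNamespace(
        env={"project": "my-project", "deployment": "demo"},
        properties={"zones": ["us-central1-a", "us-east1-b"]},
    )
    for resource in GenerateConfig(demo_context)["resources"]:
        print(resource["name"], resource["properties"]["machineType"])
```

Terraform (`count`, `for_each`) and Pulumi offer comparable loops in their own languages.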

Types (Models) of product offerings

SaaS = Software (web apps) as a Service =>

- Google.com Search, YouTube.com, Google Workspace: Gmail.com, Calendar, Tasks, Docs, Sheets, Drive, Google Apps.

PaaS = Platform (managed services) as a Service =>

- Cloud Functions, Cloud Run, API Gateway, Analytics

IaaS = Infra (resources) as a Service =>

- Compute, storage, databases, network.

Production systems such as Pokemon Go use a combination of several (Serverless) services:

Maturity Assessment

VIDEO: Cloud Maturity Assessment, along with VIDEO: Google’s Cloud Maturity Scale, which has 3 levels across the themes Learn, Lead, Scale, and Secure:

- Tactical short-term is self-taught, heroic project manager, change is slow

- Strategic mid-term has change management, templates central identity, hybrid network

- Transformational long-term has peer learning and cross-functional feature teams; changes are constant, low risk, and quickly fixed

https://cloud.google.com/docs/enterprise/setup-checklist

SLAs

Google offers these monthly uptime SLAs:

- 99.999% (about 5 minutes of downtime/year) on Spanner multi-region instances and Cloud Bigtable replicated instances with a multi-cluster routing policy (3 or more regions)

- 99.99% on Bigtable multi-cluster instances in fewer than 3 regions; multi-zone services and load balancing; BigQuery; App Engine + Dedicated Interconnect

- 99.95% on Cloud SQL, Cloud Formation

- 99.90% on Cloud Bigtable single-cluster instances; Standard storage in a regional location of Cloud Storage; Nearline or Coldline in a multi-region or dual-region location of Cloud Storage

- 99.50% on a single instance

- 99.00% on Nearline or Coldline storage in a regional location of Cloud Storage; Durable Reduced Availability storage in any location of Cloud Storage

Google network peering is not covered by SLAs.
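Converting an SLA percentage into allowed downtime is simple arithmetic: downtime = period × (100 − SLA%) / 100. This sketch assumes a 365-day year and a 30-day month. Google’s SLAs are stated as monthly uptime percentages, so the per-month figure is the one that governs SLA credits.

```python
"""Sketch: allowed downtime implied by an uptime SLA percentage."""

MINUTES_PER_YEAR = 365 * 24 * 60   # 525,600 (non-leap year)
MINUTES_PER_MONTH = 30 * 24 * 60   # 43,200 (30-day month)


def allowed_downtime_minutes(sla_percent: float, period_minutes: int) -> float:
    """Minutes of downtime permitted in the period without breaching the SLA."""
    return period_minutes * (100 - sla_percent) / 100


for sla in (99.999, 99.99, 99.95, 99.9, 99.5, 99.0):
    per_month = allowed_downtime_minutes(sla, MINUTES_PER_MONTH)
    per_year = allowed_downtime_minutes(sla, MINUTES_PER_YEAR)
    print(f"{sla}%: {per_month:.2f} min/month, {per_year:.1f} min/year")
```

For example, 99.999% works out to about 5.3 minutes per year, which is where the “5 minutes” figure comes from, but only about 26 seconds per 30-day month.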

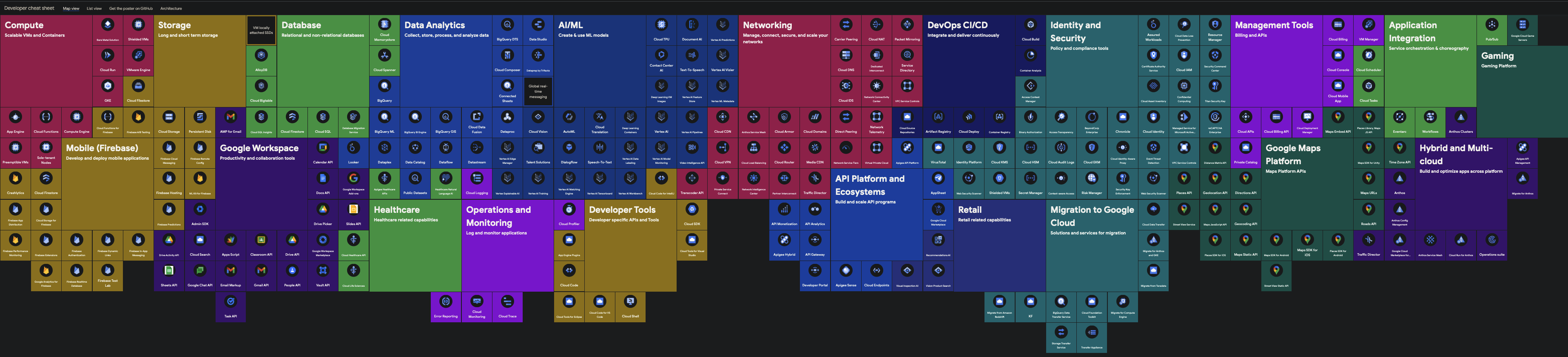

Google’s Hundreds of Products

For those who have good visual memory, click here to open this diagram which groups Google’s cloud products into colors.

Then click the icon for each service. Alternatively, from Chrome within GCP, download Google SVG icons using the Chrome extension Amazing Icon Downloader.


Groups not in the Console’s menu (below) are: “API Platforms and Ecosystems”, “Identity and Security”, “Migration to Google Cloud”, “Developer Tools”.

Google provides one sentence per service at https://cloud.google.com/products and on the “Products & solutions” page at https://console.cloud.google.com/products. Notice that the items in each category are listed in order of popularity. My contribution is arranging the categories in alphabetical order instead:

-

Analytics (BigQuery, Pub/Sub, Dataflow, Composer, Dataproc, Dataprep, IoT Core, Data Fusion, Looker, Healthcare, Financial Services, Datastream, Life Sciences, Data Catalog, Elastic Cloud, Databricks, Dataplex)

-

AI/ML (Vertex AI (Machine Learning), Natural Language, Tables, Translation, Document AI, Recommendations AI, Retail, Talent Solution, DocAI Warehouse, Discovery Engine, Speech, Vertex AI Vision)

AI Platform (Unified), Data Labeling -

DevOps CI/CD (Cloud Build,

Container Registry, Source Repositories, Artifact Registry, Cloud Deploy)Cloud Scheduler, Deployment Manager, Endpoints, Workflows, Private Catalog -

Compute (Compute Engine, Kubernetes Engine, VMware Engine, Anthos, Batch)

Container Engine -

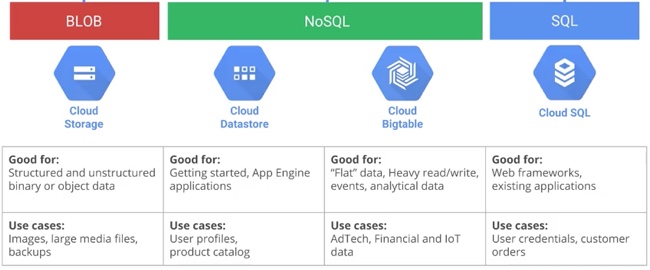

Database (SQL, Datastore, Filestore, Spanner, Bigtable, Memorystore, Database Migration, MongoDB Atlas, Neo4j Aura, Redis Ent., AlloyDB)

-

Distributed Cloud (Edge, Appliances)

-

Integration Services (Cloud Scheduler, Cloud Tasks, Workflow, Eventarc, Application Integration, Integration Connectors, Apigee)

-

Networking (VPC network, Network services, Hybrid Connectivity, Network Security, Network Intelligence, Network Service Tiers)

-

Operations (and Monitoring) (Logging, Monitoring, Error Reporting, Trace, Profiler, Capacity Planner)

Stackdriver -

Other Google products (Google Maps Platform, Immersive Stream, Google Workspace)

-

Storage (Google Storage, Filestore, Storage Transfer, Dell PowerScale)

-

Serverless (Cloud Run, Cloud Functions, App Engine, API Gateway, Endpoints)

-

Security (Security, Compliance)

-

Tools (Identity Platform, Deployment Manager, Service Catalog, Carbon Footprint, Apache Kafka on Confluence, Splunk Cloud, Cloud Workstations, (DB) Migration Center)

What was previously “BIG DATA” is now “Analytics”, and “TOOLS” is now “CI/CD”. Apigee and API Gateway are now in separate categories. Debugger, Previous menu categories:

- IDENTITY & SECURITY (Identity, Access, Security) was reallocated.

- PARTNER SOLUTIONS (Redis Enterprise, Apache Kafka, DataStax Astra, Elasticsearch Service, MongoDB Atlas, Cloud Volumes)

PROTIP: Hover over a menu item to see its lower-level menu. Escape from that by pressing the Esc key.

Google Support

- FREE (community only)

- $29/mo. + 3% of spend for Standard (4-hour email) Support

- $500/mo. + 3% of spend for Enhanced (1-hour phone) Support in additional languages. PROTIP: Higher than AWS!

- Those with paid Premium (15 min.) support get a TAM (Technical Account Manager) to provide tech previews, etc.
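To compare the paid tiers, this sketch applies the formula exactly as listed above: a base monthly fee plus 3% of the month’s cloud spend. Google’s actual invoices may apply minimums, tiered percentages, or caps that this model ignores, so check the current support pricing page before budgeting. The tier keys and function name are hypothetical.

```python
"""Sketch: monthly support cost using the base-fee-plus-3%-of-spend formula listed above."""

# Assumed from the list above; verify against Google's current support pricing.
BASE_FEE_USD = {"standard": 29.0, "enhanced": 500.0}
PERCENT_OF_SPEND = 3


def monthly_support_cost(tier: str, monthly_cloud_spend_usd: float) -> float:
    """Base fee for the tier plus a percentage of the month's cloud spend."""
    if tier not in BASE_FEE_USD:
        raise ValueError(f"unknown tier {tier!r}; expected one of {sorted(BASE_FEE_USD)}")
    return BASE_FEE_USD[tier] + monthly_cloud_spend_usd * PERCENT_OF_SPEND / 100


for spend in (1_000, 10_000, 50_000):
    print(spend, {tier: monthly_support_cost(tier, spend) for tier in BASE_FEE_USD})
```

At $10,000 of monthly spend, this model puts Enhanced at $800/month versus $329 for Standard.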

Social Community

- https://stackoverflow.com/collectives/google-cloud

- Join the Google Cloud Platform Community

- https://cloud.google.com/newsletters monthly emails.

- https://twitter.com/googlecloud = @googlecloud

- https://googlecloudplatform.blogspot.com

- Google on LinkedIn

- Google Developers on LinkedIn

- Google Developers Group on LinkedIn

- https://developers.google.com/community/gdg = Google Developer Groups (Chapters) local

- DevFest events

- Google Cloud Events on YouTube

- Google NEXT conference online.

- GOTO conference online.

- https://www.facebook.com/GDGCloudHanoi

- https://gdgcloudhanoi.dev/

- https://cloud.google.com/find-a-partner

Hands-on training

There are several ways to obtain hands-on experience, with cloud time.

- learndigital.withgoogle.com

- Qwiklabs (credits)

- Codelabs (not FREE)

- Coursera

- Pluralsight

- Rock Stars on YouTube

WithGoogle.com

https://learndigital.withgoogle.com/digitalgarage.com/courses lists courses about Marketing, Career development, as well as the full range of Google’s products, from many providers:

- Futurelearn’s Agile and Design Thinking is $129 after a month or $27.99/month. They also have courses on Raspberry Pi.

- Simplilearn

Qwiklabs Training and Cloud Time

https://www.cloudskillsboost.google incorporates features of Qwiklabs, purchased by Google to provide a UX to cloud instance time (around an hour each class).

PROTIP: Videos of Qwiklabs being completed

https://github.com/quiccklabs/Labs_solutions

PROTIP: List of broken Qwiklabs

In a browser, when a green “Start Lab” appears:

- PROTIP: To avoid wasting time during the timed lesson, before clicking “Start Lab”, read the Overview, Objectives, and all Task instructions.

- To avoid corrupting your cookies, copy the URL. Open an Incognito (private) window. Notice the “Private Browsing” at the upper right? Click “Search or enter address”. Paste the URL. Sign in with Google.

- Click on the green “Start Lab” to start the clock ticking down.

- PROTIP: So that you can read instructions on one screen and perform the work on another screen without jumping between screens, right-click on “Open Google Cloud console” to select “Open Link in New Window”.

- Click the boxes icon next to the Password value to copy it into your invisible Clipboard.

- Drag the new window to another monitor, if you have one.

- Click the blue “Next” to Sign in.

- Click on “Enter your password” and press command+V to paste. Click Next.

- Click “I understand”.

- Click the checkbox to agree. Click “AGREE AND CONTINUE”.

- You should now be at the Google Cloud console.

- The service navigation menu icon is at the upper-left corner. It’s a toggle to show or hide the left menu of products and services.

Also press Esc.

- A Tutorial pane that appears at the right side can be dismissed by clicking its “X”.

Get support by emailing support@qwiklabs.com, but replies come from support@qwiklab.zendesk.com because their support website is at https://qwiklab.zendesk.com/hc/en-us/requests/new

PROTIP: Create an email filter to star and mark as important emails from support@qwiklab.zendesk.com

If the ping command is missing on a Debian or Ubuntu lab VM, install it:

sudo apt-get install iputils-ping

PROTIP: If you are not able to type in a box for entry, look at the top of the screen to see if there is a (usually blue) modal pop-up window hogging attention. Click the “X” to dismiss that window.

References:

Google Codelabs

Google Codelabs (at https://codelabs.developers.google.com) are interactive instructional tutorials, authored in Google Docs using Markdown formatting and the claat Go-based command line tool.

Codelabs are NOT FREE. You need to supply a real account associated with a credit card for billing.

Get $10 for hands-on practice using Google’s Codelabs: Doing a Google Cloud codelab? Start here

https://console.developers.google.com/freetrial

Some Codelabs:

- Cloud Spanner with Terraform on GCP

- Running Node.js on a Virtual Machine

- https://codelabs.developers.google.com/codelabs/cloud-natural-language-python3#0

- https://pypi.org/project/pyhcl/ (from 2020) parses HCL

- HashiCorp maintains https://github.com/hashicorp/terraform-config-inspect to extract top-level objects from a Terraform module. See https://github.com/yutannihilation/go-parse-hcl-example/blob/master/main.go

- Extend an Android app to Google Assistant with App Actions

Coursera courses

Google developed classes on Coursera and at https://cloud.google.com/training

- https://www.coursera.org/learn/gcp-infrastructure-scaling-automation

Pluralsight videos

On Pluralsight, Lynn Langit created several video courses early in 2013/14 when Google Fiber was only available in Kansas City:

- Introduction to Google Cloud [52min] for Developers

- Introduction to Google Compute Engine

- https://firebase.google.com/docs/reference/rest/storage/rest/

Pluralsight redirects to Qwiklabs. It’s best to use a second monitor to display instructions.

Rock Stars on YouTube, etc.

Google’s program:

YouTube creators:

- https://www.freecodecamp.org/news/google-cloud-platform-from-zero-to-hero/

- https://www.youtube.com/@AwesomeGCP/videos

- http://www.roitraining.com/google-cloud-platform-public-schedule/ in the US and UK. $599 per day

- https://deis.com/blog/2016/first-kubernetes-cluster-gke/

- https://hub.docker.com/r/lucasamorim/gcloud/

- https://github.com/campoy/go-web-workshop

- http://www.anengineersdiary.com/2017/04/google-cloud-platform-tutorial-series_71.html

- https://bootcamps.ine.com/products/google-cloud-architect-exam-bootcamp $1,999 bootcamp

- https://www.youtube.com/watch?v=jpno8FSqpc8&list=RDCMUC8butISFwT-Wl7EV0hUK0BQ&start_radio=1&rv=jpno8FSqpc8 by Antoni

How to get free cloud time

A. Free $300 account over first 60 days of each new account.

B. For individuals, in 2023 Google began offering a $299/year “Innovators Plus” subscription for $500 cloud credits and a $200 certification voucher.

C. For startups, Google has a startup program (https://cloud.google.com/startup) that gives $200,000 toward cloud costs and $200 of Skills Boost for the first 2 years of a startup.

D. For Google partners, Partner Certification Kickstart

A. Free $300 account for 60 days

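In US regions, new accounts get $300 in free-trial credits. The trial period has changed over the years (it was once 12 months), so confirm the current length in the FAQ below.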

Free-trial usage has these limits:

- No more than 8 cores at once across all instances

- No more than 100 GB of solid state disk (SSD) space

- Max. 2TB (2,000 GB) total persistent standard disk space

PROTIP: Compute Engine bills VM usage in per-second increments with a 1-minute minimum charge. (Google originally billed in minute-level increments with a 10-minute minimum, which contrasted with the hourly billing AWS used at the time.)
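To see how the billing increment and minimum charge change the cost of short experiments, here is a minimal shell sketch. Both values are parameters, so the same arithmetic covers per-minute billing with a 10-minute minimum or per-second billing with a 1-minute minimum. The function names and the hourly rate are hypothetical.

```shell
#!/usr/bin/env bash
# billed_seconds RUNTIME INCREMENT MINIMUM
# Rounds RUNTIME (seconds) up to a whole number of INCREMENT-second units,
# then raises the result to the MINIMUM charge (seconds) if it is lower.
billed_seconds() {
  local runtime=$1 increment=$2 minimum=$3
  local billed=$(( (runtime + increment - 1) / increment * increment ))
  if [ "$billed" -lt "$minimum" ]; then
    billed=$minimum
  fi
  echo "$billed"
}

# billed_cost RUNTIME INCREMENT MINIMUM HOURLY_RATE
# Prints the dollar cost of the billed seconds at the given hourly rate.
billed_cost() {
  local secs
  secs=$(billed_seconds "$1" "$2" "$3")
  awk -v s="$secs" -v r="$4" 'BEGIN { printf "%.4f\n", s * r / 3600 }'
}

# Same 5-minute (300-second) VM under two billing models:
#   billed_seconds 300 60 600   # per-minute, 10-minute minimum -> 600
#   billed_seconds 300 1 60     # per-second, 1-minute minimum  -> 300
```

The minimum charge dominates for VMs you start and stop quickly, which is exactly the pattern of hands-on lab work.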

- Read the fine print in the FAQ to decide what Google permits:

https://cloud.google.com/free/docs/frequently-asked-questions

- Read Google’s Pricing Philosophy:

https://cloud.google.com/pricing/philosophy

Gmail accounts

PROTIP: Use a different password for your Gmail account than the one you use to register for Google events such as the Next conference (Aug 29-31, $1,600).

- NOTE: Create several Gmail accounts, each with a different identity (name, birthdate, credit card). The name on each credit card needs to match its account, and you may need to reuse the same phone number because additional phone numbers are expensive.

PROTIP: It can be exhausting, but write down all the details (including the date when you opened the account) in case you have to recover the password.

PROTIP: Use a different browser so you can flip quickly between identities.

- Use Chrome browser for Gmail account1 with an Amex card for project1

- Use Firefox browser for Gmail account2 with a Visa card for project2

- Use Brave browser for Gmail account3 with a Mastercard for project3

- Use Safari browser for Gmail account4 with a Discover card for project4

- In the appropriate internet browser, create the Gmail address, then use the same combination on the free trial registration page and in the Console.

- Click the “Try It Free” button. Complete the registration. Click “Agree and continue”, then “Start my new trial”.

- With the appropriate account and browser, configure at

- PROTIP: Bookmark the project URL in your browser.

PROTIP: Google remembers your last project and its region, and gives them to you even if you do not specify them in the URL.

Configure Limits

- CAUTION: Your bill can suddenly jump to thousands of dollars a day, with no explanation. Configure budgets and budget alerts to catch this early; a budget alerts you but does not by itself cap spending.

Ways of interacting with Google Cloud

As with other CSPs, there are several ways to interact with Google Cloud:

- Manual clicking and typing on the web-based Console GUI at https://console.cloud.google.com

- Execution of CLI commands in the web-based Google Shell initiated from the web console GUI

- Execution of CLI commands in the Qwiklabs training environment

- Execution of CLI commands from a Terminal on your macOS laptop after installing gcloud and other utilities

- Execution of Terraform declarative HashiCorp Configuration Language (HCL) specifications to create Infrastructure as Code (IaC)

- Execution of a programmatic IaC tool such as Pulumi

- Calls to REST APIs from custom application code you write in Python, Go, etc.

- The “Google Cloud” Console mobile app, which provides view-only access

In this article, we start with the web GUI, then move on to Qwiklabs and other CLIs, then Terraform and API coding.

macOS utilities for Google Cloud

PROTIP: Even if you are not going to develop on macOS, it helps to be prepared to copy files from your laptop to Google Cloud.

Web GCP Console GUI (Dashboard)

- https://console.cloud.google.com is the Welcome page for a specific project.

- Select a project or create one.

- https://console.cloud.google.com/home/dashboard displays panes for your project, from among the list obtained by clicking the “hamburger” menu icon at the upper-left corner.

Alternately, create a new project:

gcloud projects create $MY_PROJECT_ID
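Because gcloud projects create fails when an ID breaks Google’s naming rules (6 to 30 characters of lowercase letters, digits, or hyphens, starting with a letter and not ending with a hyphen), a quick local check can save a round trip. This is a minimal sketch: the function names are hypothetical, and the check cannot confirm that the ID is globally unique, which only Google can tell you.

```shell
#!/usr/bin/env bash
# is_valid_project_id ID
# Succeeds (exit 0) only if ID has 6-30 characters of lowercase letters,
# digits, or hyphens, starts with a letter, and does not end with a hyphen.
is_valid_project_id() {
  printf '%s\n' "$1" | grep -Eq '^[a-z][a-z0-9-]{4,28}[a-z0-9]$'
}

# create_project ID : validate locally, then ask gcloud to create the project.
create_project() {
  if ! is_valid_project_id "$1"; then
    echo "Invalid project ID: $1" >&2
    return 1
  fi
  gcloud projects create "$1"
}
```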

Use GUI to create first project

All resources are created under a project (and under an Organization, if one has been set up), so use the GUI to create the first project.

- In a browser, https://console.cloud.google.com

- At the upper-left, click the organization or project name to the right of “Google Cloud”.

- Click “NEW PROJECT”.

- A name such as “My Project-12345” (or some other number) is automatically filled in.

- Change it to something more meaningful.

- Notice that the Project ID is auto-created with a number suffix.

Cloud Shell in the web GUI

The Cloud Shell provides command line access on a web browser, with nothing to install.

Sessions time out after 1 hour of inactivity.

Language support for Java, Go, Python, Node, PHP, Ruby.

Not meant for high computation use.

- HANDS-ON: Getting Started with Cloud Shell and gcloud

- https://cloud.google.com/sdk/gcloud/reference - the SDK provides CLI utilities bq, gsutil, gcloud.

- PROTIP: Instead of manually performing the commands below, invoke a script by copying this and pasting it into Cloud Shell:

sh -c "$(curl -fsSL https://raw.githubusercontent.com/wilsonmar/gcp-samples/main/gcpinfo.sh)" -v

- TODO: Set alias “gshellinfo” to invoke above github.com/wilsonmar/???/gshellinfo.sh

- Rerun using alias gcpinfo

Here are the steps you skip by invoking my script:

- Set the prompt so the cursor returns to the same location on the left, with an extra blank line and the present working directory (pwd):

export PS1="\n \u/\w\[\033[33m\]\n$ "

PROTIP: I use the above command so often I have it as one of the buttons on my Stream Deck box.

- Know what versions you are working with, for troubleshooting later:

gcloud version

Google Cloud SDK 431.0.0
alpha 2023.05.12
app-engine-go 1.9.75
app-engine-java 2.0.14
app-engine-python 1.9.104
app-engine-python-extras 1.9.100
beta 2023.05.12
bigtable
bq 2.0.92
bundled-python3-unix 3.9.16
cbt 0.15.0
cloud-datastore-emulator 2.3.0
cloud-run-proxy 0.3.0
core 2023.05.12
gcloud-crc32c 1.0.0
gke-gcloud-auth-plugin 0.5.3
gsutil 5.23
kpt 1.0.0-beta.31
local-extract 1.5.8
minikube 1.30.1
nomos 1.15.0-rc.6
pubsub-emulator 0.8.2
skaffold 2.3.0

- Versions of pre-installed language support:

dotnet --info       # 6.0.408, Runtime: debian 11, etc.
go version          # go1.20.4 linux/amd64
java -version       # "17.0.6" 2023-01-17
node -v             # v18.12.1
php -v              # 7.4.33 (cli) (built: Apr 9 2023 16:54:16) ( NTS )
python --version    # 3.9.2
ruby -v             # 2.7.8p225 (2023-03-30 revision 1f4d455848) [x86_64-linux]

- Check how much disk space is available (Google gives each user a 5 GB home directory):

df

Filesystem                        1K-blocks     Used Available Use% Mounted on
overlay                            62710164 48587684  14106096  78% /
tmpfs                                 65536        0     65536   0% /dev
/dev/sda1                          62710164 48587684  14106096  78% /root
/dev/disk/by-id/google-home-part1   5018272   253184   4486612   6% /home
/dev/root                           2003760  1029144    974616  52% /usr/lib/modules
shm                                   65536        0     65536   0% /dev/shm
tmpfs                               3278072     1236   3276836   1% /google/host/var/run
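To script the check above, you can pull the “Available” column for /home out of df output. This minimal sketch assumes the usual `df -k` column layout and a 1 GB warning threshold; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# home_avail_kb : reads `df -k` output on stdin and prints the "Available"
# column (1K blocks) of the filesystem whose mount point is exactly /home.
home_avail_kb() {
  awk '$NF == "/home" { print $4 }'
}

# check_home_space : warn when less than 1 GB (1048576 KB) is left on /home.
check_home_space() {
  local avail
  avail=$(df -k /home | home_avail_kb)
  if [ -n "$avail" ] && [ "$avail" -lt 1048576 ]; then
    echo "WARNING: only ${avail} KB left on /home" >&2
  fi
}
```

On a machine where /home is not its own mount, the awk filter matches nothing and check_home_space stays silent.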

NOTE: Need more disk space? Google Cloud Workstations (go/cloud-workstations-external-deck) is an enterprise focused offering to enable custom disk size/VM type, VPC, and VPC-SC support.

- List all zone names and region codes Google offers:

gcloud compute zones list

Sample response:

NAME                      REGION                STATUS  NEXT_MAINTENANCE  TURNDOWN_DATE
asia-east1-c              asia-east1            UP
asia-east1-b              asia-east1            UP
asia-east1-a              asia-east1            UP
asia-northeast1-a         asia-northeast1       UP
asia-northeast1-c         asia-northeast1       UP
asia-northeast1-b         asia-northeast1       UP
asia-south1-c             asia-south1           UP
us-central1-c             us-central1           UP
asia-south1-a             asia-south1           UP
asia-south1-b             asia-south1           UP
asia-southeast1-a         asia-southeast1       UP
asia-southeast1-b         asia-southeast1       UP
australia-southeast1-c    australia-southeast1  UP
australia-southeast1-b    australia-southeast1  UP
australia-southeast1-a    australia-southeast1  UP
europe-west1-c            europe-west1          UP
europe-west1-b            europe-west1          UP
europe-west1-d            europe-west1          UP
europe-west2-b            europe-west2          UP
europe-west2-a            europe-west2          UP
europe-west2-c            europe-west2          UP
europe-west3-b            europe-west3          UP
europe-west3-a            europe-west3          UP
europe-west3-c            europe-west3          UP
southamerica-east1-c      southamerica-east1    UP
southamerica-east1-b      southamerica-east1    UP
southamerica-east1-a      southamerica-east1    UP
us-central1-a             us-central1           UP
us-central1-f             us-central1           UP
us-central1-c             us-central1           UP
us-central1-b             us-central1           UP
us-east1-b                us-east1              UP
us-east1-d                us-east1              UP
us-east1-c                us-east1              UP
us-east4-c                us-east4              UP
us-east4-a                us-east4              UP
us-east4-b                us-east4              UP
us-west1-c                us-west1              UP
us-west1-b                us-west1              UP
us-west1-a                us-west1              UP

REMEMBER: A region is a higher-order (more encompassing) concept than a zone: each region contains several zones.

To list the URI of each zone instead:

gcloud compute zones list --uri

(A long list is shown)
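Since zone names follow the pattern REGION-LETTER (such as us-central1-f in region us-central1), a script can derive the region from a zone name without another API call. A minimal sketch, assuming that naming convention holds: zone_to_region and zones_in_region are hypothetical helper names, and the second one relies on gcloud’s `~` regular-expression filter operator.

```shell
#!/usr/bin/env bash
# zone_to_region ZONE : drop the final "-<letter>" suffix of a zone name.
zone_to_region() {
  echo "${1%-*}"
}

# zones_in_region REGION : list zone names in one region (requires gcloud).
zones_in_region() {
  gcloud compute zones list --filter="name~^$1-" --format="value(name)"
}
```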

- List projects for your account:

gcloud projects list --format="table[box](name:sort=1:reverse, createTime.date('%d-%m-%Y'))"

View your Project ID from the $GOOGLE_CLOUD_PROJECT variable value inherited from the Google Cloud console, even though your Cloud Shell instance is not directly associated with or managed by the project:

echo $GOOGLE_CLOUD_PROJECT

- To view your current project number and zone, run the following command from a Cloud Shell session:

curl metadata/computeMetadata/v1/instance/zone

projects/PROJECT_NUMBER/zones/us-west1-b

Alternately:

curl http://metadata.google.internal/computeMetadata/v1/instance/zone -H Metadata-Flavor:Google | cut '-d/' -f4
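The metadata value has the form projects/PROJECT_NUMBER/zones/ZONE, so the same cut approach can split out every part at once. This sketch assumes that format; parse_zone_path is a hypothetical name, and it prints the project number, the zone, and the region (derived by dropping the zone’s letter suffix).

```shell
#!/usr/bin/env bash
# parse_zone_path VALUE : split "projects/PROJECT_NUMBER/zones/ZONE"
# and print "PROJECT_NUMBER ZONE REGION".
parse_zone_path() {
  local project_number zone
  project_number=$(echo "$1" | cut -d/ -f2)
  zone=$(echo "$1" | cut -d/ -f4)
  echo "$project_number $zone ${zone%-*}"
}

# In Cloud Shell or on a VM, feed it the live metadata value:
#   parse_zone_path "$(curl -s -H 'Metadata-Flavor: Google' \
#     http://metadata.google.internal/computeMetadata/v1/instance/zone)"
```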

- View the hostname of your Cloud Shell VM, which you can use to make HTTPS requests to the environment.

echo $WEB_HOST

GUID.cs-us-west1-ijlt.cloudshell.dev

References:

- https://www.youtube.com/watch?v=RdDyF3jVbbE

CLI programs & commands on your Terminal

Google provides these command-line tools:

- gcloud CLI, installed with google-cloud-sdk

- gsutil to access Cloud Storage

- bq for BigQuery tasks

- kubectl for Kubernetes

- anthos for multicloud using Kubernetes

- gcpdiag to lint projects (for troubleshooting)

See the Google Cloud SDK for Windows (gcloud) for your programming pleasure.

BLOG: Graphical user interface (GUI) for Google Compute Engine instance

gcloud CLI commands

- Documentation on the gcloud CLI command:

https://cloud.google.com/sdk/gcloud/reference

“GROUP” refers to the name of a group of related commands, such as compute or projects.

https://www.cloudskillsboost.google/focuses/563?parent=catalog

- Click the Cloud Shell icon in the Google Cloud Platform Console.

- Click “START CLOUD SHELL” at the bottom of this pop-up.

When the CLI appears online:

- See that your present working directory is /home/ followed by your account name:

pwd

- See the folder with your account name:

echo ${HOME}

- Just your account name:

echo ${USER}

Read the welcome file:

nano README-cloudshell.txt

Your 5GB home directory will persist across sessions, but the VM is ephemeral and will be reset approximately 20 minutes after your session ends. System-wide changes will NOT persist beyond that.

- Type “gcloud help” to get help on using Cloud SDK. For more examples, visit

https://cloud.google.com/shell/docs/quickstart and

https://cloud.google.com/shell/docs/examples

Type “cloudshell help” to get help on using the “cloudshell” utility. Common functionality is aliased to short commands in your shell, for example, you can type “dl <filename>” at Bash prompt to download a file.

Type “cloudshell aliases” to see these commands.

- Type “help” to see this message any time. Type “builtin help” to see Bash interpreter help.

Other resources:

Text Editor

- Click the pencil icon for the built-in text editor.

- Or edit text using the built-in nano or vim.

- PROTIP: Turn on Boost Mode to run Docker with more memory.

- Switch to your macOS local machine. Navigate to where you store your secret files.

- Copy all content to your invisible Clipboard. On a Mac:

cat ~/myvars.sh | pbcopy

- Switch back to Google Cloud Shell. Since it’s a Linux machine, define these substitutes:

alias pbcopy='xsel --clipboard --input'
alias pbpaste='xsel --clipboard --output'

- Use the aliases defined above to execute the contents of your Clipboard (containing export CLI commands):

eval "$(pbpaste)"

If there are secrets, it’s better you don’t save them in a file on Google Cloud Shell.

- Define environment variables to hold the zone and region:

export CLOUDSDK_COMPUTE_ZONE=us-central1-f
export CLOUDSDK_COMPUTE_REGION=us-central1
echo $CLOUDSDK_COMPUTE_ZONE
echo $CLOUDSDK_COMPUTE_REGION

TODO: Get the default region and zone into environment variables.

curl "http://metadata.google.internal/computeMetadata/v1/instance/zone" -H "Metadata-Flavor: Google"

- Set the zone (for example, us-central1-f, defined above):

gcloud config set compute/zone ${CLOUDSDK_COMPUTE_ZONE}

See https://cloud.google.com/compute/docs/storing-retrieving-metadata

List Projects, Set one

- Get the list of Project IDs:

gcloud projects list

Example (default sort by project ID):

PROJECT_ID   NAME  PROJECT_NUMBER
what-182518  CICD  608556368368

- To get the creation time of each project:

gcloud projects list --format="table(projectId,createTime)"

Response:

PROJECT_ID           CREATE_TIME
applied-algebra-825  2015-01-14T06:51:30.910Z

- Alternately, gcloud projects describe 608556368368 gives too much info:

createTime: '2022-09-14T14:30:01.540Z'
lifecycleState: ACTIVE
name: something
projectId: what-182518
projectNumber: '608556368368'

- To get the last date each project was used and similar details, see this example code using the APIs/SDK, involving the f1-micro instance:

- https://github.internet2.edu/nyoung/gcp-gce-project-audit-bq

- https://github.internet2.edu/nyoung/gcp-project-audit-simple

- PROTIP: Instead of manually constructing commands, use the environment variable Google defines:

gcloud config set project "${DEVSHELL_PROJECT_ID}"

Alternately, if you want your own:

gcloud config get-value project

project = qwiklabs-gcp-02-807529dcf2db
Your active configuration is: [cloudshell-1079]

PROJECT_ID=$(gcloud config get-value project)
MY_REGION=$(gcloud config get-value compute/region)
MY_ZONE=$(gcloud config get-value compute/zone)

- PROTIP: The shell variable $DEVSHELL_PROJECT_ID is defined by Google to refer to the project ID of the project used to start the Cloud Shell session.

echo $DEVSHELL_PROJECT_ID

- List the active project (shown by its ID, not its “Friendly Name” such as “cp100”):

gcloud config list project

A sample response (the value reads “(unset)” if no project is set):

[core]
project = what-182518

Your active configuration is: [cloudshell-20786]

- Print just the project ID (suppressing other warnings/errors):

gcloud config get-value project 2> /dev/null

Alternately:

gcloud config list --format 'value(core.project)' 2>/dev/null

Quotas

- PROTIP: Get COMPUTE information about a project using the project environment variable:

gcloud compute project-info describe --project ${DEVSHELL_PROJECT_ID}

Project metadata includes quotas:

quotas:
- limit: 1000.0
  metric: SNAPSHOTS
  usage: 1.0
- limit: 5.0
  metric: NETWORKS
  usage: 2.0
- limit: 100.0
  metric: FIREWALLS
  usage: 13.0
- limit: 100.0
  metric: IMAGES
  usage: 1.0
- limit: 1.0
  metric: STATIC_ADDRESSES
  usage: 1.0
- limit: 200.0
  metric: ROUTES
  usage: 31.0
- limit: 15.0
  metric: FORWARDING_RULES
  usage: 2.0
- limit: 50.0
  metric: TARGET_POOLS
  usage: 0.0
- limit: 50.0
  metric: HEALTH_CHECKS
  usage: 2.0
- limit: 8.0
  metric: IN_USE_ADDRESSES
  usage: 2.0
- limit: 50.0
  metric: TARGET_INSTANCES
  usage: 0.0
- limit: 10.0
  metric: TARGET_HTTP_PROXIES
  usage: 1.0
- limit: 10.0
  metric: URL_MAPS
  usage: 1.0
- limit: 5.0
  metric: BACKEND_SERVICES
  usage: 2.0
- limit: 100.0
  metric: INSTANCE_TEMPLATES
  usage: 1.0
- limit: 5.0
  metric: TARGET_VPN_GATEWAYS
  usage: 0.0
- limit: 10.0
  metric: VPN_TUNNELS
  usage: 0.0
- limit: 3.0
  metric: BACKEND_BUCKETS
  usage: 0.0
- limit: 10.0
  metric: ROUTERS
  usage: 0.0
- limit: 10.0
  metric: TARGET_SSL_PROXIES
  usage: 0.0
- limit: 10.0
  metric: TARGET_HTTPS_PROXIES
  usage: 1.0
- limit: 10.0
  metric: SSL_CERTIFICATES
  usage: 1.0
- limit: 100.0
  metric: SUBNETWORKS
  usage: 26.0
- limit: 10.0
  metric: TARGET_TCP_PROXIES
  usage: 0.0
- limit: 24.0
  metric: CPUS_ALL_REGIONS
  usage: 3.0
- limit: 10.0
  metric: SECURITY_POLICIES
  usage: 0.0
- limit: 1000.0
  metric: SECURITY_POLICY_RULES
  usage: 0.0
- limit: 6.0
  metric: INTERCONNECTS
  usage: 0.0

REMEMBER: Up to 100 user-managed service accounts can be created in a project.
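Reading the full quota list by eye is tedious. The sketch below assumes gcloud’s --flatten=quotas and csv formatting turn the list into METRIC,LIMIT,USAGE rows, then uses awk to print only the metrics at or above a usage threshold (80% by default). The function names are hypothetical.

```shell
#!/usr/bin/env bash
# quota_alerts THRESHOLD_PCT : read "METRIC,LIMIT,USAGE" lines on stdin and
# print each metric whose usage is at or above THRESHOLD_PCT of its limit.
quota_alerts() {
  awk -F, -v t="$1" '$2 > 0 {
    pct = 100 * $3 / $2
    if (pct >= t) printf "%s %.0f%%\n", $1, pct
  }'
}

# project_quota_alerts PROJECT_ID [THRESHOLD_PCT] : live data (requires gcloud).
project_quota_alerts() {
  gcloud compute project-info describe --project "$1" \
    --flatten=quotas \
    --format="csv[no-heading](quotas.metric,quotas.limit,quotas.usage)" |
    quota_alerts "${2:-80}"
}
```

With the sample quotas above, STATIC_ADDRESSES (1 of 1 used) is the only metric that would be reported.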

config list

- List configuration information for the currently active project:

gcloud config list

Sample response:

[component_manager]
disable_update_check = True
[compute]
gce_metadata_read_timeout_sec = 5
[core]
account = johndoe@gmail.com
check_gce_metadata = False
disable_usage_reporting = False
project = what-182518
[metrics]
environment = devshell

Your active configuration is: [cloudshell-20786]

- List projects to which your account has access:

gcloud projects list

- Populate your SSH config with host entries for your Compute Engine instances (the first run also generates an SSH key pair):

gcloud compute config-ssh

WARNING: The private SSH key file for gcloud does not exist.
WARNING: The public SSH key file for gcloud does not exist.
WARNING: You do not have an SSH key for gcloud.
WARNING: SSH keygen will be executed to generate a key.
Generating public/private rsa key pair.
Enter passphrase (empty for no passphrase): _

Google Shell

- Set the default zone:

gcloud config set compute/zone us-central1-f

Example:

export MY_ZONE="us-east1-c"

Local gcloud CLI usage

Get the CLI to run locally on your laptop:

- Install. On macOS use Homebrew:

brew install --cask google-cloud-sdk

Alternately:

- In https://cloud.google.com/sdk/downloads

- Click the link for Mac OS X (x86_64) like “google-cloud-sdk-173.0.0-darwin-x86_64.tar.gz” to your Downloads folder.

- Double-click the file to unzip it (from 13.9 MB to a 100.6 MB folder). If you’re not seeing a folder in Finder, use another unzip utility.

- Move the folder to your home folder.

Either way, edit environment variables on Mac.

PROTIP: The installer creates folder ~/google-cloud-sdk

- PROTIP: To quickly navigate to the folder with just 3 characters (gcs), add this to macsetup/aliases.zsh:

alias gcs='cd ~/google-cloud-sdk'

- Add the path to that folder to the $PATH variable within your ~/.bash_profile or ~/.zshrc:

export PATH="$PATH:$HOME/google-cloud-sdk/bin"
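If that export line sits in a file you source repeatedly, PATH gains a duplicate entry each time. A minimal sketch of an idempotent alternative (path_append is a hypothetical helper name) that works in both bash and zsh:

```shell
#!/usr/bin/env bash
# path_append DIR : append DIR to PATH only if it is not already present,
# so re-sourcing ~/.bash_profile or ~/.zshrc does not add duplicates.
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;                     # already on PATH: nothing to do
    *) PATH="${PATH:+$PATH:}$1" ;;   # append (no leading ":" if PATH was empty)
  esac
  export PATH
}

path_append "$HOME/google-cloud-sdk/bin"
```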

- That’s where executables for gcloud, gsutil, and bq are located, as well as .py (Python scripts).

If you installed with Homebrew on Apple Silicon, also consider sourcing the path file it provides:

source "/opt/homebrew/Caskroom/google-cloud-sdk/latest/google-cloud-sdk/path.zsh.inc"

Authenticate using gcloud

- Authenticate Application Default Credentials (used by client libraries and tools such as Terraform) using the Google Cloud SDK:

gcloud auth application-default login

This pops up a browser page for you to select the Google (Gmail) account you want to use.

- If “Updates are available for some Google Cloud CLI components”, install them:

gcloud components update

Account Login into Google Cloud

- PROTIP: If you are juggling several GCP accounts, prepare a file containing variables such as these, so that one action sets ALL the variables and values you’ll need:

export MY_GCP_ORG="???"
export MY_GCP_ACCOUNT="johndoe@gmail.com"
export MY_PROJECT_ID="123456789"
export MY_LOCATION="us-central1"
export MY_ZONE="us-central1-c"

The script would activate the values:

gcloud config set project "$MY_PROJECT_ID"

Updated property [core/project].
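One way to keep several identities apart is to combine a per-account variables file with gcloud’s named configurations (gcloud config configurations create/activate), so switching accounts becomes one command. This sketch is an assumption about how you might organize it: the file layout and the write_profile and activate_profile names are hypothetical, and MY_LOCATION is derived from the zone name.

```shell
#!/usr/bin/env bash
# write_profile FILE ACCOUNT PROJECT_ID ZONE
# Write one identity's variables to FILE; MY_LOCATION is the zone's region.
write_profile() {
  cat > "$1" <<EOF
export MY_GCP_ACCOUNT="$2"
export MY_PROJECT_ID="$3"
export MY_ZONE="$4"
export MY_LOCATION="${4%-*}"
EOF
}

# activate_profile CONFIG_NAME FILE
# Load FILE, then switch to (or create) a named gcloud configuration and
# point it at those values (requires gcloud).
activate_profile() {
  . "$2" || return 1
  gcloud config configurations activate "$1" 2>/dev/null ||
    gcloud config configurations create "$1"
  gcloud config set account "$MY_GCP_ACCOUNT"
  gcloud config set project "$MY_PROJECT_ID"
  gcloud config set compute/zone "$MY_ZONE"
  gcloud config set compute/region "$MY_LOCATION"
}
```

For example, activate_profile personal ~/.gcp/personal.sh (a hypothetical path) would switch gcloud to the “personal” configuration in one step.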

- Some APIs (Maps, BigQuery) require billing to be enabled for the project using them. See https://cloud.google.com/billing/docs/how-to/verify-billing-enabled

echo "MY_PROJECT_ID=$MY_PROJECT_ID"
yes | gcloud beta billing projects describe "$MY_PROJECT_ID"

This may require installation of additional gcloud components (the yes answers the install prompt).
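To check billing in a script rather than by reading YAML, you can ask for just the billingEnabled field, which gcloud prints as True or False. A minimal sketch; billing_enabled is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# billing_enabled PROJECT_ID : succeed (exit 0) only when the project's
# billing info reports billingEnabled as True (requires gcloud).
billing_enabled() {
  local enabled
  enabled=$(gcloud beta billing projects describe "$1" \
    --format="value(billingEnabled)" 2>/dev/null)
  [ "$enabled" = "True" ]
}

# Example guard before calling an API that needs billing:
#   billing_enabled "$MY_PROJECT_ID" || echo "Enable billing for $MY_PROJECT_ID first" >&2
```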

- List the billing accounts you can access (billing account IDs look like 012345-6789AB-CDEF01, not email addresses):

gcloud beta billing accounts list

- PROTIP: Log in to a specific Google account using gcloud auth login:

gcloud auth login "$MY_GCP_ACCOUNT"

On success, the browser shows:

https://cloud.google.com/sdk/auth_success

- Navigate to a project folder where you store your custom Python code.

- Initialize the SDK:

gcloud init

Welcome! This command will take you through the configuration of gcloud.

Settings from your current configuration [default] are:
auth:
  service_account_use_self_signed_jwt: None
core:
  account: wilsonmar@gmail.com
  disable_usage_reporting: 'True'

Pick configuration to use:
 [1] Re-initialize this configuration [default] with new settings
 [2] Create a new configuration
Please enter your numeric choice: _

TODO:

- Show Google’s variable for the Project ID:

echo "$GOOGLE_CLOUD_PROJECT"

- Create a Cloud Storage bucket named after your Project ID (set LOCATION first, for example export LOCATION=US):

gsutil mb -l $LOCATION gs://$DEVSHELL_PROJECT_ID

If prompted, click AUTHORIZE.
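gsutil mb rejects names that break Cloud Storage’s bucket naming rules, and the error only arrives after the API call. This simplified local check covers names without dots: 3 to 63 lowercase letters, digits, hyphens, or underscores, beginning and ending with a letter or digit. It deliberately skips dotted (domain-style) names and reserved words; the function names are hypothetical, and the US multi-region default is only an example.

```shell
#!/usr/bin/env bash
# is_valid_bucket_name NAME : simplified Cloud Storage name check for names
# WITHOUT dots: 3-63 chars of lowercase letters, digits, "-" or "_",
# beginning and ending with a letter or digit. Reserved words are not checked.
is_valid_bucket_name() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9][a-z0-9_-]{1,61}[a-z0-9]$'
}

# make_bucket NAME [LOCATION] : validate, then create (requires gsutil).
make_bucket() {
  if ! is_valid_bucket_name "$1"; then
    echo "Invalid bucket name: $1" >&2
    return 1
  fi
  gsutil mb -l "${2:-US}" "gs://$1"
}
```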

- Install libraries by running the installer in the SDK folder (omit --help to actually install; --help lists its options):

On Linux or Mac OS X:

./install.sh --help

On Windows:

.\install.bat --help

- Retrieve a banner image (png file) from a publicly accessible Cloud Storage location:

gsutil cp gs://cloud-training/gcpfci/my-excellent-blog.png my-excellent-blog.png

Copying gs://cloud-training/gcpfci/my-excellent-blog.png...
/ [1 files][  8.2 KiB/  8.2 KiB]
Operation completed over 1 objects/8.2 KiB.

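- Copy the image into your own bucket, then modify the Access Control List of the object you just created so that it is readable by everyone:

gsutil cp my-excellent-blog.png gs://$DEVSHELL_PROJECT_ID/my-excellent-blog.png
gsutil acl ch -u allUsers:R gs://$DEVSHELL_PROJECT_ID/my-excellent-blog.png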
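Once an object is readable by allUsers, it can be fetched without authentication at https://storage.googleapis.com/BUCKET/OBJECT. The sketch below builds that address (public_url is a hypothetical helper name). If the bucket has uniform bucket-level access enabled, object ACL changes are rejected, and a bucket-level IAM grant such as gsutil iam ch allUsers:objectViewer gs://BUCKET is the usual alternative.

```shell
#!/usr/bin/env bash
# public_url BUCKET OBJECT : HTTPS address of a publicly readable object.
public_url() {
  echo "https://storage.googleapis.com/$1/$2"
}

# Check the object is served without credentials (requires curl):
#   curl -sI "$(public_url "$DEVSHELL_PROJECT_ID" my-excellent-blog.png)" | head -n 1
```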

gcloud CLI commands

Regardless of whether the CLI is online or local:

- Get the syntax of commands:

gcloud help

- Be aware of the full set of parameters possible for GCP tasks at

https://cloud.google.com/sdk/gcloud/reference

The general format of commands:

gcloud [GROUP] [GROUP] [COMMAND] --arguments

Cloud Shell has all Linux command tools and authentication pre-installed.

- Run df to see that /dev/sdb1 has 5,028,480 KB (about 5 GB) of persistent storage:

df

Filesystem     1K-blocks     Used Available Use% Mounted on
none            25669948 16520376   7822572  68% /
tmpfs             872656        0    872656   0% /dev
tmpfs             872656        0    872656   0% /sys/fs/cgroup
/dev/sdb1        5028480    10332   4739672   1% /home
/dev/sda1       25669948 16520376   7822572  68% /etc/hosts
shm                65536        0     65536   0% /dev/shm

- Confirm the operating system version:

uname -a

Linux cs-206022718149-default 5.10.133+ #1 SMP Sat Sep 3 08:59:10 UTC 2022 x86_64 GNU/Linux

Previously:

Linux cs-6000-devshell-vm-5260d9c4-474a-47de-a143-ea05b695c057-5a 3.16.0-4-amd64 #1 SMP Debian 3.16.43-2+deb8u5 (2017-09-19) x86_64 GNU/Linux

- PROTIP: It may seem like a small thing, but having the cursor prompt always in the first column saves you from hunting for it visually.

export PS1="\n \w\[\033[33m\]\n$ "

The “\n” adds a blank line above each prompt.

The current folder is displayed above the prompt.

PROTIP: Set up a keystroke program (such as Stream Deck) to issue that long command above.

Aliases

Apps using API

https://docs.morpheusdata.com/en/latest/integration_guides/Clouds/google/google.html

REST APIs

PROTIP: Google’s public-facing APIs are listed among thousands of others on the internet at https://any-api.com

I was hoping to make a list to automate calling Google APIs using a single reference for each API. But nooooo…

PROTIP: References to each API are inconsistent. So I created a spreadsheet:

Column A “_#” shows the sequence number to the category name at ???

Column B “_api_name” is the common key across all lists.

Column C “_Cat” (Category) contains “Ent” (Enterprise) for each of the 186 Google Enterprise APIs.

Column D & E “_Desc_in_explorer” and “_explore_doc_url” list 265 APIs at https://developers.google.com/apis-explorer, but links in different formats go to different websites! For example:

- https://cloud.google.com/certificate-authority-service/docs/reference/rest/ is the most common

- https://cloud.google.com/deployment-manager/docs/reference/v2beta/

- https://cloud.google.com/vpc/docs/reference/vpcaccess/rest/

- https://developer.chrome.com/docs/versionhistory/reference/

- https://developers.google.com/chrome/management/reference/rest/ (http, not https)

- https://developers.google.com/analytics/devguides/reporting/data/v1/rest/

- https://developers.google.com/discovery/v1/reference/

- https://developers.google.com/civic-information/docs/v2/

- https://firebase.google.com/docs/reference/appcheck/rest/

- NOTE: To extract the URL from underlined cells containing the URL, I had to create and store a VBA function in the Excel sheet and JavaScript Extension function (per atylerorobertson.com) in the equivalent Google Sheet, using function =GETLINK(CELL("Address",B2))

Column F “_googleapis.com” lists 452 unique service IDs, each of which replaces the “???” in https://???.googleapis.com. They are obtained from the command gcloud services list --available | grep googleapis.com, which filters for only googleapis.com APIs (out of 3401 APIs).

- The key in this column is used to construct the URL to the "Product details" web page where you enable the "???" service for your current project, such as

https://console.cloud.google.com/apis/library/???.googleapis.com

Column G “_Python Cloud Client Library”

Column H “doc_samples” lists 83 https://github.com/GoogleCloudPlatform/python-docs-samples

Column I “_package” lists 100 packages in https://github.com/googleapis/google-cloud-python/tree/main/packages providing dependencies defined in Python program import coding. There is a similar repo for other programming languages.
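The Column F key can be turned into “Product details” URLs in bulk. This minimal sketch reads service names on stdin and keeps only googleapis.com entries; library_urls is a hypothetical name, and the live command in the comment assumes the service name is exposed as config.name in gcloud services list output.

```shell
#!/usr/bin/env bash
# library_urls : read service names (one per line) on stdin and print the
# Console "Product details" page for each googleapis.com service.
library_urls() {
  while IFS= read -r svc; do
    case "$svc" in
      *.googleapis.com) echo "https://console.cloud.google.com/apis/library/$svc" ;;
    esac
  done
}

# Live list (requires gcloud):
#   gcloud services list --available --format="value(config.name)" | library_urls
```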

Predefined scopes for each API version are shown at

https://developers.google.com/oauthplayground

For example, Google Storage API v1 has these scopes:

- https://www.googleapis.com/auth/devstorage.full_control

- https://www.googleapis.com/auth/devstorage.read_only

- https://www.googleapis.com/auth/devstorage.read_write

Most others (Apigee, Filestore, Text-to-Speech, etc.) have just:

- https://www.googleapis.com/auth/cloud-platform
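With a scope such as cloud-platform in hand, a REST call is just an HTTPS request carrying an OAuth bearer token. This sketch assumes the access token that gcloud auth print-access-token issues for your logged-in user carries the cloud-platform scope, and calls the Cloud Storage JSON API to list a project’s buckets; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# storage_buckets_url PROJECT_ID : Cloud Storage JSON API v1 endpoint that
# lists the buckets in a project.
storage_buckets_url() {
  echo "https://storage.googleapis.com/storage/v1/b?project=$1"
}

# list_buckets PROJECT_ID : call the endpoint with an OAuth bearer token for
# the active gcloud account (requires gcloud and curl).
list_buckets() {
  curl -s -H "Authorization: Bearer $(gcloud auth print-access-token)" \
    "$(storage_buckets_url "$1")"
}
```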

- https://console.cloud.google.com/apis/library/browse (for each project)

The file was created manually, but TODO: write a program to scrape the page to update the sheet.

- “Cloud Memorystore for Memcached API” in the Explorer is “Memorystore for Memcached”

- “Google Cloud Memorystore for Redis API” in the Explorer is “Memorystore for Redis”

References:

- https://cloud.google.com/apis/docs/cloud-client-libraries

- https://cloud.google.com/sdk/cloud-client-libraries Go, Java, Node.js, Python, Ruby, PHP, C#, C++

https://cloud.google.com/code/docs/vscode/client-libraries https://cloud.google.com/code/docs/vscode/client-libraries#remote_development_with_permissions_enabled

https://medium.com/google-cloud/programatically-manage-gcp-resources-via-rest-apis-6b216e5efadf https://googleapis.dev/python/google-api-core/latest/auth.html https://codelabs.developers.google.com/codelabs/cloud-vision-api-python#0

https://cloud.google.com/web-risk/docs/reference/rest/ https://developers.google.com/drive/v3/reference/ https://developers.google.com/drive/activity/v2/reference/rest/

https://developers.google.com/apps-script/api/reference/rest/

https://cloud.google.com/secret-manager/docs/reference/rest/ https://developers.google.com/vault/reference/rest/

https://googleapis.github.io/google-api-python-client/docs/start.html

- https://medium.com/google-cloud/programatically-manage-gcp-resources-via-rest-apis-6b216e5efadf

Google Marketplace

Get someone else to do the work for you.

Get pre-configured infrastructure from various 3rd-party vendors:

- https://cloud.google.com/marketplace/

- https://cloud.google.com/marketplace/docs/

- HANDS-ON: Google Cloud Fundamentals: Getting Started with Cloud Marketplace, to deploy a LAMP stack on a Compute Engine instance. The Bitnami LAMP Stack provides a complete web development environment for Linux, including a phpinfo.php page.

Google IaC (Infra as Code)

There are several ways to create resources in Google Cloud:

- Google Cloud Console GUI

- Google Cloud SDK (gcloud CLI commands)

- Google Cloud Deployment Manager

- Google Cloud Terraform

- Google Cloud Cloud Foundation Toolkit (CFT)

Terraform and CFT are both Infrastructure as Code (IaC) tools: resources are defined in code, much like programming code (Java, Python, Go, etc.), stored in versioned Git repositories such as GitHub. Versioning also identifies who made each change, when, and why, and Git commands can retrieve files as they were at various points in the past.

PROTIP: I’ve been saying that the most useful part of Infrastructure as Code is not just the productivity gains from reusability, etc., but that security vulnerabilities in resources can be tested before being exposed on the wild-west Internet. That’s not just because infra code can deploy into test environments before being deployed into production.

Cloud Adoption Framework

All this is mentioned in the Google Cloud Adoption Framework at:

https://cloud.google.com/adoption-framework

and

https://cloud.google.com/architecture/security-foundations

Several “DevSecOps” tools have been created to scan Terraform code for vulnerabilities:

- Synopsys

- OPA (Open Policy Agent) Rego language

- HashiCorp Sentinel

Google CFT (Cloud Foundation Toolkit)

The Google CFT (Cloud Foundation Toolkit) – with marketing page at:

- https://cloud.google.com/foundation-toolkit

The CFT toolkit repo at:

https://github.com/GoogleCloudPlatform/cloud-foundation-toolkit

says it “provides GCP best practices as code” out of the box – “a comprehensive set of production-ready resource templates that follow Google’s best practices”.

CFT is more opinionated (less flexible) than the Terraform modules in the Cloud Foundation Fabric.

Whereas Fabric is meant to be forked and modified, CFT aims to be “extensible via composition”: you use its modules from the Terraform Registry and customize them with .tfvars files.

https://github.com/GoogleCloudPlatform/cloud-foundation-toolkit/blob/master/dm/docs/userguide.md notes that the “cft” command line tool is needed as a wrapper around the “gcloud” command line tool because:

- GCP Deployment Manager service does not support cross-deployment references, and

- the gcloud utility does not support concurrent deployment of multiple inter-dependent configs.

https://github.com/GoogleCloudPlatform/cloud-foundation-toolkit/blob/master/dm/docs/tool_dev_guide.md

https://github.com/GoogleCloudPlatform/cloud-foundation-toolkit/blob/master/dm/docs/template_dev_guide.md

??? the Blueprint (integration) Test framework, each containing a resource.yaml test config with a resource.bats test harness. See the Template Development Guide.

- https://github.com/GoogleCloudPlatform/cloud-foundation-toolkit/tree/master/infra/blueprint-test

“CFT is designed to be modular and off the shelf, providing higher level abstractions to product groups which allows certain teams to adopt Terraform without maintenance burden while allowing others to follow existing practices.”

Unlike Fabric, which is released as a single unit, the 64 modules within CFT are versioned and released individually:

- https://github.com/GoogleCloudPlatform/cloud-foundation-toolkit/tree/master/dm/templates

??? managed by Python 2.7 template file resource.py ???

- https://github.com/terraform-google-modules

Reports defined in the OPA Rego language (hence the .rego file extension):

- https://github.com/GoogleCloudPlatform/cloud-foundation-toolkit/tree/master/reports/sample

The CFT aims to provide “reference templates” that can be used off-the-shelf to quickly build a repeatable enterprise-ready baseline secure foundation environment in Google Cloud. The templates aim to reflect Google Cloud best practices.

The CFT expands on the Google Deployment Manager because Deployment Manager does not support cross-deployment references.

The CFT expands on Google’s gcloud CLI utility because gcloud does not support concurrent deployment of multiple inter-dependent configs.

Hands-on “Cloud Foundation Toolkit 101” adds (and tests) a feature to a CFT module in GitHub:

https://codelabs.developers.google.com/cft-onboarding#0

https://medium.com/datamindedbe/running-through-the-google-gcp-cloud-foundation-toolkit-setup-b4c5a912da56

modified from

CFT for various resources from the Cloud Deployment Manager:

https://cloud.google.com/deployment-manager/docs/reference/cloud-foundation-toolkit

https://github.com/GoogleCloudPlatform/cloud-foundation-toolkit/tree/master/dm/templates

It’s heavily opinionated, so it may break production environments.

https://morioh.com/a/fcb2c083df4e/using-the-cloud-foundation-toolkit-with-terraform Tutorial

See https://github.com/GoogleCloudPlatform/policy-library/blob/master/docs/user_guide.md

* 0-bootstrap

https://googlecoursera.qwiklabs.com/focuses/29801820?parent=lti_session Securing Google Cloud with CFT Scorecard

https://cloud.google.com/blog/products/devops-sre/using-the-cloud-foundation-toolkit-with-terraform/

CFT Scorecard to secure GCP using CAI (Cloud Asset Inventory)

The “CFT Scorecard” may be a bit of a misnomer: the technology doesn’t have a GUI, so it’s not like a baseball scorecard or a business “Balanced Scorecard” that displays statistics.

The CFT Scorecard is a CLI program that outputs text to provide visibility into misconfigurations and violations of standards for Google Cloud resources, projects, folders, and entire organizations.
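A minimal sketch of the flow used in the “Securing Google Cloud with CFT Scorecard” lab: export a Cloud Asset Inventory (CAI) snapshot to Cloud Storage, then point the scorecard at that bucket and a local clone of the policy library. The project, bucket, `./cft` binary path, and `./policy-library` directory are assumptions, and flag names may differ between CFT releases. By default it only prints the commands; set `DRY_RUN=0` to run them.

```bash
#!/bin/sh
# Sketch: scan a project with CFT Scorecard using a Cloud Asset Inventory export.
# ASSUMPTIONS: gcloud is authenticated, the Cloud Asset API is enabled, the
# bucket exists, cft was downloaded to ./cft, and the policy library was
# cloned to ./policy-library.

# Print a command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

scorecard() (
  project="$1"   # hypothetical, e.g. my-proj
  bucket="$2"    # hypothetical, e.g. my-cai-bucket
  # 1. Export resource metadata and IAM policies into the bucket.
  run gcloud asset export --output-path="gs://$bucket/resource_inventory.json" \
    --content-type=resource --project="$project" || exit 1
  run gcloud asset export --output-path="gs://$bucket/iam_inventory.json" \
    --content-type=iam-policy --project="$project" || exit 1
  # 2. Evaluate the exported inventory against the Rego policies.
  run ./cft scorecard --policy-path=./policy-library --bucket="$bucket"
)
```

`scorecard my-proj my-cai-bucket` prints the three commands; `DRY_RUN=0 scorecard my-proj my-cai-bucket` runs them.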

https://www.coursera.org/projects/googlecloud-securing-google-cloud-with-cft-scorecard-dwrbx 25-minute FREE project

https://github.com/wilsonmar/DevSecOps/blob/main/gcp/gcp-cft-cai.sh

https://github.com/GoogleCloudPlatform/cloud-foundation-toolkit/blob/master/cli/docs/scorecard.md

https://stackoverflow.com/questions/62499204/error-in-google-cloud-shell-commands-while-working-on-the-lab-securing-google-c

Forseti Config Validator at https://github.com/GoogleCloudPlatform/policy-library/blob/master/docs/user_guide.md

This repo contains several distinct Terraform projects, each within its own directory, that must be applied separately but in sequence. Each of these Terraform projects is layered on top of the previous ones, running in the following order.
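A sketch of applying layered Terraform projects one at a time, in sequence. The stage directory names are an assumption modeled on the terraform-example-foundation repo (which starts with 0-bootstrap); check its README for the current list. Real stages also need backend and variable files that this sketch omits, and `terraform -chdir` requires Terraform 0.14 or later. Commands are printed unless `DRY_RUN=0`.

```bash
#!/bin/sh
# Sketch: apply layered Terraform projects one at a time, in order.
# ASSUMPTION: directory names modeled on terraform-example-foundation;
# override STAGES to match your checkout. Backend/tfvars setup is omitted.
STAGES="${STAGES:-0-bootstrap 1-org 2-environments 3-networks-dual-svpc 4-projects 5-app-infra}"

# Print a command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

apply_stages() (
  for stage in $STAGES; do   # unquoted on purpose: split on spaces
    # Stop at the first failure, since later layers depend on earlier ones.
    run terraform -chdir="$stage" init || exit 1
    run terraform -chdir="$stage" apply || exit 1
  done
)
```

Stopping at the first failing stage matters because each layer reads outputs created by the layers beneath it.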

- https://www.linkedin.com/in/sg-dchen/

BLOG: “Google Cloud CFT Scorecard” by Jorge Liauw Calo at Xebia

Cloud Foundation Toolkit CFT Training Instance Group | Qwiklabs

- https://www.youtube.com/watch?v=8TQYnxd_F00

- https://www.youtube.com/watch?v=NXKhW3quAzg

- https://www.youtube.com/watch?v=qvbyGhhqtrg

https://www.youtube.com/watch?v=DC4aY6RsUS4 Cloud Foundation Toolkit (CFT) Training: Instance Group || #qwiklabs || #GSP801 Quick Lab

Terraform

PROTIP: My notes/tutorial on using HashiCorp Terraform is at:

https://wilsonmar.github.io/terraform

https://github.com/terraform-google-modules/ https://github.com/terraform-google-modules/terraform-example-foundation

PROTIP: Use my shell file to install Terraform on Linux and macOS. On a yum-based Linux, it performs commands like these:

sudo yum install -y zip unzip   # if not already installed
# Replace 0.X.X with your version:
wget https://releases.hashicorp.com/terraform/0.X.X/terraform_0.X.X_linux_amd64.zip
unzip terraform_0.X.X_linux_amd64.zip
sudo mv terraform /usr/local/bin/
# Confirm the terraform binary is accessible:
terraform --version
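The commands above hard-code `linux_amd64`, which fits only one platform. A hedged sketch that builds the HashiCorp release URL for the current machine instead; it assumes Linux or macOS on x86_64 or arm64, with curl, unzip, and sudo available, and that you supply a real release number such as 1.5.7.

```bash
#!/bin/sh
# Sketch: download and install a Terraform release for this machine.
# ASSUMPTIONS: Linux or macOS, x86_64 or arm64, curl/unzip/sudo installed.

# Map uname output to HashiCorp's os_arch naming, e.g. linux_amd64.
tf_platform() (
  case "$(uname -s)" in
    Linux)  os=linux ;;
    Darwin) os=darwin ;;
    *) echo "unsupported OS: $(uname -s)" >&2; exit 1 ;;
  esac
  case "$(uname -m)" in
    x86_64|amd64)  arch=amd64 ;;
    arm64|aarch64) arch=arm64 ;;
    *) echo "unsupported architecture: $(uname -m)" >&2; exit 1 ;;
  esac
  echo "${os}_${arch}"
)

# Build the release URL for a version and platform.
tf_url() {
  echo "https://releases.hashicorp.com/terraform/${1}/terraform_${1}_${2}.zip"
}

install_terraform() (
  version="$1"                        # e.g. 1.5.7 (pick a real release)
  platform="$(tf_platform)" || exit 1
  tmp="$(mktemp -d)"
  curl -fsSL -o "$tmp/terraform.zip" "$(tf_url "$version" "$platform")" || exit 1
  unzip -o "$tmp/terraform.zip" -d "$tmp" || exit 1
  sudo mv "$tmp/terraform" /usr/local/bin/ || exit 1
  terraform --version                 # confirm the binary is on PATH
)
```

Call `install_terraform 1.5.7` (substitute the release you want). A production script should also verify the download against the `terraform_<version>_SHA256SUMS` file HashiCorp publishes with each release.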

Import your Google Cloud resources into Terraform state:

https://cloud.google.com/docs/terraform/resource-management/import

https://www.cloudskillsboost.google/catalog

Resources:

- https://cloud.google.com/docs/terraform/blueprints/terraform-blueprints - Terraform blueprints and modules for Google Cloud

- https://cloud.google.com/docs/terraform/get-started-with-terraform - Deploy a basic Flask web server on Compute Engine by using Terraform

- https://cloud.google.com/docs/terraform/resource-management/managing-infrastructure-as-code - Managing infrastructure as code with Cloud Build and GitOps

- https://cloud.google.com/build

- HANDS-ON: Automating the Deployment of Infrastructure Using Terraform

- https://www.reddit.com/r/googlecloud/comments/yfv1zi/its_worth_apply_the_cft_cloud_foundation_toolkit/

https://developer.hashicorp.com/terraform/tutorials/gcp-get-started/google-cloud-platform-build

- Getting started guide: https://registry.terraform.io/providers/hashicorp/google/latest/docs/guides/getting_started

- Google provider: https://registry.terraform.io/providers/hashicorp/google/latest

- Provider documentation: https://registry.terraform.io/providers/hashicorp/google/latest/docs

- Tutorial: https://developer.hashicorp.com/terraform/tutorials/gcp-get-started

- VIDEO: https://cloud.google.com/docs/terraform

Classes:

- https://www.cloudskillsboost.google/course_templates/443?utm_source=video&utm_medium=youtube&utm_campaign=youtube-video1 (FREE)

- https://acloudguru.com/course/deploying-resources-to-gcp-with-terraform (subscription)

- https://www.udemy.com/course/terraform-gcp/ by Rohit Abraham

Articles:

- A step-by-step guide to set up Terraform to work with a GCP project using Cloud Storage as a backend, by Edgar Ochoa

- https://tunzor.github.io/posts/terraform-docs-clarity/

- A complete GCP environment with Terraform by Zuhaib Raja

- https://docs.lacework.net/onboarding/gcp-compliance-and-audit-log-integration-terraform-using-google-cloud-shell

- https://learn.hashicorp.com/collections/terraform/gcp-get-started

- https://github.com/GoogleCloudPlatform/terraform-google-examples

New Project

All Google Cloud resources are associated with a project.

Project ID is unique among all other projects at Google and cannot be changed.

Project Names can be changed.
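Because a project ID can never be changed, it is worth checking it before creating the project. A minimal sketch, assuming Google's documented format (6 to 30 characters; lowercase letters, digits, and hyphens; starts with a letter; does not end with a hyphen). The `create_project` wrapper is hypothetical and only prints the `gcloud` command unless `DRY_RUN=0`.

```bash
#!/bin/sh
# Sketch: validate a project ID before `gcloud projects create`.
# Rule: 6-30 chars, lowercase letters/digits/hyphens, starts with a letter,
# does not end with a hyphen.
valid_project_id() {
  printf '%s\n' "$1" | grep -Eq '^[a-z][a-z0-9-]{4,28}[a-z0-9]$'
}

# Print a command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

create_project() (
  project_id="$1"; name="$2"
  valid_project_id "$project_id" || { echo "invalid project ID: $project_id" >&2; exit 1; }
  run gcloud projects create "$project_id" --name="$name"
)
# Later, only the display name can change:
#   gcloud projects update PROJECT_ID --name="New Name"
```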

Principals

Service accounts are one type of principal (formerly called “members”) that can be granted permissions.

Service accounts are automatically created for each project:

project_number@cloudservices.gserviceaccount.com, managed by Google (the Google APIs Service Agent). Because it runs internal Google processes on your behalf, it is not listed among Service Accounts in the Cloud Console, though it appears in the IAM section with the project Editor role. It is deleted only when the project is deleted. Google services rely on the account having access to your project, so do NOT remove or change the service account’s role on your project.

project_number-compute@developer.gserviceaccount.com (the Compute Engine default service account, created when the Compute Engine API is enabled)
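A sketch that prints the addresses of the Google-created service accounts for a project. The Google APIs and Compute Engine accounts are keyed by project number, while the App Engine account (described further below) uses the project ID. The ID and number in the test are placeholders.

```bash
#!/bin/sh
# Sketch: list the email addresses of Google-created service accounts.
# Find the project number with:
#   gcloud projects describe PROJECT_ID --format='value(projectNumber)'
default_service_accounts() (
  project_id="$1"
  project_number="$2"
  # Google APIs Service Agent: runs internal Google processes; keep its role.
  echo "${project_number}@cloudservices.gserviceaccount.com"
  # Compute Engine default service account (once the Compute Engine API is enabled).
  echo "${project_number}-compute@developer.gserviceaccount.com"
  # App Engine default service account (only if the project has an App Engine app).
  echo "${project_id}@appspot.gserviceaccount.com"
)
```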

Principals can be these types:

- Members of Google Groups (@googlegroups.com)

- Individual @gmail.com free account

- Google Workspace (GSuite) account (corp_admin@abc.com)

- Google Account (also accessed at gmail.com) for a paying host

- Cloud Identity domain

- User-created Google service account, such as my-sa-123@$DEVSHELL_PROJECT_ID.iam.gserviceaccount.com

- All authenticated users (allAuthenticatedUsers)

- All users (allUsers)

A Google Account represents a developer, an administrator, or any other person who interacts with Google Cloud. Any email address that’s associated with a Google Account can be an identity, including gmail.com or other domains.

Each project that contains an App Engine application gets a default App Engine service account such as:

PROJECT_ID@appspot.gserviceaccount.com.

In an IAM policy binding, each principal is written as a type prefix followed by its identifier:

"members": [
  "user:ali@example.com",
  "serviceAccount:my-other-app@appspot.gserviceaccount.com",
  "group:admins@example.com",
  "domain:google.com"
]
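The member strings in the JSON above follow a `type:identifier` pattern. A small sketch that builds them; the short type names passed as the first argument (`user`, `group`, `sa`, `domain`) are hypothetical labels for this example, while `allUsers` and `allAuthenticatedUsers` are the special identifiers IAM uses for the last two principal types in the list.

```bash
#!/bin/sh
# Sketch: build the "type:identifier" member strings used in IAM bindings.
# The short type names (user, group, sa, domain) are hypothetical labels.
# allUsers and allAuthenticatedUsers are special identifiers with no value.
iam_member() {
  case "$1" in
    user)   echo "user:$2" ;;
    group)  echo "group:$2" ;;
    sa)     echo "serviceAccount:$2" ;;
    domain) echo "domain:$2" ;;
    allAuthenticatedUsers|allUsers) echo "$1" ;;
    *) echo "unknown principal type: $1" >&2; return 1 ;;
  esac
}
```

For example: `gcloud projects add-iam-policy-binding my-proj --member="$(iam_member group admins@example.com)" --role=roles/viewer`.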

WARNING: Service Agents have their own (very powerful) roles.

https://www.cloudskillsboost.google/course_sessions/3783064/labs/379382

To grant a role to a service account:

gcloud projects add-iam-policy-binding $DEVSHELL_PROJECT_ID \
  --member serviceAccount:my-sa-123@$DEVSHELL_PROJECT_ID.iam.gserviceaccount.com \
  --role roles/editor
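A sketch that wraps that binding and then lists the roles the service account now holds, so the grant can be confirmed. The function name and arguments are hypothetical; commands are printed unless `DRY_RUN=0`.

```bash
#!/bin/sh
# Sketch: grant a role to a service account, then show its bound roles.

# Print a command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

grant_sa_role() (
  project="$1"; sa_name="$2"; role="$3"
  member="serviceAccount:${sa_name}@${project}.iam.gserviceaccount.com"
  run gcloud projects add-iam-policy-binding "$project" \
    --member="$member" --role="$role" || exit 1
  # List every role bound to that member in the project's IAM policy.
  run gcloud projects get-iam-policy "$project" \
    --flatten="bindings[].members" \
    --filter="bindings.members:$member" \
    --format="table(bindings.role)"
)
```

For example, `grant_sa_role my-proj my-sa-123 roles/viewer`. In line with the PROTIP on basic roles further down, consider a narrower predefined role than `roles/editor`.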

Credentials for Authentication

- Select a project at console.cloud.google.com

- Click navigation “hamburger”, menu “APIs & Services”, Credentials.

- Click CREATE CREDENTIALS.

PROTIP: The types of credentials:

- API keys identify your project with a simple key, used to check quota and access (not to encrypt).

- OAuth 2.0 client IDs request user consent for an app to access the user’s data via Google’s OAuth servers. Create a separate client ID for each platform.

- Service accounts enable server-to-server, app-level authentication using robot (non-human) accounts.
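To make these credential types concrete, here is a sketch of how each is typically presented on a REST call: an API key as the `key` query parameter, and OAuth 2.0 or service-account credentials as an `Authorization: Bearer` access token. The function prints the `curl` command rather than running it; the URL and key are placeholders, and the service-account form assumes you hold the Service Account Token Creator role on that account so `gcloud` can impersonate it.

```bash
#!/bin/sh
# Sketch: print how each credential type is presented on a REST call.
#   api-key: identifies the project; sent as the "key" query parameter.
#   user:    OAuth 2.0 access token of the user signed in to gcloud.
#   sa:      access token minted by impersonating a service account.
# URL and key values are placeholders; nothing is executed.
auth_curl() (
  kind="$1"; url="$2"; value="$3"
  case "$kind" in
    api-key) echo "curl -s \"${url}?key=${value}\"" ;;
    user) echo "curl -s -H \"Authorization: Bearer \$(gcloud auth print-access-token)\" \"$url\"" ;;
    sa) echo "curl -s -H \"Authorization: Bearer \$(gcloud auth print-access-token --impersonate-service-account=$value)\" \"$url\"" ;;
    *) echo "unknown credential type: $kind" >&2; exit 1 ;;
  esac
)
```

The API-key form assumes the URL has no existing query string.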

Create OAuth2 accounts

References:

- VIDEO by the great Jie Jenn

- Click OAuth client ID,

- CONFIGURE CONSENT SCREEN, External (if you’re not a Workspace owner)

- Click Application type > Desktop app. PROTIP: Desktop app is the easiest for Google APIs among these types:

- Web application

- Android

- Chrome app

- iOS

- TVs and limited input devices

- Desktop app

- Universal Windows (UWP)

- In the Name field, type a name for the credential. This name is only shown in the Google Cloud console.

- Click Create. The OAuth client created screen appears, showing your new Client ID and Client secret.

- Click OK. The newly created credential appears under OAuth 2.0 Client IDs.

- Save the downloaded JSON file as credentials.json, and move the file to your $HOME directory.

- TODO: Store in HashiCorp Vault?

- TODO: Add code to retrieve JSON from Vault.
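The last manual step above (saving credentials.json to $HOME) can be sketched as a helper that checks the file, moves it, and restricts its permissions, since it contains a client secret. The check for a top-level `installed` object assumes a Desktop app client file; it is a quick sanity test, not full JSON validation. The function name is hypothetical.

```bash
#!/bin/sh
# Sketch: move a downloaded OAuth client file into place and lock it down.
# ASSUMPTION: Desktop app client files contain a top-level "installed" object.
install_client_secret() (
  src="$1"
  dest="${2:-$HOME/credentials.json}"
  grep -q '"installed"' "$src" || { echo "not a Desktop app client file: $src" >&2; exit 1; }
  mv "$src" "$dest" || exit 1
  chmod 600 "$dest"   # owner read/write only: the file holds a client secret
)
```

For the Vault TODO, one untested option (the path is hypothetical) is `vault kv put secret/gcp-oauth credentials=@$HOME/credentials.json`.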

Create OAuth page

References:

- VIDEO by the great Jie Jenn

- https://developers.google.com/docs/api/quickstart/python

- Select your browser’s profile at the upper-right corner.

- If the account you’re using is a Google Workspace account, that account’s Administrator must grant you permission by clicking her picture, then “Manage your account”.

- Open the Google Cloud console for your project.

- Select the Google project at the top.

- Click the “hamburger” navigation menu “APIs & Services”, “OAuth consent screen”.

- Select External: “Because you’re not a Google Workspace user, you can only make your app available to external (general audience) users.”

- CREATE.

- Type App name, User support email. You don’t need to enter App domain info if your work isn’t commercial.

- TODO: App logo

- SAVE AND CONTINUE for the scopes page.

- SAVE AND CONTINUE for Test users page.

- PUBLISH APP.

- SAVE AND CONTINUE.

Service Accounts

References:

- Click the Google Cloud console navigation menu “hamburger” icon at the upper-left to expose the menu.

- Click “IAM & Admin”, “Service Account”.

- PROTIP: Don’t leave Owner (or other broad) roles granted to accounts that aren’t actively using them.

- Click “CREATE SERVICE ACCOUNT”.

- PROTIP: Follow a naming convention when creating the service account name. The service account created is of the form:

whatever-123@PROJECT_ID.iam.gserviceaccount.com

- Press Tab to have Google automatically add a dash and number to construct the service account ID from the name.

- PROTIP: For Service account description, add ??? (you’ll add Tags later).

- Click “CREATE AND CONTINUE”.
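The console steps above can also be scripted. A sketch, assuming the service account ID follows the pattern gcloud enforces (6 to 30 characters; lowercase letters, digits, and hyphens; starts with a letter; does not end with a hyphen). The function names are hypothetical, and the `gcloud` command is printed unless `DRY_RUN=0`.

```bash
#!/bin/sh
# Sketch: validate a service account ID, then create the account.
valid_sa_id() {
  # 6-30 chars: lowercase letter first, then letters/digits/hyphens, no trailing hyphen.
  printf '%s\n' "$1" | grep -Eq '^[a-z][a-z0-9-]{4,28}[a-z0-9]$'
}

# Print a command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

create_sa() (
  project="$1"; sa_id="$2"; description="$3"
  valid_sa_id "$sa_id" || { echo "invalid service account ID: $sa_id" >&2; exit 1; }
  run gcloud iam service-accounts create "$sa_id" --project="$project" \
    --display-name="$sa_id" --description="$description" || exit 1
  # Resulting email, in the whatever-123@PROJECT_ID.iam.gserviceaccount.com form.
  echo "${sa_id}@${project}.iam.gserviceaccount.com"
)
```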

Roles

- Click “Select a role”.

- Mouse over “Basic”.

Basic/Primitive Roles

Primitive/Basic/broad IAM roles apply to all resources within a project:

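- Owner has full access (all permissions). Among the basic roles, it is the only one able to change members’ permissions.

- Editor - view, create, update, and delete most resources

- Viewer - read-only access to most GCP resources

- Billing Administrator

- Browser - read access to browse the resource hierarchy (organization, folders, projects) and its IAM policy, without permission to view resources inside projects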

gcloud iam roles describe roles/browser

The output includes:

includedPermissions:
- resourcemanager.folders.get
- resourcemanager.folders.list
- resourcemanager.organizations.get
- resourcemanager.projects.get
- resourcemanager.projects.getIamPolicy
- resourcemanager.projects.list

PROTIP: In production environments, rather than granting basic roles, grant predefined (or custom) roles limited to the permissions each service needs, such as:

- compute.instances.delete

- compute.instances.get

- compute.instances.list

- compute.instances.setMachineType
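Following the PROTIP, the permissions listed above can be bundled into a project-level custom role rather than granting a basic role. A sketch; the role ID `instanceOperator` and its title are hypothetical, and the command is printed unless `DRY_RUN=0`.

```bash
#!/bin/sh
# Sketch: create a custom role holding only the listed Compute permissions.

# Print a command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

PERMS="compute.instances.delete compute.instances.get compute.instances.list compute.instances.setMachineType"

# Join arguments with commas, as --permissions expects.
join_commas() { echo "$*" | tr ' ' ','; }

create_custom_role() (
  project="$1"; role_id="$2"   # role_id is hypothetical, e.g. instanceOperator
  # $PERMS is intentionally unquoted so each permission becomes one argument.
  run gcloud iam roles create "$role_id" --project="$project" \
    --title="Instance operator" --stage=GA \
    --permissions="$(join_commas $PERMS)"
)
```

Grant the resulting role with `--role=projects/PROJECT_ID/roles/instanceOperator` in `gcloud projects add-iam-policy-binding`.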