How to automate AI workflows in Microsoft’s Azure and Fabric, despite marketing rebrands, and pass the AI-900 & AI-102 certification exams.

Overview

- Gallery

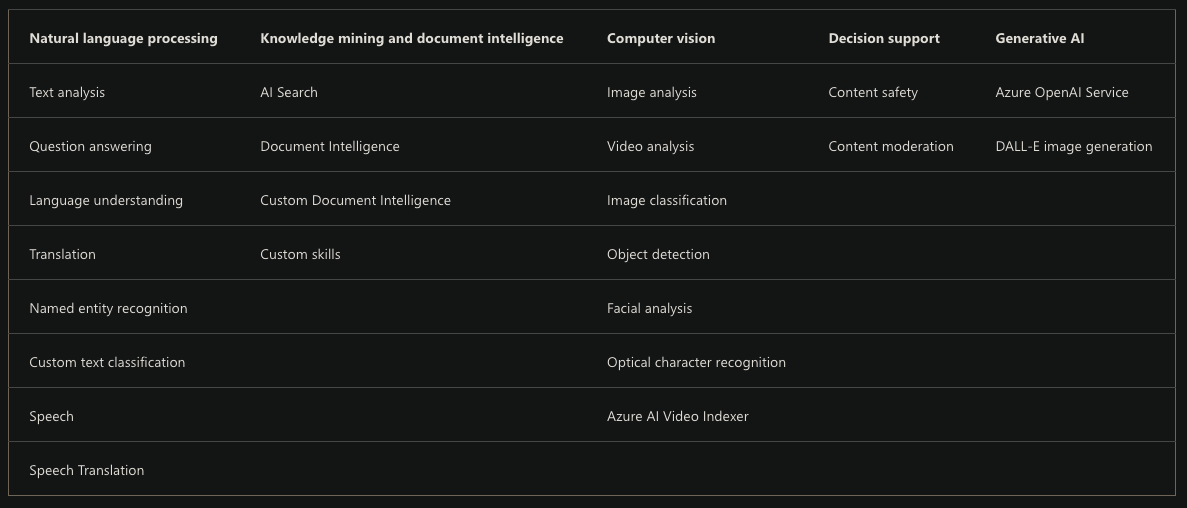

- Microsoft’s AI products portfolio

- Services Table

- Microsoft’s Confusing Branding

- Microsoft’s History with AI

- Competitive futures

- Prerequisites to this document

- Learning Sequence

- Automation necessary for PaaS

- Azure AI certifications

- AI Landing Zones (ALZ)

- Sample ML Code

- AI Services

- Vision services

- Computer Vision

- Face => AI

- CustomVision

- Form Recognizer = Document Intelligence

- Video Indexer

- AI Language services

- LUIS

- LUIS Regional websites

- Create LUIS resource

- Create a LUIS app in the LUIS portal

- Create a LUIS app with code

- Create entities in the LUIS portal

- Create a prediction key

- Install and run LUIS containers

- DEMO JSON responses

- LUIS CLI

- Luis.ai

- Text Analytics API Programming

- Retrieving Azure Cognitive Services API Credentials

- Speech Services

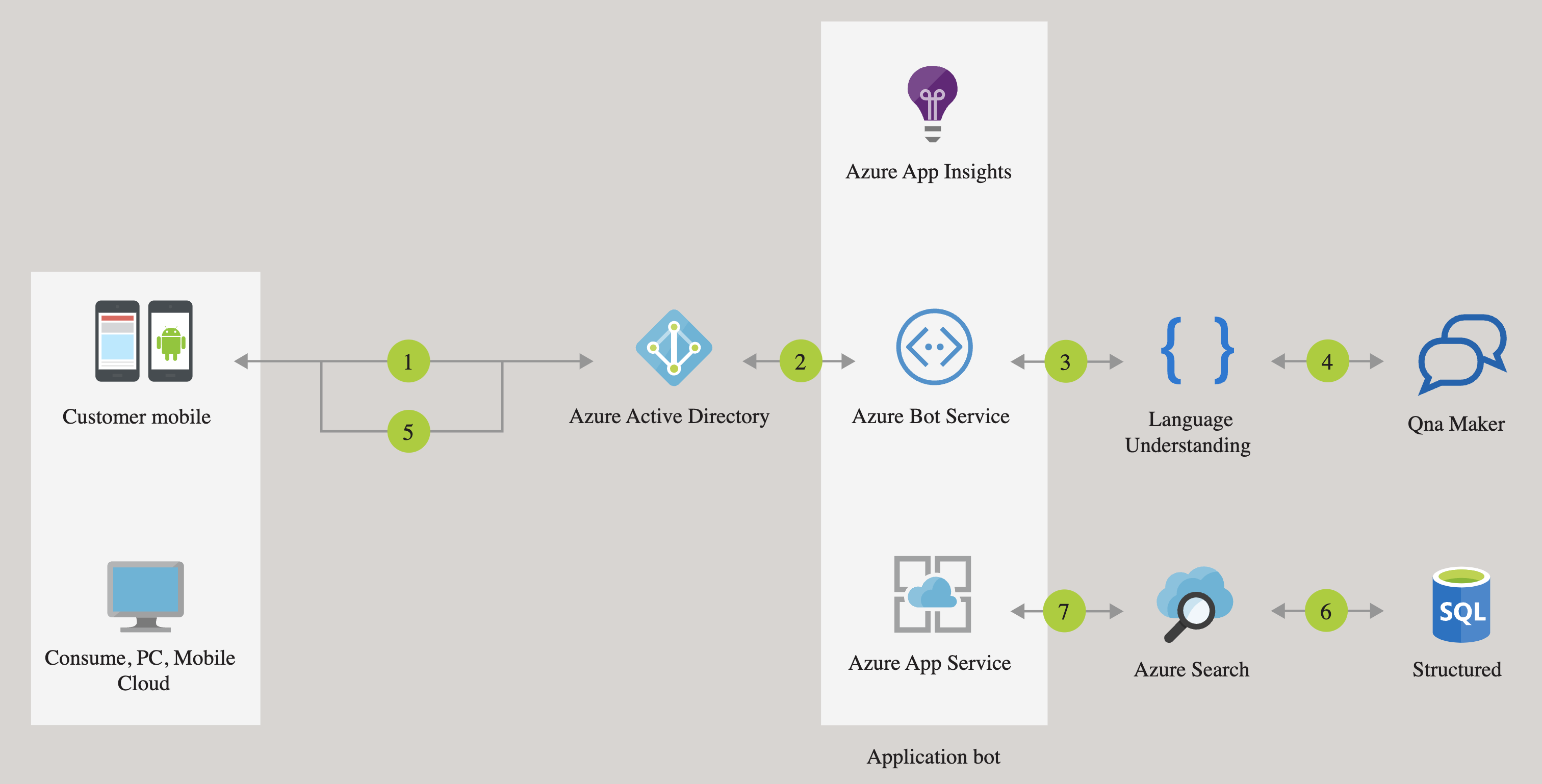

- Conversational AI

- QnA Maker

- Decision (Making)

- Anomaly Detector

- Content Moderator

- Metrics Advisor

- Personalizer

- Search

- Other services

- Bot Service

- Azure OpenAI

- Data Science VM

- Resources

- Notes to be inserted

- More

Here is a guided deep dive introducing Microsoft’s Artificial Intelligence (AI) offerings running on the Azure cloud. My contribution to the world (to you) is a less overwhelming learning sequence, one that starts with the least complex technologies, then moves to more complex ones.

NOTE: Content here is my personal opinion, and is not intended to represent any employer (past or present). “PROTIP:” here highlights information I haven’t seen elsewhere on the internet because it consists of hard-won, little-known but significant facts based on my personal research and experience.

Microsoft “democratizes” AI and Machine Learning by providing a front-end that hides some of the complexities, enabling them to be run possibly without programming.

Gallery

-

Microsoft’s Azure AI gallery of samples and users’ contributions:

https://gallery.azure.ai/browse

These are what are called “narrow” or “weak” AI.

-

Click (Azure cloud) SERVICES USED:

- Azure Machine Learning

- PowerBI

- Azure Blob Storage

- Azure HDInsight

- Azure Data Factory

- Azure SQL DataWarehouse

- Azure App Service

- Azure Sql

- Azure Event Hubs

- Azure Stream Analytics

- Azure Cognitive Service - LUIS

- Azure Data Lake Analytics

- Azure Data Lake Store

- Azure Virtual Machine

- Azure Batch

Hybrid workflow Pipelines

PROTIP: Although most of Microsoft’s product documents focus on one technology at a time, actual production work enjoyed by real end-users usually involves a pipeline consisting of several services. For example: ingesting (stream processing) a newsfeed:

That and other flows are in the Azure Architecture Center.

-

For more about ALGORITHMS USED, see my explanations at https://wilsonmar.github.io/machine-learning-algorithms, which lists them in alphabetical order and in groups.

Case studies of how people are already making use of AI/ML to save time and money:

- Calculate a positive/negative sentiment score for each post in your social media account and send you an alert based on a trigger (such as negative sentiment less than 5).

- Use text-to-speech to generate a voice message sent to international phones (via Twilio)

- Create a recommendation engine (such as Netflix’s) from the Internet Movie Database (imdb.com)

- Predictive Maintenance data science webinar

- modsy.com 3D view

- Customer and Partner Success Stories for “bot”
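The first case study in the list above (alerting on negative sentiment) can be sketched in a few lines. This is a minimal illustration, assuming each post has already been scored on a 0-to-10 scale where lower is more negative. The scale, the dictionary keys, and the function name `posts_needing_alert` are assumptions made for this sketch, not part of any Azure SDK.

```python
def posts_needing_alert(posts, threshold=5.0):
    """Return the posts whose sentiment score is below the trigger threshold.

    Each post is a dict with hypothetical keys "id" and "score"
    (assumed scale: 0 = most negative, 10 = most positive).
    """
    return [post for post in posts if post["score"] < threshold]


if __name__ == "__main__":
    sample_posts = [
        {"id": "post-1", "score": 7.5},
        {"id": "post-2", "score": 2.0},
    ]
    for post in posts_needing_alert(sample_posts):
        print(f"ALERT: {post['id']} scored {post['score']}")
```

In a real pipeline the scores would come from a sentiment service and the alert would go to email or chat rather than `print`.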

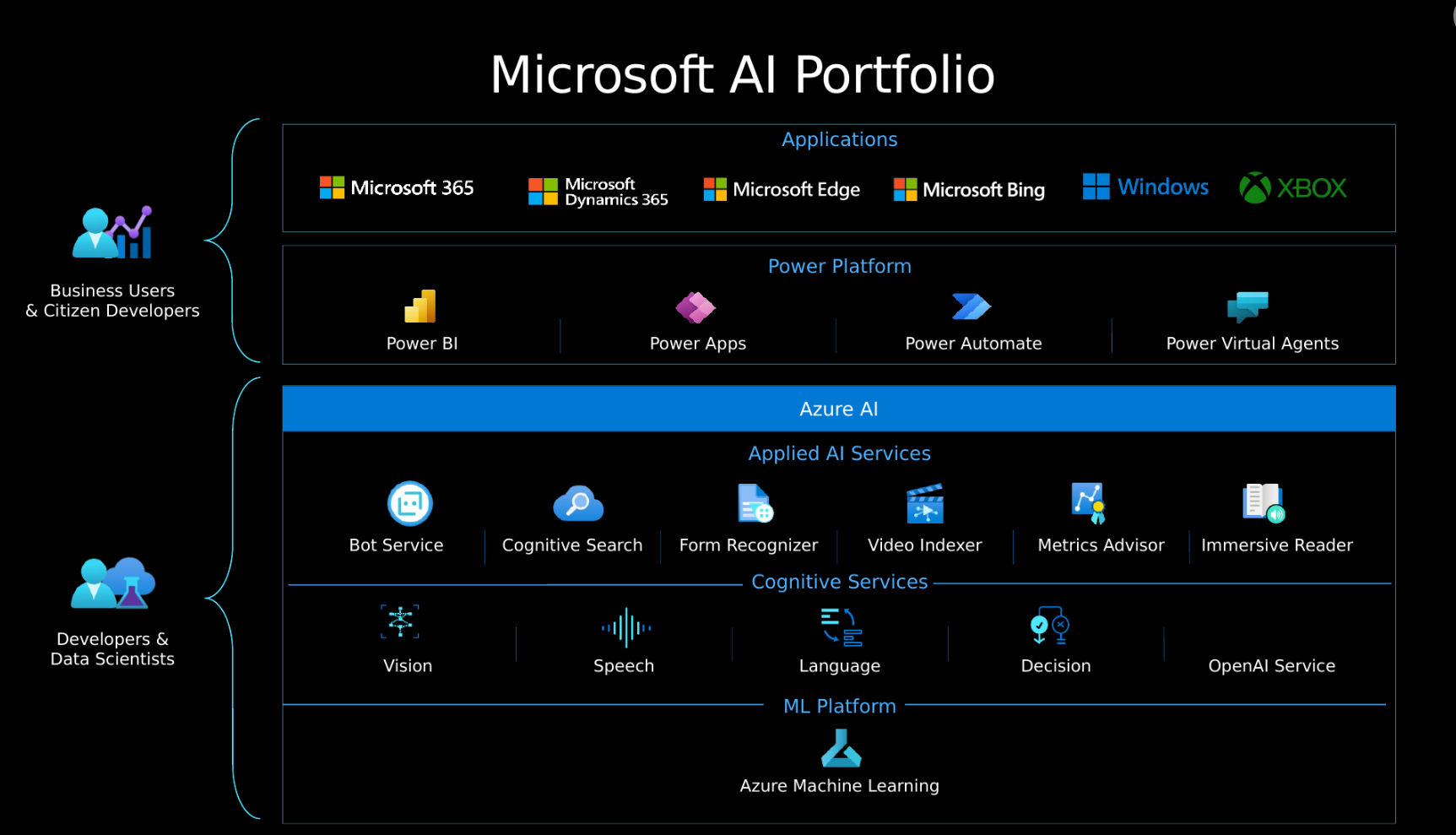

Microsoft’s AI products portfolio


In the diagram above left, Microsoft makes a distinction between “Business Users & Citizen Developers” who use their Applications and “Power Platform” and geeky “Developers & Data Scientists” who use “Azure AI” in the Azure cloud.

In the diagram above, Microsoft categorizes Azure’s AI services into these groups (all of which have GUI, CLI, and API interfaces):

- Applied AI Services are part of automated workflows, but a service can be included among

- Cognitive Services of foundational utilities used to build custom apps

- ML (Machine Learning) Platform, which I cover in a separate article

PROTIP: Several services are NOT shown in the diagram above. The list in a Microsoft LEARN module shows a different order:

Cognitive Services List

The method shown in this section gives you a list of all “kinds” of services Azure currently provides for your Azure subscription.

After you’ve met the prerequisite setup to run “az” commands on your Terminal, run this command:

az cognitiveservices account list-kinds

Response:

[ "AIServices", "AnomalyDetector", "CognitiveServices", "ComputerVision", "ContentModerator", "ContentSafety", "ConversationalLanguageUnderstanding", "CustomVision.Prediction", "CustomVision.Training", "Face", "FormRecognizer", "HealthInsights", "ImmersiveReader", "Internal.AllInOne", "LUIS.Authoring", "LanguageAuthoring", "MetricsAdvisor", "Personalizer", "QnAMaker.v2", "SpeechServices", "TextAnalytics", "TextTranslation" ]

PROTIP: “ContentModerator” has been deprecated.
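If you want to work with that list in a script, here is a minimal sketch that parses the JSON array the command prints and drops deprecated kinds. The function names and the `DEPRECATED_KINDS` set are assumptions for illustration. `fetch_kinds` assumes the `az` CLI is installed and that you are logged in.

```python
import json
import subprocess

# Kinds to skip; only the one called out in the PROTIP above is listed here.
DEPRECATED_KINDS = {"ContentModerator"}


def parse_kinds(az_json_output):
    """Parse the JSON array printed by `az cognitiveservices account list-kinds`."""
    kinds = json.loads(az_json_output)
    return sorted(kind for kind in kinds if kind not in DEPRECATED_KINDS)


def fetch_kinds():
    """Run the CLI command and return the usable kinds (requires `az login`)."""
    result = subprocess.run(
        ["az", "cognitiveservices", "account", "list-kinds", "--output", "json"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_kinds(result.stdout)
```

Keeping the parsing separate from the CLI call means the parsing can be tested without an Azure subscription.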

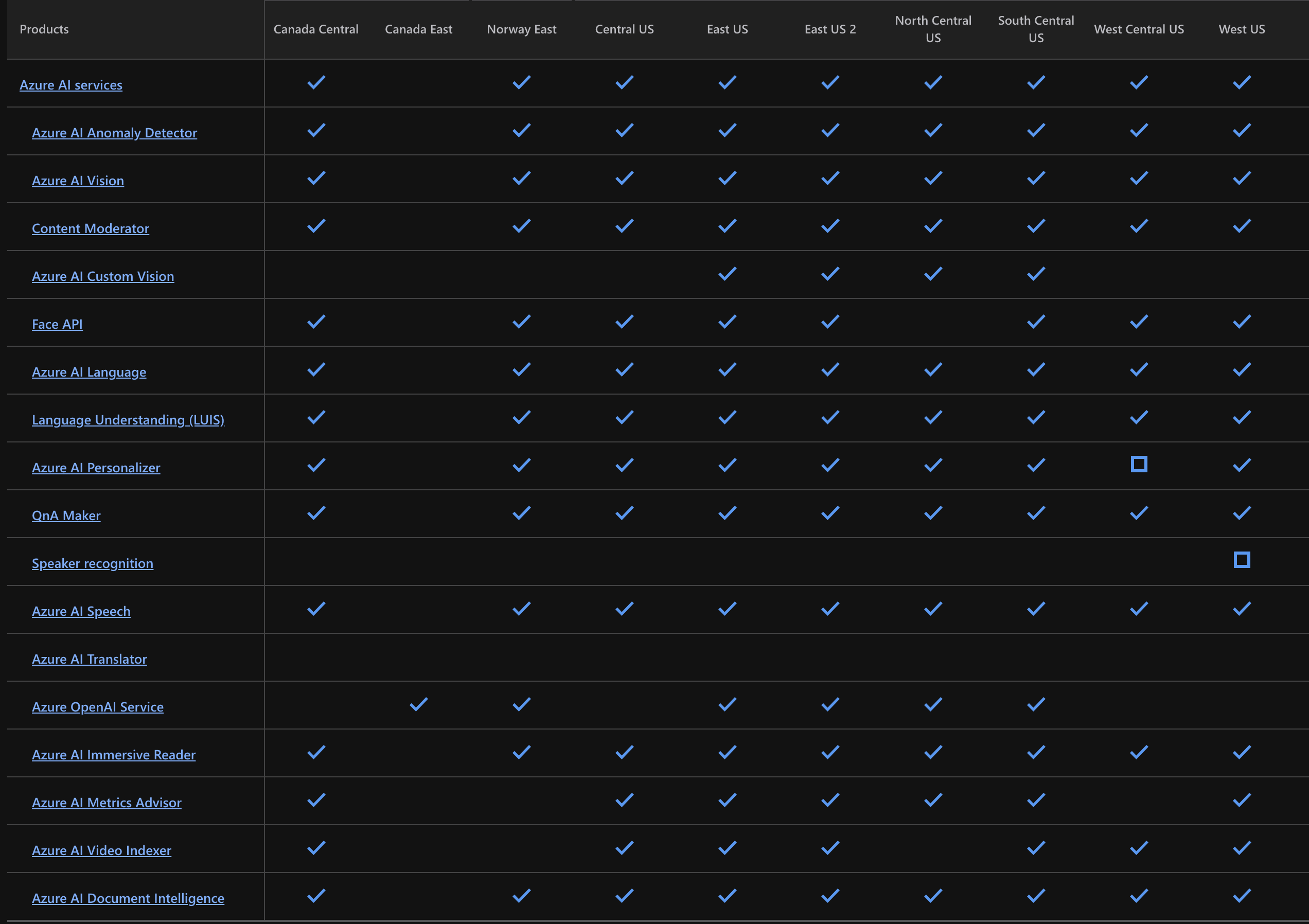

Availability

Even though you have permissions, each specific product may be in preview or not available, depending on region:

Cognitive Services Grouping

PROTIP: The services above are not listed in any functional order. The table below groups services to help you quickly get to links about features, tutorials, and SDK/API references.

As of this writing, in various marketing and certification training DOCS, Azure Cognitive Services are grouped into the categories below, which is the basis on which this article is arranged. Click on the underlined and bolded category name to jump to the list of services associated with it, in this order (like on the AI Products Portfolio diagram):

-

AI Vision (Visual Perception) provides computer vision capabilities to accept, interpret, and process input from images, video streams, and live cameras.

-

AI Speech - Text-to-Speech and Speech-to-Text to interpret written or spoken language, and respond in kind. This provides the ability to recognize speech as input and synthesize spoken output. The combination of speech capabilities together with the ability to apply NLP analysis of text enables a form of human-computer interaction that’s become known as conversational AI, in which users can interact with AI agents (usually referred to as bots) in much the same way they would with another human.

-

AI Language - aka Natural language Processing (NLP) to translate text (Text Analysis), etc.

-

AI Decision (Making) provides the ability to use past experience and learned correlations to assess situations and take appropriate actions. For example, recognizing anomalies in sensor readings and taking automated action to prevent failure or system damage. It draws on supervised and unsupervised machine learning.

-

Other: OpenAI (to power your apps with large-scale AI models) is a recent add to this confusing category because of so many branding changes (Cortana, Bing, Cognitive, OpenAI, etc.)

Text analysis and conversion provides the ability to use natural language processing (NLP) to not only “read”, but also generate realistic responses and extract semantic meaning from text.
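To connect the CLI "kinds" with the groups above, a simple lookup table can help. The sketch below is an assumption-laden illustration: the `KIND_TO_GROUP` mapping reflects how this article arranges services, not an official Microsoft classification, and any kind not in the table falls into "Other".

```python
from collections import defaultdict

# Assumed mapping of CLI "kind" names to this article's groups (illustrative only).
KIND_TO_GROUP = {
    "ComputerVision": "Vision",
    "CustomVision.Prediction": "Vision",
    "CustomVision.Training": "Vision",
    "Face": "Vision",
    "FormRecognizer": "Vision",
    "SpeechServices": "Speech",
    "TextAnalytics": "Language",
    "TextTranslation": "Language",
    "LUIS.Authoring": "Language",
    "QnAMaker.v2": "Language",
    "AnomalyDetector": "Decision",
    "ContentModerator": "Decision",
    "MetricsAdvisor": "Decision",
    "Personalizer": "Decision",
}


def group_kinds(kinds):
    """Return {group: sorted list of kinds}; unknown kinds go under "Other"."""
    groups = defaultdict(list)
    for kind in kinds:
        groups[KIND_TO_GROUP.get(kind, "Other")].append(kind)
    return {group: sorted(members) for group, members in groups.items()}
```

Feeding it the output of `az cognitiveservices account list-kinds` gives a quick view of which group each kind belongs to.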

Pricing

PROTIP: The table below groups each kind of cognitive AI service along with how many FREE transactions Microsoft provides for each service on its Cognitive Services pricing page.

IMPORTANT PROTIP: Microsoft allows its free “F0” tier to be applied to only a single Cognitive Service at a time. To remain free, you would need to rebuild a new Cognitive Service with a different “Kind” between steps.
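Before creating another free resource, it helps to see which existing ones already use the F0 tier. The sketch below assumes the JSON printed by `az cognitiveservices account list` contains `name`, `kind`, and a `sku` object with a `name` field. Check that shape against your own CLI output. The function name is hypothetical.

```python
import json


def free_tier_accounts(accounts_json):
    """Return (name, kind) pairs for accounts whose SKU name is "F0"."""
    accounts = json.loads(accounts_json)
    return [
        (account["name"], account["kind"])
        for account in accounts
        # "sku" may be missing or null, so fall back to an empty dict.
        if (account.get("sku") or {}).get("name") == "F0"
    ]
```

An empty result means the free tier is unused. Otherwise the list shows what to delete or rebuild first.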

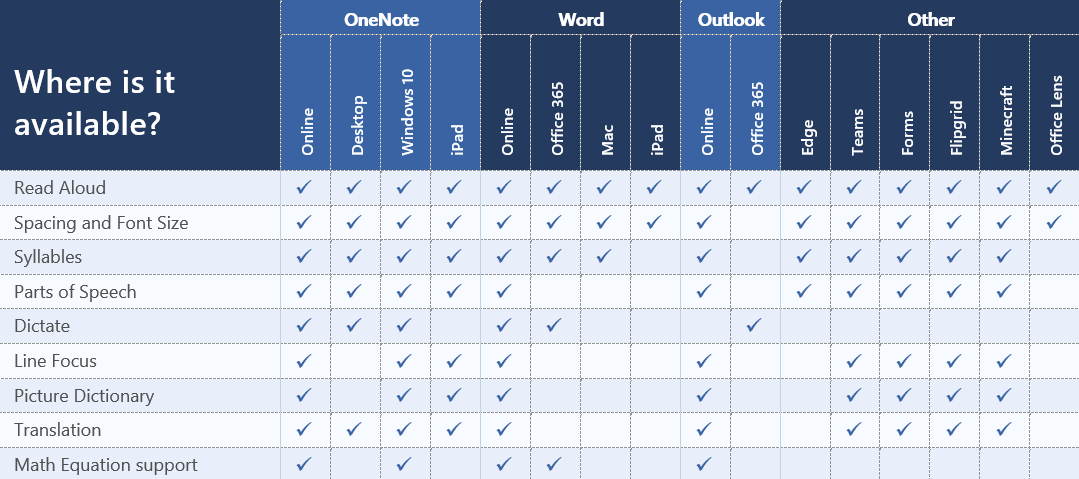

Services Table

Within each grouping, each service is listed in the sequence within that group’s LEARN module.

Links are provided for each service to its Features and API/SDK pages.

MSR CognitiveAllInOne GUI Responsible AI

“MSR” in the table above identifies a Multi-Service Resource accessed using a single key and endpoint to consolidate billing.
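The practical effect of a single key and endpoint is that one helper can prepare calls to different services. The sketch below only builds the request parts and sends nothing. The endpoint and key values are placeholders, the helper name is hypothetical, and the two paths are examples of REST routes to verify against current documentation. `Ocp-Apim-Subscription-Key` is the header Cognitive Services uses for key authentication.

```python
import json


def build_request(endpoint, key, path, payload):
    """Return (url, headers, body) for a key-authenticated REST call."""
    url = endpoint.rstrip("/") + "/" + path.lstrip("/")
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/json",
    }
    return url, headers, json.dumps(payload).encode("utf-8")


if __name__ == "__main__":
    ENDPOINT = "https://YOUR-RESOURCE.cognitiveservices.azure.com"  # placeholder
    KEY = "YOUR-KEY"  # placeholder; load from a secret store in practice
    # Same endpoint and key, two different services (example paths).
    for path in ("text/analytics/v3.1/sentiment", "vision/v3.2/analyze"):
        url, headers, _ = build_request(ENDPOINT, KEY, path, {})
        print(url)
```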

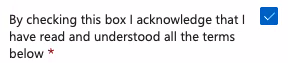

PROTIP: HANDS-ON: Create the CognitiveAllInOne resource using the GUI so that you can acknowledge Microsoft’s terms for Responsible AI use:

PROTIP: Applying Terraform to create AI service resources will error out unless that acknowledgement has been checked.

Using Microsoft’s API algorithms and data (such as celebrity faces, landmarks, etc.) means there has to be some vetting by Microsoft’s FATE (Fairness, Accountability, Transparency, and Ethics) research group:

Microsoft’s ethical principles guiding the development and use of artificial intelligence with people:

- Fairness: AI systems should treat all people fairly.

- Reliability & Safety: AI systems should perform reliably and safely.

- Privacy & Security: AI systems should be secure and respect privacy.

- Inclusiveness: AI systems should empower everyone and engage people.

- Transparency: AI systems should be understandable.

- Accountability: AI systems should have algorithmic accountability.

- DEMO: Hands-on with AI/Guidelines for Human-AI Interaction: Click each card to see examples of each guideline at https://aka.ms/hci-demo which redirects you to https://aidemos.microsoft.com/guidelines-for-human-ai-interaction/demo

- Initially - make clear what the system can do and how well the system can do what it can do.

- During interaction - time services based on context; show contextually relevant information; match relevant social norms; mitigate social biases.

- When wrong - support efficient invocation, dismissal, and correction; scope services when in doubt.

- Over time - remember recent interactions; learn from user behavior; update and adapt cautiously; encourage granular feedback.

PROTIP: Some of the fonts are really small. Zoom in to read them.

Resources:

- https://www.microsoft.com/ai/responsible-ai-resources

- VIDEO: Ethics and Artificial Intelligence at DotNet Day Zurich, May 28th, 2019, by Laurent Bugnion.

Microsoft’s Confusing Branding

Microsoft has invented several names to refer to their AI offerings:

Cortana => Bing => Cognitive Services => OpenAI => Generative AI => AI Language

The 2023 branding for Microsoft’s AI services to mimic human intelligence is “AI Language”, which includes Cognitive Services and Bing.

“Cortana” was the brand-name for Microsoft’s AI. Cortana is the name of the fictional artificially intelligent character in the Halo video game series. Cortana was going to be Microsoft’s answer to Alexa, Siri, Hey Google, and other AI-powered personal assistants which respond to voice commands controlling skills that turn lights on and off, etc. However, since 2019, Cortana is considered a “skill” (app) that Amazon’s Alexa and Google Assistant can call, working across multiple platforms.

For Search, the “Bing” brand was separated out from “Cognitive Services” (before OpenAI) to its own docs at https://docs.microsoft.com/en-us/azure/search, although it’s used in “Conversational AI” using an “agent” (Azure Bot Service) to participate in (natural) conversations. BTW: in 2019 Cortana was decoupled from Windows 10 search.

On October 31st, 2020, Bing Search APIs transitioned from Azure Cognitive Services Platform to Azure Marketplace. The Bing Search v7 API subscription covers several Bing Search services (Bing Image Search, Bing News Search, Bing Video Search, Bing Visual Search, and Bing Web Search).

Azure IoT (Edge) Services are separate.

- https://www.computerworld.com/article/3252218/cortana-explained-why-microsofts-virtual-assistant-is-wired-for-business.html

PROTIP: As of Jan 8, 2024, https://aka.ms/language-studio has “coming soon” for Video and Learn, and “preview” for several services. Essentially Microsoft has two separate offerings by different groups:

- https://learn.microsoft.com/en-us/azure/ai-services/

Microsoft has three service “Providers”:

| Asset type | Resource provider namespace/Entity | Abbreviation |
|---|---|---|
| Azure Cognitive Services | Microsoft.CognitiveServices/accounts | cog- |
| Azure Machine Learning workspace | Microsoft.MachineLearningServices/workspaces | mlw- |
| Azure Cognitive Search | Microsoft.Search/searchServices | srch- |
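The abbreviations in the table are meant to be used as prefixes in resource names. Here is a minimal sketch of a name builder. The prefix lookup comes from the table, while the `workload-environment-region` pattern after the prefix and the function name are assumptions for illustration.

```python
# Prefixes taken from the table above, keyed by resource provider namespace.
ABBREVIATIONS = {
    "Microsoft.CognitiveServices/accounts": "cog-",
    "Microsoft.MachineLearningServices/workspaces": "mlw-",
    "Microsoft.Search/searchServices": "srch-",
}


def resource_name(resource_type, workload, environment, region):
    """Build a name such as "cog-chat-dev-eastus" (the pattern is an assumption)."""
    prefix = ABBREVIATIONS[resource_type]  # raises KeyError for unknown types
    return f"{prefix}{workload}-{environment}-{region}".lower()
```

Consistent prefixes make it easy to spot the resource type in cost reports and cleanup scripts.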

Microsoft’s History with AI

In April 2018 Microsoft reorganized into two divisions to offer AI:

-

The research division, headed by Harry Shum, put AI into Bing search, Cortana voice recognition and text-to-speech, ambient computing, and robotics. See Harry’s presentation in 2016.

-

Microsoft’s “computing fabric” offerings, led by Scott Guthrie, make AI services available for those building customizable machine learning with speech, language, vision, and knowledge services. Tools offered include Cognitive Services and Bot Framework, deep-learning tools like Azure Machine Learning, Visual Studio Code Tools for AI, and Cognitive Toolkit.

At Build 2018, Microsoft announced Project Brainwave to run Google’s Tensorflow AI code and Facebook’s Caffe2, plus Microsoft’s own “Cognitive Toolkit” (CNTK).

-

BrainScript uses a dynamically typed C-like syntax to express neural networks in a way that looks like math formulas. Brainscript has a Performance Profiler.

-

Hyper-parameters are a separate module (alongside Network and reader) to perform SGD (stochastic-gradient descent).

Microsoft has advanced hardware:


This pdf white paper says the "high-performance, precision-adaptable FPGA soft processor is at the heart of the system, achieving up to 39.5 TFLOPs of effective performance at Batch 1 on a state-of-the-art Intel Stratix 10 FPGA."

Microsoft's use of field programmable gate arrays (FPGA) calculates AI reportedly "five times faster than Google's TPU hardware".

"Each FPGA operates in-line between the server’s network interface card (NIC) and the top-of-rack (TOR) switch, enabling in-situ processing of network packets and point-to-point connectivity between hundreds of thousands of FPGAs at low latency (two microseconds per switch hop, one-way)."

Microsoft Conversational AI Platform for Developers is a 2021 book published by Apress by Stephan Bisser of Siili Solutions in Finland. The book covers Microsoft’s Bot Framework, LUIS, QnA Maker, and Azure Cognitive Services. https://github.com/orgs/BotBuilderCommunity/dashboard

Microsoft Mechanics YouTube channel is focused on Microsoft’s AI work.

VIDEO: What runs ChatGPT? Inside Microsoft’s AI supercomputer

- Microsoft’s own Megatron-Turing model has 530 billion parameters

- [3:05] ONNX Runtime for model portability

- [3:08] DeepSpeed to componentize models has become the de facto framework for distributed training

- 285,000 AMD CPU cores & 10,000 NVIDIA V100 Tensor Core GPUs, connected via InfiniBand (Quantum-2) and NVLink

- Bisectional bandwidth to 3.6 TBps per server

- [10:45] Low-Rank Adaptation (LoRA) fine-tuning to update only portions of a model

NOTE: Wikipedia has 6,781,394 articles containing 4.5 billion words. English Wiktionary contains 1,439,188 definitions. Webster’s Third New International Dictionary has 470,000 English words.

https://azure.microsoft.com/en-us/services/virtual-machines/data-science-virtual-machines/

Microsoft Reactor ran a “Skills Challenge” to reward a badge for those who complete an AI tutorial.

https://learn.microsoft.com/en-us/training/challenges

- AI Builder

- Machine Learning

- MLOps

- Cognitive

https://www.youtube.com/watch?v=ss-kyogPRNo by Carlotta

Competitive futures

Microsoft competes for talent with Google, Amazon, IBM, China’s Tencent, and many start-ups.

BTW, by contrast, Bernard Marr identified four types of AI evolving:

-

“reactive” machines (such as spam filters and the Netflix recommendation engine) are not able to learn or conceive of the past or future, so they respond to identical situations in the exact same way every time.

-

“limited memory” AI absorbs learning data and improves over time based on its experience, using historical data to make predictions. It’s similar to the way the human brain’s neurons connect. Deep-learning algorithms are the AI that is widely used and being perfected today.

-

“theory of mind” is when AI acquires decision-making capabilities equal to humans, and has the capability to recognize and remember emotions, and adjust behavior based on those emotions.

-

“self-aware”, also called artificial superintelligence (ASI), is “sentient” understanding of its own needs and desires.

Prerequisites to this document

This document assumes that you have done the following:

-

Get onboarded to a Microsoft Azure subscription and learn Portal GUI menu keyboard shortcuts.

-

Set up a CLI scripting environment in shell.azure.com, as I describe in my mac-setup page.

-

Use CLI to Create a Cognitive Service to get keys to call the first REST API from among sample calls to many REST APIs: the Translator Text API.

-

Set up PowerShell scripts

-

On Windows 11, install Edge according to

https://microsoftlearning.github.io/mslearn-ai-services/Instructions/setup.html

The setup is for this LAB pop-up:

https://learn.microsoft.com/en-us/training/modules/create-manage-ai-services/5a-exercise-ai-services
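The "create a Cognitive Service and get its keys" prerequisite above can be scripted. The sketch below only assembles the `az` command lines so they can be reviewed before running. The resource names are placeholders and the helper names are hypothetical. The flags shown (`--kind`, `--sku`, `--location`, `--yes`) are ones the `az cognitiveservices account` commands accept.

```python
import shlex


def create_account_cmd(name, resource_group, kind, sku, location):
    """Return argv for creating a Cognitive Services account."""
    return [
        "az", "cognitiveservices", "account", "create",
        "--name", name,
        "--resource-group", resource_group,
        "--kind", kind,
        "--sku", sku,
        "--location", location,
        "--yes",  # skip the interactive confirmation prompt
    ]


def list_keys_cmd(name, resource_group):
    """Return argv for listing the account's keys."""
    return [
        "az", "cognitiveservices", "account", "keys", "list",
        "--name", name,
        "--resource-group", resource_group,
    ]


if __name__ == "__main__":
    # Placeholder names; print the commands instead of running them.
    print(shlex.join(create_account_cmd("cog-demo", "rg-demo", "TextTranslation", "F0", "eastus")))
    print(shlex.join(list_keys_cmd("cog-demo", "rg-demo")))
```

To execute a command, pass the list to `subprocess.run(cmd, check=True)` after `az login`.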

Learning Sequence

This document covers:

-

Automatically shut down Resource Groups of a Subscription by creating a Logic App.

-

Run an API connecting to an established endpoint (SaaS) you don’t need to set up: Bing Search.

-

Create Functions

-

Create a Workspace resource to run …

- Create a Workspace resource and Compute instance to run Automated ML of regression of bike-rentals.

- Create Compute instance to run an iPython notebook

- Create ML Workspace in Portal, then ml.azure.com

-

Use cognitivevision.com to Create Custom Vision for …

- QnA Maker Conversational AI

- Train a Machine Learning model using iPython notebook

- IoT - “Hey Google, ask Azure to shut down all my compute instances”.

Automation necessary for PaaS

IMPORTANT PROTIP: As of this writing, Microsoft Azure does NOT have a full SaaS offering for every AI/ML service. You are required to create your own compute instances, and thus manage machine sizes (which is a hassle). Resources you create continue to cost money until you shut them down.

So after learning to set up the first compute service, we need to cover automation to shut them all down while you sleep.

So that you’re not tediously recreating everything every day, this tutorial focuses on automation scripts (CLI Bash and PowerShell scripts) to create compute instances, publish results, then shut them down. Each report run overwrites files from the previous run so you’re not constantly piling up storage costs.

Another reason for being able to rebuild is that if you find that the pricing tier chosen is no longer suitable for your solution, you must create a new Azure Cognitive Search resource and recreate all indexes and objects.

Use my automation scripts at https://github.com/wilsonmar/azure-quickly/ to create resources the way you like, using “Infrastructure as Code”, so you can throw away any Subscription and begin anew quickly.

My scripts also make use of a more secure way to store secrets than inserting them in code that can be checked back into GitHub.
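One common way to keep keys out of source code is to read them from environment variables at run time. The sketch below illustrates that general idea and is not necessarily how the azure-quickly scripts do it. The variable name `AZURE_AI_KEY` and the function name are hypothetical.

```python
import os


def load_secret(name, environ=None):
    """Return a secret from the environment, failing loudly if it is unset."""
    env = os.environ if environ is None else environ
    value = env.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Set the {name} environment variable before running.")
    return value


if __name__ == "__main__":
    try:
        key = load_secret("AZURE_AI_KEY")  # hypothetical variable name
        print("Key loaded (not printed).")
    except RuntimeError as err:
        print(err)
```

Because the value never appears in the file, the script can be committed to GitHub without leaking the key.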

Effective deletion hygiene is also a good way to see how your instances behave when they take advantage of cheaper spot instances, which can disappear at any time. This can also be used for “chaos engineering” efforts.

To verify resource status and to discuss with others, you still need skill at clicking through the Portal.azure.com, ML.azure.com, etc.
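The cleanup side of this automation can be sketched as follows. It assumes resource groups meant to be thrown away carry a tag (the `disposable=true` tag is invented for this example) and that the input is the JSON printed by `az group list`. It returns `az group delete` command lines rather than running them, so they can be reviewed first.

```python
import json


def delete_commands(groups_json, tag="disposable", value="true"):
    """Return one `az group delete` argv per resource group carrying the tag."""
    commands = []
    for group in json.loads(groups_json):
        tags = group.get("tags") or {}  # "tags" is null when a group has none
        if tags.get(tag) == value:
            commands.append(
                ["az", "group", "delete", "--name", group["name"], "--yes", "--no-wait"]
            )
    return commands
```

`--yes` skips the confirmation prompt and `--no-wait` returns immediately, which suits an unattended nightly run.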

References:

-

VIDEO: To release IP address, don’t stop machines, but delete the resource.

-

VIDEO: shut down automatically all your existing VMs (using a PowerShell script called by a scheduled Logic App), by Frank Boucher at github.com/FBoucher

-

Auto-shutdown by Resource Manager on a schedule is only for VMs in DevOps

-

https://www.c-sharpcorner.com/article/deploy-a-google-action-on-azure/

-

start/stop by an Automation Account Runbook for specific tags attached to different Resource Groups: Assert: “AutoshutdownSchedule: Tuesday” run every hour. https://translate.google.com/translate?sl=auto&tl=en&u=https://github.com/chomado/GoogleHomeHack
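The tag-driven schedule in the last reference can be illustrated with a small check that an hourly job might run. This is one reading of the “AutoshutdownSchedule: Tuesday” tag, assuming the tag value is an English weekday name. The function name is hypothetical.

```python
from datetime import datetime

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]


def shutdown_due(tags, now):
    """Return True when the AutoshutdownSchedule tag names today's weekday."""
    scheduled = (tags or {}).get("AutoshutdownSchedule", "")
    return scheduled.strip().lower() == WEEKDAYS[now.weekday()].lower()
```

A runbook would call this for each resource group's tags with the current time and stop the group's machines when it returns True.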

Azure AI certifications

Among Microsoft’s Azure professional certifications:

-

Previous exam 774 has now been retired. It was based on VIDEO: Azure Machine Learning Studio (classic) web services, which reflected “All Microsoft all the time” using proprietary “pickle” (pkl) model files. Classes referencing it are now obsolete.

-

AI-900 is the entry-level exam ($99). It’s a pre-requisite for:

-

AI-102 ($165 with free re-cert after 1 year) replaced AI-100 (with free re-cert after 2 years) on June 30, 2021. The shift is from infrastructure (KeyVault, AKS, Stream Analytics) to programming in C#, Python, and curl commands. AI-102 focuses on the use of pre-packaged services for AI development.

-

DP-090 and this LAB go into implementing a Machine Learning Solution with Databricks.

-

Exam DP-100 covers development of custom models using Azure Machine Learning.

-

DP-203 Data Engineering on Microsoft Azure goes into how to use machine learning within Azure Synapse Analytics.

AI-900

PROTIP: Here’s a must-see website: http://aka.ms/AIFunPath. Exam definitions at Microsoft’s LEARN include free text-based tutorials called “Learning Paths” to learn skills:

- Describe AI workloads and considerations (15-20%)

- Describe fundamental principles of machine learning on Azure (30-35%)

- Describe features of computer vision workloads on Azure (15-20%)

- Describe features of Natural Language Processing (NLP) workloads on Azure (15-20%)

- Describe features of conversational AI workloads on Azure (15-20%)

The MS LEARN site refers to files in https://github.com/MicrosoftLearning/mslearn-ai900

- Ravi Kiran’s Study Guide contains links to MS Docs.

Tim Warner has created several video courses on AI-900 and AI-100:

-

CloudSkills.io Microsoft Azure AI Fundamentals course references https://github.com/timothywarner/ai100cs

-

On OReilly.com, his “Crash Course” Excel spreadsheet of exam objectives.

-

OReilly.com references https://github.com/timothywarner/ai100

-

CloudAcademy’s 4h AI-900 video course includes lab time (1-2 hours at a time).

-

https://www.udemy.com/course/microsoft-ai-900/

-

https://www.itexams.com/info/AI-900

-

Emilio Melo on Linkedin Learning

Practice tests:

- https://www.whizlabs.com/learn/course/microsoft-azure-ai-900/

- https://www.examtopics.com/exams/microsoft/ai-900/

- https://www.whizlabs.com/learn/course/designing-and-implementing-an-azure-ai-solution/

2-hour AGuideToCloud video class by Susanth Sutheesh

AI-102

AI-102 is intended for software developers wanting to build AI-infused applications.

https://learn.microsoft.com/en-us/credentials/certifications/azure-ai-engineer

First setup development environments:

- Visual Studio Code and add-ons for C# and Python,

- NodeJs for Bot Framework Composer and Bot Framework Emulator

The AI-102 exam, as defined at Microsoft’s LEARN, has free written tutorials on each of the exam’s domains:

- Plan and manage an Azure AI (formerly Cognitive Services) solution (15–20%)

- Implement decision support solutions (10–15%)

- Implement computer vision solutions (15–20%)

- Implement natural language processing solutions (30–35%)

- Implement knowledge mining and document intelligence solutions (10–15%)

- Implement generative AI solutions (10–15%)
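One way to use those percentages is to split study time in proportion to each domain's weight. The sketch below uses the midpoint of each published range, which is a planning assumption of this example and not anything Microsoft prescribes. The function name and the 42-hour budget are arbitrary.

```python
# (low %, high %) per exam domain, as listed above.
AI102_DOMAINS = {
    "Plan and manage an Azure AI solution": (15, 20),
    "Implement decision support solutions": (10, 15),
    "Implement computer vision solutions": (15, 20),
    "Implement natural language processing solutions": (30, 35),
    "Implement knowledge mining and document intelligence solutions": (10, 15),
    "Implement generative AI solutions": (10, 15),
}


def study_hours(domains, total_hours):
    """Split total_hours across domains in proportion to each range's midpoint."""
    midpoints = {name: (low + high) / 2 for name, (low, high) in domains.items()}
    total_weight = sum(midpoints.values())
    return {
        name: round(total_hours * weight / total_weight, 1)
        for name, weight in midpoints.items()
    }


if __name__ == "__main__":
    for name, hours in study_hours(AI102_DOMAINS, 42).items():
        print(f"{hours:5.1f} h  {name}")
```

Note that the low ends of the ranges sum to 90% and the high ends to 120%, so the midpoints need normalizing, which the function does.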

PROTIP: Unlike the AI-100 (which uses Python Notebooks), hands-on exercises at https://microsoftlearning.github.io/AI-102-AIEngineer/ in Microsoft’s 5-day live course AI-102T00: Designing and Implementing a Microsoft Azure AI Solution (with cloud time) consist of C# and Python programs. Their repo at https://github.com/MicrosoftLearning/AI-102-AIEngineer (by Graeme Malcolm) was archived on Dec 23, 2023 after its content was distributed among these repos:

- https://microsoftlearning.github.io/mslearn-ai-services/

https://github.com/MicrosoftLearning/mslearn-ai-services

- AI Vision Tutorials

- AI Language Tutorials

- AI Language

- AI Decision Making

- Other: OpenAI Tutorials

- knowledge-mining Tutorials

- https://microsoftlearning.github.io/mslearn-ai-document-intelligence/

- https://github.com/MicrosoftLearning/mslearn-ai-document-intelligence

- Use prebuilt Document Intelligence models

- Extract Data from Forms

- Create a composed Document Intelligence model

DALL-E image generation

https://learn.microsoft.com/en-us/training/courses/ai-102t00 modules:

- Prepare to develop AI solutions on Azure

- Create and consume Azure AI services

- Secure Azure AI services

- Monitor Azure AI services

- Deploy Azure AI services in containers

On Coursera: the video course Developing AI Applications on Azure by Ronald J. Daskevich at LearnQuest is structured for 5 weeks. Coursera’s videos show the text at each point of the video. NOTE: It still sends people to https://notebooks.azure.com and covers Microsoft’s TDSP (Team Data Science Process) VIDEO:

- Business understanding (Charter, Objectives, Data sources, Data dictionaries)

- Data acquisition and understanding (Clean dataset, pipeline)

- Modeling

- Deployment model for use (testing) Flow: entry script to accept requests and score them, Deployment configs

- Customer Acceptance

Resources:

- https://www.whizlabs.com/microsoft-azure-certification-ai-102/ $49.90

- Preview 45 min. Exam: Designing and Implementing an Azure AI Solution (AI-102)

- https://medium.com/version-1/mastering-azure-ai-certifications-passing-the-ai-100-and-ai-102-exams-1st-time-c8a8fe223fcc

AI-100 (RETIRED)

On June 30, 2021 Microsoft retired the AI-100 exam in favor of the AI-102 exam (available in $99 beta since Feb 2021). The AI-100 exam, as defined at Microsoft’s LEARN, has free written tutorials on each of the exam’s domains:

- Analyze solution requirements (25-30%)

- Design AI solutions (40-45%)

- Implement and monitor AI solutions (25-30%)

https://github.com/MicrosoftLearning/AI-100-Design-Implement-Azure-AISol

https://github.com/MicrosoftLearning/Principles-of-Machine-Learning-Python

- Plan and manage an Azure Cognitive Services solution (15-20%)

- Implement Computer Vision solutions (20-25%)

- Implement natural language processing solutions (20-25%)

- Implement knowledge mining solutions (15-20%)

- Implement conversational AI solutions (15-20%) - chatbots

Raza Salehi (@zaalion) created on OReilly.com an AI-100 exam prep “crash course” which references his https://github.com/zaalion/oreilly-ai-100 and https://github.com/zaalion/uy-cognitve-services-crash-course

Guy Hummel’s CloudAcademy.com 7hr AI-100 video course.

Raza Salehi created on Pluralsight.com a series for Microsoft Azure AI Engineer (AI-100)

Practice tests:

- https://www.whizlabs.com/learn/course/microsoft-azure-ai-100/

- Ravi’s links still refer to AI-100

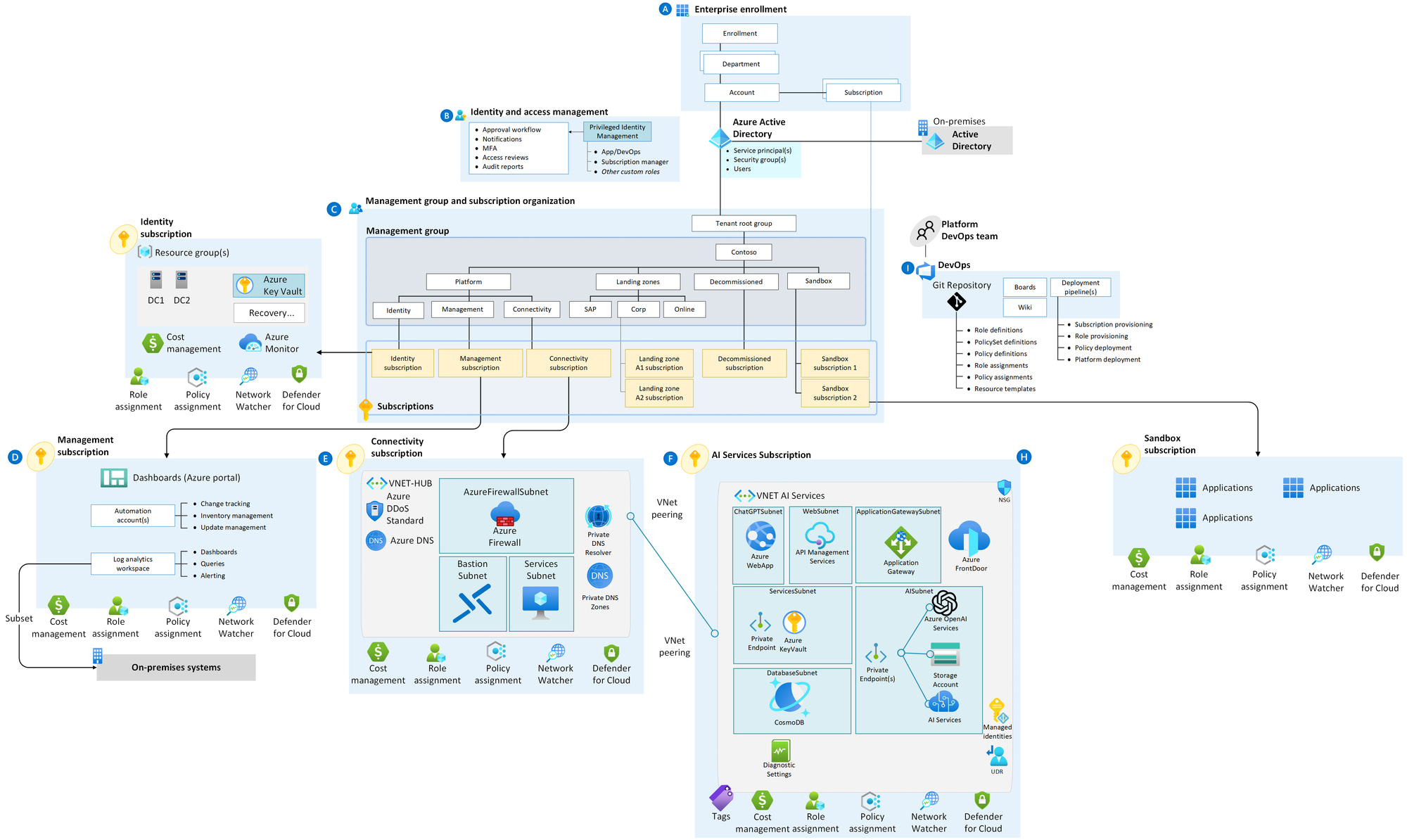

AI Landing Zones (ALZ)

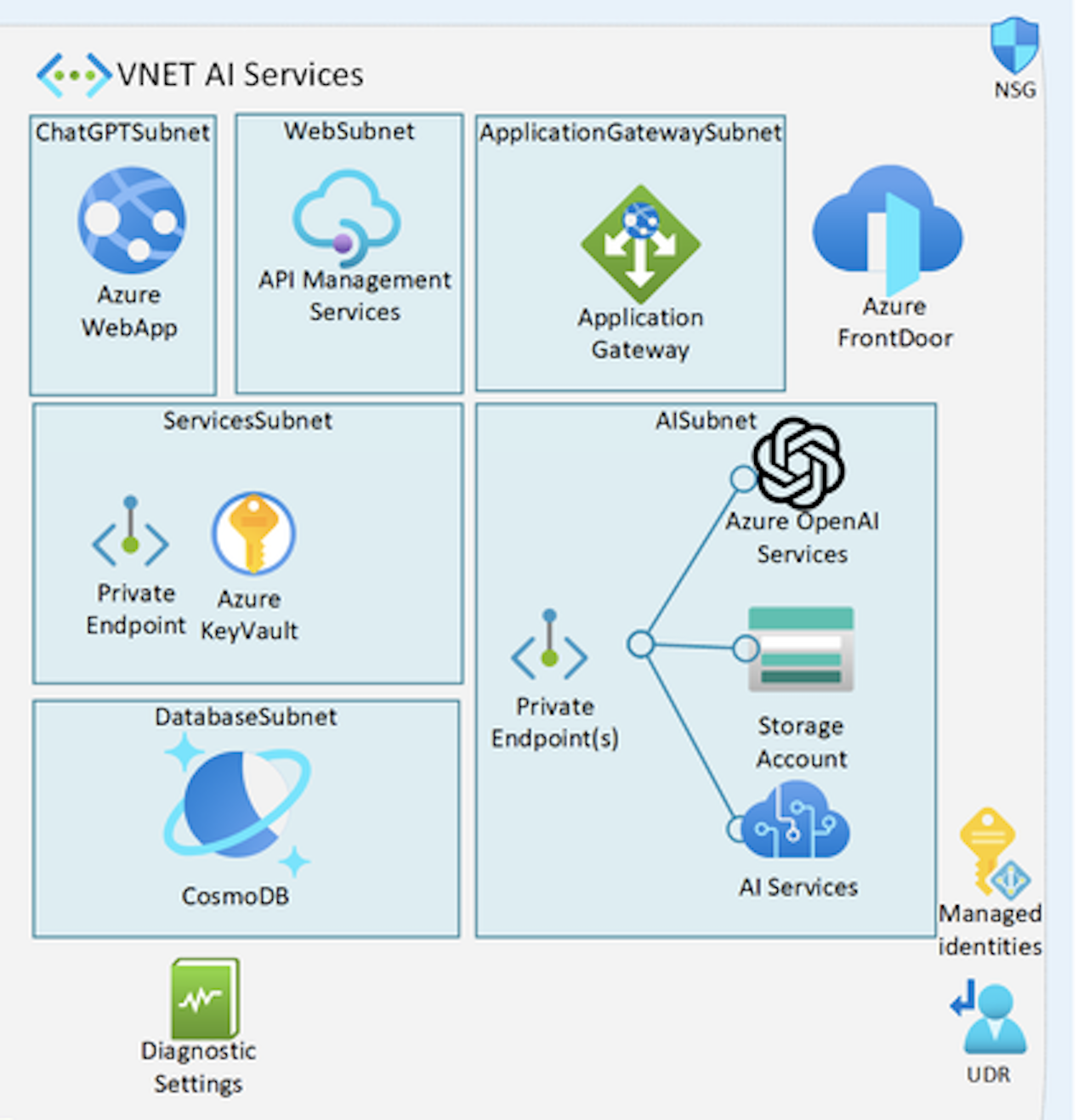

The above is the Azure Landing Zones OpenAI Reference Architecture defining how to envelop OpenAI with utilities to ensure a defensive security posture. It maps how resources are integrated in a structured, consistent manner, while ensuring governance, compliance, and security.

The diagram above is an adaptation of Microsoft’s enterprise-scale Azure Landing Zone, a part of Microsoft’s Cloud Adoption Framework (CAF).

Each Design Area has:

A. Enterprise enrollment [TF]

B. Identity and access management [TF]

C. Management group and subscription organization

D. Management subscription

E. Connectivity subscription

F. AI Services (Landing Zone) subscription

G. Monitoring?

H. Sandbox subscription

I. Platform DevOps Team [TF]

Design Area F (the Landing Zone for AI):

- Managed Identities

- UDR (User-Defined Routes) for each type of outbound connect from the VNet, defined by Azure service tags or IP addresses.

- Azure WebApp provides a GUI front-end

- DatabaseSubnet houses a CosmosDB to persist data to the WebApp

- API Management Services tracks usage by the API key assigned each user

- Application Gateway

- Azure Front Door to defend against DDoS attacks

- ServicesSubnet houses an Azure KeyVault on a Private Endpoint

- AISubnet houses Private Endpoints for:

- Storage Account

- Azure OpenAI Services

- Azure AI Services

To create the resources in the diagram:

If you prefer using Bicep:

- https://github.com/Azure/ALZ-Bicep/wiki/DeploymentFlow

- https://github.com/Azure/ALZ-Bicep/wiki/ConsumerGuide

- https://learn.microsoft.com/en-us/azure/architecture/landing-zones/bicep/landing-zone-bicep

- https://github.com/Azure/ALZ-Bicep/wiki/Accelerator

If you prefer using Terraform:

- https://registry.terraform.io/modules/Azure/caf-enterprise-scale/azurerm/latest

- https://github.com/Azure/terraform-azurerm-caf-enterprise-scale/wiki/Examples

- https://github.com/Azure/terraform-azurerm-caf-enterprise-scale?tab=readme-ov-file#readme

Included are Private Endpoints, Network Security Groups and Web Application Firewalls.

Sample ML Code

PROTIP: AI-102 is heavy on questions about coding.

Samples (unlike examples) are a more complete, best-practices solution for each of the snippets. They’re better for integrating into production code.

github.com/Azure-Samples from Microsoft offers sample code for using the Cognitive Services REST API in each language:

https://docs.microsoft.com/en-us/samples/azure-samples/azure-sdk-for-go-samples/azure-sdk-for-go-samples/

A complete sample app is Microsoft’s Northwind Traders consumer ecommerce store.

IMPORTANT: Cognitive Services SDK Samples for:

Tim Warner’s https://github.com/timothywarner/ai100 includes Powershell scripts:

- keyvault-soft-delete-purge.ps1

- keyvault-storage-account.ps1

- python-keyvault.py

- ssh-to-aks.md - SSH into AKS cluster nodes

- xiot-edge-windows.ps1

- autoprice.py

Among Azure Machine Learning examples is a CLI at https://github.com/Azure/azureml-examples/tree/main/cli

PROTIP: CAUTION: Each service has a different maturity level in its documentation at azure.microsoft.com/en-us/downloads, such as SDK for Python open-sourced at github.com/azure/azure-sdk-for-python, described at docs.microsoft.com/en-us/azure/developer/python.

AI Services

The hands-on steps below enable you to operate offline on a macOS laptop to run the Azure AI SDK in a Docker container using VS Code Dev Containers.

https://learn.microsoft.com/en-us/azure/ai-services/

The setup runs from VSCode to run Python 3.10 with Docker containers within Azure AI Studio at https://ai.azure.com
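The steps that follow install several command-line tools. Before starting, a short script can report which ones are already on your PATH. This is a convenience sketch only: the tool list is inferred from the steps in this section, and the function name is hypothetical.

```python
import shutil

# Executable names inferred from the installation steps in this section.
REQUIRED_TOOLS = ["docker", "code", "git", "az", "dotnet", "conda"]


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All tools found.")
```

The `which` parameter exists so the check can be exercised without touching the real PATH.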

Following How to start:

Work with Azure AI projects in VS Code

-

Install Docker Desktop, https://code.visualstudio.com/docs/devcontainers/tutorial

- Install Visual Studio Code (VSCode). Within VSCode, install Dev Containers extension to use VS Code Dev Container

-

run the command

Dev Containers: Try a Dev Container Sample…

-

Install Git and configure it.

-

Create a folder “ai_env” from GitHub for the “Sample quickstart repo for getting started building an enterprise chat copilot in Azure AI Studio”:

FOLDER="ai_env"
git clone https://github.com/azure/aistudio-copilot-sample "${FOLDER}" --depth 1
cd "${FOLDER}"

Response:

Cloning into 'ai_env'...
remote: Enumerating objects: 122, done.
remote: Counting objects: 100% (122/122), done.
remote: Compressing objects: 100% (107/107), done.
remote: Total 122 (delta 11), reused 88 (delta 8), pack-reused 0
Receiving objects: 100% (122/122), 227.58 KiB | 951.00 KiB/s, done.
Resolving deltas: 100% (11/11), done.

-

Define provenance:

git remote add upstream https://github.com/azure/aistudio-copilot-sample
git remote -v

-

Open with VSCode to an error:

code ai_env

-

Select the “Reopen in Dev Containers” button. If it doesn’t appear, open the command palette (Ctrl+Shift+P on Windows and Linux, Cmd+Shift+P on Mac) and run the Dev Containers: Reopen in Container command.

- Install Homebrew

-

Install the Azure CLI using Homebrew (requires python@3.11?):

brew install azure-cli

-

Install the .NET (dotnet) CLI commands:

DOTNET_ROOT="/opt/homebrew/Cellar/dotnet/7.0.100/libexec"

-

Add the $HOME/.dotnet/tools folder to the PATH in your .zshrc file, which runs each time a shell starts.

- Install miniconda3.

-

From any folder, create a Conda environment containing the Azure AI SDK:

conda create --name ai_env python=3.10 pip
conda activate ai_env

Sample response:

Collecting package metadata (current_repodata.json): done
Solving environment: done

==> WARNING: A newer version of conda exists. <==
  current version: 23.5.0
  latest version: 24.1.1

Please update conda by running
  $ conda update -n base -c conda-forge conda

Or to minimize the number of packages updated during conda update use
  conda install conda=24.1.1

## Package Plan ##

  environment location: /Users/wilsonmar/miniconda3/envs/ai_env

  added / updated specs:
  - python=3.10

The following packages will be downloaded:

  package | build
  ---------------------------|-----------------
  python-3.10.13 | h00d2728_1_cpython 12.4 MB conda-forge
  setuptools-69.1.0 | pyhd8ed1ab_0 460 KB conda-forge
  ------------------------------------------------------------
  Total: 12.9 MB

The following NEW packages will be INSTALLED:

  bzip2 conda-forge/osx-64::bzip2-1.0.8-h10d778d_5
  ca-certificates conda-forge/osx-64::ca-certificates-2024.2.2-h8857fd0_0
  libffi conda-forge/osx-64::libffi-3.4.2-h0d85af4_5
  libsqlite conda-forge/osx-64::libsqlite-3.45.1-h92b6c6a_0
  libzlib conda-forge/osx-64::libzlib-1.2.13-h8a1eda9_5
  ncurses conda-forge/osx-64::ncurses-6.4-h93d8f39_2
  openssl conda-forge/osx-64::openssl-3.2.1-hd75f5a5_0
  pip conda-forge/noarch::pip-24.0-pyhd8ed1ab_0
  python conda-forge/osx-64::python-3.10.13-h00d2728_1_cpython
  readline conda-forge/osx-64::readline-8.2-h9e318b2_1
  setuptools conda-forge/noarch::setuptools-69.1.0-pyhd8ed1ab_0
  tk conda-forge/osx-64::tk-8.6.13-h1abcd95_1
  tzdata conda-forge/noarch::tzdata-2024a-h0c530f3_0
  wheel conda-forge/noarch::wheel-0.42.0-pyhd8ed1ab_0
  xz conda-forge/osx-64::xz-5.2.6-h775f41a_0

Proceed ([y]/n)? _

-

Install the Azure AI CLI using the .NET (dotnet) CLI command:

dotnet tool install --global Azure.AI.CLI --prerelease

Response:

You can invoke the tool using the following command: ai
Tool 'azure.ai.cli' (version '1.0.0-preview-20240216.1') was successfully installed.

-

Obtain Python dependency libraries defined in requirements.txt:

conda install pip
pip install -r requirements.txt

Alternately, if the repo instead provided an environment.yml, the environment could be built from it with: conda env create -f environment.yml

-

Get the ai cli:

ai

Results with the prerelease version:

AI - Azure AI CLI, Version 1.0.0-preview-20240216.1
Copyright (c) 2024 Microsoft Corporation. All Rights Reserved.

This PUBLIC PREVIEW version may change at any time.
See: https://aka.ms/azure-ai-cli-public-preview

USAGE: ai [...]

HELP
  ai help
  ai help init

COMMANDS
  ai init [...] (see: ai help init)
  ai config [...] (see: ai help config)
  ai dev [...] (see: ai help dev)
  ai chat [...] (see: ai help chat)
  ai flow [...] (see: ai help flow)
  ai search [...] (see: ai help search)
  ai speech [...] (see: ai help speech)
  ai service [...] (see: ai help service)

EXAMPLES
  ai init
  ai chat --interactive --system @prompt.txt
  ai search index update --name MyIndex --files *.md
  ai chat --interactive --system @prompt.txt --index-name MyIndex

SEE ALSO
  ai help examples
  ai help find "prompt"
  ai help find "prompt" --expand
  ai help find topics "examples"
  ai help list topics
  ai help documentation

-

Navigate to the folder

-

Initialize the ai project:

ai init

- Select “Initialize: Existing AI project”.

- Select “LAUNCH: ai login (interactive device code)”


- Highlight and copy the code.

-

Open an internet browser (Safari) to the page

- Click and paste the code from your laptop Clipboard (to authenticate).

- Select your user email.

- Click Continue to “Are you trying to sign in to Microsoft Azure CLI? Only continue if you downloaded the app from a store or website that you trust.”

-

Close the browser tab when you see

You have signed in to the Microsoft Azure Cross-platform Command Line Interface application on your device. You may now close this window.

- Confirm that the subscription shown is the one you want to use.

-

cd to the folder

Notice that file src/copilot_aisdk/requirements.txt contains:

openai
azure-identity
azure-search-documents==11.4.0b6
jinja2

- Select “(Create w/ standalone Open AI resource)” rather than integrated

- Select RESOURCE GROUP, CREATE RESOURCE GROUP, CREATE AZURE OPENAI RESOURCE

- Select a name for “** CREATING **”

-

For “AZURE OPENAI DEPLOYMENT (CHAT)” select “(Create new)”. FIXME:

CREATE DEPLOYMENT (CHAT)
Model: *** No deployable models found ***
CANCELED: No deployment selected

- Select one or skip:

- ada-embedding-002

- gpt-35-turbo-16k for chat,

- gpt-35-turbo-16k

- gpt4-32k evaluation

-

Azure AI search resource

This generates a config.json file in the root of the repo for the SDK to use when authenticating to Azure AI services.

Alternately:

https://github.com/azure-samples/azureai-samples

-

Select the Node sample from the list.

File devcontainer.json is a config file that determines how your dev container gets built and started.

Vision services

Vision Tutorial

https://microsoftlearning.github.io/mslearn-ai-vision/

- Analyze Images with Azure AI Vision

- Classify images with an Azure AI Vision custom model

- Detect Objects in Images with Custom Vision

- Detect and Analyze Faces

- Read Text in Images

- Analyze Video with Video Analyzer

- Classify Images with Azure AI Custom Vision

Use GUI

References:

- aka.ms/cognitivevision

-

In a web browser, navigate to Vision Studio:

- Sign in.

-

Select a Subscription.

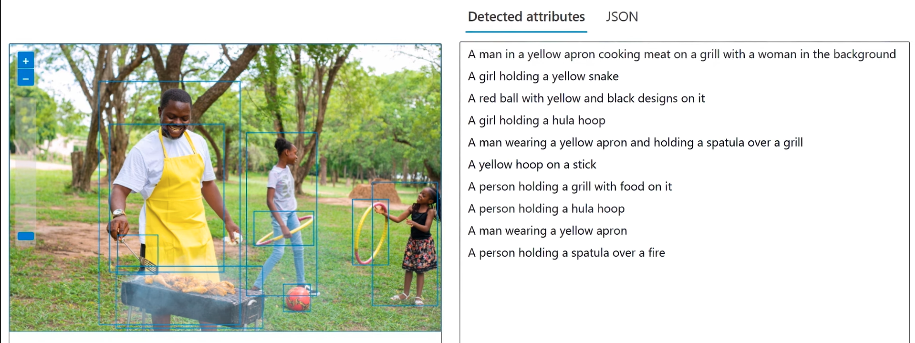

VIDEO: The AI Vision service categories on the menu use Microsoft’s “Florence” foundation model (new in 2023), trained on an “open world” of billions of images combined with a large language model. That enables the identification of objects and their location in a frame such as this:

-

Click each, then “Try it out”:

Optical character recognition (OCR):

- Extract text from images - Extract printed and handwritten style text from images and documents for supported languages.

Spatial analysis - Video Retrieval and Summary:

- Video Retrieval and Summary - Generate a brief summary of the main points shown in video. Locate specific keywords and jump to the relevant section.

- Count people in an area - Analyze real-time or recorded video to count the number of people in a designated zone in a camera’s field of view.

- Detect when people cross a line - Analyze real-time streaming video to detect when a person crosses a line in the camera’s field of view.

- Detect when people enter/exit a zone - Analyze real-time streaming video to detect when a person enters or exits a zone in the camera’s field of view.

- Monitor social distancing - Analyze real-time streaming video that tracks when people violate a distance rule in the camera’s field of view.

- Face - Detect faces in an image

Image analysis - click “Try it out” on each of these:

- Recognize products on shelves - Identify products on shelves, gaps in product availability, and compliance for planograms.

- Customize models with images - Create custom image classification and object detection models with images using Vision Studio and Azure ML.

- Search photos with image retrieval - Retrieve specific moments within your photo album. For example, you can search for: a wedding you attended last summer, your pet, or your favorite city.

- Add dense captions to images - Generate human-readable captions for all important objects detected in your image.

- Remove backgrounds from images - Easily remove the background and preserve foreground elements in your image.

- Add captions to images - Generate a human-readable sentence that describes the content of an image.

- Detect common objects in images - Recognize the location of objects of interest in an image and assign them a label.

- Extract common tags from images - Use an AI model to automatically assign one or more labels to an image.

- Detect sensitive content in images - Detect sensitive content in images so you can moderate their usage in your applications.

- Create smart-cropped images - Create cropped image thumbnails based on the key areas of a larger image.

- Extract text from images - Extract printed and handwritten style text from images and documents for supported languages.

- Click “I acknowledge that this service will incur usage to my Azure account.”

-

Select a Region (East US, West Europe, West US, or West US 2)

The “cog-ms-learn-vision” resource is created for you automatically.

- Click “View all resources”

- Check “cog-ms-learn-vision” created for you automatically.

- Click “Select as default resource”.

- Close.

Use REST API

https://github.com/Azure-Samples/cognitive-service-vision-model-customization-python-samples

https://microsoftlearning.github.io/mslearn-ai-fundamentals/Instructions/Labs/05-ocr.html

-

On the Getting started with Vision landing page, select Optical character recognition, and then the Extract text from images tile.

-

Under the Try It Out subheading, acknowledge the resource usage policy by reading and checking the box.

-

download ocr-images.zip by selecting

https://aka.ms/mslearn-ocr-images

-

Open the folder.

-

On the portal, select Browse for a file and navigate to the folder on your computer where you downloaded ocr-images.zip. Select advert.jpg and select Open.

-

review what is returned:

In Detected attributes, any text found in the image is organized into a hierarchical structure of regions, lines, and words.
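To make the regions, lines, and words hierarchy concrete, here is a minimal Python sketch that walks a result of that shape. The sample payload, the `x,y,width,height` string form of `boundingBox`, and the helper names `parse_box` and `lines_from_ocr` are assumptions made for illustration, not output captured from the service.

```python
from typing import Dict, List

# Hypothetical sample shaped like an OCR result: regions -> lines -> words.
SAMPLE_RESPONSE = {
    "language": "en",
    "regions": [
        {
            "boundingBox": "20,20,300,80",
            "lines": [
                {
                    "boundingBox": "20,20,300,30",
                    "words": [
                        {"boundingBox": "20,20,90,30", "text": "Northwind"},
                        {"boundingBox": "120,20,80,30", "text": "Traders"},
                    ],
                },
                {
                    "boundingBox": "20,60,200,30",
                    "words": [
                        {"boundingBox": "20,60,60,30", "text": "Fresh"},
                        {"boundingBox": "90,60,100,30", "text": "produce"},
                    ],
                },
            ],
        }
    ],
}


def parse_box(box: str) -> Dict[str, int]:
    """Turn an 'x,y,width,height' string into named integer fields."""
    x, y, width, height = (int(part) for part in box.split(","))
    return {"x": x, "y": y, "width": width, "height": height}


def lines_from_ocr(response: dict) -> List[dict]:
    """Flatten regions -> lines -> words into one entry per text line."""
    results = []
    for region in response.get("regions", []):
        for line in region.get("lines", []):
            # Join the words of a line back into readable text.
            text = " ".join(word["text"] for word in line.get("words", []))
            results.append({"text": text, "box": parse_box(line["boundingBox"])})
    return results


for entry in lines_from_ocr(SAMPLE_RESPONSE):
    print(entry["box"], entry["text"])
```

Each returned entry pairs a line of text with the rectangle that a viewer would draw around it.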

On the image, the location of text is indicated by a bounding box, as shown here:

App for the blind: VIDEO: INTRO: SeeingAI.com. Permissions for the “See It All” app are for its internal name “Mt Studio Web Prod”.

https://docs.microsoft.com/en-us/learn/paths/explore-computer-vision-microsoft-azure

HISTORY: In 2014, Microsoft showed off its facial recognition capabilities with a website (how-old.net which now is owned by others) to guess how old someone is. At conferences they built a booth that takes a picture.

LEARN: https://docs.microsoft.com/en-us/learn/modules/read-text-computer-vision/

DEMO: Seeing AI app talking camera narrates the world around blind people.

- Semantic segmentation is the ML technique in which individual pixels in the image are classified according to the object to which they belong.

-

Image analysis

- Face detection, analysis, and recognition

- Optical character recognition (OCR) for small amounts of text

- The Read API works asynchronously on images with a lot of text, to parse pages, lines, and words.

- Video Indexer service analyzes the visual and audio channels of a video, and indexes its content.

Computer Vision

“Computer Vision” analyzes images and video to extract descriptions, tags, objects, and text. API Reference, DOCS, INTRO:

- Read the text in the image

- Detects Objects

- Identifies Landmarks

- Categorize image

https://docs.microsoft.com/en-us/azure/cognitive-services/computer-vision/quickstarts-sdk/client-library?tabs=visual-studio&pivots=programming-language-csharp READ

Computer Vision demo

-

Select images and review the information returned by the Azure Computer Vision web service:

DEMO: https://aidemos.microsoft.com/computer-vision

-

Click an image to see results of “Analyze and describe images”. Objects are returned with a bounding box to indicate their location within the image.

- Click “Try another image” for another selection.

- Click “Next step”.

- Read text in imagery.

- Read handwriting

- Recognize celebrities & landmarks - the service has a specialized domain model trained to identify thousands of well-known celebrities from the worlds of sports, entertainment, and business. The “Landmarks” model can identify famous landmarks, such as the Taj Mahal and the Statue of Liberty.

Additionally, the Computer Vision service can:

- Detect image types - for example, identifying clip art images or line drawings.

- Detect image color schemes - specifically, identifying the dominant foreground, background, and overall colors in an image.

- Generate thumbnails - creating small versions of images.

- Moderate content - detecting images that contain adult content or depict violent, gory scenes.

Computer Vision API shows all the features.

-

- Right-click “Launch VM mode” for the “AI-900” lab on a Windows VM.

- X: Click the Edge browser icon.

- X: Click to remove pop-ups.

- Go to portal.azure.com

- Sign in using an email for which you have an Azure subscription.

- Type the password. You can’t copy outside the VM and paste into it.

- X: Do not save your password.

- Open Visual Studio to see

-

X: On another browser tab, view the repo (faster):

-

Follow the instructions in the notebook to create a resource, etc.

TODO: Incorporate the code and put it in a pipeline that minimizes manual actions.

- To take a quiz and get credit, click “here” in the VM to complete the Learn module with a Knowledge Check.

Custom Vision

Azure Custom Vision trains custom models referencing custom (your own) images. Custom vision has two project types:

-

Image classification is a machine-learning-based form of computer vision in which a model is trained to categorize images based on their class (the primary subject matter they contain).

-

Object detection goes further than classification to identify the “class” of individual objects within the image, and to return the coordinates of a bounding box that indicates the object’s location.

HANDS-ON: LEARN hands-on lab

- Labfiles for C-Sharp and Python.

LAB: Steps:

- Create state-of-the-art computer vision models.

- Upload and tag training images.

- Train classifiers for active learning.

- Perform image prediction to identify probable matches to a trained model.

-

Perform object detection to locate elements within an image and return a bounding box.

-

Open

DOCS:

-

MS LEARN HANDS-ON LAB:

aka.ms/learn-image-classification which redirects to

docs.microsoft.com/en-us/learn/modules/classify-images-custom-vision

-

Load the code from:

https://github.com/MicrosoftLearning/mslearn-ai900/blob/main/03%20-%20Object%20Detection.ipynb

https://docs.microsoft.com/en-us/learn/modules/evaluate-requirements-for-custom-computer-vision-api/3-investigate-service-authorization

Custom Vision APIs use two subscription keys, each controlling access to one API:

- A training key to access API members which train the model.

- A prediction key to access API members which classify images against a trained model.
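As a rough sketch of where the prediction key goes, the Python below builds (but does not send) a classify-by-URL request against the Custom Vision prediction REST API. The `v3.0` path segment, the function name, and all argument values are assumptions for illustration; copy the real prediction URL and key from your own project's settings. Training calls carry the other key, in a `Training-Key` header.

```python
import json
import urllib.request


def build_prediction_request(endpoint, project_id, published_name,
                             prediction_key, image_url):
    """Build (but do not send) a Custom Vision classify-by-URL request."""
    url = (f"{endpoint.rstrip('/')}/customvision/v3.0/Prediction/"
           f"{project_id}/classify/iterations/{published_name}/url")
    # The image to classify is referenced by URL in the JSON body.
    body = json.dumps({"Url": image_url}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # The prediction key (not the training key) authorizes this call.
            "Prediction-Key": prediction_key,
            "Content-Type": "application/json",
        },
    )


# Placeholder values only; nothing is sent over the network here.
request = build_prediction_request(
    "https://example.cognitiveservices.azure.com/",
    "00000000-0000-0000-0000-000000000000",
    "Iteration1",
    "prediction-key-goes-here",
    "https://example.com/apple.jpg",
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it and return a JSON document of tag predictions.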

https://docs.microsoft.com/en-us/learn/modules/evaluate-requirements-for-custom-computer-vision-api/4-examine-the-custom-vision-prediction-api

References: CV API

Face => AI

Azure “Face” is used to build face detection and facial recognition solutions in five categories:

- Face Verification: Check the likelihood that two faces belong to the same person.

- Face Detection: Detect human faces in an image.

- Face Identification: Search and identify faces.

- Face Similarity: Find similar faces.

- Face Grouping: Organize unidentified faces into (face list) groups, based on their visual similarity.

NOTE: On June 11, 2020, Microsoft announced that it will not sell facial recognition technology to police departments in the United States until strong regulation, grounded in human rights, has been enacted. As such, customers may not use facial recognition features or functionality included in Azure Services, such as Face or Video Indexer, if a customer is, or is allowing use of such services by or for, a police department in the United States.

FaceTutorials

HANDS-ON: LEARN tutorial using these Lab files.

A face location is face coordinates – a rectangular pixel area in the image where a face has been identified.

The Face API can return up to 27 landmarks for each identified face that you can use for analysis. Azure allows a person to have up to 248 faces. There is a 6 MB limit on the size of each file (jpeg, png, gif, bmp).

Face attributes are predefined properties of a face or a person represented by a face. The Face API can optionally identify and return the following types of attributes for a detected face:

- Age

- Gender

- Smile intensity

- Facial hair

- Head pose (3D)

- Emotion

Each emotion detected is returned in the JSON response as a floating point number:

- Neutral

- Anger

- Contempt

- Disgust

- Fear

- Happiness

- Sadness

- Surprise

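PROTIP: Each emotion score is a confidence between 0 and 1, so “Happiness: 0.999983543” is near the certainty of 1.0. A value such as “2.80234E-08” is in scientific notation: the decimal point moves 8 places to the left (0.0000000280234), which is effectively zero.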
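A small Python sketch shows how such scores might be read. It assumes each emotion arrives as a number between 0 and 1. The dictionary below is made-up sample data, not a captured response, and `top_emotion` and `as_decimal` are hypothetical helper names.

```python
# Hypothetical emotion scores (0.0 to 1.0), one per emotion in the list above.
emotion = {
    "neutral": 1.6e-05,
    "anger": 0.0,
    "contempt": 2.80234e-08,
    "disgust": 0.0,
    "fear": 0.0,
    "happiness": 0.999983543,
    "sadness": 0.0,
    "surprise": 0.0,
}


def top_emotion(scores):
    """Return the (name, score) pair with the highest score."""
    name = max(scores, key=scores.get)
    return name, scores[name]


def as_decimal(score, places=12):
    """Render a float as plain decimals instead of scientific notation."""
    return f"{score:.{places}f}"


name, score = top_emotion(emotion)
print(name, score)
print("contempt as decimals:", as_decimal(emotion["contempt"]))
```

Printing tiny values in plain decimals makes it obvious that a score written with an exponent such as E-08 is close to zero, not a large number.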

https://github.com/Azure-Samples/cognitive-services-FaceAPIEnrollmentSample

DEMO: LAB: https://github.com/microsoft/hackwithazure/tree/master/workshops/web-ai-happy-sad-angry

https://learn.microsoft.com/en-us/azure/ai-services/computer-vision/quickstarts-sdk/identity-client-library?tabs=windows%2Cvisual-studio&pivots=programming-language-csharp

Create a Face API subscription

Subscribe to the Face API:

- Sign in to the Azure portal.

- Go to

- Create a resource > AI + Machine Learning > Face

-

Enter a unique name for your Face API subscription name in variable MY_FACE_ACCT

Paste in setmem.sh

export MY_FACE_ACCT=faceme

- Choose the Location nearest to you, such as “westus”.

- Select F0 the free or lowest-cost Pricing tier.

- Check “By checking this box, I certify that use of this service or any service that is being created by this Subscription Id, is not by or for a police department in the United States.”

-

Click “Create” to subscribe to the Face API.

- When provisioned, “Go to resource”.

-

In “Keys and Endpoint”, copy Key1 and paste in setmem.sh

export MY_FACE_KEY1=subscription_key

The endpoint used to make REST calls is “https://$MY_FACE_ACCT.cognitiveservices.azure.com/”
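As a hedged illustration of how the account name and key combine into a REST call, the Python sketch below builds a Face detect request. The function names, the choice of query parameters, and the image URL are assumptions; the account name and key would come from the `MY_FACE_ACCT` and `MY_FACE_KEY1` variables exported above.

```python
import json
import urllib.request


def build_face_detect_request(account, key, image_url):
    """Build (but do not send) a Face detect request for an image URL."""
    endpoint = f"https://{account}.cognitiveservices.azure.com"
    # Ask for landmarks only; face IDs are switched off in this sketch.
    url = (f"{endpoint}/face/v1.0/detect"
           "?returnFaceId=false&returnFaceLandmarks=true")
    body = json.dumps({"url": image_url}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
    )


def detect_faces(request):
    """Send the request and return the parsed list of detected faces."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Placeholder values only; building the request makes no network call.
sample = build_face_detect_request("faceme", "subscription_key",
                                   "https://example.com/photo.jpg")
print(sample.full_url)
```

To run it for real, read the two values with `os.environ["MY_FACE_ACCT"]` and `os.environ["MY_FACE_KEY1"]`, build the request, and pass it to `detect_faces`; each returned item should include a `faceRectangle` giving the face location.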

https://github.com/wilsonmar/azure-quickly/blob/main/az-face-init.sh

https://docs.microsoft.com/en-us/learn/modules/identify-faces-with-computer-vision/8-test-face-detection?pivots=csharp

References:

https://docs.microsoft.com/en-us/azure/cognitive-services/Face/Overview What is the Azure Face service?

https://docs.microsoft.com/en-us/azure/cognitive-services/Face/

https://docs.microsoft.com/en-us/azure/cognitive-services/Face/quickstarts/client-libraries?tabs=visual-studio&pivots=programming-language-csharp

https://github.com/MicrosoftLearning/mslearn-ai900/blob/main/04%20-%20Face%20Analysis.ipynb

CustomVision

Form Recognizer = Document Intelligence

Video Indexer

Media Services & Storage Account:

-

In Portal, Media Services blade.

-

Specify Resource Group, account name, storage account, System-managed identity.

-

In a browser, go to the Video Indexer Portal URL:

NOTE: Video Indexer is under Media Services rather than Cognitive Services.

-

Click the provider to login: AAD (Entra) account, Personal Microsoft account, Google.

PROTIP: Avoid using Google due to the permissions you’re asked to give:

-

Say Yes to Video Indexer permission to: Access your email addresses & View your profile info and contact list, including your name, gender, display picture, contacts, and friends.

NOTE: You’ll get an email with subject “Your subscription to the Video Indexer API”.

On your mobile phone you’ll get a “Connected to new app” notice for Microsoft Authenticator.

-

Click “Account settings”. PRICING: up to 10 hours (600 minutes) of free indexing to website users and up to 40 hours (2,400 minutes) of free indexing to API users. Media reserved units are pre-paid. See FAQ

-

Switch to the file which defines Azure environment variable VIDEO_INDEXER_ACCOUNT (in setmem.sh) as described in https://wilsonmar.github.io/azure-quickly

- Switch back.

- In Account settings, click “Copy” to get the Account ID GUID in your Clipboard.

-

Switch to the file which defines Azure environment variable VIDEO_INDEXER_ACCOUNT (in setmem.sh) as described in https://wilsonmar.github.io/azure-quickly

- Highlight the sample value and paste (Command+V).

-

Switch back.

-

Go to the “Azure Video Analyzer for Media Developer Portal”: DOCS

-

Click “Sign In”. Click “Profile”

NOTE: The UI has changed since publication of Microsoft’s tutorial, which says “Go to the Products tab, then select Authorization.”

- Click “Show” on the “Primary key” line. Double-click on the subscription key to copy to Clipboard (Command+C).

-

Switch to the file which defines Azure environment variable VIDEO_INDEXER_API_KEY (in setmem.sh) as described in

https://wilsonmar.github.io/azure-quickly

- Highlight the sample value and paste (Command+V).

-

Switch back.

Upload video using Portal GUI

Upload video using program

https://api-portal.videoindexer.ai/api-details#api=Operations&operation=Get-Account-Access-Token

-

DOCS: Select the Azure Video Indexer option for uploading videos: upload from a URL. (There is also the option to send the file as a byte array in an API call, which has limits of 2 GB in size and a 30-minute timeout.)

az-video-upload.py in https://wilsonmar.github.io/azure-quickly

-

Make an additional call to retrieve insights.

-

Reference existing asset ID
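The three calls involved (get an access token, upload from a URL, retrieve insights) can be sketched in Python as URL builders. This is an assumption-laden outline rather than the contents of az-video-upload.py: the function names are hypothetical, and the paths follow the classic Video Indexer API shown in the API portal linked above, so verify them there before relying on them.

```python
from urllib.parse import urlencode

API_ROOT = "https://api.videoindexer.ai"


def access_token_url(location, account_id, allow_edit=True):
    """URL for an account access token (sent with the API key header)."""
    query = urlencode({"allowEdit": str(allow_edit).lower()})
    return f"{API_ROOT}/Auth/{location}/Accounts/{account_id}/AccessToken?{query}"


def upload_url(location, account_id, access_token, name, video_url):
    """URL that asks Video Indexer to fetch and index a video by its URL."""
    query = urlencode({"accessToken": access_token,
                       "name": name,
                       "videoUrl": video_url})
    return f"{API_ROOT}/{location}/Accounts/{account_id}/Videos?{query}"


def index_url(location, account_id, video_id, access_token):
    """URL for the additional call that retrieves a video's insights."""
    query = urlencode({"accessToken": access_token})
    return (f"{API_ROOT}/{location}/Accounts/{account_id}"
            f"/Videos/{video_id}/Index?{query}")


# Placeholder values; a real run would take the account ID from
# VIDEO_INDEXER_ACCOUNT and the key from VIDEO_INDEXER_API_KEY.
print(access_token_url("trial", "my-account-id"))
print(upload_url("trial", "my-account-id", "TOKEN", "demo",
                 "https://example.com/video.mp4"))
print(index_url("trial", "my-account-id", "my-video-id", "TOKEN"))
```

The token request is a GET that carries the subscription key in an `Ocp-Apim-Subscription-Key` header, the upload is a POST, and the insights call is a GET that is repeated until indexing has finished.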

Search Media files

-

In “Media files” at https://www.videoindexer.ai/media/library

-

Click “Samples”, and click on a video file to Play to see the media’s people, topics (keywords).

NOTE: Search results include exact start times where an insight exists, possibly multiple matches for the same video if multiple segments are matched.

-

Click a tag to see where it was mentioned in the timeline.

Alternately, use the API to search: ???

Model customizations

Each video consists of scenes grouping shots, which each contain keyframes.

-

A scene represents a single event within the video. It groups consecutive shots that are related. It will have a start time, end time, and thumbnail (first keyframe in the scene).

-

A shot represents a continuous segment of the video. Transitions within the video are detected which determine how it is split into shots. Shots have a start time, end time, and list of keyframes.

-

Keyframes are frames that represent the shot. Each one is for a specific point in time. There can be gaps in time between keyframes but together they are representative of the shot. Each keyframe can be downloaded as a high-resolution image.
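The scene, shot, and keyframe nesting described above can be modeled with a few Python dataclasses. This is only an illustrative data model with hypothetical class and field names; it is not the JSON schema that Video Indexer returns.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Keyframe:
    time_seconds: float  # the specific point in time this frame represents


@dataclass
class Shot:
    start: float
    end: float
    keyframes: List[Keyframe] = field(default_factory=list)


@dataclass
class Scene:
    start: float
    end: float
    shots: List[Shot] = field(default_factory=list)

    def thumbnail(self) -> Optional[Keyframe]:
        """Return the first keyframe in the scene, or None if there is none."""
        for shot in self.shots:
            if shot.keyframes:
                return shot.keyframes[0]
        return None


# A made-up scene with two consecutive, related shots.
scene = Scene(
    start=0.0,
    end=12.0,
    shots=[
        Shot(0.0, 5.0, [Keyframe(1.0), Keyframe(4.0)]),
        Shot(5.0, 12.0, [Keyframe(8.5)]),
    ],
)
print(scene.thumbnail())
```

Modeling the thumbnail as a method keeps it tied to the rule stated above: it is the first keyframe in the scene unless another keyframe is chosen.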

-

-

Click “Model customizations”

- Set as thumbnail another keyframe.

https://github.com/Azure-Samples/media-services-video-indexer

https://dev.to/adbertram/getting-started-with-azure-video-indexer-and-powershell-3i32

Azure Form Recognizer

“Form Recognizer” extracts information from images obtained from scanned forms and invoices.

- Raza Salehi’s Recognizer

https://github.com/MicrosoftLearning/mslearn-ai900/blob/main/06%20-%20Receipts%20with%20Form%20Recognizer.ipynb

https://docs.microsoft.com/en-us/samples/azure/azure-sdk-for-python/tables-samples/

https://docs.microsoft.com/en-us/samples/azure/azure-sdk-for-java/formrecognizer-java-samples/

https://docs.microsoft.com/en-us/samples/azure/azure-sdk-for-net/azure-form-recognizer-client-sdk-samples/

https://docs.microsoft.com/en-us/samples/azure/azure-sdk-for-python/formrecognizer-samples/

OCR

https://github.com/MicrosoftLearning/mslearn-ai900/blob/main/05%20-%20Optical%20Character%20Recognition.ipynb

-

Image classification - https://github.com/MicrosoftLearning/mslearn-ai900/blob/main/01%20-%20Image%20Analysis%20with%20Computer%20Vision.ipynb

-

Object detection - https://github.com/MicrosoftLearning/mslearn-ai900/blob/main/02%20-%20Image%20Classification.ipynb

Ink Recognizer

Ink converts handwriting to plain text, in 63+ core languages.

It was deprecated on 31 January 2021.

https://docs.microsoft.com/en-us/azure/cognitive-services/ink-recognizer/quickstarts/csharp Quickstart: Recognize digital ink with the Ink Recognizer REST API and C#

QUESTION: Does it integrate with a tablet?

Create Cognitive Services

Azure Machine Learning 2.0 CLI (preview) examples

https://github.com/Azure-Samples/Cognitive-Services-Vision-Solution-Templates

BTW https://docs.microsoft.com/en-us/samples/azure-samples/cognitive-services-quickstart-code/cognitive-services-quickstart-code/ https://github.com/Azure-Samples/cognitive-services-sample-data-files

My script does the same as these manual steps:

- In Portal.azure.com

- G+\ Cognitive Services

- Click the Name you created.

- Click “Keys and Endpoint” in the left menu.

-

Click the blue icon to the right of KEY 1 heading to copy it to your invisible Clipboard.

-

Endpoint: https://tot.cognitiveservices.azure.com/

TODO: DOCS: Automate above steps to create compute and server startup script.

PROTIP: These instructions are not in Microsoft LEARN’s tutorial.

“Your document is currently not connected to a compute. Switch to a running compute or create a new compute to run a cell.”

- Click the Run triangle for “Your document is currently not connected to a compute.”

- “Create compute”

- Virtual machine type: CPU or GPU

- Virtual machine size: Select from all options (64 of them) QUESTION: What is the basis for “recommended”?

- The cheapest is “Standard_F2s_v2” with “2 cores, 4GB RAM, 16GB storage” for Compute optimized at “$0.11/hr”. See Microsoft’s description of virtual machine types here.

- Next

- Compute name: PROTIP: Use 3-characters only, such as “wow” or “eat”.

- Enable SSH access: leave unchecked

- Create

- Wait (5 minutes) for box to go from “Creating” to “Running”.

Kinds of Cognitive Services CLI

You save money if you don’t leave servers running and racking up charges.

You can confidently delete Resource Groups and all resources attached if you have automation in CLI scripts that enable you to easily create them later.

Instead of the manual steps defined in this LAB, run my Bash script in CLI, as defined by this DOC:

-

G+\ Cognitive Services.

- Click the +Create a resource button, search for Cognitive Services, and create a Cognitive Services resource with the following settings:

- Subscription: Your Azure subscription.

- Resource group: Select or create a resource group with a unique name.

- Region: Choose any available region:

- Name: Enter a unique name.

- Pricing tier: S0

- I confirm I have read and understood the notices: Selected.

TODO: Instead of putting the plain text of cog_key in code, reference Azure Key Vault. Have the code in GitHub.

Azure has a cognitiveservices CLI subcommand.
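To show what that automation might look like, here is a minimal Python sketch that assembles the `az` commands for creating a Cognitive Services resource, listing its keys, and deleting the resource group afterward. It is not the Bash script referenced above; the function names and the resource names in the example are hypothetical, and `run` defaults to a dry run that only prints the command.

```python
import subprocess


def create_account_cmd(name, resource_group, location,
                       sku="S0", kind="CognitiveServices"):
    """Command to create a multi-service Cognitive Services resource."""
    return ["az", "cognitiveservices", "account", "create",
            "--name", name,
            "--resource-group", resource_group,
            "--kind", kind,
            "--sku", sku,
            "--location", location,
            "--yes"]  # accept the terms without an interactive prompt


def list_keys_cmd(name, resource_group):
    """Command to print KEY 1 and KEY 2 instead of copying from the portal."""
    return ["az", "cognitiveservices", "account", "keys", "list",
            "--name", name, "--resource-group", resource_group]


def delete_group_cmd(resource_group):
    """Command to delete the resource group so nothing keeps billing."""
    return ["az", "group", "delete", "--name", resource_group,
            "--yes", "--no-wait"]


def run(cmd, dry_run=True):
    """Print the command; execute it only when dry_run is False."""
    print(" ".join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


# Dry run with made-up names: prints the commands without calling Azure.
run(create_account_cmd("tot", "LearnRG", "westus"))
run(list_keys_cmd("tot", "LearnRG"))
run(delete_group_cmd("LearnRG"))
```

Keeping the commands as lists avoids shell-quoting problems, and the dry-run default makes it safe to review exactly what would be created or deleted before setting `dry_run=False`.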

https://docs.audd.io/?ref=public-apis

AI Language services

Formerly called “NLP” (Natural Language Processing), Intro: Tutorial: https://docs.microsoft.com/en-us/learn/paths/explore-natural-language-processing

NLP enables the creation of software that can:

- Analyze and interpret text in documents, email messages, and other sources.

- Interpret spoken language, and synthesize speech responses.

- Automatically translate spoken or written phrases between languages.

- Interpret commands and determine appropriate actions.

- Recognize Speaker based on audio.

Within Microsoft, NLP consists of these Azure services (described below):

- LUIS (Language Understanding Intelligent Service)

- Text Analytics

- Speech

- Translator Text

AI Language Tutorials

https://microsoftlearning.github.io/mslearn-ai-language/

- https://learn.microsoft.com/en-us/training/paths/develop-language-solutions-azure-ai/

- https://github.com/MicrosoftLearning/mslearn-ai-language

- Analyze text

- Create a Question Answering Solution

- Create a language understanding model with the Azure AI Language service

- Custom text classification

- Extract custom entities

- Translate Text

- Recognize and Synthesize Speech

- Translate Speech

Speaker Recognition

Speaker Recognition for authentication.

In contrast, Speaker Diarization groups segments of audio by speaker in a batch operation.

“In cloudinary”

LUIS

Think of “LUIS” as Amazon Alexa’s frenemy.

https://www.luis.ai, provides examples of how to use LUIS (Language Understanding Intelligent Service) thus:

A machine learning-based service to build natural language into apps, bots, and IoT devices. Quickly create enterprise-ready, custom models that continuously improve.

Bot Framework Emulator Follow the instructions at https://github.com/Microsoft/BotFramework-Emulator/blob/master/README.md to download and install the latest stable version of the Bot Framework Emulator for your operating system.

Bot Framework Composer Install from https://docs.microsoft.com/en-us/composer/install-composer.

Utterances are input from the user that your app needs to interpret.

https://github.com/Azure-Samples/cognitive-services-language-understanding

CHALLENGE: Add natural language capabilities to a picture-management bot.

- Create a LUIS Service Resource (in Python or C#)

- Add Intents

- Add utterances

- Work with Entities

- Use Prebuilt Domains and Entities

- Train and Publish the LUIS Model

https://www.slideshare.net/goelles/sharepoint-saturday-belgium-2019-unite-your-modern-workplace-with-microsofsts-ai-ecosystem

LUIS Regional websites

Perhaps for less latency, create the LUIS app in the same geographic location where you created the service:

- “(US) West US” in North America: www.luis.ai

- “(Europe) West Europe” and “(Europe) Switzerland North”: eu.luis.ai

- “(Asia Pacific) Australia East”: au.luis.ai

Create LUIS resource

NOTE: LUIS is not a service like “Cognitive Services”, but a Marketplace item:

- In the Azure portal, select + Create a resource.

- In the “Search services and Marketplace” box, type LUIS, and press Enter.

- In search results, select “Language Understanding from Microsoft”.

- Select Create.

- Leave the Create options set to Both (Authoring and Prediction).

- Choose a subscription.

- Create a new resource group named “LearnRG”.

-

Enter a unique name for your LUIS service. This is a public sub-domain of one of the LUIS Regional websites (above).

PROTIP: Different localities have different costs.

- For Authoring Location, choose the one nearest you.

- For Authoring pricing tier, select F0

- Set your Prediction location to the same region you chose for Authoring location

- For Prediction pricing tier, select F0. If F0 is not available, select S0 or other free/low cost tier.

- Select Review + Create.

- If the validation succeeds, select Create.

- After “Your deployment is complete” appears, click “Go to resource” (within Cognitive Services).

- Within the Resource Management section, select the “Keys and Endpoints” section.

- Click the blue button to the right of KEY 1’s value. That copies it to your Clipboard.

-

If you are making use of my framework, open the environment variables file:

code ../setmem.sh

-

Locate the variables and replace the sample values:

export MY_LUIS_AUTHORING_KEY="abcdef1234567896b87f122281e9187e"
export MY_LUIS_ENDPOINT=""

SECURITY PROTIP: Put key values in a global variables definition file kept separate from the code, so that secrets are not stored in the code repository.

authoring_key = env:MY_LUIS_AUTHORING_KEY
authoring_endpoint = env:MY_LUIS_ENDPOINT
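In Python, reading those two variables might look like the sketch below. The helper names `require_env` and `load_luis_settings` are hypothetical; the variable names are the ones exported above. Failing fast with a clear message is a design choice, so that a missing key is caught before any API call is attempted.

```python
import os


def require_env(name, environ=None):
    """Return a non-empty environment variable or raise a clear error."""
    environ = os.environ if environ is None else environ
    value = environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"Set {name} (for example by sourcing setmem.sh) before running.")
    return value


def load_luis_settings(environ=None):
    """Collect the LUIS authoring key and endpoint from the environment."""
    return {
        "authoring_key": require_env("MY_LUIS_AUTHORING_KEY", environ),
        "authoring_endpoint": require_env("MY_LUIS_ENDPOINT", environ),
    }


# Demonstration with a made-up environment instead of the real one.
fake_env = {
    "MY_LUIS_AUTHORING_KEY": "abcdef1234567896b87f122281e9187e",
    "MY_LUIS_ENDPOINT": "https://example.cognitiveservices.azure.com/",
}
settings = load_luis_settings(fake_env)
print("Endpoint:", settings["authoring_endpoint"])
print("Key ends with:", settings["authoring_key"][-4:])
```

Calling `load_luis_settings()` with no argument reads the real environment, so run it from a shell where the variables file has been sourced.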

- Select one of the LUIS Regional websites (above).

- Login.

- Choose an authoring resource (Subscription). If there are several listed, select the top one.

-

Create a new authoring service (Azure service).

Create a LUIS app in the LUIS portal

-

Now at https://www.luis.ai/applications, notice at the upper-right “PictureBotLUIS (westus, F0)” where the Directory name usually appears in the Portal.

The pages displayed will be different if you have already created a LUIS app or have no apps created at all. Select either Go to apps, or the apps option that is available on your initial LUIS page.

-

Select “+ New app” for conversation Apps for the “Create new app” pop-up dialog.

The LUIS user interface is updated on a regular basis and the actual options may change, in terms of the text used. The basic workflow is the same but you may need to adapt to the UI changes for the text on some elements or instructions given here.

Take note of the other options, such as the ability to import a JSON or LU file that contains LUIS configuration options.

-

Give your LUIS app a name, for example, PictureBotLUIS.

- For Culture, type “en-us” then select the appropriate choice for your language.

- Optionally, give your LUIS app a description so it’s clear what the app’s purpose is.

- Optionally, if you already created a Prediction Resource, you can select that in the drop-down for Prediction resource.

-

Select Done.

-

Dismiss the guidance dialog that may display.

DEFINITION: Each bot action has an intent invoked by an utterance, which gets processed by all models. LUIS selects the top-scoring model as the prediction.

An utterance that doesn’t map to any existing intent is assigned to the catch-all intent “None”.

Intents with significantly more positive examples (“data imbalance” toward that intent) are more likely to receive positive predictions.
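To make "top scoring" concrete, here is a small Python sketch of how a client app might choose an intent from a set of scores. The intent names and scores are made up for illustration, and the threshold is a client-side choice I am assuming for this sketch, not a LUIS default:

```python
def pick_intent(scores, threshold=0.5):
    """Return the highest-scoring intent, or "None" if nothing is confident.

    scores: dict mapping intent name -> score between 0 and 1.
    threshold: hypothetical cut-off applied by the client, not by LUIS.
    """
    if not scores:
        return "None"
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else "None"


# Made-up scores for the utterance "Can I get a 5x7 of this image?"
confident = {"OrderPic": 0.91, "SearchPics": 0.06, "None": 0.03}
print(pick_intent(confident))

# When no intent scores well, fall back to the catch-all intent.
unsure = {"OrderPic": 0.20, "SearchPics": 0.10}
print(pick_intent(unsure))
```

Treating low scores as “None” is one way to avoid acting on a weak prediction, which matters more when training data is imbalanced toward one intent.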

Create a LUIS app with code

-

On your local machine (laptop), install Visual Studio Code for your operating system.

-

If you will be completing your coding with Python, ensure you have a Python environment installed locally. Once you have Python installed, you will need to install the Python extension for VS Code.

Alternately, to use C# as your code language, start by installing the latest .NET Core package for your platform. You can choose Windows, Linux, or macOS from the drop-down on this page. Once you have .NET Core installed, you will need to add the C# Extension to VS Code. Select the Extensions option in the left nav pane, or press CTRL+SHIFT+X and enter C# in the search dialog.

-

Create a folder within your local drive project folder to store the project files. Name the folder “LU_Python”. Open Visual Studio Code and Open the folder you just created. Create a Python file called “create_luis.py”.

cd
cd projects
mkdir LU_Python
cd LU_Python
touch create_luis.py
code .

-

Install the LUIS package to gain access to the SDK.

pip install azure-cognitiveservices-language-luis

-

Open an editor (Visual Studio Code)
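As a hedged sketch of what create_luis.py might start with: the code below only builds the HTTP request that asks LUIS to create an app, and does not send it. To stay runnable without extra packages it uses the Python standard library and the REST interface rather than the SDK installed above. The route (v3.0-preview authoring), the body field names, and the function name build_create_app_request are my assumptions for illustration, so check them against the API version of your own resource:

```python
import json
import urllib.request


def build_create_app_request(endpoint, authoring_key, name, culture="en-us"):
    """Build (but do not send) a request that asks LUIS to create an app."""
    # Assumption: the v3.0-preview authoring route; verify for your resource.
    url = endpoint.rstrip("/") + "/luis/authoring/v3.0-preview/apps/"
    body = {"name": name, "culture": culture, "initialVersionId": "0.1"}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": authoring_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_create_app_request(
    "https://luis-resource-name.cognitiveservices.azure.com/",
    "abcdef1234567896b87f122281e9187e",  # sample value, not a real key
    "PictureBotLUIS",
)
print(req.get_method(), req.full_url)
# To actually create the app, pass req to urllib.request.urlopen().
```

Keeping request construction separate from sending makes it easy to inspect the URL and body before any call is billed against the resource.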

Create entities in the LUIS portal

- Be signed in to your LUIS app and on the Build tab.

- In the left column, select Entities, and then select + Create.

- Name the entity facet (to represent one way to identify an image).

-

Select Machine learned for Type. Then select Create.

Create a prediction key

https://docs.microsoft.com/en-us/learn/modules/manage-language-understanding-intelligent-service-apps/2-manage-authoring-runtime-keys

- Sign in to your LUIS portal.

- Select the LUIS app that you want to create the prediction key for.

- Select the Manage option in the top toolbar.

-

Select Azure Resources in the left tool bar.

Unless you have already created a prediction key, your screen should look similar to this. The key information is obscured on purpose.

A Starter Key provides 1000 prediction endpoint requests per month for free.

Examples of utterances are on the Review endpoint utterances page on the Build tab.

Install and run LUIS containers

docker pull mcr.microsoft.com/azure-cognitive-services/luis:latest

- Specify values for Billing (the LUIS authoring endpoint URI) and ApiKey:

docker run --rm -it -p 5000:5000 --memory 4g --cpus 2 --mount type=bind,src=c:\input,target=/input --mount type=bind,src=c:\output\,target=/output mcr.microsoft.com/azure-cognitive-services/luis Eula=accept Billing={ENDPOINT_URI} ApiKey={API_KEY}

The “--mount type=bind,src=c:\output\,target=/output” option indicates where the LUIS app saves log files. The log files contain the phrases entered when users hit the endpoint with queries.

-

Get the AppID from your LUIS portal and paste it in the placeholder in the command.

curl -G \
  -d verbose=false \
  -d log=true \
  --data-urlencode "query=Can I get a 5x7 of this image?" \
  "http://localhost:5000/luis/v3.0/apps/{APP_ID}/slots/production/predict"

- To query the V2 endpoint instead, paste the AppID into this command:

curl -X GET \
  "http://localhost:5000/luis/v2.0/apps/{APP_ID}?q=can%20I%20get%20a%205x7%20of%20this%20image&staging=false&timezoneOffset=0&verbose=false&log=true" \
  -H "accept: application/json"
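The v3 curl request above can also be assembled in Python. This is a minimal sketch that only builds the URL. The host, route, and query parameters are copied from the curl command, while the function name container_predict_url is hypothetical:

```python
from urllib.parse import urlencode


def container_predict_url(app_id, query, host="http://localhost:5000",
                          slot="production", verbose=False, log=True):
    """Build the v3 prediction URL that the curl command requests."""
    params = urlencode({
        "verbose": str(verbose).lower(),  # curl sends the literal text "false"
        "log": str(log).lower(),
        "query": query,                   # urlencode escapes spaces and "?"
    })
    return f"{host}/luis/v3.0/apps/{app_id}/slots/{slot}/predict?{params}"


# Replace the placeholder with the AppID from your LUIS portal.
url = container_predict_url("{APP_ID}", "Can I get a 5x7 of this image?")
print(url)
```

With the container running, urllib.request.urlopen(url) would return the JSON prediction.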

DEMO JSON responses

-

DEMO: voice control lighting in a virtual home.

-

Select suggested utterances to see the JSON response:

- Book me a flight to Cairo

- Order me 2 pizza

- Remind me to call my dad tomorrow

- Where is the nearest club?

Type instructions, or use the microphone button to speak commands.

LUIS identifies the intents and entities in your utterance.
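To show what a program does with such a JSON response, here is a minimal Python sketch that pulls out the utterance, the top intent, and the entities. The sample is hand-written in the shape of a v3 prediction result. The intent name "BookFlight", the entity name "Location", and the scores are invented for illustration:

```python
import json

# Hypothetical response, shaped like a v3 prediction result.
SAMPLE = """
{
  "query": "Book me a flight to Cairo",
  "prediction": {
    "topIntent": "BookFlight",
    "intents": {"BookFlight": {"score": 0.98}, "None": {"score": 0.01}},
    "entities": {"Location": ["Cairo"]}
  }
}
"""


def summarize(response_text):
    """Extract the utterance, top intent, its score, and the entities."""
    data = json.loads(response_text)
    prediction = data["prediction"]
    top = prediction["topIntent"]
    return {
        "utterance": data["query"],
        "intent": top,
        "score": prediction["intents"][top]["score"],
        "entities": prediction.get("entities", {}),
    }


result = summarize(SAMPLE)
print(result["intent"], result["entities"])
```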

Entity types

- List - fixed, closed set of related words (small, tiny, smallest). Case-sensitive

- RegEx - (credit card numbers)

- Prebuilt

- Pattern.Any

- Machine Learned

LUIS CLI

Run my az-luis-cli.sh.

DOCS:

https://github.com/cloudacademy/using-the-azure-machine-learning-sdk

-

Create a Resource referenced when LUIS Authoring is defined. The resource name should be lower case as it is used for the endpoint URL, such as:

https://luis-resource-name.cognitiveservices.azure.com/

-

The Bot Framework CLI requires Node.js.

npm i -g npm

- Install Bot Framework Emulator per instructions at:

https://github.com/Microsoft/BotFramework-Emulator/blob/master/README.md

- Install Bot Framework Composer per instructions at:

https://docs.microsoft.com/en-us/composer/install-composer

On a Mac, download the .dmg to your Downloads folder from:

https://aka.ms/bf-composer-download-mac

- Use Node.js to install the latest version of Bot Framework CLI from the command line:

npm i -g @microsoft/botframework-cli

https://docs.microsoft.com/en-us/azure/bot-service/bot-builder-howto-bf-cli-deploy-luis?view=azure-bot-service-4.0

LUIS can generate a TypeScript or C# type (program code) from models.

Luis.ai

-

DEMO: https://www.luis.ai

-

Sign in and create an authoring resource referencing the Resource Group.

PROTIP: In the list of Cognitive Services kinds, resources and subscription keys created for LUIS authoring are separate from the ones for prediction runs, so that utilization for the two can be tracked separately.

https://aka.ms/AI900/Lab4 which redirects to

Create a language model with Language Understanding which trains a (LUIS) language model that can understand spoken or text-based commands. He’s Alexa’s boyfriend, ha ha.

- Process Natural Language using Azure Cognitive Language Services

https://github.com/MicrosoftLearning/AI-102-LUIS contains image files for reference by https://github.com/MicrosoftLearning/AI-102-Code-Repos

https://github.com/MicrosoftLearning/AI-102-Process-Speech

PROTIP: LUIS does not perform text summarization. That’s done by another service in the pipeline.

- Utterance is the user’s input that a model needs to interpret, such as “turn the lights on”.

- Entity is the word (or phrase) that is the focus of the utterance, such as “light” in our example.

- Intent is the action or task that the user wants to execute. It is reflected in the utterance as a goal or purpose. For example, “TurnOn”.

References:

- VIDEO: Starship commander enabled in-game voice commands using Azure.

-

Adding Language Understanding to Chatbots With LUIS by Emilio Meira

- github.com/chomado/GoogleHomeHack in slides by Madoka Chiyoda

Text Analytics API Programming

NOTE: The previous version references interactive Python Notebooks such as MS LEARN HANDS-ON LAB referencing “07 - Text Analytics.ipynb”.

-

Look at the DEMO GUI at:

-

Click “Next Step” through the various processing on a sentence:

- Sentiment analysis (how positive or negative a document is)

-

Key phrase extraction

- Entity Linking to show link in Wikipedia.

-

Bing Entity Search

- Text to Speech services:

- Language Detection (is it English, German, etc.)

- Translator Text

API:

Some Text Analytics API services are synchronous; others are asynchronous.

Cloud Academy lab “Using Text Analytics in the Azure Cognitive Services API”

docs.microsoft.com/en-us/samples/azure/azure-sdk-for-python/textanalytics-samples

The Azure Text Analytics client library samples for JavaScript have step-by-step instructions:

-

Logging into the Microsoft Azure Portal

<a target="_blank" href="https://portal.azure.com/#blade/HubsExtension/BrowseResource/resourceType/Microsoft.CognitiveServices%2Faccounts">Cognitive Services</a>

-

Select the service already defined for you.

Retrieving Azure Cognitive Services API Credentials

-

At the left menu click “Keys and Endpoint”. The Endpoint URL contains:

https://southcentralus.api.cognitive.microsoft.com/

The Endpoint is the location you’ll be able to make requests to in order to interact with the Cognitive Services API. The Key1 value is the key that will allow you to authenticate with the API. Without the Key1 value, you will receive unauthenticated errors.
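To illustrate how the Endpoint and Key1 fit together, here is a minimal Python sketch that builds (but does not send) a language-detection request. The "/text/analytics/v3.0/languages" route is an assumption about the API version your resource exposes, the key shown is a placeholder, and the function name build_language_request is hypothetical:

```python
import json
import urllib.request


def build_language_request(endpoint, key, text):
    """Build (but do not send) a Text Analytics language-detection request."""
    # Assumption: the v3.0 route; your resource may expose another version.
    url = endpoint.rstrip("/") + "/text/analytics/v3.0/languages"
    body = {"documents": [{"id": "1", "text": text}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            # Key1 travels in this header; omitting it is what triggers
            # the unauthenticated errors described above.
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


lang_req = build_language_request(
    "https://southcentralus.api.cognitive.microsoft.com/",
    "<KEY1>",  # placeholder: paste the Key1 value from the portal
    "Bonjour tout le monde",
)
print(lang_req.get_method(), lang_req.full_url)
```

Passing lang_req to urllib.request.urlopen() would send it once a real key is in place.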

-

In the Azure Portal, type Function App into the search bar and click Function App:


- Click the only option:


You’ll be brought to the Function App blade. While a complete summary of function apps is outside the scope of this lab, you should know that function apps allow you to create custom functions using a variety of programming languages, and to trigger them using any number of events.

In the case of this lab step, you’ll set up a function app to interact with the Cognitive Services API using Node.js. You’ll then configure the function to be triggered by visiting its URL in a browser tab.

-

On the left side of the blade, click on Functions and then + Add.

-

Click the HTTP Trigger option:


Important: If you don’t see the HTTP trigger option, click More Templates and then Finish and view templates to see the correct option.

- Name the function language-detection and click Create:


-

Click Code + Test in the left sidebar then look at the upper part of the console and switch to the function.json file:

-

Replace the contents of the file with the following snippet and click Save:

{
  "bindings": [
    {
      "authLevel": "function",
      "type": "httpTrigger",
      "direction": "in",
      "name": "req",
      "methods": [
        "get",
        "post"
      ]
    },
    {
      "type": "http",
      "direction": "out",
      "name": "$return"
    }
  ],
  "disabled": false
}

The function.json file manages the behavior of your function app.

-

Switch to the index.js file:

-

Replace the contents of the file with the following code:

'use strict';

let https = require('https');